Calculate 3D positions from stereo images

Hi, I have two images taken with a calibrated stereo camera setup. I detect markers in both images using the aruco contrib module. How can I calculate the 3D positions of the marker corners from their 2D positions in the two images? I found tutorials on computing a depth map, but I do not need the map of the whole image, only the corners of the markers.
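For a handful of corners you do not need a depth map at all: each corner seen in both images can be triangulated directly. In OpenCV that is `cv2.triangulatePoints(P1, P2, pts1, pts2)`, which returns 4xN homogeneous points. The sketch below is not OpenCV's code; it reimplements the same kind of linear (DLT) triangulation in plain NumPy so the idea is visible. It assumes 3x4 projection matrices from your calibration (for a left-referenced pair, P1 = K1[I|0] and P2 = K2[R|T]) and corner coordinates that are already undistorted. `triangulate_dlt` and `triangulate_corners` are hypothetical helper names.

```python
import numpy as np


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 projection matrices of the left and right camera.
    x1, x2: (u, v) pixel coordinates of the same corner, assumed undistorted.
    Returns the 3D point in the frame the projection matrices are expressed in.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view gives two linear equations u * P[2] - P[0] = 0, v * P[2] - P[1] = 0
    # in the homogeneous point X.
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    # Dehomogenize to get (x, y, z).
    return X[:3] / X[3]


def triangulate_corners(P1, P2, corners1, corners2):
    """Triangulate matched corner lists (same order in both images) into an Nx3 array."""
    return np.array([triangulate_dlt(P1, P2, a, b) for a, b in zip(corners1, corners2)])
```

Since every ArUco marker has a unique ID, the corners of the same marker in the left and right image can be paired by ID before being passed in.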

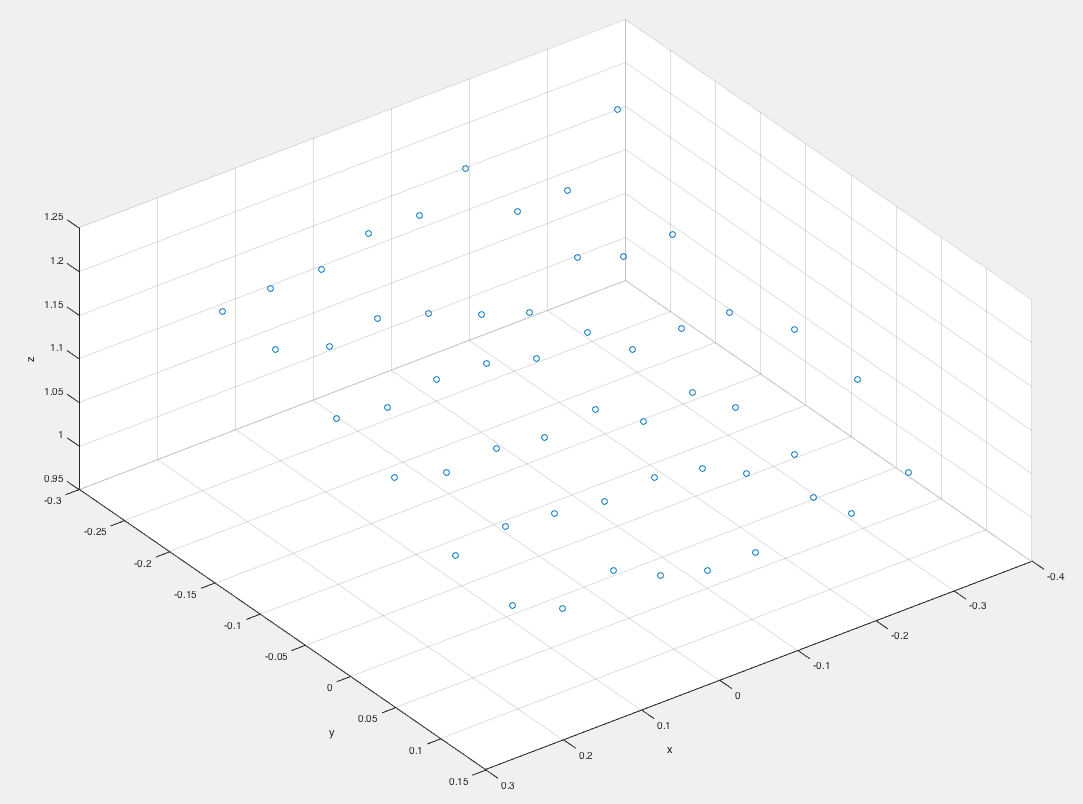

This is a sample plot of the values I get with triangulatePoints on the calibration checkerboard.
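When plotting `triangulatePoints` results it is easy to forget that the output is homogeneous (4xN), so each column has to be divided by its fourth entry first. A simple sanity check on a checkerboard is that neighbouring corners should come out one square apart. The sketch below assumes the corners are ordered row by row (check your detector's ordering) and uses a hypothetical known square size; the helper names are made up for illustration.

```python
import numpy as np


def homogeneous_to_euclidean(points4d):
    """Convert a 4xN homogeneous array (the layout triangulatePoints returns) to Nx3."""
    points4d = np.asarray(points4d, dtype=float)
    # Divide x, y, z by w column by column, then transpose to one point per row.
    return (points4d[:3] / points4d[3]).T


def adjacent_spacing(points3d, cols, rows):
    """Distances between horizontally adjacent corners, assuming row-major corner order."""
    grid = np.asarray(points3d, dtype=float).reshape(rows, cols, 3)
    # Differences between neighbouring columns, then their Euclidean lengths.
    return np.linalg.norm(np.diff(grid, axis=1), axis=2).ravel()
```

If the spacings are far from the real square size, or vary strongly across the board, the projection matrices or the point correspondences are the first things to check.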

Have you had a look at the reprojectImageTo3D and stereoRectify functions? My understanding is that if you have all the intrinsics and extrinsics of your left and right cameras, stereoRectify can produce a 4x4 disparity-to-depth mapping matrix Q that takes the disparity of a pixel and triangulates it.
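In practice you would just use the Q returned by `cv2.stereoRectify` (which also returns R1, R2, P1, P2). To show what it contains, the sketch below builds Q by hand in the form given in the stereoRectify documentation, from the rectified focal length f, principal points cx1, cx2, cy, and the term Tx from the right projection matrix (Tx is minus the baseline for a right camera placed to the right of the left one). `build_q` is a hypothetical helper.

```python
import numpy as np


def build_q(f, cx1, cy, cx2, Tx):
    """Disparity-to-depth matrix Q in the form documented for stereoRectify.

    f, cx1, cy: focal length and principal point of the rectified left camera.
    cx2: principal point x of the rectified right camera.
    Tx: translation term of the right projection matrix (P2[0, 3] == Tx * f);
        negative, equal to minus the baseline, for a standard left/right rig.
    """
    return np.array([
        [1.0, 0.0, 0.0, -cx1],
        [0.0, 1.0, 0.0, -cy],
        [0.0, 0.0, 0.0, f],
        [0.0, 0.0, -1.0 / Tx, (cx1 - cx2) / Tx],
    ])
```

With cx1 == cx2 the last row gives W = d / B for baseline B = -Tx, so the depth Z / W reduces to the familiar f * B / d.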

The documentation says, roughly, that given rectified coordinates (u, v) and the disparity d at that pixel, you form the column vector [u, v, d, 1]^T and triangulate it as [X, Y, Z, W]^T = Q * [u, v, d, 1]^T. The 3D point is then (X/W, Y/W, Z/W).
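Applied to sparse points instead of a whole disparity image, that formula is just one matrix product per corner followed by the division by W. The sketch below assumes the corner coordinates are already rectified (for example with `cv2.undistortPoints(pts, K, dist, R=R1, P=P1)` using the outputs of stereoRectify) and that the disparity of each corner is u_left - u_right of the matched corner pair. `reproject_points` is a hypothetical helper name.

```python
import numpy as np


def reproject_points(Q, uv, disparity):
    """Reproject sparse rectified pixels to 3D with a 4x4 Q matrix.

    uv: Nx2 rectified left-image coordinates of the corners.
    disparity: N disparities, u_left - u_right for the matched corners.
    """
    uv = np.asarray(uv, dtype=float)
    d = np.asarray(disparity, dtype=float)
    # Stack rows [u, v, d, 1].
    hom = np.column_stack([uv, d, np.ones(len(d))])
    # Row-wise [X, Y, Z, W] = Q * [u, v, d, 1]^T.
    xyzw = hom @ np.asarray(Q, dtype=float).T
    # Divide by W to get Euclidean (X/W, Y/W, Z/W).
    return xyzw[:, :3] / xyzw[:, 3:4]
```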

So if you already have the pixels you want to triangulate and their disparities, you could calculate individual points with the Q matrix instead of computing a full depth map. But I stand to be corrected if someone knows more about these functions. See "Stereo Odometry - A Review of Approaches" (Peter Protzel).