StereoBM truncates right edge of disparity by minDisparities

When using StereoBM, as the minimum disparity value is increased, the right side of the resulting disparity map is truncated by a corresponding number of pixels.

Although the disparity could be correctly calculated in the truncated area, StereoBM does not calculate it. In contrast, StereoSGBM calculates disparity all the way to the right edge of the disparity map.

The StereoBM algorithm uses getValidDisparityROI() to determine the valid area of the disparity map over which to calculate disparity, but its calculation of the right margin is incorrect.

I've observed this behavior on OpenCV master (as of 01-Oct-2017) and on version 2.4.8+dfsg1 as packaged with Ubuntu 14.04 on amd64 and armhf.

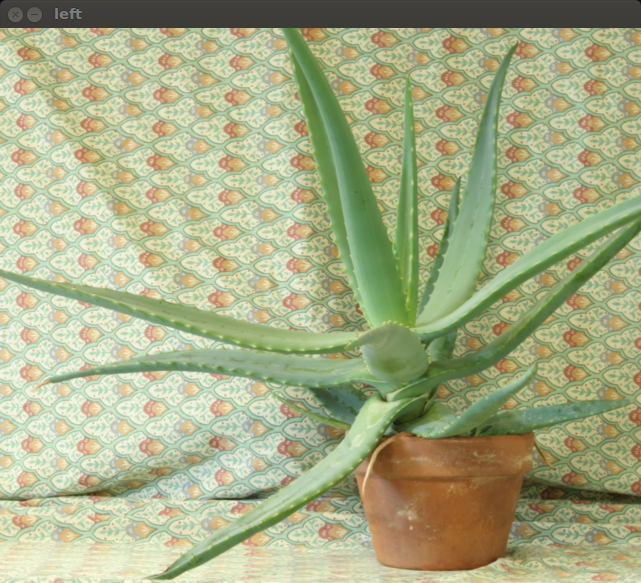

Example: Input left and right images are the data/aloeL.jpg and data/aloeR.jpg used by the samples/python/stereo_match.py example.

Left image is:

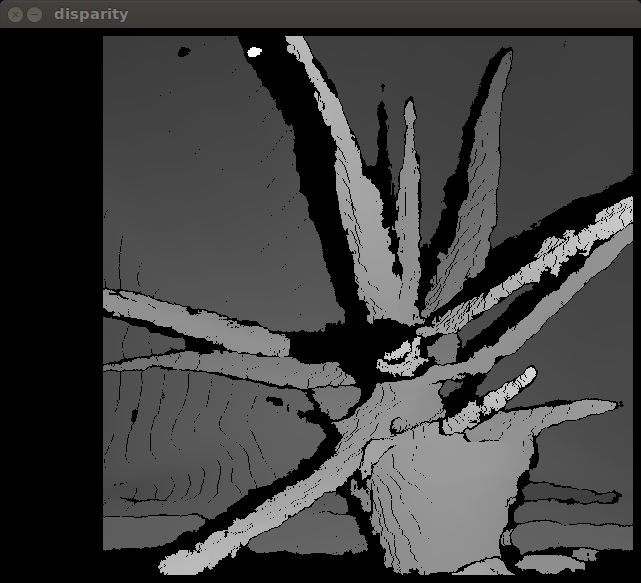

The disparity image at min disparities = 0 (below) has all the expected features: a left margin that is minDisparities + numberOfDisparities wide, and top, bottom and right margins of half the block match window width (17 / 2 = 8).

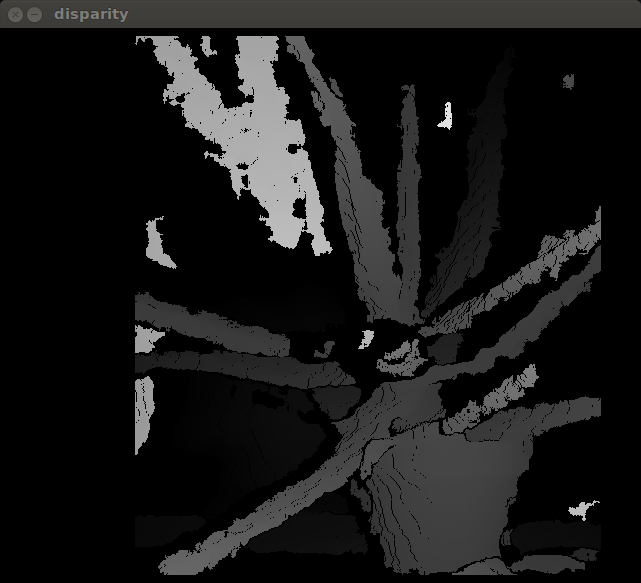

The disparity image at min disparities = 32 (below) shows both the left and right margins expanded by min disparities (a right margin of 40 instead of 8). The expanded right margin is my concern:

Possible solution: If the line from getValidDisparityROI (from modules/calib3d/src/stereosgbm.cpp) which reads:

int xmax = std::min(roi1.x + roi1.width, roi2.x + roi2.width - minD) - SW2;

it is instead calculated as:

int xmax = std::min(roi1.x + roi1.width, roi2.x + roi2.width) - SW2;

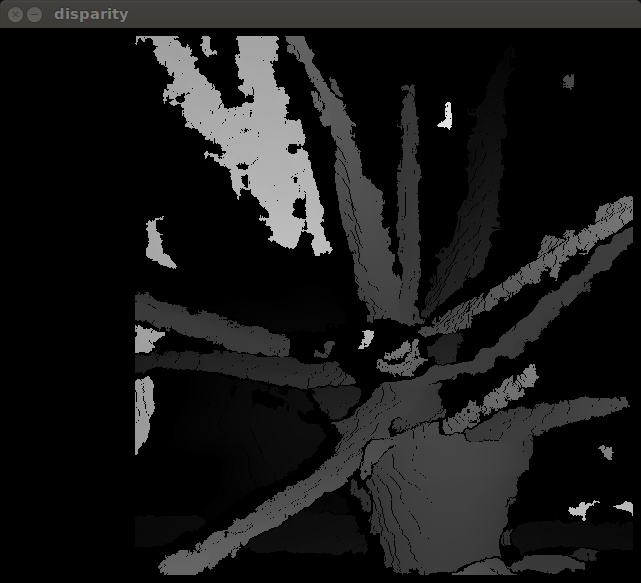

Then the output disparity map is correctly calculated, without truncation on the right side:
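To make the arithmetic concrete, here is a minimal Python sketch of the two xmax expressions quoted above. It is not the OpenCV source: the function name valid_xmax, the (x, width) tuple layout for the ROIs, and the 640-pixel image width are assumptions made for illustration. Only the xmax formula and SW2 = block size / 2 come from the post.

```python
def valid_xmax(roi1, roi2, min_disp, block_size, subtract_min_disp=True):
    """Right edge (exclusive) of the valid disparity area.

    roi1 and roi2 are hypothetical (x, width) tuples for the left and right
    image ROIs. subtract_min_disp=True mirrors the current xmax line,
    False mirrors the proposed one.
    """
    sw2 = block_size // 2          # half the block match window, e.g. 17 // 2 = 8
    right2 = roi2[0] + roi2[1]     # roi2.x + roi2.width
    if subtract_min_disp:
        right2 -= min_disp         # the term the proposed fix removes
    return min(roi1[0] + roi1[1], right2) - sw2


width = 640                        # assumed image width, for illustration only
full = (0, width)                  # both ROIs cover the whole image

current_margin = width - valid_xmax(full, full, 32, 17)
proposed_margin = width - valid_xmax(full, full, 32, 17, subtract_min_disp=False)
print(current_margin, proposed_margin)   # prints "40 8"
```

With minDisparity = 32 and a block size of 17, the current expression gives the right margin of 40 described above, while the proposed expression gives 8, independent of the assumed image width.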

Discussion: I suspect this is a bug that only affects StereoBM. StereoSGBM calculates correct disparity data all the way to the right edge of the disparity image; unlike StereoBM, it does not truncate the right side.

My application needs the speed of StereoBM, and my cameras are relatively widely spaced for a relatively near target, meaning I'm expecting disparities of 100-250 on an image only ~1000 pixels wide - lopping off an extra minDisparity = 100 pixels on the right side is quite a sacrifice.

Question: Check my reasoning/assertions. Shall I file this as a bug and propose the above getValidDisparityROI modification as a fix? Is there a better or more correct fix?

Appendix: My Calculation/display code is:

#!/usr/bin/env python
# Python 2/3 compatibility
from __future__ import print_function

import sys

import numpy as np
import cv2

print('load and downscale images')
imgL = cv2.pyrDown( cv2.imread('../data/aloeL.jpg') )
imgR = cv2.pyrDown( cv2.imread('../data/aloeR.jpg') )

# Default minimum disparity; an optional first command-line argument overrides it
min_disp = 16
if len(sys.argv) > 1:
    min_disp = int(sys.argv[1])
num_disp = 128 - min_disp
window_size = 17

stereo = cv2.StereoBM_create(numDisparities = num_disp, blockSize = window_size)
stereo.setMinDisparity(min_disp)
stereo.setNumDisparities(num_disp)
stereo.setBlockSize(window_size)
stereo.setDisp12MaxDiff(0)
stereo.setUniquenessRatio(10)
stereo.setSpeckleRange(32)
stereo.setSpeckleWindowSize(100)

print('compute disparity')
grayL = cv2.cvtColor(imgL, cv2.COLOR_BGR2GRAY)
grayR = cv2.cvtColor(imgR, cv2.COLOR_BGR2GRAY)

# compute() returns fixed-point disparities scaled by 16; convert to float pixels
disp = stereo.compute(grayL, grayR).astype(np.float32) / 16.0
disp_map = (disp - min_disp)/num_disp ...
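To measure the right margin rather than judge it by eye, something like the following sketch could be applied to the disp array from the code above. The helper name right_margin_width is hypothetical. It assumes that pixels with no match hold minDisparity - 1 after the division by 16, as noted elsewhere in this thread, so any value below min_disp is treated as "no data".

```python
import numpy as np


def right_margin_width(disp, min_disp):
    """Count the columns at the right edge that contain no valid disparity.

    Assumes invalid pixels are below min_disp (for example min_disp - 1).
    """
    disp = np.asarray(disp)
    invalid_cols = np.all(disp < min_disp, axis=0)   # True where a whole column is invalid
    valid_idx = np.flatnonzero(~invalid_cols)        # indices of columns with some valid data
    if valid_idx.size == 0:
        return int(invalid_cols.size)                # the whole map is invalid
    return int(invalid_cols.size - 1 - valid_idx[-1])


# Synthetic 4x10 map: columns 3..5 hold valid disparities, the rest are "no data"
demo = np.full((4, 10), 31.0, dtype=np.float32)
demo[:, 3:6] = 40.0
print(right_margin_width(demo, 32))   # prints 4
```

A column counts as margin only if every row in it is invalid, so image content with scattered unmatched pixels near the right edge does not inflate the result.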

Which OpenCV version do you use? OpenCV 2.4.8? Using OpenCV 3.3.0-dev, I cannot see any problem in C++ with my stereo camera.

Thanks LBerger, I will recode my test in C++, retest, and update my question if it isn't solved.

I have used opencv versions 2.4.8, 2.4.8+dfsg1 (as patched and distributed in Ubuntu 14.04), 3.3, and master (current development branch). In other words, all versions of OpenCV behave similarly.

In this post I cannot see any problem and this one too

Hi LBerger, C++ example code using StereoBM shows the identical issue to that seen with the Python example code. I've attached C++ code to my original problem description.

Unfortunately, both the examples you cited use the StereoSGBM disparity algorithm, which does not show the problem that the StereoBM disparity algorithm does, so they are not relevant.

A little background for those who do not know: there are several algorithms in OpenCV for calculating disparity, including block match (StereoBM), accelerated block match (cuda::StereoBM), semi-global block match (StereoSGBM), belief propagation, graph cut, and others.

I cannot use StereoSGBM as a workaround as it does not meet my performance and hardware requirements - I need to use StereoBM.

Can you set blocksize to 1?

LBerger, thanks for your continued attempts to help me.

However, block size is irrelevant to this discussion. Changing it within its allowed range does not affect the problem I'm seeing with the right margin, although it does reduce the quality of the depth map generated in the valid range.

To be specific, I cannot set block size to 1. The StereoBM implementation blockSize aka SADWindowSize must be odd and in the range 5..255 (from modules/calib3d/src/stereobm.cpp class StereoBMImpl function compute).
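As a quick illustration of that constraint, the check can be written as a small Python helper. The name is_valid_block_size is hypothetical and this is only a sketch of the rule as stated above (odd, in the range 5..255), not the OpenCV code itself.

```python
def is_valid_block_size(block_size):
    """Return True if block_size is odd and within 5..255, as described above."""
    return block_size % 2 == 1 and 5 <= block_size <= 255


for candidate in (1, 5, 16, 17, 255, 257):
    print(candidate, is_valid_block_size(candidate))
```

Under this rule a block size of 1 is rejected, while 5 and 17 are accepted.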

Block size is a factor which is tweaked for best depth results. Its value depends somewhat on image size, noise, contrast, and size/shape of objects present in the depth map range of interest. 17 is optimal for my application.

blocksize https://books.google.fr/books?id=SKy3...

Hi LBerger, I had to modify the range checking code in StereoBMImpl::compute() so that it does not return an error for a block size of 1. When I reduce the block size to 1, I get a depth map with no matches at all (completely set to minDisparities-1, meaning no data).

When I set it to the normal minimum block size of 5 and a minDisparities of 32, I still have the same problem I'm describing above, where the right edge of the disparity map has a margin of minDisparities. In other words, it looks very much like this but with narrower top and bottom margins:

OK, the minimum value for blockSize is 5. Can you try with these parameters: minDisparity=0 and blockSize=5?

I've edited the problem description to make my complaint a bit clearer: