Get depth map from disparity map

I can't get a reasonable depth map from my disparity map. Here is my code:

    #include <opencv2/core/core.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <opencv2/contrib/contrib.hpp>
    #include <cstdio>
    #include <iostream>
    #include <fstream>

    using namespace cv;
    using namespace std;

    int main()
    {
        // Rectified stereo pair
        Mat img1 = imread("images/im7rect.bmp");
        Mat img2 = imread("images/im8rect.bmp");
        //resize(img1, img1, Size(320, 280));
        //resize(img2, img2, Size(320, 280));

        Mat g1, g2, disp, disp8;
        cvtColor(img1, g1, CV_BGR2GRAY);
        cvtColor(img2, g2, CV_BGR2GRAY);

        int sadSize = 3;
        StereoSGBM sbm;
        sbm.SADWindowSize = sadSize;
        sbm.numberOfDisparities = 144; //144; 128
        sbm.preFilterCap = 10; //63
        sbm.minDisparity = 0; //-39; 0
        sbm.uniquenessRatio = 10;
        sbm.speckleWindowSize = 100;
        sbm.speckleRange = 32;
        sbm.disp12MaxDiff = 1;
        sbm.fullDP = true;
        sbm.P1 = sadSize*sadSize*4;
        sbm.P2 = sadSize*sadSize*32;
        sbm(g1, g2, disp); // disp is CV_16S fixed-point: true disparity * 16

        // 8-bit copy, used for display only
        normalize(disp, disp8, 0, 255, CV_MINMAX, CV_8U);

        // Float disparity in pixels
        Mat dispSGBMscale;
        disp.convertTo(dispSGBMscale, CV_32F, 1./16);

        imshow("image", img1);
        imshow("disparity", disp8);

        Mat Q;
        FileStorage fs("Q.txt", FileStorage::READ);
        fs["Q"] >> Q;
        fs.release();

        Mat points;
        //reprojectImageTo3D(disp, points, Q, true);
        // Reproject the float disparity; the raw CV_16S map is 16 times too large
        reprojectImageTo3D(dispSGBMscale, points, Q, false, CV_32F);
        imshow("points", points);

        ofstream point_cloud_file;
        point_cloud_file.open("point_cloud.xyz");
        for (int i = 0; i < points.rows; i++) {
            for (int j = 0; j < points.cols; j++) {
                Vec3f point = points.at<Vec3f>(i, j);
                if (point[2] < 10) {
                    // img1 is 3-channel BGR, so take the intensity from the grayscale image g1
                    unsigned gray = g1.at<uchar>(i, j);
                    point_cloud_file << point[0] << " " << point[1] << " " << point[2]
                                     << " " << gray << " " << gray << " " << gray << endl;
                }
            }
        }
        point_cloud_file.close();

        waitKey(0);
        return 0;
    }

My images are:

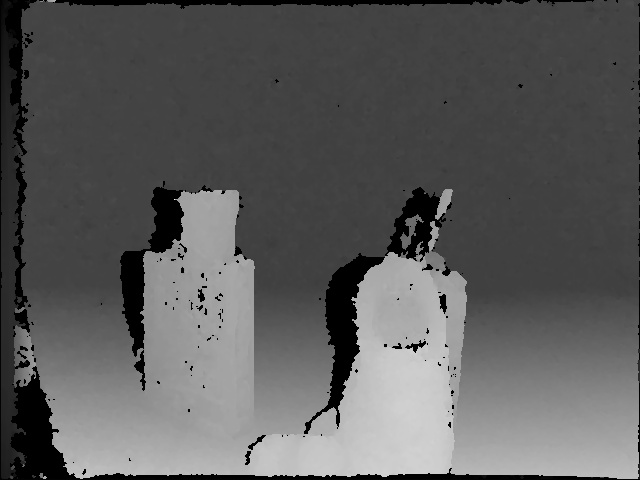

Disparity map:

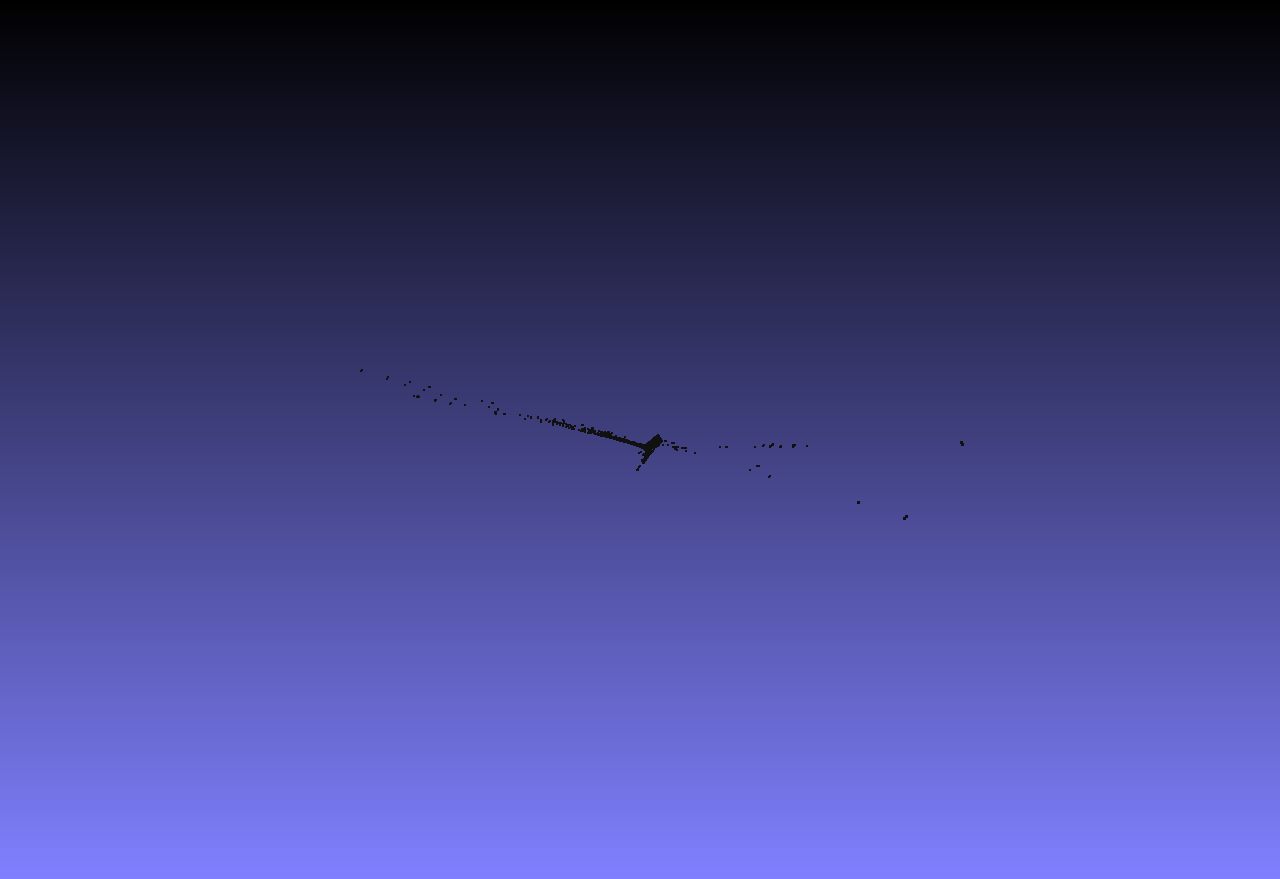

I get a point cloud that looks something like this:

Q is:

    [ 1., 0., 0., -3.2883545303344727e+02,
      0., 1., 0., -2.3697290992736816e+02,
      0., 0., 0., 5.4497170185417110e+02,
      0., 0., -1.4446083962336606e-02, 0. ]
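To see what this Q does to a single pixel, here is a minimal sketch of the mapping documented for reprojectImageTo3D, written in plain C++ so it can be checked without OpenCV. The names `Mat4`, `Point3`, `reprojectPixel` and `kQ` are made up for this illustration, and `d` is assumed to be the true disparity in pixels (the raw StereoSGBM value divided by 16).

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 4x4 reprojection matrix, same layout as the Q printed above.
typedef std::array<double, 16> Mat4;

struct Point3 { double x, y, z; };

// Computes [X Y Z W]^T = Q * [u v d 1]^T and returns (X/W, Y/W, Z/W).
// u, v: pixel column and row; d: disparity in pixels (not the 16x fixed-point value).
Point3 reprojectPixel(const Mat4& Q, double u, double v, double d)
{
    const double in[4] = { u, v, d, 1.0 };
    double out[4] = { 0.0, 0.0, 0.0, 0.0 };
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += Q[4 * r + c] * in[c];
    assert(out[3] != 0.0); // W is zero when d is zero with this Q
    return Point3{ out[0] / out[3], out[1] / out[3], out[2] / out[3] };
}

// The Q values from the question.
const Mat4 kQ = {{
    1., 0., 0., -3.2883545303344727e+02,
    0., 1., 0., -2.3697290992736816e+02,
    0., 0., 0.,  5.4497170185417110e+02,
    0., 0., -1.4446083962336606e-02, 0.
}};
```

With this matrix, Z reduces to 544.97 / (-0.014446 * d), so any positive disparity gives a negative Z; for example, `reprojectPixel(kQ, 320, 240, 64)` has z of roughly -589. If that reading is right, the test `point[2] < 10` accepts every such point instead of filtering out the far ones. A possible cause, which is only a guess without the calibration data, is the sign of the baseline term (for instance the left and right images being swapped).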

I tried many other things, including different images, but none of them gave a reasonable depth map.

What am I doing wrong? Should I use reprojectImageTo3D or some other approach? What is the best way to visualize a depth map? (I tried the point_cloud library.) Could you provide a working example with a dataset and calibration info that I could run to get a depth map? Or how can I get a depth map from the Middlebury stereo database (http://vision.middlebury.edu/stereo/d...)? I think it doesn't include enough calibration info.
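For a rectified pair, depth can also be computed directly from the standard relation Z = f * B / d, without going through reprojectImageTo3D, as long as the focal length f (in pixels) and the baseline B are known. The sketch below assumes exactly that; `depthFromDisparity` and `depthToGray` are hypothetical helper names, the disparity is assumed to be in pixels already, and using 0 as the "invalid" marker is a convention chosen here, not something OpenCV prescribes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Depth per pixel for a rectified pair: Z = f * B / d.
// focalPx: focal length in pixels; baseline: camera separation (Z has the same unit).
// Pixels with d <= 0 (no match) keep depth 0 as an "invalid" marker.
std::vector<float> depthFromDisparity(const std::vector<float>& disparity,
                                      float focalPx, float baseline)
{
    std::vector<float> depth(disparity.size(), 0.0f);
    for (std::size_t i = 0; i < disparity.size(); ++i)
        if (disparity[i] > 0.0f)
            depth[i] = focalPx * baseline / disparity[i];
    return depth;
}

// Maps valid depths, clamped to [zNear, zFar], linearly onto 255..1 (near is bright).
// Invalid pixels (depth <= 0) stay 0, so they show up as black.
std::vector<unsigned char> depthToGray(const std::vector<float>& depth,
                                       float zNear, float zFar)
{
    assert(zFar > zNear);
    std::vector<unsigned char> gray(depth.size(), 0);
    for (std::size_t i = 0; i < depth.size(); ++i) {
        if (depth[i] <= 0.0f) continue;
        const float z = std::min(std::max(depth[i], zNear), zFar);
        const float t = (zFar - z) / (zFar - zNear); // 1 at zNear, 0 at zFar
        gray[i] = static_cast<unsigned char>(1.0f + 254.0f * t);
    }
    return gray;
}
```

The 8-bit result can be copied into a single-channel image and shown with imshow, which is often easier to judge by eye than a raw point cloud. Clamping to a fixed [zNear, zFar] range keeps a few outliers from compressing the rest of the image into one gray level.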

Maybe you could try writing only the 3D points located in a specific region (not too far away, for example) and see what happens when you visualize the new data in MeshLab?
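One way to sketch that filtering step: keep a point only if its coordinates are finite and its depth lies inside a closed range. The struct `XYZ` and the function `keepDepthRange` are hypothetical names for this example, and the range limits are whatever makes sense for the scene. A lower bound matters as much as the upper one, because a test like `point[2] < 10` alone also lets through negative depths.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct XYZ { float x, y, z; };

// Returns the points whose coordinates are all finite and whose depth z
// lies in [zMin, zMax]. Everything else (inf/NaN from zero disparity,
// negative depths, very large sentinel depths) is dropped.
std::vector<XYZ> keepDepthRange(const std::vector<XYZ>& cloud, float zMin, float zMax)
{
    std::vector<XYZ> kept;
    for (std::size_t i = 0; i < cloud.size(); ++i) {
        const XYZ& p = cloud[i];
        const bool finite = std::isfinite(p.x) && std::isfinite(p.y) && std::isfinite(p.z);
        if (finite && p.z >= zMin && p.z <= zMax)
            kept.push_back(p);
    }
    return kept;
}
```

The finiteness check is there because a zero disparity leads to a division by zero during reprojection, and the upper bound also removes the very large Z (10000, according to the OpenCV documentation) that reprojectImageTo3D assigns to unmatched pixels when handleMissingValues is true.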

@Eduardo: I do exactly what you say: if( point[2] < 10) this if means that