Object detection using SURF & FLANN

Hi!

I wrote a program that finds an object (given as an image file) in a video. I followed the instructions in the OpenCV manual and used the SURF detector with the FLANN matcher. The program draws lines between the corners of the detected object in each video frame. The problem is that in some frames the lines are missing, or they form a strange figure.

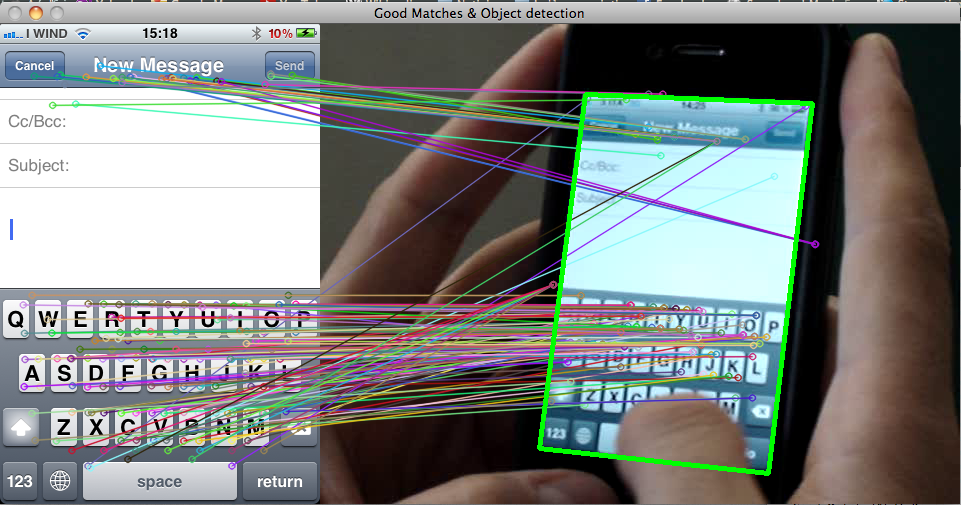

For example, this is the correct output.

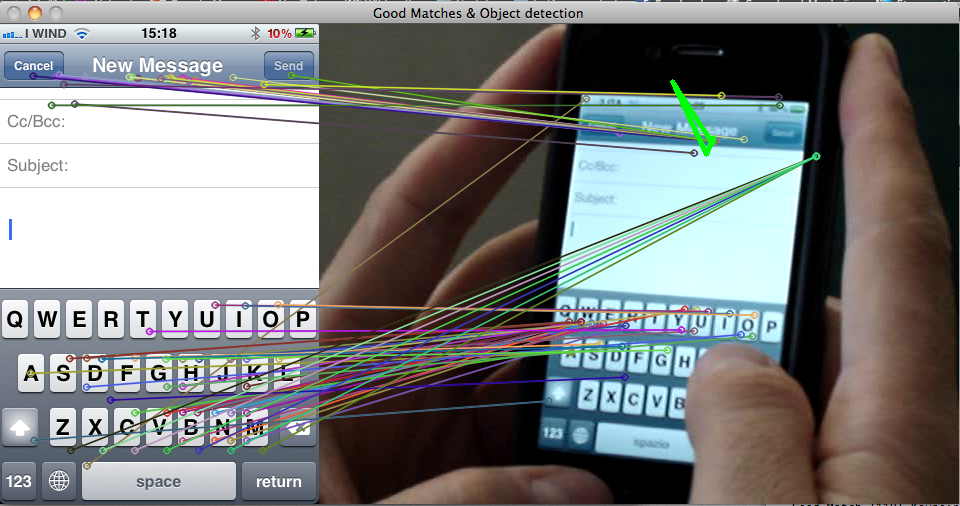

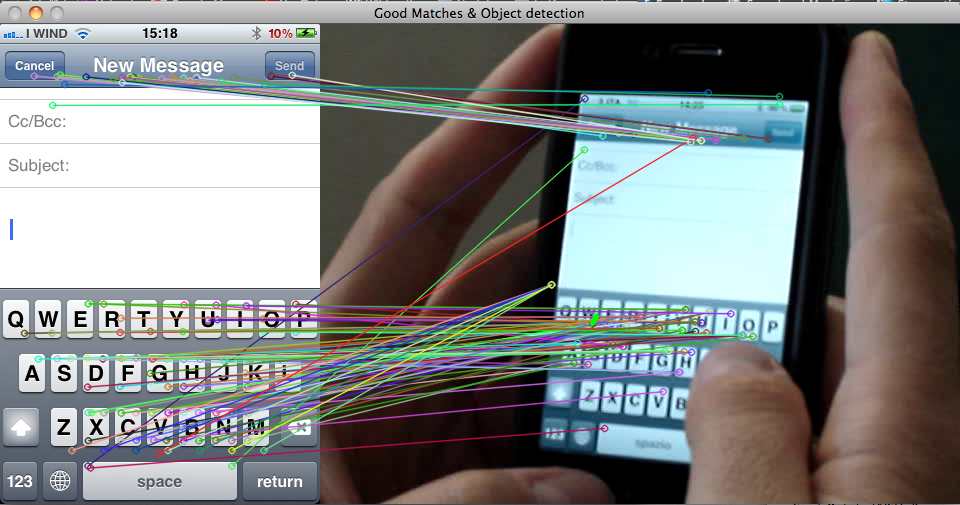

And this is the wrong result in some frames.
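Assuming the (truncated) localization step follows the OpenCV tutorial, the corner lines come from mapping the template's four corners into the frame with `cv::findHomography` and `cv::perspectiveTransform`. The plain C++ sketch below (no OpenCV; the `Pt` struct, the `Homography` array and both function names are hypothetical stand-ins) shows what that projection computes, plus one possible sanity check that is not part of the original program: a real view of a flat rectangular object, fully in front of the camera, projects to a convex quadrilateral, so a collapsed, concave or self-intersecting result can be treated as a failed detection for that frame.

```cpp
#include <array>
#include <cmath>

// Hypothetical stand-in for cv::Point2f so the sketch compiles without OpenCV.
struct Pt {
    double x;
    double y;
};

// Row-major 3x3 homography, the same layout as the 3x3 Mat that
// cv::findHomography returns.
using Homography = std::array<double, 9>;

// z-component of (b - a) x (c - b): its sign is the turn direction at b.
double cross(Pt a, Pt b, Pt c) {
    return (b.x - a.x) * (c.y - b.y) - (b.y - a.y) * (c.x - b.x);
}

// True when the four corners, taken in order, turn the same way at every
// vertex. For four points this means a convex, non-self-intersecting shape;
// a "bow-tie" or a collapsed quad fails.
bool isConvexQuad(const std::array<Pt, 4>& q) {
    bool pos = false, neg = false;
    for (int i = 0; i < 4; ++i) {
        double c = cross(q[i], q[(i + 1) % 4], q[(i + 2) % 4]);
        if (c > 0) pos = true;
        else if (c < 0) neg = true;
        else return false;  // collinear corners: degenerate result
    }
    return pos != neg;
}

// Project the template corners (0,0), (w,0), (w,h), (0,h) through H in
// homogeneous coordinates (what cv::perspectiveTransform does per point)
// and reject results that cannot be a real view of a flat rectangle.
bool projectTemplateCorners(const Homography& H, double w, double h,
                            std::array<Pt, 4>& out) {
    const std::array<Pt, 4> src = {{{0, 0}, {w, 0}, {w, h}, {0, h}}};
    int sign = 0;
    for (int i = 0; i < 4; ++i) {
        const Pt p = src[i];
        double X = H[0] * p.x + H[1] * p.y + H[2];
        double Y = H[3] * p.x + H[4] * p.y + H[5];
        double W = H[6] * p.x + H[7] * p.y + H[8];
        if (std::fabs(W) < 1e-12) return false;    // corner maps to infinity
        int s = W > 0 ? 1 : -1;
        if (sign != 0 && s != sign) return false;  // corners on both sides of the horizon
        sign = s;
        out[i] = Pt{X / W, Y / W};
    }
    return isConvexQuad(out);
}
```

With the identity matrix the corners map to themselves and the check passes; a matrix that squashes every corner onto one point (the "missing lines" case) is rejected because the corners become collinear.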

This is the code of the program:

```cpp
// Assumes OpenCV 2.4, where SURF lives in the nonfree module
#include <cstdio>
#include <iostream>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/nonfree/features2d.hpp>

using namespace cv;
using namespace std;

void surf_detection(Mat img_1, Mat img_2);

/** @function main */
int main( int argc, char** argv )
{
    CvCapture* capture = cvCaptureFromAVI("video.MPG"); // read the video file
    if (!capture) {
        cout << " Error in capture video file" << endl;
        return -1;
    }

    Mat img_template = imread("template.png"); // read the template image
    if (!img_template.data) {
        cout << " Error reading template.png" << endl;
        cvReleaseCapture(&capture);
        return -1;
    }

    int isColor = 1;

    // Get capture device properties
    cvQueryFrame(capture); // this call is necessary to get correct capture properties
    int frameH = (int) cvGetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT);
    int frameW = (int) cvGetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH);
    int fps = (int) cvGetCaptureProperty(capture, CV_CAP_PROP_FPS);
    int numFrames = (int) cvGetCaptureProperty(capture, CV_CAP_PROP_FRAME_COUNT);

    CvVideoWriter* writer = cvCreateVideoWriter("out.avi", CV_FOURCC('P','I','M','1'),
                                                fps, cvSize(frameW, frameH), isColor);

    IplImage* img = 0;
    for (int i = 0; i < numFrames; i++) {
        cvGrabFrame(capture);           // capture a frame
        img = cvRetrieveFrame(capture); // retrieve the captured frame
        // One frame was already consumed above and the reported frame count
        // may be inaccurate, so stop when no frame comes back.
        if (!img) break;

        printf("Frame n° %d \n", i);
        surf_detection(img_template, Mat(img)); // wraps the frame without copying its pixels

        if (writer) cvWriteFrame(writer, img); // add the frame to the file
        cvShowImage("mainWin", img);
        cvWaitKey(20); // wait 20 ms
    }

    cvReleaseVideoWriter(&writer);
    cvReleaseCapture(&capture);
    //waitKey(0); // Wait for a keystroke in the window
    return 0;
}
```

```cpp
/** @function surf_detection */
/** USE OF FUNCTION */
/** param1: template image */
/** param2: frame extracted from the video */
void surf_detection(Mat img_1, Mat img_2)
{
    if (!img_1.data || !img_2.data)
    {
        std::cout << " --(!) Error reading images " << std::endl;
        return; // nothing to match
    }

    //-- Step 1: Detect the keypoints using SURF Detector
    int minHessian = 400;
    SurfFeatureDetector detector(minHessian);
    std::vector<KeyPoint> keypoints_1, keypoints_2;
    std::vector<DMatch> good_matches;

    // The original do { ... } while (good_matches.size() < 100) loop reran the
    // pipeline on the same two images without clearing good_matches, so it
    // mostly re-appended the same matches and could loop indefinitely on a
    // frame with few good matches. The pipeline now runs once.
    detector.detect(img_1, keypoints_1);
    detector.detect(img_2, keypoints_2);

    //-- Draw keypoints
    Mat img_keypoints_1; Mat img_keypoints_2;
    drawKeypoints(img_1, keypoints_1, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    drawKeypoints(img_2, keypoints_2, img_keypoints_2, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    Mat descriptors_1, descriptors_2;
    extractor.compute(img_1, keypoints_1, descriptors_1);
    extractor.compute(img_2, keypoints_2, descriptors_2);
    if (descriptors_1.empty() || descriptors_2.empty())
        return; // no keypoints in one image: FLANN cannot match an empty set

    //-- Step 3: Matching descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    std::vector<DMatch> matches;
    matcher.match(descriptors_1, descriptors_2, matches);

    double max_dist = 0;
    double min_dist = 100;

    //-- Quick calculation of max and min distances between keypoints
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    printf("-- Max dist : %f \n", max_dist);
    printf("-- Min dist : %f \n", min_dist);

    //-- Keep only "good" matches (i.e. whose distance is less than 2*min_dist)
    //-- PS.- radiusMatch can also be used here.
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 2 * min_dist)
            good_matches.push_back(matches[i]);
    }

    //-- Draw only "good" matches
    Mat img_matches;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_matches,
                Scalar::all(-1), Scalar::all(-1),
                vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    // A homography needs at least 4 point pairs; skip this frame otherwise
    if (good_matches.size() < 4)
        return;

    //-- Localize the ...
```
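One possible reason a bad frame still produces lines: the "good matches" rule above is relative to the best match in the current frame, so a frame where the object is blurred, occluded or absent still keeps its least-bad matches and hands them to the localization step. The standalone sketch below works on a plain vector of match distances (no OpenCV; the function names and the `maxAllowed` cap are hypothetical, and 0.25 in the usage is an illustrative value, not a tuned one). It compares that rule with a variant that also caps the threshold, so such a frame yields no matches and can be skipped.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Indices of matches whose distance is strictly below `threshold`.
std::vector<std::size_t> belowThreshold(const std::vector<float>& dist, float threshold) {
    std::vector<std::size_t> keep;
    for (std::size_t i = 0; i < dist.size(); ++i)
        if (dist[i] < threshold) keep.push_back(i);
    return keep;
}

// The rule used in surf_detection: keep distance < 2 * min_dist.
// Note: if min_dist is 0, the strict "<" rejects every match.
std::vector<std::size_t> relativeGoodMatches(const std::vector<float>& dist) {
    if (dist.empty()) return {};
    float minDist = *std::min_element(dist.begin(), dist.end());
    return belowThreshold(dist, 2 * minDist);
}

// Hypothetical variant: the same rule, but the threshold never exceeds an
// absolute limit, so a frame where even the best match is poor keeps nothing.
std::vector<std::size_t> cappedGoodMatches(const std::vector<float>& dist, float maxAllowed) {
    if (dist.empty()) return {};
    float minDist = *std::min_element(dist.begin(), dist.end());
    return belowThreshold(dist, std::min(2 * minDist, maxAllowed));
}
```

For example, with distances {0.05, 0.08, 0.3} both functions keep the first two matches, while with {0.40, 0.45, 0.9} the relative rule still keeps two and `cappedGoodMatches(d, 0.25f)` keeps none.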

Hi Andrea, which university are you from?