projectPoints functionality question

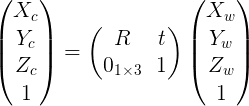

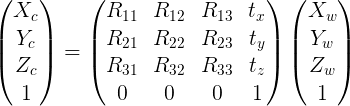

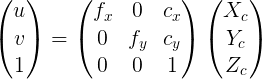

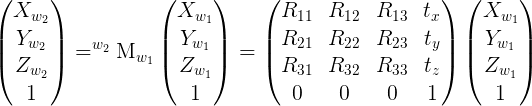

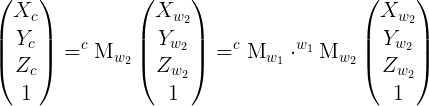

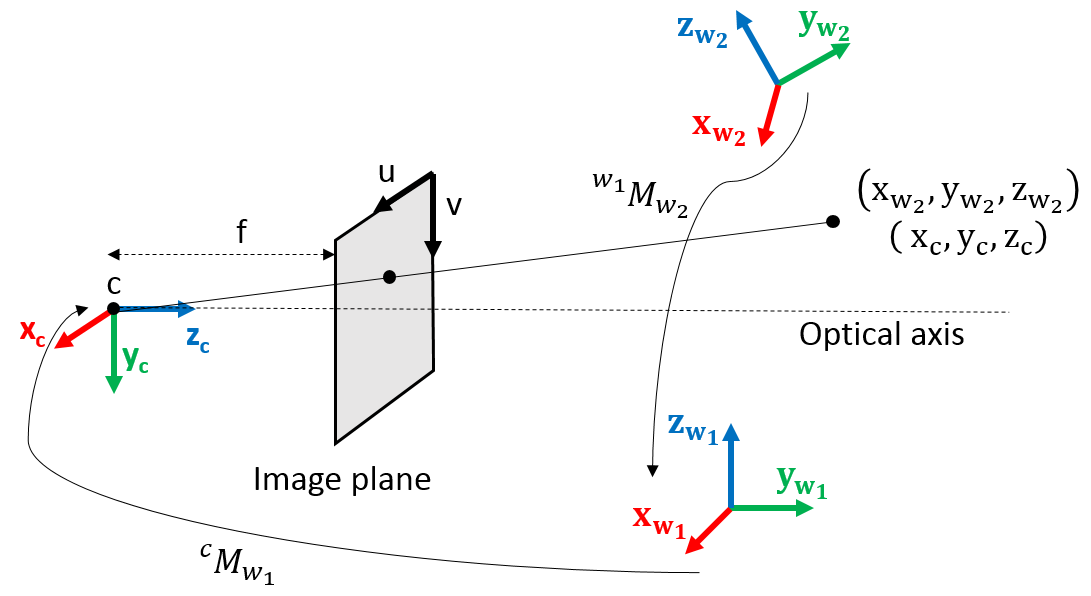

I'm doing something similar to the tutorial here: http://docs.opencv.org/3.1.0/d7/d53/t... regarding pose estimation. Essentially, I'm creating an axis in the model coordinate system and using ProjectPoints, along with my rvecs, tvecs, and cameraMatrix, to project the axis onto the image plane.

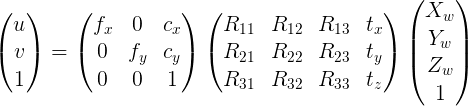

In my case, I'm working in the world coordinate space, and I have an rvec and tvec telling me the pose of an object. I'm creating an axis using world coordinate points (which assumes the object wasn't rotated or translated at all), and then using projectPoints() to draw the axes the object in the image plane.

I was wondering if it is possible to eliminate the projection, and get the world coordinates of those axes once they've been rotated and translated. To test, I've done the rotation and translation on the axis points manually, and then use projectPoints to project them onto the image plane (passing identity matrix and zero matrix for rotation, translation respectively), but the results seem way off. How can I eliminate the projection step to just get the world coordinates of the axes, once they've been rotation and translated? Thanks!