Understanding the camera matrix

Hello all,

I used a chessboard calibration procedure to obtain a camera matrix with OpenCV and Python, following this tutorial: http://opencv-python-tutroals.readthe...

I ran through the sample code on that page and was able to reproduce their results with the chessboard pictures in the OpenCV folder to get a camera matrix.

I then tried the same procedure with my own checkerboard grid and camera, and I obtained the following matrix:

mtx = [[1535, 0, 638], [0, 1536, 204], [0, 0, 1]]

I am trying to better understand these results, based on the camera sensor and lens I am using.

Based on: http://ksimek.github.io/2013/08/13/in...

Fx = fx * W/w

where:

Fx = focal length in mm

W = sensor width in mm

w = image width in pixels

fx = focal length in pixels

The size of my images: 1264 x 512 (width x height).

I am using the following lens: http://www.edmundoptics.com/imaging/i...

It has a focal length of 8 mm.

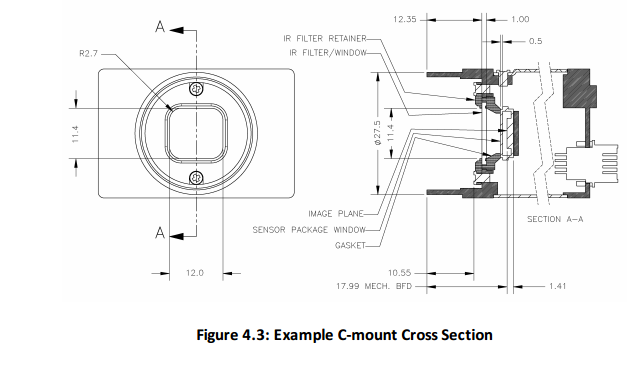

I am using a FL3-U3-13Y3 camera from PtGrey (https://www.ptgrey.com/flea3-13-mp-mo...), which has an image width of 12 mm, according to this picture:

From the camera matrix, fx is the element in the first row, first column. So above, fx = 1535. In short:

fx = 1535 pixels (from the camera matrix I obtained)

w = 1264 pixels (image size I set)

W = 12 mm (from datasheet)

Fx = 8 mm (from datasheet)

Using: Fx = fx * W/w, we would expect Fx = 1535 * 12 / 1264 = 14.57 mm
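The arithmetic above can be reproduced with a short sketch. It only restates the formula Fx = fx * W/w with the numbers given in the post; the helper name `focal_length_mm` is made up for illustration, and the 12 mm sensor width is the value assumed in the post, not a verified one.

```python
import numpy as np

# Camera matrix as reported in the post.
mtx = np.array([[1535.0, 0.0, 638.0],
                [0.0, 1536.0, 204.0],
                [0.0, 0.0, 1.0]])

def focal_length_mm(f_pixels, sensor_size_mm, image_size_pixels):
    """Convert a focal length in pixels to mm: F = f * (sensor size / image size)."""
    return f_pixels * sensor_size_mm / image_size_pixels

fx = mtx[0, 0]           # focal length in pixels (first row, first column)
sensor_width_mm = 12.0   # W, the value assumed in the post
image_width_px = 1264    # w, the image width set on the camera

Fx = focal_length_mm(fx, sensor_width_mm, image_width_px)
print(f"Fx = {Fx:.2f} mm")  # Fx = 14.57 mm
```

Because the result is directly proportional to W, any error in the assumed sensor width carries over to Fx by the same factor.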

But the actual lens is 8 mm. Why the discrepancy?

I would think that the physical size of a chessboard square has to be known, but I did not see it mentioned or used in the tutorial I linked. I basically had to scale down the chessboard grid so that it would work with my camera setup.

I would appreciate any help or insight on this.

Thanks in advance

EDIT: Actually, to be more specific, the lens has a maximum camera sensor format of 1/3", while the camera sensor format is 1/2". I found an article on this: http://www.cambridgeincolour.com/tuto...

Focal length multiplier = (1/2) / (1/3) = 1.5

Focal length of lens as listed on datasheet = 8 mm

Equivalent focal length of lens = 1.5 * 8 mm = 12 mm

Still, 12 mm is off from 14.57 mm. Am I failing to factor something else into my calculation? Could this come from bad images in which the chessboard corners are still detected?

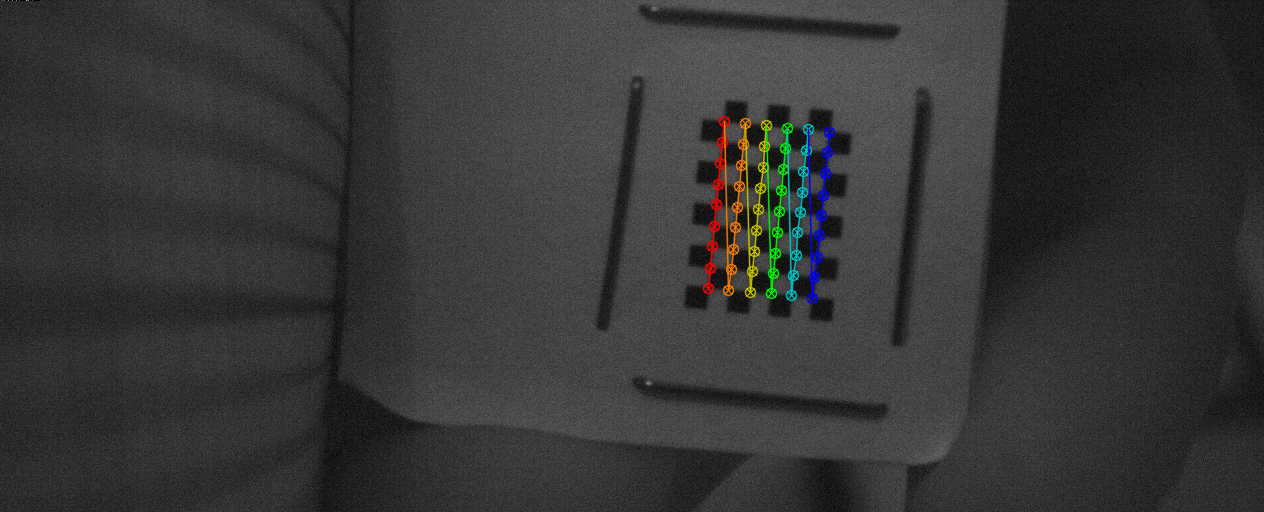

Below is an example image:

You have to supply the size of a square in the chessboard pattern when you construct the object points; see the C++ tutorial and also the C++ sample.

Hi Eduardo,

Thanks for your reply. From my reading, I see that it does ask for the size of the pattern: "Let there be this input chessboard pattern which has a size of 9 X 6."

However, as I understand it, this is referring to the number of grids, not the individual size of the grids (in either mm or in). Interestingly enough, to me it appears that their grid example is really 10x7.

In your C++ example link: static void calcChessboardCorners(Size boardSize, float squareSize, vector<Point3f>& corners, Pattern patternType = CHESSBOARD)

I believe you are referring to the input "squareSize", which by default is 1. Is this the value that should be changed, and the input could be in any desired units? I don't believe anything else has to be changed except grid array size?

Thanks

This chessboard pattern is 9x6; look at the image in the tutorial with the drawing.

The squareSize must be set to the real size (if you print it for example on A4 or A3 paper) in whatever unit you want.

Got it. The grid array refers to the number of inner corners, NOT the number of grids in a row by the number of grids in a column.
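As a small illustration of that rule, the pattern size can be derived from the number of squares on the board. The helper name `inner_corners` is hypothetical and not part of OpenCV; it only encodes "one fewer inner corner than squares along each direction".

```python
def inner_corners(squares_per_row, squares_per_col):
    """Return the (columns, rows) count of inner corners for a chessboard.

    Inner corners are the points where four squares meet, so each
    direction has one fewer corner than it has squares.
    """
    return (squares_per_row - 1, squares_per_col - 1)

# A board that is 10 squares wide and 7 squares tall has 9 x 6 inner corners.
pattern_size = inner_corners(10, 7)
print(pattern_size)  # (9, 6)
```

This is consistent with the tutorial board looking like 10x7 squares while being described as a 9x6 pattern.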

Another point I wanted to note is that I am using a micro video lens and am therefore dealing with smaller fields of view / regions of interest. I don't believe this should play a role, but I just wanted to note that the size of a single square is about 1.4 mm x 1.4 mm. So if I wanted units of mm, then squareSize = 1.4, correct?

Yes, that is correct. Every function that uses the camera intrinsic matrix (for example the solvePnP() function) will then express its result in mm.

So unless I am mistaken, I do not see a similar "squareSize" variable in the python version: http://docs.opencv.org/2.4/modules/ca...

I can see that the routine is finding the corners of the chessboard in the images I have captured. Do I manually need to scale down these values?

I printed the original chessboard and each square seems to be about 21 mm. After measuring my chessboard more closely, each square is about 1.03 mm. However, by simply scaling, I do not see how the math could work out.

You have to build the chessboard 3D model manually; look here.

So that links back to the original C++ sample. Do you mean I have to redefine the python version of "calcChessboardCorners" function?

Actually, looking at this example more closely, it looks like there is no adjustment made based on "squareSize" if the chessboard pattern is used as "patternType"?

Python code: calibrate.py.

So the key line is: "pattern_points *= square_size"
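For context, here is a minimal sketch of how the object points are typically built around the line quoted above, using NumPy only. The function name `make_object_points` is made up for this example, and the 9x6 pattern and 1.4 mm square size are simply the values mentioned earlier in the thread.

```python
import numpy as np

def make_object_points(pattern_size, square_size):
    """Build the 3D chessboard model: one (x, y, 0) point per inner corner.

    pattern_size is (columns, rows) of inner corners; square_size is the
    physical edge length of one square, in whatever unit you choose.
    """
    cols, rows = pattern_size
    pattern_points = np.zeros((rows * cols, 3), np.float32)
    # Unit-spaced grid: (0,0,0), (1,0,0), ..., (cols-1, rows-1, 0)
    pattern_points[:, :2] = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)
    # Scale the unit grid to physical units.
    pattern_points *= square_size
    return pattern_points

objp = make_object_points((9, 6), 1.4)  # square size in mm
print(objp.shape)
print(objp[:3])
```

The same array would be appended once per image to the list of object points passed to the calibration routine.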

I added that in and started playing with the parameters. I set "square_size" to 1 and then to 2. In either case, the camera matrix is the same. The rvecs are also the same. The tvecs, however, are twice as large when "square_size" is 2. Still, I don't see why the calculation for focal length doesn't work out using the data I get from the camera matrix. Is there something I am not taking into account?
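The observation that only the tvecs change is what the pinhole projection model predicts: scaling the object points and the translation by the same factor yields identical pixel coordinates. The sketch below checks this with NumPy alone. The rotation and translation are arbitrary illustrative values, not poses from the calibration in this thread, and `project` is a hypothetical helper that ignores lens distortion.

```python
import numpy as np

# Camera matrix as reported in the post.
K = np.array([[1535.0, 0.0, 638.0],
              [0.0, 1536.0, 204.0],
              [0.0, 0.0, 1.0]])

def project(K, R, t, points):
    """Ideal pinhole projection (no distortion) of Nx3 points to Nx2 pixels."""
    cam = points @ R.T + t            # board frame -> camera frame
    uvw = cam @ K.T                   # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]   # perspective divide

# Arbitrary example pose (assumption): 10 degree rotation about the y axis.
theta = np.deg2rad(10.0)
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([-4.0, -2.5, 30.0])

# Unit-spaced 9x6 grid of inner corners on the z = 0 plane.
grid = np.zeros((54, 3))
grid[:, :2] = np.mgrid[0:9, 0:6].T.reshape(-1, 2)

pix_unit = project(K, R, t, grid * 1.0)          # square_size = 1
pix_scaled = project(K, R, 2.0 * t, grid * 2.0)  # square_size = 2, t doubled

print(np.allclose(pix_unit, pix_scaled))  # True
```

Since the detected image points cannot distinguish the two cases, square_size can only affect the scale of the translations, not fx, fy, or the principal point.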

NOTE: I made an edit to the original post regarding focal length.