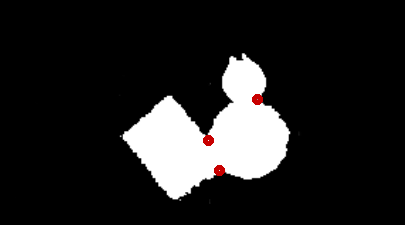

Single blob, multiple objects (Ideas on how to separate objects)

Hey friends

I'm developing an object detection and tracking algorithm. The available CPU resources are low, so I'm using simple blob analysis; no heavy tracking algorithms.

The detection framework is built and works according to my needs. It uses information from background subtraction and 3D depth data to create a binary image with white blobs as the objects to detect. Then a simple matching algorithm assigns an ID to each object and keeps tracking it. So far so good.

The problem:

The problem arises when objects are too close together. The algorithm detects them as one big object, and that's the problem I need to solve. In the example image above there are clearly 3 distinct objects, so how can I separate them?

Things I've tried

I've tried a distance transform + adaptiveThreshold approach, which gives fairly good results at individualizing objects. However, this approach is only robust with circular or square objects. If a rectangle-shaped object (such as the one in the example) shows up in the image, it just doesn't work, because of how the distance transform behaves on elongated shapes. So the distance transform approach is ruled out.

Stuff that won't work

- Watershed on the original image is not an option, firstly because the original image is very noisy due to the setup configuration, and secondly because of the strain on the CPU.

- Approaches solely based on morphological operations are very unlikely to be robust.

My generic idea to solve the problem (please comment on this)

I thought about detecting the connection points between the objects, erasing the pixels between them with a line, and then letting the detector work as it does now.

The challenge is to detect those points. I think it may be possible by calculating the distance between all contour points of a blob and identifying connection points as pairs that have a low Euclidean distance between each other but are far apart in the contour point vector (so that sequential points are not validated). This is easy to say, but not so easy to implement and test.
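A minimal sketch of that contour idea in plain Python, assuming the contour is available as an ordered list of (x, y) tuples with every boundary pixel kept (for example, points from cv2.findContours with CHAIN_APPROX_NONE converted to tuples). The function names and the `max_gap` and `min_arc` parameters are hypothetical, chosen only for illustration, and the brute-force search is quadratic in the number of contour points, so it is a starting point rather than a tuned solution.

```python
import math


def circular_gap(i, j, n):
    """Number of contour steps between indices i and j on a closed contour."""
    d = abs(i - j)
    return min(d, n - d)


def find_neck_cuts(contour, max_gap, min_arc):
    """Return pairs of contour points that are close in space but far
    apart along the contour (candidate connection points).

    contour : ordered list of (x, y) points of one closed blob outline
    max_gap : largest Euclidean distance accepted for a neck (assumed tunable)
    min_arc : smallest separation in contour steps (assumed tunable)
    """
    n = len(contour)
    candidates = []
    for i in range(n):
        xi, yi = contour[i]
        for j in range(i + 1, n):
            # Skip points that are merely sequential neighbours on the contour.
            if circular_gap(i, j, n) < min_arc:
                continue
            xj, yj = contour[j]
            gap = math.hypot(xi - xj, yi - yj)
            if gap <= max_gap:
                candidates.append((gap, i, j))

    # Keep the shortest candidate per neck: once a pair is accepted, drop
    # later pairs whose endpoints lie close to an accepted endpoint.
    candidates.sort()
    used = []
    cuts = []
    for gap, i, j in candidates:
        if any(circular_gap(k, u, n) < min_arc for k in (i, j) for u in used):
            continue
        used.extend((i, j))
        cuts.append((contour[i], contour[j]))
    return cuts
```

Each returned pair could then be joined with a black line on the binary image (for instance with cv2.line) before the existing detector runs. Both thresholds depend on object size and image resolution, so they would need tuning on real data.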

I welcome ideas and thoughts :)

Take a look at my answer as a hint: analyse the distance between points. It would be nice if you could post the original image.

@PedroBatista I would also like to see the original image, because it might be easier to discriminate the objects before you reach the binary image. The point is to somehow preserve and sharpen the edges of each object before you use distanceTransform(), for example.

There is no "normal" image in this project, because I use an Asus Xtion 3D sensor (instead of a usual camera) and use the infrared image as one of the inputs. The infrared image is good because it is resistant to illumination changes (good for background subtraction), but it is bad for almost everything else because it's very noisy.

The other input is the 3D data, so I guess this binary image is really the starting point.

Am I missing why the following would not work?

More info on a similar problem: http://docs.opencv.org/master/d3/db4/... (it is a Python tutorial, but it does much the same as the C++ interface).

Even assuming that distance transform + threshold outputs perfect seeds for all scenarios (which is not the case, mainly for non-round objects), it then requires the original image to perform watershed, am I right? I really do not know what happens inside the watershed algorithm, so there might be a misconception here, but I'm assuming that it computes the edges of the image and then "fills" the image with different labels according to those edges.

My original image is really noisy, and no coherent edges can be computed from it.

Do you have the binary image or not? What watershed does is take a pouring center for each blob, plus one center in the background, and start pouring water until the fronts run into each other. A separation is made where they meet. The binary image is used to define the borders on how far the fluid can flow.
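To illustrate that description, here is a small plain-Python stand-in for seeded flooding on a binary mask. It is not OpenCV's cv2.watershed, only a sketch of the same idea, and it assumes the mask is a list of rows of 0/1 values with at least one background pixel. The function names and the `seed_fraction` parameter are hypothetical. Seeds come from a city-block distance transform thresholded at a fraction of its global peak, which is exactly the step that is weak for elongated or very differently sized objects; the flooding itself needs nothing but the binary mask.

```python
import heapq
from collections import deque

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def distance_to_background(mask):
    """City-block distance from each foreground pixel to the nearest
    background pixel, via a multi-source breadth-first search."""
    h, w = len(mask), len(mask[0])
    dist = [[-1 if mask[y][x] else 0 for x in range(w)] for y in range(h)]
    queue = deque((y, x) for y in range(h) for x in range(w) if not mask[y][x])
    while queue:
        y, x = queue.popleft()
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and dist[ny][nx] == -1:
                dist[ny][nx] = dist[y][x] + 1
                queue.append((ny, nx))
    return dist


def label_seeds(dist, min_dist):
    """Give each connected group of pixels with dist >= min_dist its own label."""
    h, w = len(dist), len(dist[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if dist[y][x] < min_dist or labels[y][x]:
                continue
            count += 1
            labels[y][x] = count
            stack = [(y, x)]
            while stack:
                cy, cx = stack.pop()
                for dy, dx in NEIGHBOURS:
                    ny, nx = cy + dy, cx + dx
                    if (0 <= ny < h and 0 <= nx < w and not labels[ny][nx]
                            and dist[ny][nx] >= min_dist):
                        labels[ny][nx] = count
                        stack.append((ny, nx))
    return labels, count


def flood_from_seeds(mask, labels, dist):
    """Grow every seed outwards, deepest pixels first, staying inside the
    mask. Fronts stop where they meet, which is where the blob is split."""
    h, w = len(mask), len(mask[0])
    heap = [(-dist[y][x], y, x)
            for y in range(h) for x in range(w) if labels[y][x]]
    heapq.heapify(heap)
    while heap:
        _, y, x = heapq.heappop(heap)
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if (0 <= ny < h and 0 <= nx < w and mask[ny][nx]
                    and not labels[ny][nx]):
                labels[ny][nx] = labels[y][x]
                heapq.heappush(heap, (-dist[ny][nx], ny, nx))
    return labels


def split_blob(mask, seed_fraction=0.7):
    """Return (label image, number of seeds) for a binary mask."""
    dist = distance_to_background(mask)
    peak = max(max(row) for row in dist)
    labels, count = label_seeds(dist, max(1, seed_fraction * peak))
    return flood_from_seeds(mask, labels, dist), count
```

Calling `split_blob(mask)` returns a label image in which background stays 0 and each seeded object gets its own positive label. Foreground regions that never receive a seed and are not connected to one also stay 0 in this sketch.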

Oh, now I get it. I had the wrong idea about watershed then, thanks. I'll give it a try.

Yep, I use it to segment fruit hanging close together, after a detector yields one big blob. It works perfectly fine for me.