For example, you can check this paper:

"An experimental comparison of gender classification methods"

http://www.inf.unideb.hu/~sajolevente/papers2/gender/2008.%20An%20experimental%20comparison%20of%20gender%20classification%20methods.pdf

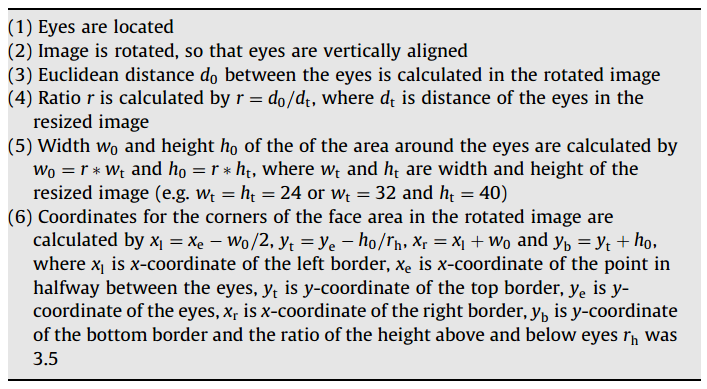

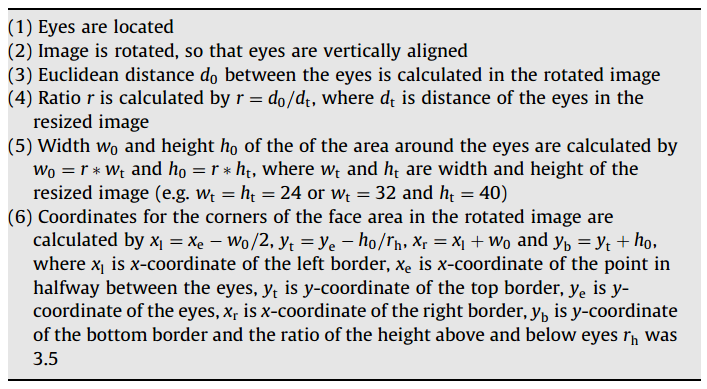

Table 1 of the paper describes a face normalization algorithm.

This code needs to be checked:

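    #include <cmath>
    #include <iostream>
    #include <vector>
    #include <opencv2/core.hpp>
    #include <opencv2/imgproc.hpp>

    class RegionNormalizer {
    public:
        RegionNormalizer() {}
        virtual ~RegionNormalizer() {}

        // Crops and rescales a region of an already aligned image using the landmarks.
        virtual cv::Mat process(const cv::Mat& aligned_image, const cv::Rect& region, const std::vector<cv::Point2d>& landmarks) = 0;

        // Takes cv::Point2d so that sub-pixel landmark coordinates are not truncated.
        double euclidean_distance(const cv::Point2d& p1, const cv::Point2d& p2) {
            double dx = p1.x - p2.x;
            double dy = p1.y - p2.y;
            return std::sqrt(dx * dx + dy * dy);
        }
    };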

    class GenericFlandmarkRegionNormalizer : public RegionNormalizer {
    public:
        GenericFlandmarkRegionNormalizer(const cv::Size& size, double eyes_distance_percentage, double hr);
        virtual ~GenericFlandmarkRegionNormalizer() {}

        virtual cv::Mat process(const cv::Mat& normalized_image, const cv::Rect& face, const std::vector<cv::Point2d>& landmarks);

    private:
        cv::Size size_resized;            // size of the resized (output) face image
        double eyes_distance_percentage;  // eye distance as a fraction of the output width
        double hr;                        // h0 / hr rows are kept above the eye line
    };

    /*
      example values
      size(80,84) , eyes_distance_percentage = 0.75 , hr = 3.5
    */

    GenericFlandmarkRegionNormalizer::GenericFlandmarkRegionNormalizer(const cv::Size& size, double eyes_distance_percentage, double hr)
        : size_resized(size), eyes_distance_percentage(eyes_distance_percentage), hr(hr) {}

    cv::Mat GenericFlandmarkRegionNormalizer::process(const cv::Mat& normalized_image, const cv::Rect& face, const std::vector<cv::Point2d>& landmarks) {
        // wt, ht = width and height of the resized image
        const int wt = this->size_resized.width;
        const int ht = this->size_resized.height;

        // 1. Eyes are located (RIGHT_EYE_ALIGN and LEFT_EYE_ALIGN are landmark indices defined elsewhere)
        const cv::Point2d right_eye = landmarks[RIGHT_EYE_ALIGN];
        const cv::Point2d left_eye = landmarks[LEFT_EYE_ALIGN];

        // 2. Image is rotated so that the eyes lie on a horizontal line --> the input image is already aligned

        // 3. Euclidean distance between the eyes is calculated in the rotated image
        //    (kept as double; storing it in an int would truncate it)
        const double d0 = euclidean_distance(right_eye, left_eye);

        // 4. Ratio r is calculated by r = d0 / dt, where dt is the distance of the eyes in the resized image
        const double dt = wt * this->eyes_distance_percentage;
        if (dt <= 0.0) {
            return cv::Mat();
        }
        const double r = d0 / dt;

        // 5. Width w0 and height h0 of the area around the eyes are calculated (in the source image)
        const int w0 = cvRound(r * wt);
        const int h0 = cvRound(r * ht);

        // 6. Coordinates for the corners of the face area in the rotated image are calculated
        // (xe, ye) = point halfway between the eyes; the midpoint does not depend on which eye is leftmost
        const double xe = (left_eye.x + right_eye.x) / 2.0;
        const double ye = (left_eye.y + right_eye.y) / 2.0;
        // xl is the x-coordinate of the left border
        const int xl = cvRound(xe - w0 / 2.0);
        // yt is the y-coordinate of the top border; h0 / hr is the height above the eyes
        const int yt = cvRound(ye - h0 / this->hr);

        // The right and bottom borders follow from xr = xl + w0 and yb = yt + h0
        const cv::Rect cropRect(xl, yt, w0, h0);
        if (cropRect.width <= 0 || cropRect.height <= 0) {
            return cv::Mat();
        }

        // check valid ROI: the crop must lie completely inside the image
        const cv::Rect image_rect(0, 0, normalized_image.cols, normalized_image.rows);
        if ((cropRect & image_rect) != cropRect) {
            std::cout << cropRect.width << " " << cropRect.height << std::endl;
            std::cout << image_rect.width << " " << image_rect.height << std::endl;
            std::cout << "Bad ROI" << std::endl;
            return cv::Mat();
        }

        // 7. Assumed final step: crop the ROI and scale it to the target size
        cv::Mat resized;
        cv::resize(normalized_image(cropRect), resized, this->size_resized);
        return resized;
    }
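The crop geometry of steps 3 to 6 can be checked by hand without OpenCV. The following is only a minimal sketch: `Pt`, `Box` and `eye_crop` are hypothetical names standing in for `cv::Point2d`, `cv::Rect` and the body of `process`, and it assumes the eye midpoint is used as the reference point and that values are rounded rather than truncated.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins for cv::Point2d and cv::Rect, so the arithmetic
// can be compiled and checked without OpenCV.
struct Pt { double x, y; };
struct Box { int x, y, width, height; };

// Computes the crop rectangle around the eyes in an already aligned image.
// target_w, target_h: size of the normalized face (e.g. 80 x 84)
// eye_frac: eye distance as a fraction of target_w (e.g. 0.75)
// hr: h0 / hr pixels are kept above the eye line (e.g. 3.5)
Box eye_crop(Pt left_eye, Pt right_eye, int target_w, int target_h,
             double eye_frac, double hr) {
    assert(target_w > 0 && target_h > 0 && eye_frac > 0.0 && hr > 0.0);

    // Step 3: distance between the eyes in the aligned image.
    const double d0 = std::hypot(right_eye.x - left_eye.x,
                                 right_eye.y - left_eye.y);
    // Step 4: scale ratio between source and target.
    const double dt = target_w * eye_frac;
    const double r = d0 / dt;
    // Step 5: size of the face area in the source image.
    const int w0 = static_cast<int>(std::lround(r * target_w));
    const int h0 = static_cast<int>(std::lround(r * target_h));
    // Step 6: top-left corner, measured from the midpoint between the eyes.
    const double xe = (left_eye.x + right_eye.x) / 2.0;
    const double ye = (left_eye.y + right_eye.y) / 2.0;
    const int xl = static_cast<int>(std::lround(xe - w0 / 2.0));
    const int yt = static_cast<int>(std::lround(ye - h0 / hr));
    return Box{xl, yt, w0, h0};
}
```

With eyes at (100, 120) and (160, 120) and the example values above (80 x 84, 0.75, 3.5), the eye distance already equals the target distance of 60 px, so r = 1 and the crop is the 80 x 84 rectangle whose top-left corner is (90, 96).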

1) see http://docs.opencv.org/ref/master/d3/...

2) Did you mean intensity/lighting normalization, or shape/geometry normalization?

I want to normalize the face region using the left eye, right eye, nose, and left and right mouth points. The Haar detector returns extra regions for the face, so I have detected the facial feature points, and I want to normalize the face based on them.
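For the geometric part, one common starting point is to level the eye line before cropping. The sketch below is only an illustration under assumptions: `Pt`, `eye_angle` and `level_landmarks` are hypothetical names, only the two eye points drive the rotation, and the nose and mouth points are simply carried along. With OpenCV, the same angle converted to degrees could be passed to `cv::getRotationMatrix2D` and `cv::warpAffine` to rotate the image itself.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for cv::Point2d.
struct Pt { double x, y; };

// Angle in radians of the line from the left eye to the right eye,
// measured against the x-axis.
double eye_angle(Pt left_eye, Pt right_eye) {
    return std::atan2(right_eye.y - left_eye.y, right_eye.x - left_eye.x);
}

// Rotates all landmarks about the midpoint between the eyes so that the
// eye line becomes horizontal. Distances between landmarks are preserved.
std::vector<Pt> level_landmarks(const std::vector<Pt>& pts,
                                Pt left_eye, Pt right_eye) {
    // The eyes must not coincide, otherwise the angle is undefined.
    assert(left_eye.x != right_eye.x || left_eye.y != right_eye.y);

    const double a = eye_angle(left_eye, right_eye);
    const Pt c{(left_eye.x + right_eye.x) / 2.0,
               (left_eye.y + right_eye.y) / 2.0};
    // Rotating by -a cancels the tilt of the eye line.
    const double cs = std::cos(-a);
    const double sn = std::sin(-a);

    std::vector<Pt> out;
    out.reserve(pts.size());
    for (const Pt& p : pts) {
        const double dx = p.x - c.x;
        const double dy = p.y - c.y;
        out.push_back(Pt{c.x + dx * cs - dy * sn,
                         c.y + dx * sn + dy * cs});
    }
    return out;
}
```

After leveling, both eyes share the same y-coordinate, so the crop rectangle can be computed from the eye distance alone.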