So, this is how it is done.

First, you calibrate your camera intrinsically. Get the matrices required for the calculations. (D and M)

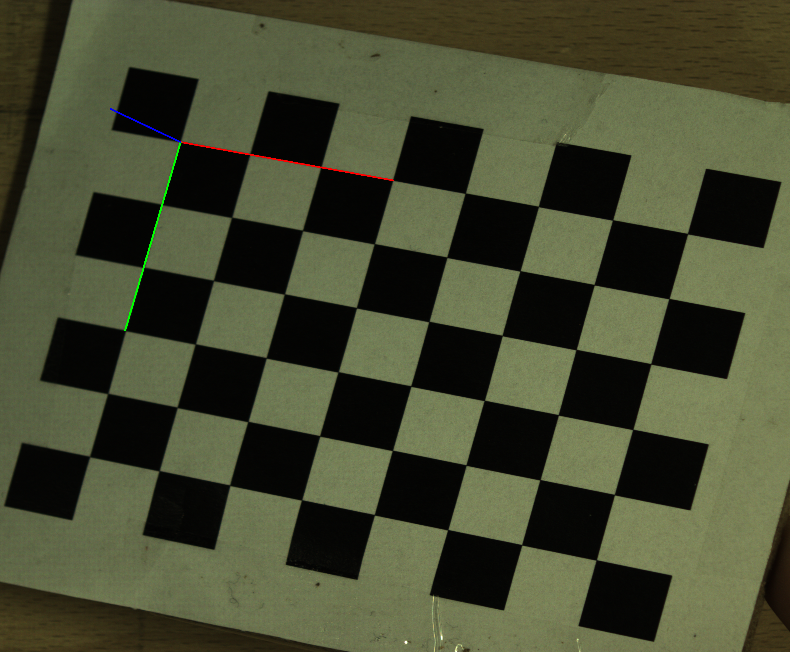

Then, you place your pattern on a

fixed location in the world (work station, desk, whatever you use,

just mark it and make sure the board

doesn't move at all. Note the world coordinates of the pattern (whichever reference point you have. Robot base, desk corner, whatever). This means you measure X and Y precisely from your world center to the board's center (or corner point, whichever reference point for board you want to use, I took the top left square's center).

Use the code given by OpenCV, get the pose of the checkerboard in camera frame. (rvecs and tvecs) Now you know the board's location in camera and in world frame. It now became a game of matrices, which can be solved. One can use ROS (another framework, not directly related to OpenCV, but nevertheless it can be used) or simply mathematical calculations (see below edit).

Using ROS facility of TF to get the camera pose is quite straightforward, but mathematically speaking, it is also fine to just do the matrix multiplications. So in short, OpenCV itself doesn't give you the camera pose, but you can use checkerboard's pose to estimate the camera pose. It's totally doable. I'll update the answer with a better explanation and perhaps add a psuedo code in a while.

EDIT: So here is the pseudo code of the entire story:

UNIT_SIZE = 15 # one square on checkerboard has this much length in mm

world_point_pose = np.asarray(0.55,0.32, 0)

transform_from_board_to_world = world_point_pose # i.e. the measured point, if the coordinate systems are aligned (=rotation)!

board_pose_in_camera_frame = matrix_from_vecs(camera.tvecs.flatten() * UNIT_SIZE, camera.rvecs.flatten())

pose_of_camera_in_board_frame = np.linalg.inv(board_pose_in_camera_frame)

transform_from_camera_to_board = pose_of_camera_in_board_frame

transform_from_board_to_camera= np.linalg.inv(transform_from_camera_to_board)

camera_in_world = np.dot(transform_from_board_to_world, transform_from_camera_to_board) # this is what we want

it's the position / rotation of the checkerboard relative to the camera (in camera coords, and specific to that single image of the checkerboard).

what are you trying to find out ? the position of the camera in the world ? you can't.

Yes, I am trying to find

rvecsandtvecsfor the camera, so that I can modify theurdfmodel in ROS and set it up. I thought this is why this was used. If not, just out of curiosity: why in the world would anyone be interested in the pose of a checkerboard? I mean, isn't the whole point is to figure out the camera pose, so that we could set it up and use transformation matrices to switch frames and get relative coordinates?sorry, but how would we know, what "modify the urdf model in ROS " means ?

maybe, to cross-check, if the calibration makes sense ?

@berak, @Raki,

np.load('B.npz'). Where do I get filename? The filename is missing.@supra56, blind guess: since this is specific to your own calibration data (chessboard images),

b.npzis not part of the samples.you'll have to do your own calibration, and save cam-matrix, dist-coeffs, rvecs & tvecs on your own

@supra56 That is the output of your intrinsic calibration procedure, but you can also simply create two arrays containing distortian coefficients and camera matrix, since the functions only require these two. Simply open your calibration file, copy these matrices and paste them in your OpenCV example as arrays. That's it.

As an example, here are mine:

M_MATRIX = np.asmatrix([21696.117836, 0.000000, 1956.264838, 0.000000, 22075.059632, 531.607994, 0.000000, 0.000000, 1.000000]).reshape(3,3)D_MATRIX = np.asmatrix([-0.111606, -2.056716, -0.000301, 0.003957, 0.000000]).reshape(1,5)These matrices can be passed in to the function like this :

retval, rvecs, tvecs, inliers = cv2.solvePnPRansac(objp, corners2, M_MATRIX, D_MATRIX)and that's basically it.ah, now you're using the rvecs and tvecs from solvePnP(), you can use an arbitrary chessboard image !

@Raki. Did you used the code from that link code?