Isn't the calcOpticalFlowFarneback example calculating hue wrong?

I believe the canonical optical flow example is the one provided at https://docs.opencv.org/master/d7/d8b/tutorial_py_lucas_kanade.html. It takes the flow angle, obtained by converting the flow from Cartesian to polar coordinates, in the range [0, 2π) and converts it to hue with `ang*180/np.pi/2`, producing hue in the range [0, 180). This doesn't make any sense to me. I think hue, being an angular quantity itself, should span the full direction of the flow, so it should have the range [0, 360), which is simply achieved with `ang*180/np.pi`.
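To make the two formulas concrete, here is a minimal sketch that evaluates both for the four cardinal directions. The function names `hue_tutorial` and `hue_proposed` are made up for this illustration, and plain `math` stands in for the NumPy arrays used in the tutorial.

```python
import math


def hue_tutorial(ang):
    """Formula from the tutorial: radians in [0, 2*pi) -> hue in [0, 180)."""
    return ang * 180 / math.pi / 2


def hue_proposed(ang):
    """Formula proposed in this question: radians in [0, 2*pi) -> hue in [0, 360)."""
    return ang * 180 / math.pi


# Print both hue values for flow pointing in the four cardinal directions.
for direction_deg in (0, 90, 180, 270):
    ang = math.radians(direction_deg)
    print(direction_deg, round(hue_tutorial(ang), 6), round(hue_proposed(ang), 6))
```

The only difference is the factor of two, so which formula is appropriate depends entirely on the units in which the hue channel is later interpreted.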

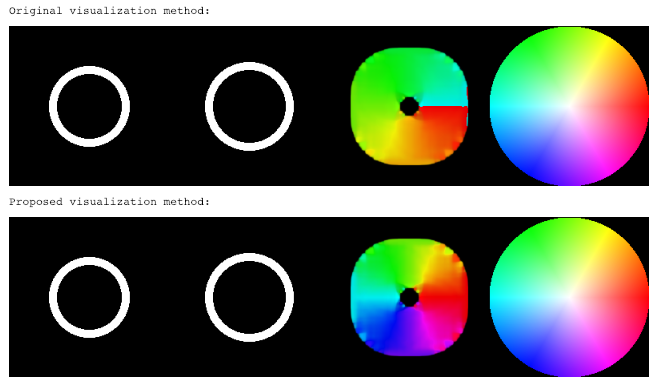

I am perplexed by this, since the seemingly correct equation is also the simpler one. Someone deliberately added an extra division by two, thereby breaking the equation (by my reasoning), so I am concerned that I am misunderstanding something. But if you run the optical flow algorithm on artificially generated data (painted rectangles offset in the four cardinal directions), you get much more sensible results with my recommended equation: the output uses the entire hue range and shows smooth direction gradients. The existing example uses only half of the available hue range (from red to cyan) and, worse, yields a discontinuity between directions 359° and 0°, shown as a sudden jump from cyan to red.
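The discontinuity described above can be reproduced without OpenCV, under one explicit assumption: that the hue number is interpreted in degrees over [0, 360). The sketch below uses Python's `colorsys` as a stand-in for the HSV conversion, and all helper names are hypothetical.

```python
import colorsys


def rgb_for_hue_degrees(hue_deg):
    """Fully saturated RGB color for a hue given in degrees (assumed convention)."""
    r, g, b = colorsys.hsv_to_rgb((hue_deg % 360.0) / 360.0, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


def halved(direction_deg):
    """Direction -> hue with the extra division by two."""
    return direction_deg / 2.0


def full(direction_deg):
    """Direction -> hue without the extra division by two."""
    return direction_deg


def color_jump(mapping, eps_deg=0.5):
    """Largest per-channel difference between the colors assigned to
    directions (360 - eps_deg) and 0 degrees."""
    a = rgb_for_hue_degrees(mapping(360.0 - eps_deg))
    b = rgb_for_hue_degrees(mapping(0.0))
    return max(abs(x - y) for x, y in zip(a, b))


print("halved:", color_jump(halved))
print("full:  ", color_jump(full))
```

Under that degree-based reading, the halved mapping ends near cyan just before wrapping back to red, while the full mapping wraps from near-red to red. A different hue convention changes this picture, so the result only holds under the stated assumption.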

How has this example stood for so long in this form? To compound my confusion, this apparent error has propagated to other projects, as shown at https://www.programcreek.com/python/example/89313/cv2.calcOpticalFlowFarneback. I can't be the first person to have noticed this, so I genuinely suspect that I am interpreting something incorrectly. I'm very confused.

What does everyone else think about this?

Thanks.

Here is an example of the old method of flow-direction/hue mapping and my proposed method. The optical flow consists of a radially expanding ring, so as to show flow in all possible directions and their associated color mapping. I have included a typical HSV color wheel for comparison. I won't bother pasting all the code in here. I'll add it as an answer tomorrow (I can't answer it today because my account is too young).

It may help to know that in OpenCV, hue for 8-bit images is in [0, 180), not [0, 360), since it has to fit into a byte.
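A rough way to see what that byte-sized convention implies: if an 8-bit hue value `h8` stands for `2 * h8` degrees, then values in [0, 180) already sweep the whole color wheel. The sketch below emulates that convention with Python's `colorsys` rather than calling OpenCV, and `rgb_from_hue8` is a made-up helper name.

```python
import colorsys


def rgb_from_hue8(h8):
    """Fully saturated RGB color for an 8-bit style hue, where h8 in [0, 180)
    is taken to mean 2 * h8 degrees (emulated convention, not an OpenCV call)."""
    hue_deg = (2 * h8) % 360
    r, g, b = colorsys.hsv_to_rgb(hue_deg / 360.0, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


# Flow direction in degrees -> 8-bit hue via the tutorial's halving -> color.
for direction_deg in (0, 90, 180, 270, 358):
    h8 = int(direction_deg / 2)
    print(direction_deg, h8, rgb_from_hue8(h8))
```

With this reading, a direction of 180° lands on cyan and a direction just below 360° lands back near red, so the halved formula and an 8-bit HSV image fit together.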

Oookay, but the example is still wrong. I guess it could explain how someone might create the example incorrectly to begin with, but it's still easy to get around this one-byte discrepancy to create a properly working angle-hue encoding. So the example should probably be updated.

If you WANT the hue in [0, 360], convert the image to float before the HSV conversion.

And again, it's just an attempt at visualizing something; don't take it too seriously.

I'm honestly surprised at your response. The example reveals discontinuous hue transitions from cyan to red to indicate an epsilon change in optical flow direction, while also not honoring the intuitive mapping between hue and direction shown on an HSV color wheel. I spent a bit of time figuring out how this was occurring, and your response is "go ahead and fix it so it works properly if you want, but don't 'take it too seriously' that the documented example of how to use this function is basically broken because attempting to 'visualize something' isn't very important anyway."

So, in other words, the example won't be fixed and therefore someone else will likely needlessly puzzle over this conundrum in the future.

I was just trying to help. I'm sorry if I intruded. Good luck.

Oh, if you can, please go ahead and do so!

Yeah, that would actually be a good idea.

Fair enough, but I admit to having no history of contribution to OpenCV. I'll see if I can get in there and submit the change myself. Cheers!

@kebwi Are you talking about the "Dense Optical Flow in OpenCV" example? If so, I don't understand your problem. HSV space is only used for display: the result is a false-color image. You can use the full scale for H, but is the result visually better?

I have described two ways in which the visualization would be better. Utilizing the full hue range doubles the useful colors available for discerning flow direction. More critically, the proposed method does not introduce color discontinuities at minute differences in flow direction between 359 and 0 degrees (whereas the existing method jumps from cyan to red). See my example in the answers section below.

Oh, since I'm a new user, it won't let me answer my own question. I guess I'll paste the answer in tomorrow. Sheesh. I can't believe this.

In lieu of waiting until tomorrow to post an answer, I added it as an edit to the original question. Please see above for further clarification.