360° lens for panoramic view and depth

Hi all,

I'm sorry if my question is a silly one. I searched Google for possible answers, but I haven't found much about this topic.

I would like to use a 360-degree lens with my RGB camera (or directly use a 360° camera) to get a panoramic view with depth information. More precisely, I would like to get a 3D view in a panoramic video.

Several years ago, I used OpenCV to implement stereo vision with two RGB cameras and a fixed baseline. Should I implement something similar with two 360-degree cameras, or can I obtain the 3D view with just a single camera?
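For reference, the classic two-camera setup relies on the rectified-stereo triangulation relation Z = f·B/d, where f is the focal length in pixels, B the baseline and d the disparity. The sketch below assumes rectified pinhole images (which a 360° lens does not produce without remapping first); the function names and numbers are hypothetical and only illustrate the formula and how depth error scales with baseline.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified pinhole stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means a point at infinity (or a bad match): no finite depth.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(focal_px: float, baseline_m: float, depth_m: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a disparity error of disparity_step_px.

    Differentiating Z = f * B / d gives |dZ| ~= Z**2 / (f * B) * |dd|,
    so the error grows with the square of depth and shrinks as the baseline grows.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, 6 cm baseline, 42 px disparity.
    z = depth_from_disparity(700.0, 0.06, 42.0)
    print(f"depth: {z:.3f} m")
    # Compare the 1-pixel depth error at 1 m for a 6 cm and a 1 cm baseline
    # (a short baseline is what a narrow scope would allow).
    print(f"1-px error at 1 m, B = 6 cm: {depth_resolution(700.0, 0.06, 1.0):.4f} m")
    print(f"1-px error at 1 m, B = 1 cm: {depth_resolution(700.0, 0.01, 1.0):.4f} m")
```

Since the depth error is inversely proportional to B, a baseline constrained by the scope diameter makes depth noticeably less precise, whichever lens is used.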

Is there any sample code or documentation I can read to learn more?

Thank you!

EDIT 1 (27/06/2018): figures and 360-degree lens

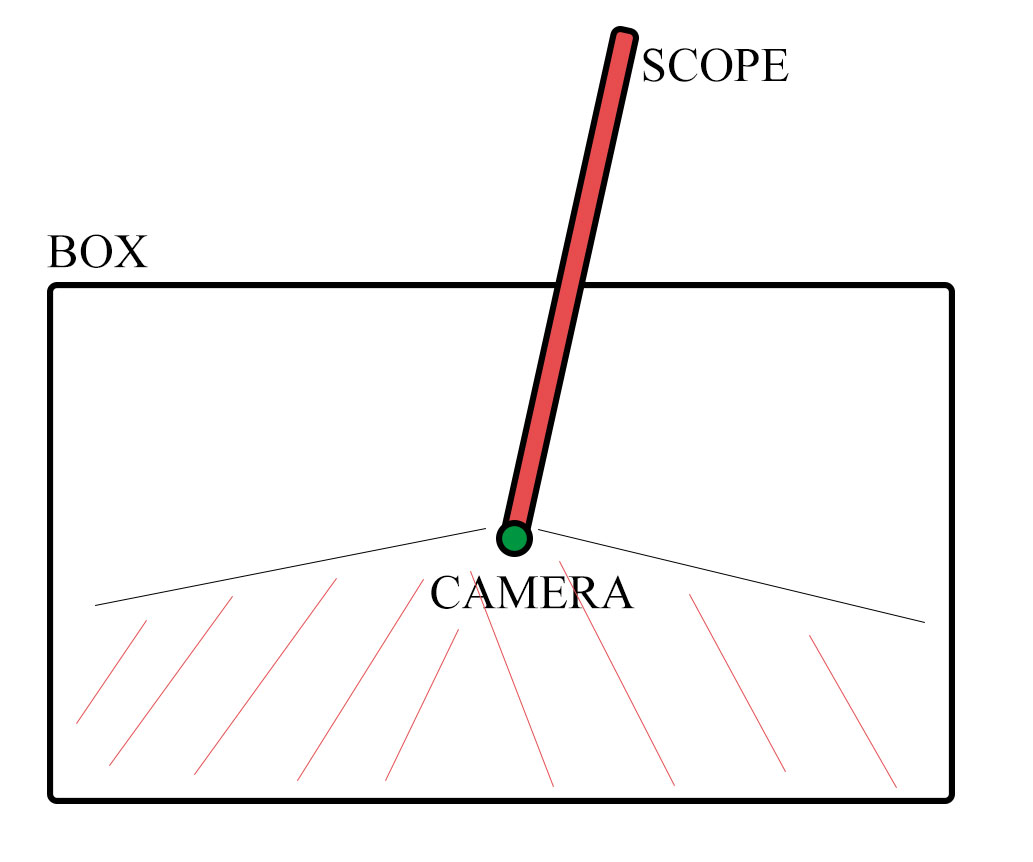

To make things a little clearer, I drew a figure of my system. I need to put the camera (or two cameras) at the bottom of a scope and use it to inspect a black box. I need to see everything in front of the camera as a 3D panoramic view, so that I also get depth information. Scopes usually have a camera with a very narrow FOV (only a few degrees), so you cannot get an overall idea of the contents of the box. Moreover, it is like looking with only one eye, so you have no idea of depth. I would like to use a 360-degree lens to maximize the FOV, and I also need some depth information.
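To put numbers on the narrow-FOV problem: an ideal rectilinear (pinhole) lens has a horizontal FOV of 2·atan(w / 2f), which can never reach 180°, whereas an ideal equidistant fisheye (r = f·θ) covers 2·r_max / f radians and can exceed 180°. This is a minimal sketch assuming those two textbook projection models and made-up sensor and focal values; real 360° lenses (fisheye, catadioptric or panoramic annular) follow their own calibrated models.

```python
import math


def rectilinear_fov_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Horizontal FOV of an ideal pinhole (rectilinear) lens: 2 * atan(w / (2 f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def equidistant_fisheye_fov_deg(image_radius_mm: float, focal_mm: float) -> float:
    """FOV of an ideal equidistant fisheye (r = f * theta): 2 * r_max / f radians."""
    return math.degrees(2.0 * image_radius_mm / focal_mm)


if __name__ == "__main__":
    # Hypothetical scope optics: 3.6 mm wide sensor, 40 mm effective focal length.
    print(f"rectilinear: {rectilinear_fov_deg(3.6, 40.0):.1f} deg")
    # Even a very short focal length stays below 180 deg, because atan(x) < 90 deg.
    print(f"rectilinear, f = 0.01 mm: {rectilinear_fov_deg(3.6, 0.01):.1f} deg")
    # Hypothetical fisheye: 1.8 mm image-circle radius, 1.0 mm focal length.
    print(f"equidistant fisheye: {equidistant_fisheye_fov_deg(1.8, 1.0):.1f} deg")
```

With these hypothetical values the rectilinear lens covers only about 5°, while the equidistant fisheye exceeds 180°. Note that a wider FOV alone does not add depth: a single image from either lens is still a one-eye view.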

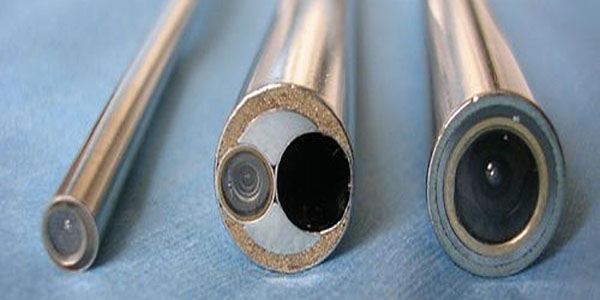

The scopes are usually like the ones in this figure and have a very limited FOV:

The lens I would like to use is something like this one (or similar):