SVM using SURF features

This is the code I wrote to train an SVM with SURF features. It compiles without any syntax errors, but I think there is a logical error in it.

string YourImagesDirectory="D:\\Cars\\";

vector<string> files=listFilesInDirectory(YourImagesDirectory+"*.jpg");

//Load NOT cars!

string YourImagesDirectory_2="D:\\not_cars\\";

vector<string> files_no=listFilesInDirectory(YourImagesDirectory_2+"*.jpg");

// Initialize constant values

int nb_cars = files.size();

const int not_cars = files_no.size();

const int num_img = nb_cars + not_cars; // Get the number of images

const int image_area = 30*40;

// Initialize your training set.

Mat training_mat(num_img,image_area,CV_32FC1);

Mat labels(num_img,1,CV_32FC1);

// Set temp matrices

Mat tmp_img;

Mat tmp_dst( 30, 40, CV_8UC1 ); // to the right size for resize

// Collect all file names, cars first, then non-cars

std::vector<string> all_names;

all_names.assign(files.begin(),files.end());

all_names.insert(all_names.end(), files_no.begin(), files_no.end());

// Load image and add them to the training set

int count = 0;

vector<string>::const_iterator i;

string Dir;

for (i = all_names.begin(); i != all_names.end(); ++i)

{

Dir=( (count < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = imread( Dir +*i, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst; // 30x40 grayscale image (shares data with tmp_dst, not yet a single row)

detector.detect( row_img, keypoints);

drawKeypoints( row_img, keypoints, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

extractor.compute( row_img, keypoints, descriptors_1);

row_img.reshape(1, 1).convertTo( training_mat.row(count), CV_32FC1 ); // flatten to 1x1200 so the data lands in the training row

labels.at< float >(count, 0) = (count<nb_cars)?1:-1; // 1 for car, -1 otherwise

++count;

}

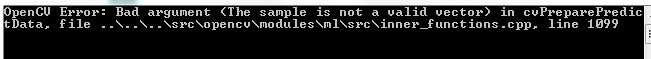

When I try to predict on an image, I get a runtime error and no prediction result.
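One possible cause of a runtime error at prediction time (an assumption, since the prediction code is not shown) is a sample whose layout differs from a training row. Each row of training_mat is 1 x 1200 floats, so a 30 x 40 image would need to be flattened the same way before it is passed to the SVM. The sketch below shows that flattening and the labeling rule in plain C++ without OpenCV; flattenToRow and labelFor are hypothetical helper names, not OpenCV functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: turn a rows x cols 8-bit grayscale image (stored
// row-major) into a single row of floats, the layout used by training_mat.
std::vector<float> flattenToRow(const std::vector<unsigned char>& pixels,
                                std::size_t rows, std::size_t cols)
{
    // The buffer must hold exactly rows * cols pixels.
    assert(pixels.size() == rows * cols);

    std::vector<float> row(pixels.size());
    for (std::size_t k = 0; k < pixels.size(); ++k)
        row[k] = static_cast<float>(pixels[k]);
    return row;
}

// Labeling rule from the loop above: the first nbCars images are cars (+1),
// all remaining images are non-cars (-1).
float labelFor(std::size_t index, std::size_t nbCars)
{
    return index < nbCars ? 1.0f : -1.0f;
}
```

In OpenCV itself the same flattening is usually written as Mat::reshape(1, 1) followed by convertTo with CV_32FC1, applied identically to training images and to the image being predicted.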

Edit

I also tried the following loop in place of the one above. It runs without errors, but since I am new to OpenCV and still a beginner, I don't know whether this approach is right. My next step is to recognize the object in video.

int dictionarySize = 1500;

int retries = 1;

int flags = KMEANS_PP_CENTERS;

BOWKMeansTrainer bowTrainer(dictionarySize, tc, retries, flags);

BOWImgDescriptorExtractor bowDE(extractor, matcher);

for (i = all_names.begin(); i != all_names.end(); ++i)

{

Dir=( (count < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = cv::imread( Dir +*i, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst;

detector.detect( row_img, keypoints);

extractor.compute( row_img, keypoints, descriptors_1);

bowTrainer.add(descriptors_1);

labels.at< float >(count, 0) = (count<nb_cars)?1:-1; // 1 for car, -1 otherwise

++count;

}
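Note that this loop only collects descriptors. With OpenCV's bag-of-words classes, the usual next steps are bowTrainer.cluster() to build the vocabulary, bowDE.setVocabulary() to install it, and bowDE.compute() to get one fixed-length histogram per image, which then becomes a row of the SVM training matrix. As a minimal sketch of what that histogram is, assuming plain C++ vectors instead of cv::Mat, the code below assigns each descriptor to its nearest vocabulary word and normalizes the counts. Descriptor, squaredDistance, and bowHistogram are hypothetical names for illustration, not OpenCV APIs.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

typedef std::vector<float> Descriptor;

// Squared Euclidean distance between two descriptors of equal length.
float squaredDistance(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        sum += d * d;
    }
    return sum;
}

// Hypothetical helper: build a bag-of-words histogram for one image.
// Each descriptor votes for its nearest vocabulary word; the counts are
// divided by the number of descriptors so the bins sum to 1.
std::vector<float> bowHistogram(const std::vector<Descriptor>& descriptors,
                                const std::vector<Descriptor>& vocabulary)
{
    std::vector<float> hist(vocabulary.size(), 0.0f);
    if (descriptors.empty() || vocabulary.empty())
        return hist; // nothing to count

    for (std::size_t i = 0; i < descriptors.size(); ++i) {
        std::size_t best = 0;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t w = 0; w < vocabulary.size(); ++w) {
            const float d = squaredDistance(descriptors[i], vocabulary[w]);
            if (d < bestDist) {
                bestDist = d;
                best = w;
            }
        }
        hist[best] += 1.0f;
    }

    for (std::size_t w = 0; w < hist.size(); ++w)
        hist[w] /= static_cast<float>(descriptors.size());
    return hist;
}
```

The point of the histogram is that every image yields a vector of the same length (the dictionary size, 1500 here) no matter how many keypoints were detected, which is what an SVM needs as input.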

Do you have any idea how I can do the same thing you did, but in Python?