numberOfDisparities clarification (StereoBM)

Hi there,

I'm using StereoBM to estimate depth from a stereo camera (parallel viewing directions).

I did the usual preprocessing, such as undistorting and rectifying the images.

I also set minDisparity to 0 and numDisparities to a multiple of 16.

The images are Full HD (1920x1080).

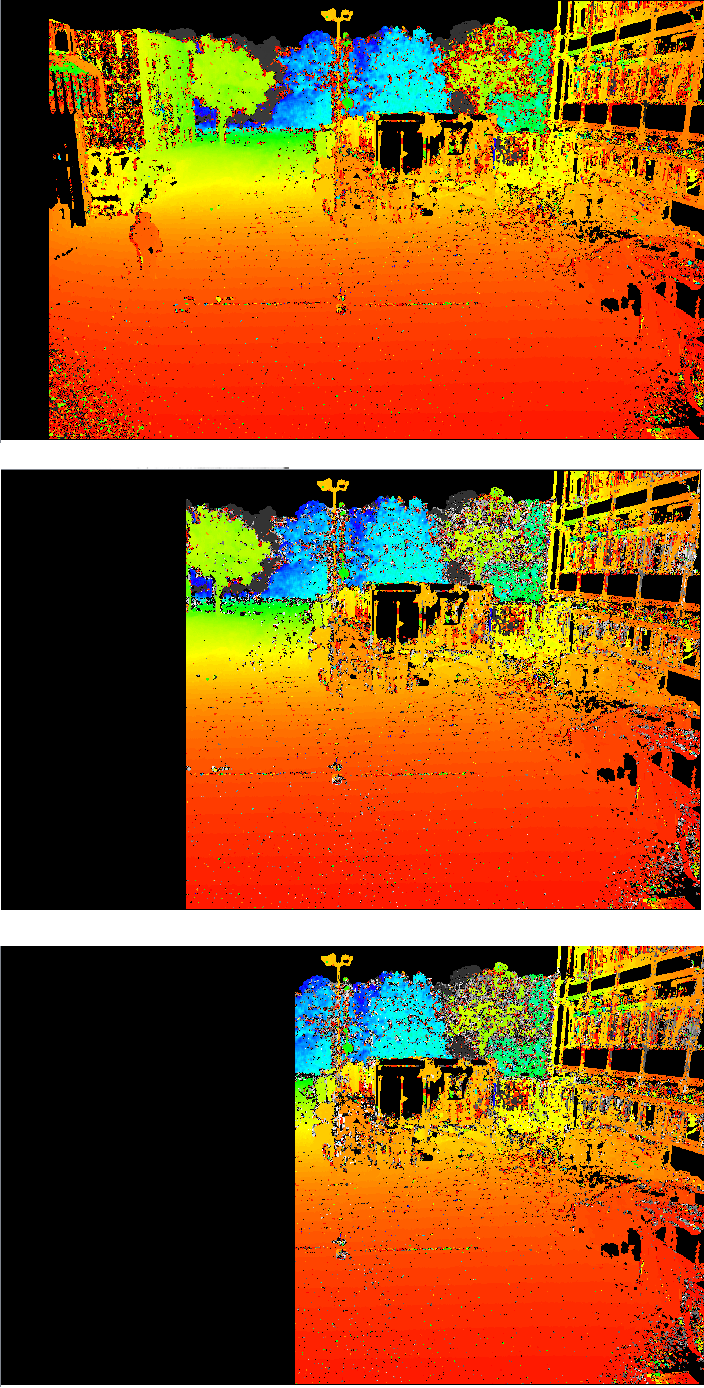

For the top image I used minDisparity=0 and numDisparities=128. It shows the color-coded depth map, where red means a pixel is close and blue means it is far.

When I increase numDisparities to 448, I get the middle image, where many depth values are missing on the left side.

In the bottom image I set numDisparities to 800, and almost half of the image no longer contains depth information.
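As a rough cross-check of the three settings, here is a sketch of how wide an unmatchable strip on the left would be if a pixel only gets a value when its whole search range fits inside the image. The formula (largest searched disparity plus half the block size) is my reading of how OpenCV's `cv::getValidDisparityROI` places the left edge of the valid region; treat it as an assumption. The helper name `invalid_left_border` and the block size of 15 are hypothetical, since the post does not state a block size.

```python
def invalid_left_border(min_disparity: int, num_disparities: int, block_size: int) -> int:
    """Width in pixels of the left strip that cannot receive a disparity.

    Assumption: a left-image pixel at column x is compared with right-image
    columns x - d for d in [min_disparity, min_disparity + num_disparities - 1],
    and the matching block must also fit, which adds half a block width.
    """
    max_disparity = min_disparity + num_disparities - 1
    return max_disparity + block_size // 2


WIDTH = 1920       # Full HD frame width from the post
BLOCK_SIZE = 15    # hypothetical: the post does not give the block size

for num in (128, 448, 800):
    border = invalid_left_border(0, num, BLOCK_SIZE)
    print(f"numDisparities={num}: {border} px ({100 * border / WIDTH:.0f}% of the width)")
```

Under these assumptions the script prints 134 px (7%), 454 px (24%) and 806 px (42%). With OpenCV installed, `cv2.getValidDisparityROI` should return the full valid rectangle for the same parameters, which makes a direct comparison possible.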

Does it make sense that numDisparities affects how many pixels get a disparity value? I thought numDisparities only defines the search range: for each pixel, the search starts at the minDisparity offset and covers numDisparities candidate disparities.

Shouldn't increasing numDisparities then only widen the range along the epipolar line in which the block-matching algorithm looks for candidate matches?
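To make the search described above concrete, here is a toy sum-of-absolute-differences matcher on a single rectified scanline. It is not OpenCV's implementation. It assumes the usual convention that left pixel x is compared with right pixel x - d, and it assumes (hypothetically, mirroring the strip seen in the images) that a pixel only gets a value when the blocks for its whole search range lie inside the image. The function name `match_scanline` and the synthetic data are made up for illustration.

```python
from typing import List, Optional


def match_scanline(left: List[int], right: List[int], min_disp: int,
                   num_disp: int, half_block: int) -> List[Optional[int]]:
    """Toy SAD block matching along one rectified scanline (illustration only).

    Left pixel x is compared with right pixels x - d for
    d in [min_disp, min_disp + num_disp - 1]. A pixel is reported as None
    unless the blocks for its whole search range fit inside the image.
    """
    width = len(left)
    max_disp = min_disp + num_disp - 1
    result: List[Optional[int]] = []
    for x in range(width):
        fits = (x - half_block >= 0                      # left block inside image
                and x + half_block < width
                and x - max_disp - half_block >= 0       # farthest candidate inside
                and x - min_disp + half_block < width)   # nearest candidate inside
        if not fits:
            result.append(None)
            continue
        best_d, best_cost = min_disp, None
        for d in range(min_disp, max_disp + 1):
            # Sum of absolute differences between the left and right blocks.
            cost = sum(abs(left[x + k] - right[x - d + k])
                       for k in range(-half_block, half_block + 1))
            if best_cost is None or cost < best_cost:  # ties keep the smaller d
                best_d, best_cost = d, cost
        result.append(best_d)
    return result


# Synthetic scanline with a true disparity of 3: left[x] == right[x - 3].
right = [(7 * i) % 11 for i in range(40)]
left = [right[i - 3] if i >= 3 else 0 for i in range(40)]

# The toy does not enforce OpenCV's multiple-of-16 rule.
for num in (8, 16, 32):
    disp = match_scanline(left, right, min_disp=0, num_disp=num, half_block=2)
    first_valid = next(i for i, d in enumerate(disp) if d is not None)
    print(f"numDisparities={num}: first matched column = {first_valid}")
```

In this toy, widening the search range does not change the disparities found for pixels that are matched (they stay at 3), but the first matched column moves right from 9 to 17 to 33, so fewer pixels on the left receive any value.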

Edit: You can find a video here where I play with minDisparity and numDisparities.