|

2020-10-06 12:28:24 -0600

| received badge | ● Notable Question


|

|

2020-05-24 14:05:34 -0600

| received badge | ● Notable Question


|

|

2020-03-10 07:23:16 -0600

| received badge | ● Notable Question


|

|

2019-04-12 06:05:00 -0600

| received badge | ● Popular Question


|

|

2018-02-15 02:12:33 -0600

| received badge | ● Popular Question


|

|

2018-02-03 16:37:00 -0600

| received badge | ● Famous Question


|

|

2018-02-01 23:25:44 -0600

| received badge | ● Popular Question


|

|

2017-06-19 03:58:19 -0600

| received badge | ● Popular Question


|

|

2017-03-08 11:18:00 -0600

| received badge | ● Famous Question


|

|

2017-02-22 05:58:16 -0600

| received badge | ● Notable Question


|

|

2016-08-17 09:10:17 -0600

| received badge | ● Notable Question


|

|

2016-06-30 21:26:59 -0600

| received badge | ● Popular Question


|

|

2016-03-31 08:43:46 -0600

| received badge | ● Popular Question


|

|

2015-08-22 05:56:09 -0600

| asked a question | Using the Tesseract library to read a static image from the hard drive I am new to the Tesseract OCR library, and I am using it on Android. As a first test, I want to read an image with Tesseract. I checked some posts on the internet but did not find any tutorial for beginners. Please let me know how to use the Tesseract library to read a static image from the hard drive. |

|

2015-08-19 09:57:49 -0600

| asked a question | How to detect numbers written on a speed sign I want to use OpenCV to detect speed signs on the road and extract the number written on each sign. Is there any library that does this task? Note: I know how to do image matching, but I do not know how to extract specific information (the numbers) from the detected sign. Please let me know if there is any library or class in OpenCV that does that. |

|

2015-07-12 04:35:04 -0600

| commented question | How to use FloodFill algorithm @berak Actually, when I searched for tutorials on how to use the FloodFill algorithm, I found an example that used Canny, so I used Canny as well; I thought that is how flood fill works. So do you suggest using only FloodFill without Canny? |

|

2015-07-11 06:26:22 -0600

| asked a question | How to use FloodFill algorithm I want to use the FloodFill algorithm. I reviewed some posts and found that I have to apply the Canny edge detection algorithm first, and its output then becomes the input for the floodFill function, as shown in the code below.

The problem is that at run time I receive the errors posted below, and I do not know how to fix them. Please help me set it right. Code: Size maskSize = new Size(gsMat.size().width + 2, gsMat.size().height + 2);

Mat edges = new Mat(maskSize, CvType.CV_32F);

Imgproc.Canny(gsMat, edges, 100, 200);

Imgproc.floodFill(gsMat, edges, new Point(350, 50), new Scalar(255), new Rect(), new Scalar(255), new Scalar(255), Imgproc.FLOODFILL_FIXED_RANGE);

ImageUtils.showMat(bgrMat, "");

errors: OpenCV Error: Sizes of input arguments do not match (mask must be 2 pixel wider and 2 pixel taller than filled image) in cvFloodFill, file ..\..\..\..\opencv\modules\imgproc\src\floodfill.cpp, line 552

Exception in thread "main" CvException [org.opencv.core.CvException: cv::Exception: ..\..\..\..\opencv\modules\imgproc\src\floodfill.cpp:552: error: (-209) mask must be 2 pixel wider and 2 pixel taller than filled image in function cvFloodFill

]

at org.opencv.imgproc.Imgproc.floodFill_0(Native Method)

at org.opencv.imgproc.Imgproc.floodFill(Imgproc.java:6119)

at com.example.seedgrowtest.MainClass.main(MainClass.java:40)
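For reference, the OpenCV documentation describes the floodFill mask as a single-channel 8-bit image that is 2 pixels wider and 2 pixels taller than the image being filled, and the fill does not cross non-zero mask pixels. Imgproc.Canny writes an edge map of the same size as its input (reallocating edges if needed), so passing that map directly as the mask trips the size check in the error above. One common approach is to create a zero mask with Mat.zeros(gsMat.rows() + 2, gsMat.cols() + 2, CvType.CV_8UC1) and copy the edges into its interior with edges.copyTo(mask.submat(1, gsMat.rows() + 1, 1, gsMat.cols() + 1)). The plain-Java sketch below (no OpenCV; the class name FloodFillSketch is hypothetical) illustrates the same mask convention in a simple 4-connected, fixed-range flood fill; it is not OpenCV's implementation.

```java
import java.util.ArrayDeque;

// Plain-Java sketch (not OpenCV): 4-connected, fixed-range flood fill that uses
// OpenCV's mask convention, i.e. a mask 2 pixels wider and taller than the image.
final class FloodFillSketch {

    // Fills the region connected to (seedX, seedY) whose values lie in
    // [seed - loDiff, seed + upDiff] with newVal. A non-zero mask pixel blocks the
    // fill; mask[y + 1][x + 1] corresponds to img[y][x]. Returns the filled count.
    static int floodFill(int[][] img, int[][] mask, int seedX, int seedY,
                         int newVal, int loDiff, int upDiff) {
        int rows = img.length, cols = img[0].length;
        if (mask.length != rows + 2 || mask[0].length != cols + 2) {
            throw new IllegalArgumentException(
                "mask must be 2 pixels wider and 2 pixels taller than the image");
        }
        int seedVal = img[seedY][seedX]; // fixed range: always compare with the seed
        int[][] dirs = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        ArrayDeque<int[]> queue = new ArrayDeque<>();
        mask[seedY + 1][seedX + 1] = 1; // mark the seed as visited
        queue.add(new int[] {seedX, seedY});
        int filled = 0;
        while (!queue.isEmpty()) {
            int[] p = queue.poll();
            img[p[1]][p[0]] = newVal;
            filled++;
            for (int[] d : dirs) {
                int nx = p[0] + d[0], ny = p[1] + d[1];
                if (nx < 0 || ny < 0 || nx >= cols || ny >= rows) continue;
                if (mask[ny + 1][nx + 1] != 0) continue; // edge pixel or already visited
                int v = img[ny][nx];
                if (v >= seedVal - loDiff && v <= seedVal + upDiff) {
                    mask[ny + 1][nx + 1] = 1; // mark before queueing to avoid duplicates
                    queue.add(new int[] {nx, ny});
                }
            }
        }
        return filled;
    }
}
```

Seeding it next to a column of non-zero mask pixels fills only the pixels on the seed's side, which is the role the Canny edges were meant to play.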

|

|

2015-06-28 08:23:04 -0600

| asked a question | How to calculate the determinant of a matrix Is there any function in OpenCV that calculates the determinant of a 3x3 matrix? |
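OpenCV's Java API provides Core.determinant(Mat), which returns the determinant of a square floating-point matrix, so a 3x3 CV_32F or CV_64F Mat can be passed to it directly. For a fixed 3x3 case, cofactor expansion is also short enough to write by hand; the plain-Java sketch below (hypothetical class Det3) expands along the first row.

```java
// Plain-Java sketch: determinant of a 3x3 matrix by cofactor expansion
// along the first row.
final class Det3 {
    static double det3(double[][] m) {
        if (m.length != 3 || m[0].length != 3 || m[1].length != 3 || m[2].length != 3) {
            throw new IllegalArgumentException("expected a 3x3 matrix");
        }
        return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
             - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
             + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    }
}
```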

|

2015-06-10 09:34:39 -0600

| asked a question | How to calculate the distance from a pixel in the Lab color space to a specific prototype I have a Lab image, and for each pixel in it I want to know how red, green, blue and yellow that pixel is. That means that for the input Lab image I should get four images, one each for red, green, blue and yellow. As far as I have researched, this can be done by calculating the Euclidean distance from each pixel in the Lab image to the prototype of the color (R, G, B or Y). For example, if I want to produce an image that shows how red the pixels in the Lab image are, then for each pixel I have to calculate the distance from that pixel to the prototype of red in the Lab color space. Kindly provide some advice and guidance. |
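Euclidean distance in L*a*b* (the CIE76 color difference) is simple to compute per pixel. The sketch below is plain Java over a nested array, not OpenCV code; the class name, the prototype values you pass in, and the maxDist cut-off used to turn a distance into a 0-to-1 "how red/green/blue/yellow" score are all hypothetical choices. Note that for 8-bit images OpenCV's cvtColor stores L scaled by 255/100 and a, b offset by 128, so convert those back to L*a*b* units (or work on a 32-bit float image) before measuring distances.

```java
// Plain-Java sketch: per-pixel Euclidean (CIE76) distance between L*a*b*
// pixels and one color prototype. Prototype values are the caller's choice.
final class LabDistance {

    // lab[y][x] holds {L, a, b}; returns a distance map of the same height/width.
    static double[][] distanceMap(double[][][] lab, double[] prototype) {
        int rows = lab.length, cols = lab[0].length;
        double[][] out = new double[rows][cols];
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                double dL = lab[y][x][0] - prototype[0];
                double da = lab[y][x][1] - prototype[1];
                double db = lab[y][x][2] - prototype[2];
                out[y][x] = Math.sqrt(dL * dL + da * da + db * db);
            }
        }
        return out;
    }

    // Maps distances to a similarity in [0, 1]: 1 at the prototype, 0 at or
    // beyond maxDist (a hypothetical cut-off you would tune).
    static double[][] similarityMap(double[][] dist, double maxDist) {
        double[][] out = new double[dist.length][dist[0].length];
        for (int y = 0; y < dist.length; y++) {
            for (int x = 0; x < dist[0].length; x++) {
                out[y][x] = Math.max(0.0, 1.0 - dist[y][x] / maxDist);
            }
        }
        return out;
    }
}
```

Calling distanceMap once per prototype (red, green, blue, yellow) gives the four images the question describes.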

|

2015-06-05 04:16:10 -0600

| asked a question | Why am I getting different global maximum values for the same image? While trying to get the global maximum in an image, I received two different values for the same image. The first time, in the method "globMaxValLoc", I get the global maximum and it yields 101 at location {111,66}. The second time, in the method "findCandidateLocMax", it yields 255 at location {106,61}. Please also have a look at the output section below, which contains the results I am getting. Why am I getting different global maximum values each time, and is there a better way to get the global maximum? Update: in other words, when I call Core.minMaxLoc two consecutive times, it gives different values. Why is that happening and how can I avoid it? globMaxValLoc method: private void globMaxValLoc(Mat saliencyMap) {

// TODO Auto-generated method stub

MinMaxLocResult saliencyGlobMax = Core.minMaxLoc(saliencyMap);

Log.D(TAG, "globMaxValLoc", "saliencyGlobMax.maxVal: "+saliencyGlobMax.maxVal);

Log.D(TAG, "globMaxValLoc", "saliencyGlobMax.maxLoc: "+saliencyGlobMax.maxLoc);

Core.rectangle(saliencyMap, new Point(saliencyGlobMax.maxLoc.x-5, saliencyGlobMax.maxLoc.y-5),

new Point(saliencyGlobMax.maxLoc.x+5, saliencyGlobMax.maxLoc.y+5),

new Scalar(255, 100, 200));

ImageUtils.showMat(saliencyMap, "saliencyGlobMax");

this.setGlobMaxVal(saliencyGlobMax.maxVal);

this.setGlobMaxLoc(saliencyGlobMax.maxLoc);

}

findCandidateLocMax private void findCandidateLocMax(Mat saliencyMap, Point[] frameMinMaxDims) {

// TODO Auto-generated method stub

double topLeftX = frameMinMaxDims[0].x;

double topLeftY = frameMinMaxDims[0].y;

double bottomRightX = frameMinMaxDims[1].x;

double bottomRightY = frameMinMaxDims[1].y;

double diffX = (bottomRightX-topLeftX) + 1;

double diffY = (bottomRightY-topLeftY) + 1;

int cnt = 0;

HashMap<Point, Double> seedsMap = new HashMap<Point, Double>();

Valuecomparator vc = new Valuecomparator(seedsMap);

TreeMap<Point, Double> treeMap = new TreeMap<Point, Double>(vc);

// here I get the global maximum

MinMaxLocResult saliencyGlobMax = Core.minMaxLoc(saliencyMap);

Log.D(TAG, "findCandidateLocMax", "saliencyGlobMax.maxVal: "+saliencyGlobMax.maxVal);

Log.D(TAG, "findCandidateLocMax", "saliencyGlobMax.maxLoc: "+saliencyGlobMax.maxLoc);

Core.rectangle(saliencyMap, new Point(saliencyGlobMax.maxLoc.x-5, saliencyGlobMax.maxLoc.y-5),

new Point(saliencyGlobMax.maxLoc.x+5, saliencyGlobMax.maxLoc.y+5),

new Scalar(255, 100, 200));

ImageUtils.showMat(saliencyMap, "saliencyGlobMax");

OutPut: 6: Debug: FOA -> globMaxValLoc: saliencyGlobMax.maxVal: 101.0

7: Debug: FOA -> globMaxValLoc: saliencyGlobMax.maxLoc: {111.0, 66.0}

8: Debug: FOA -> findCandidateLocMax: saliencyGlobMax.maxVal: 255.0

9: Debug: FOA -> findCandidateLocMax: saliencyGlobMax.maxLoc: {106.0, 61.0}
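The logged coordinates hint at a likely cause: {106, 61} is exactly {111 - 5, 66 - 5}, the top-left corner of the marker that globMaxValLoc draws. Core.rectangle draws into saliencyMap itself (on a single-channel image only the first Scalar component, 255, is used), so the second Core.minMaxLoc call searches an image that already contains the marker. Drawing on a copy, for example saliencyMap.clone(), should keep the data intact. The plain-Java sketch below (hypothetical class MaxAfterDrawing, not OpenCV) reproduces the effect on a 2D array.

```java
// Plain-Java sketch: a max search disagrees with an earlier one if a marker
// has been drawn into the same array in between.
final class MaxAfterDrawing {

    // Returns {maxValue, x, y} of the first maximum found in row-major order.
    static int[] maxLoc(int[][] img) {
        int best = Integer.MIN_VALUE, bx = -1, by = -1;
        for (int y = 0; y < img.length; y++) {
            for (int x = 0; x < img[0].length; x++) {
                if (img[y][x] > best) { best = img[y][x]; bx = x; by = y; }
            }
        }
        return new int[] {best, bx, by};
    }

    // Draws a 1-pixel rectangle outline with the given value, clipped to the image.
    static void drawRect(int[][] img, int x0, int y0, int x1, int y1, int value) {
        for (int y = y0; y <= y1; y++) {
            for (int x = x0; x <= x1; x++) {
                boolean border = y == y0 || y == y1 || x == x0 || x == x1;
                if (border && y >= 0 && x >= 0 && y < img.length && x < img[0].length) {
                    img[y][x] = value;
                }
            }
        }
    }

    // Deep copy, so markers can be drawn without touching the original data.
    static int[][] copy(int[][] img) {
        int[][] out = new int[img.length][];
        for (int y = 0; y < img.length; y++) out[y] = img[y].clone();
        return out;
    }
}
```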

|

|

2015-06-03 09:16:57 -0600

| asked a question | How to implement region growing algorithm? I want to use the Region Growing algorithm to detect similar connected pixels according to a threshold. I have checked some posts on the web, but none of them offered pseudocode or an example. I am also wondering whether this algorithm is implemented in the OpenCV library. Kindly provide pseudocode for the Region Growing algorithm, or let me know how to use it if it is implemented in OpenCV with the Java API. |
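As far as I know, OpenCV has no function named region growing; Imgproc.floodFill, with its loDiff/upDiff tolerances, is the closest built-in. A basic seeded region growing can be sketched as a breadth-first search: start from a seed pixel and add each 4-connected neighbor whose value is within a threshold of the current region mean. The plain-Java version below follows that outline; the class name and the mean-based criterion are assumptions (comparing against the seed value instead is an equally common variant).

```java
import java.util.ArrayDeque;

// Plain-Java sketch: seeded region growing on a grayscale image, 4-connectivity.
// A neighbor joins the region if |value - running region mean| <= threshold.
final class RegionGrowing {
    static boolean[][] grow(int[][] img, int seedX, int seedY, double threshold) {
        int rows = img.length, cols = img[0].length;
        boolean[][] inRegion = new boolean[rows][cols];
        int[][] dirs = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        ArrayDeque<int[]> frontier = new ArrayDeque<>();
        inRegion[seedY][seedX] = true;
        frontier.add(new int[] {seedX, seedY});
        double sum = img[seedY][seedX];
        int count = 1;
        while (!frontier.isEmpty()) {
            int[] p = frontier.poll();
            for (int[] d : dirs) {
                int nx = p[0] + d[0], ny = p[1] + d[1];
                if (nx < 0 || ny < 0 || nx >= cols || ny >= rows || inRegion[ny][nx]) continue;
                double mean = sum / count; // region statistics are updated as it grows
                if (Math.abs(img[ny][nx] - mean) <= threshold) {
                    inRegion[ny][nx] = true;
                    sum += img[ny][nx];
                    count++;
                    frontier.add(new int[] {nx, ny});
                }
            }
        }
        return inRegion;
    }
}
```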

|

2015-05-21 04:32:51 -0600

| commented question | How to get the values of the local maxima in an image @berak OK, fine, but if I just use minMaxLoc(img) I will get the global maximum and minimum, while I am looking for the local maxima. I hope my point is clear, and sorry for any inconvenience. |

|

2015-05-21 04:20:36 -0600

| commented question | How to get the values of the local maxima in an image @berak MatFactory and SysUtils are my own classes that I implemented. Concerning what you wrote in your second comment, I really do not understand it, sorry. Please let me know how to get the local maxima in an image. Regards |

|

2015-05-21 04:08:36 -0600

| asked a question | How to get the values of the local maxima in an image I wrote the code below to find out how to get the local and global maximum and minimum, but I do not know how to get the local maxima. Also, as you can see in the code below, I am using a mask, but at run time I receive the error message below. So please let me know why we need a mask and how to use it properly. Update: line 32 is: MinMaxLocResult s = Core.minMaxLoc(gsMat, mask); Code: public static void main(String[] args) {

MatFactory matFactory = new MatFactory();

FilePathUtils.addInputPath(path_Obj);

Mat bgrMat = matFactory.newMat(FilePathUtils.getInputFileFullPathList().get(0));

Mat gsMat = SysUtils.rgbToGrayScaleMat(bgrMat);

Log.D(TAG, "main", "gsMat.dump(): \n" + gsMat.dump());

Mat mask = new Mat(new Size(3,3), CvType.CV_8U); // which type should I set for the mask?

MinMaxLocResult s = Core.minMaxLoc(gsMat, mask);

Log.D(TAG, "main", "s.maxVal: " + s.maxVal);//to get the global maximum

Log.D(TAG, "main", "s.minVal: " + s.minVal);//to get the global minimum

Log.D(TAG, "main", "s.maxLoc: " + s.maxLoc);//to get the coordinates of the global maximum

Log.D(TAG, "main", "s.minLoc: " + s.minLoc);//to get the coordinates of the global minimum

}

error message: OpenCV Error: Assertion failed (A.size == arrays[i0]->size) in cv::NAryMatIterator::init, file ..\..\..\..\opencv\modules\core\src\matrix.cpp, line 3197

Exception in thread "main" CvException [org.opencv.core.CvException: ..\..\..\..\opencv\modules\core\src\matrix.cpp:3197: error: (-215) A.size == arrays[i0]->size in function cv::NAryMatIterator::init

]

at org.opencv.core.Core.n_minMaxLocManual(Native Method)

at org.opencv.core.Core.minMaxLoc(Core.java:7919)

at com.example.globallocalmaxima_00.MainClass.main(MainClass.java:32)
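On the error: the mask argument of Core.minMaxLoc must have the same size as the source image and be 8-bit single-channel; only pixels where the mask is non-zero are searched, so a 3x3 mask on a larger image fails the size assertion shown above. Even with a valid mask, minMaxLoc returns only the global extremes inside the mask. For local maxima, a common OpenCV idiom is to dilate the image (Imgproc.dilate) and compare the result with the original (Core.compare with Core.CMP_EQ). The plain-Java sketch below performs a similar check directly, reporting pixels strictly greater than every neighbor within a radius; the class name and the strict comparison (which ignores flat plateaus, unlike the dilate idiom) are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch: strict local maxima of a grayscale image within a
// (2*radius+1) x (2*radius+1) neighborhood. Pixels outside the image are ignored.
final class LocalMaxima {

    // Returns {x, y, value} for each local maximum, in row-major order.
    static List<int[]> find(int[][] img, int radius) {
        List<int[]> maxima = new ArrayList<>();
        int rows = img.length, cols = img[0].length;
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                int v = img[y][x];
                boolean isMax = true;
                for (int dy = -radius; dy <= radius && isMax; dy++) {
                    for (int dx = -radius; dx <= radius; dx++) {
                        if (dx == 0 && dy == 0) continue;
                        int ny = y + dy, nx = x + dx;
                        if (ny < 0 || nx < 0 || ny >= rows || nx >= cols) continue;
                        if (img[ny][nx] >= v) { isMax = false; break; } // a neighbor ties or wins
                    }
                }
                if (isMax) maxima.add(new int[] {x, y, v});
            }
        }
        return maxima;
    }
}
```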

|

|

2015-05-09 09:27:55 -0600

| asked a question | Different theta values for the Gabor filter return images with no change in orientation I applied the Gabor filter to images with the following theta values: {0,45,90,135}, but the resulting images were exactly the same, with the same orientation. I expected the result of applying the Gabor filter with theta = 90 to differ in orientation from the one with theta = 45, but after using the Gabor filter with different theta values, I get images with no difference in orientation. Am I using the Gabor filter wrong? The parameters I set for the Gabor filter were as follows: kernel size = Size(5,5);

theta = {0,45,90,135}

sigma = 0.2

type = CvType.CV_32F

lambda = 100

gamma = 0.5

psi = 5 |
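Two things in these parameters may explain the identical results. First, theta (like psi) is an angle in radians, so degree values such as {0, 45, 90, 135} would need Math.toRadians(...). Second, and probably more important, reading the post's decimal-comma values as sigma = 0.2 and gamma = 0.5: a Gaussian envelope with sigma = 0.2 is practically zero one pixel from the center, and lambda = 100 is twenty times the 5-pixel kernel width, so the kernel is close to a single center weight and barely depends on theta. Note also that ksize in getGaborKernel is the kernel size, not the image size. The plain-Java sketch below evaluates the standard real Gabor function using the same parameter names; the class name is hypothetical, and it is not guaranteed to match OpenCV's kernel element for element.

```java
// Plain-Java sketch of a real Gabor kernel with the parameter names of
// Imgproc.getGaborKernel. theta and psi are in radians; ksize should be odd.
final class GaborSketch {
    static double[][] kernel(int ksize, double sigma, double theta,
                             double lambd, double gamma, double psi) {
        int half = ksize / 2;
        double sigmaX = sigma, sigmaY = sigma / gamma; // gamma = spatial aspect ratio
        double c = Math.cos(theta), s = Math.sin(theta);
        double[][] k = new double[2 * half + 1][2 * half + 1];
        for (int y = -half; y <= half; y++) {
            for (int x = -half; x <= half; x++) {
                double xr = x * c + y * s;  // coordinate along the wave direction
                double yr = -x * s + y * c; // coordinate along the stripes
                double envelope = Math.exp(-0.5 * (xr * xr / (sigmaX * sigmaX)
                                                 + yr * yr / (sigmaY * sigmaY)));
                k[y + half][x + half] = envelope * Math.cos(2 * Math.PI * xr / lambd + psi);
            }
        }
        return k;
    }
}
```

With values such as sigma = 2 and lambda = 4, switching theta from 0 to Math.PI / 2 rotates the stripes by 90 degrees, which is easy to confirm by printing both kernels.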

|

2015-05-08 06:30:23 -0600

| asked a question | How to use Gabor Filter using OpenCV Java API I want to use the Gabor filter with the OpenCV Java API. I referred to the docs, and the method is as follows: getGaborKernel public static Mat getGaborKernel(Size ksize,

double sigma,

double theta,

double lambd,

double gamma,

double psi,

int ktype)

My problem is that I do not know how to specify the parameters. For example, should the Size be the size of the image, or something else? Please provide a brief explanation of how to use the parameters. |

|

2015-05-07 04:17:38 -0600

| commented question | How to use the integral images in opencv java Api @berak Sorry, I did not get what you mean. Would you please clarify? |

|

2015-05-07 04:01:05 -0600

| asked a question | How to use the integral images in opencv java Api I am doing some image processing with intensive calculations, so it runs very slowly, and I want to use the integral image. Is there any function in OpenCV with the Java API that allows using integral images? |
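Imgproc.integral computes the integral image; its output has one more row and one more column than the input, with the first row and column set to zero. With that layout, the sum over any rectangle takes four lookups regardless of the rectangle's size, which is where the speed-up for repeated window sums comes from. The plain-Java sketch below (hypothetical class IntegralImage) builds the same kind of table and answers rectangle-sum queries.

```java
// Plain-Java sketch of an integral image (summed-area table) with an extra
// leading row and column of zeros: sum[y][x] = sum of img over [0, x) x [0, y).
final class IntegralImage {
    final long[][] sum;

    IntegralImage(int[][] img) {
        int rows = img.length, cols = img[0].length;
        sum = new long[rows + 1][cols + 1];
        for (int y = 0; y < rows; y++) {
            long rowSum = 0; // running sum of the current row up to x
            for (int x = 0; x < cols; x++) {
                rowSum += img[y][x];
                sum[y + 1][x + 1] = sum[y][x + 1] + rowSum;
            }
        }
    }

    // Sum of img over the rectangle [x, x + w) x [y, y + h), in O(1).
    long rectSum(int x, int y, int w, int h) {
        return sum[y + h][x + w] - sum[y][x + w] - sum[y + h][x] + sum[y][x];
    }
}
```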

|

2015-04-22 08:15:45 -0600

| commented question | .subMat() does not cut the designated part of a Mat object Oh, was it because it is RGB? I thought I should get 10 cols and 10 rows. |

|

2015-04-22 08:01:33 -0600

| asked a question | .subMat() does not cut the designated part of a Mat object I am testing how to use the Point and Rect classes in OpenCV with the Java API. I wrote the code below; as you can see, pstart is (0,0) and pEnd is (0+10, 0+10), so the rectangle I created is 10 wide and 10 high. Then I wanted to cut an area from a Mat object equal to the rect's height and width, as shown in the code, but when I displayed the contents of the sub-mat, as you can see in the output below, it has 10 rows and more columns, while I expected 10 rows and 10 columns. Please let me know what I am doing wrong. Code: Point pstart = new Point(0, 0);

Log.D(TAG, "MainClass", "" + pstart.x);

Log.D(TAG, "MainClass", "" + pstart.y);

Point pEnd = new Point(pstart.x+10, pstart.y+10);

Rect rec1 = new Rect(pstart, pEnd);

Log.D(TAG, "MainClass", "" + rec1.height);

Log.D(TAG, "MainClass", "" + rec1.width);

Mat subMat = matFactory.getMatAt(0).submat(rec1);

Log.D(TAG, "MainClass", "" + subMat.dump());

OutPut Debug: MainClass -> MainClass: 0.0

5: Debug: MainClass -> MainClass: 0.0

6: Debug: MainClass -> MainClass: 10

7: Debug: MainClass -> MainClass: 10

8: Debug: MainClass -> MainClass: [0, 38, 46, 0, 38, 46, 0, 38, 46, 0, 37, 45, 0, 35, 40, 0, 32, 37, 0, 34, 40, 1, 37, 43, 1, 34, 43, 5, 38, 47;

4, 45, 47, 0, 41, 43, 0, 38, 40, 0, 38, 40, 0, 39, 41, 0, 39, 41, 0, 38, 40, 0, 37, 39, 3, 38, 42, 5, 40, 44;

12, 52, 51, 6, 46, 45, 2, 42, 40, 1, 41, 39, 3, 43, 41, 4, 44, 42, 3, 42, 40, 2, 41, 39, 1, 39, 39, 1, 39, 39;

9, 48, 50, 6, 45, 47, 2, 41, 43, 0, 39, 41, 0, 38, 37, 0, 37, 36, 0, 37, 37, 1, 39, 39, 1, 39, 41, 2, 40, 42;

13, 48, 58, 9, 44, 54, 5, 41, 49, 2, 38, 46, 0, 36, 44, 0, 35, 43, 0, 35, 43, 0, 37, 45, 0, 36, 46, 2, 38, 48;

19, 52, 67, 11, 44, 59, 5, 38, 53, 4, 37, 52, 5, 39, 52, 5, 39, 52, 2, 37, 50, 0, 35, 48, 0, 34, 48, 2, 37, 51;

16, 47, 62, 10, 41, 56, 3, 37, 50, 2, 36, 49, 4, 38, 51, 4, 38, 51, 1, 36, 49, 0, 35, 48, 0, 36, 50, 1, 38, 52;

15, 47, 58, 13, 45, 56, 12, 44, 55, 10, 42, 53, 6, 41, 51, 5, 40, 50, 5, 41, 51, 8, 44, 54, 4, 42, 54, 5, 43, 55;

19, 47, 64, 17, 45, 62, 13, 44, 59, 11, 42, 57, 8, 42, 55, 7, 41, 54, 5, 44, 53, 5, 44, 53, 3, 44, 53, 4, 44, 56;

12, 41, 56, 12, 41, 56, 10, 41, 56, 9, 40, 55, 7, 41, 54, 8, 42, 55, 5, 44, 53, 5, 44, 53, 6, 44, 56, 6, 44, 56]
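This fits the guess in the follow-up comment: the ROI is 10x10 pixels, but dump() prints every channel of a 3-channel Mat, so each row shows 10 x 3 = 30 numbers. For this sub-mat, rows(), cols() and channels() should report 10, 10 and 3. The plain-Java sketch below (hypothetical class InterleavedRoi, not OpenCV) copies an ROI out of an interleaved image to show where the 30 values per row come from.

```java
// Plain-Java sketch: ROI extraction from an interleaved multi-channel image,
// where each row stores cols * channels values (e.g. B, G, R, B, G, R, ...).
final class InterleavedRoi {

    // Copies the ROI [x, x + w) x [y, y + h); returns h rows of w * channels values.
    static int[][] submat(int[][] img, int channels, int x, int y, int w, int h) {
        int[][] out = new int[h][w * channels];
        for (int r = 0; r < h; r++) {
            System.arraycopy(img[y + r], x * channels, out[r], 0, w * channels);
        }
        return out;
    }
}
```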

|

|

2015-04-21 06:59:32 -0600

| commented question | regarding center-surround filter Thanks, but the problem is that the research I was given introduces this filter as center-surround, and I downloaded and read many papers, and none of them explained or even mentioned the name DoG. Thanks |

|

2015-04-21 05:15:54 -0600

| asked a question | regarding center-surround filter I am trying to learn and implement the center-surround filter used in image analysis and processing. Of course I did research, but the literature I read offered explanations that are still unclear on most of the filter's aspects, such as: 1) the min and max radius of the center in pixels, 2) the min and max radius of the surround in pixels, 3) whether there should be an overlap or not, 4) how to calculate the surround, and similar questions. Kindly provide pseudocode or a clear explanation of this filter as used in image processing. |
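A center-surround filter is often implemented as a difference of Gaussians (DoG): a small-sigma blur (the center) minus a larger-sigma blur (the surround). The box version below makes the points in the question explicit: the center is a square of radius rc, the surround is the ring between rc and rs (so the two do not overlap), and the response is the center mean minus the surround mean. It is plain Java with a hypothetical class name, and rc and rs are free parameters, not values taken from the literature.

```java
// Plain-Java sketch of a box center-surround operator on a grayscale image:
// mean of the (2*rc+1)^2 center window minus mean of the ring out to the
// (2*rs+1)^2 window. Windows are clipped at the image borders.
final class CenterSurround {
    static double[][] apply(int[][] img, int rc, int rs) {
        if (rc < 0 || rs <= rc) throw new IllegalArgumentException("need 0 <= rc < rs");
        int rows = img.length, cols = img[0].length;
        double[][] out = new double[rows][cols];
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                double cSum = 0, sSum = 0;
                int cN = 0, sN = 0;
                for (int dy = -rs; dy <= rs; dy++) {
                    for (int dx = -rs; dx <= rs; dx++) {
                        int ny = y + dy, nx = x + dx;
                        if (ny < 0 || nx < 0 || ny >= rows || nx >= cols) continue;
                        if (Math.abs(dx) <= rc && Math.abs(dy) <= rc) { cSum += img[ny][nx]; cN++; }
                        else { sSum += img[ny][nx]; sN++; } // ring: outside the center square
                    }
                }
                out[y][x] = cSum / cN - (sN > 0 ? sSum / sN : 0.0);
            }
        }
        return out;
    }
}
```

Positive responses mark spots brighter than their surroundings; a DoG replaces the two box means with two Gaussian-weighted means.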

|

2015-04-13 09:53:18 -0600

| asked a question | How to separate query and train image after doing the matching The Mat object returned from the .drawMatches(..) method includes both the query/object image on the left side and the train/scene image on the right side. Is there any way to get two separate Mat objects, one for the query/object image and the other for the train/scene image? I am asking because I am trying to detect an object when the camera spots it, and what gets displayed in the designated area from the camera stream is NOT only the train/scene image with the detected object highlighted, BUT an image with the query/object on the left side and the train/scene on the right with the detected object highlighted. In such a case, how do I get only the query/object image or only the train/scene image after doing the matching? |
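Features2d.drawMatches places the first (query) image at the left of its output and the second (train) image directly to its right, so the train part can be cut out with a column offset, e.g. outImg.submat(0, trainImg.rows(), queryImg.cols(), queryImg.cols() + trainImg.cols()) (variable names hypothetical). Often it is simpler to skip drawMatches for display and draw the detected outline directly on a copy of the train/scene frame. The plain-Java sketch below (hypothetical class SideBySide) shows the offset arithmetic on a 2D array.

```java
// Plain-Java sketch: splitting a side-by-side canvas (query on the left,
// train on the right) back into its parts by column offset.
final class SideBySide {

    // Returns columns [colStart, colStart + width) of the first `height` rows.
    static int[][] crop(int[][] canvas, int colStart, int width, int height) {
        int[][] out = new int[height][width];
        for (int r = 0; r < height; r++) {
            System.arraycopy(canvas[r], colStart, out[r], 0, width);
        }
        return out;
    }
}
```

For a query image of qCols x qRows and a train image of tCols x tRows, the parts are crop(canvas, 0, qCols, qRows) and crop(canvas, qCols, tCols, tRows).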

|

2015-04-10 23:11:18 -0600

| received badge | ● Student


|

|

2015-04-10 09:14:40 -0600

| asked a question | How to correctly detect corners of the scene image with perspectiveTransform In the code below I am trying to draw lines between the scene's corners (the "train image"). I followed this tutorial, page 367 here, but when I run the code I get the image posted below, which shows the wrong object detected in the scene image ("train image"). Please see the image that shows the wrong detection; the detected area is in red. Please let me know what I am missing and how to correct it. MatOfPoint2f objPointMat = new MatOfPoint2f();

MatOfPoint2f scenePointMat = new MatOfPoint2f();

objPointMat.fromList(objPoint);

scenePointMat.fromList(scenePoint);

Mat H = Calib3d.findHomography(objPointMat, scenePointMat, Calib3d.RANSAC, 3); // RANSAC (= 8) with a 3-pixel reprojection threshold

Mat objCorners = new Mat(4, 1, CvType.CV_32FC2);

Mat sceneCorners = new Mat(4, 1, CvType.CV_32FC2);

objCorners.put(0, 0, new double[] {0, 0});//top left

objCorners.put(1, 0, new double[] {matFactory.getMatAt(0).cols(), 0});//top right

objCorners.put(2, 0, new double[] {matFactory.getMatAt(0).cols(), matFactory.getMatAt(0).rows()});//bottom right

objCorners.put(3, 0, new double[] {0, matFactory.getMatAt(0).rows()});//bottom left.

Core.perspectiveTransform(objCorners, sceneCorners, H);

Log.D(TAG, "descriptorMatcher", "objCorners.dump: " + objCorners.dump());//to display the contents of objCorners matrix

Log.D(TAG, "descriptorMatcher", "sceneCorners.dump: " + sceneCorners.dump());//to display the contents of sceneCorners matrix

/*matFactory.getMatAt(0) refers to the object image */

double p10 = sceneCorners.get(0, 0)[0] + matFactory.getMatAt(0).cols();

double p11 = sceneCorners.get(0, 0)[1] ;

double p20 = sceneCorners.get(1, 0)[0] + matFactory.getMatAt(0).cols();

double p21 = sceneCorners.get(1, 0)[1];

double p30 = sceneCorners.get(2, 0)[0] + matFactory.getMatAt(0).cols();

double p31 = sceneCorners.get(2, 0)[1];

double p40 = sceneCorners.get(3, 0)[0] + matFactory.getMatAt(0).cols();

double p41 = sceneCorners.get(3, 0)[1];

Point p1 = new Point(p10, p11);

Point p2 = new Point(p20, p21);

Point p3 = new Point(p30, p31);

Point p4 = new Point(p40, p41);

Core.line(outImg, p1, p2, new Scalar(0, 0, 255), 4);

Core.line(outImg, p2, p3, new Scalar(0, 0, 255), 4);

Core.line(outImg, p3, p4, new Scalar(0, 0, 255), 4);

Core.line(outImg, p4, p1, new Scalar(0, 0, 255), 4);

MatFactory.writeMat(FilePathUtils.newOutputPath("detectedCorners"), outImg);

OutPut of sceneCorners matrix and objcorners matrix: Debug: MainClass -> descriptorMatcher: objCorners.dump: [0, 0;

225, 0;

225, 221;

0, 221]

Debug: MainClass -> descriptorMatcher: sceneCorners.dump: [313.53854, 296.17175;

263.7662, 292.51437;

2429.9856, 2136.5327;

309.76358, 91.591682]
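Core.perspectiveTransform maps each point through the 3x3 homography and divides by the third homogeneous coordinate. When the four mapped corners do not form a plausible quadrilateral, as here with a corner at (2429.99, 2136.53), a common cause is the homography itself, typically because many of the matches fed to findHomography are wrong; the drawing code cannot fix that. The "+ cols" offset in the code is only needed when drawing on the combined drawMatches output. The plain-Java sketch below (hypothetical class Homography) shows the mapping applied to the corner list.

```java
// Plain-Java sketch of the point mapping used by a perspective transform:
// (x, y) -> ((h00 x + h01 y + h02) / w, (h10 x + h11 y + h12) / w),
// with w = h20 x + h21 y + h22.
final class Homography {

    static double[] apply(double[][] h, double x, double y) {
        double w = h[2][0] * x + h[2][1] * y + h[2][2];
        return new double[] {
            (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w
        };
    }

    // Maps the corners of a cols x rows image in TL, TR, BR, BL order,
    // matching the order used for objCorners.
    static double[][] corners(double[][] h, int cols, int rows) {
        double[][] src = {{0, 0}, {cols, 0}, {cols, rows}, {0, rows}};
        double[][] dst = new double[4][];
        for (int i = 0; i < 4; i++) dst[i] = apply(h, src[i][0], src[i][1]);
        return dst;
    }
}
```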

|

|

2015-04-10 03:31:35 -0600

| asked a question | What do the dimensions of the descriptors and MatOfDMatch indicate? I just want to understand why .match(descrip_1, descrip_2, matches) produces a MatOfDMatch object whose width == 1 and whose height == the height of descrip_1.

I created an example and, as you can see in the result below, both descrip_1 and descrip_2 have a width of 64 columns, and matches has 1 column as width and 354 rows as height. Can you please explain why the matches object always has one column and as many rows as descrip_1? And since descrip_1 and descrip_2 are 64 columns wide, what do those columns of descrip_1 or descrip_2 contain? Debug: MainClass -> descriptorMatcher: descrip_1.size: 64x354

Debug: MainClass -> descriptorMatcher: descrip_1.height().height: 354.0

Debug: MainClass -> descriptorMatcher: descrip_1.rows: 354

Debug: MainClass -> descriptorMatcher: descrip_2.size: 64x554

Debug: MainClass -> descriptorMatcher: descrip_2.height().height: 554.0

Debug: MainClass -> descriptorMatcher: descrip_2.rows: 554

Debug: MainClass -> descriptorMatcher: matches.size: 1x354

Debug: MainClass -> descriptorMatcher: matches.size().height: 354.0

Debug: MainClass -> descriptorMatcher: matches.rows: 354
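Each row of a descriptor Mat is the descriptor of one keypoint, and each column is one dimension of that descriptor; 64 columns is consistent with SURF's default 64-element descriptor (an assumption about which extractor was used). DescriptorMatcher.match returns exactly one best match per query row, which is why matches is 1 x 354: one DMatch for each of the 354 query descriptors. The plain-Java sketch below (hypothetical class BruteForceMatch) mirrors that behavior with L2 distance.

```java
// Plain-Java sketch of brute-force L2 matching: one best train match per query row.
final class BruteForceMatch {

    // Each row of query/train is one descriptor; returns {queryIdx, trainIdx, distance}.
    static double[][] match(float[][] query, float[][] train) {
        double[][] matches = new double[query.length][];
        for (int q = 0; q < query.length; q++) {
            int bestIdx = -1;
            double best = Double.POSITIVE_INFINITY; // squared distance of the best match
            for (int t = 0; t < train.length; t++) {
                double d2 = 0;
                for (int k = 0; k < query[q].length; k++) {
                    double diff = query[q][k] - train[t][k];
                    d2 += diff * diff;
                }
                if (d2 < best) { best = d2; bestIdx = t; }
            }
            matches[q] = new double[] {q, bestIdx, Math.sqrt(best)};
        }
        return matches;
    }
}
```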

|

|

2015-04-09 10:38:20 -0600

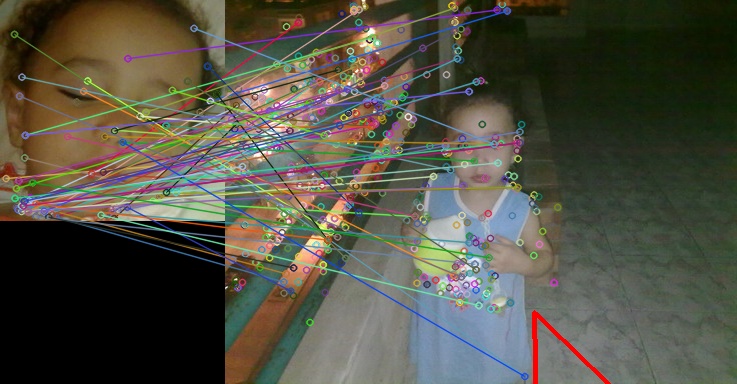

| marked best answer | Features are not matched correctly using descriptor extractor I wrote the code below to detect features in the two images shown below.

The problem is that, although the two images are different, the output Mat of the descriptor matching is so full of matched features and lines that I can't see the image; since they are two different images, I expected to see only a few matched features. Please have a look at my code below and let me know what I am doing wrong or what I am missing. Image1:

Image2:

Output of descriptorExtractor:

Code: public class FeaturesMatch {

static final String path_jf01 = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf00.jpg";

static final String path_jf01_rev = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf01.jpg";

static final String path_jf01_DetectedOutPut = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf01_DetectedOutPut.jpg";

static final String path_jf01_rev_DetectedOutPut = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf01_rev_DetectedOutPut.jpg";

static final String path_jf01_Matches = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf01_matches.jpg";

static final String path_jf01_DrawnMatches = "C:/private/ArbeitsOrdner_19_Mar_2015/Images/FeaturesDetection/jf01_DrawnMatches.jpg";

public static void main(String[] args) {

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

/*Feature Detection*/

FeatureDetector fDetect = FeatureDetector.create(FeatureDetector.SIFT);

MatOfKeyPoint matKeyPts_jf01 = new MatOfKeyPoint();

fDetect.detect(path2Mat(path_jf01), matKeyPts_jf01);

System.out.println("matKeyPts_jf01.size: " + matKeyPts_jf01.size());

MatOfKeyPoint matKeyPts_jf01_rev = new MatOfKeyPoint();

fDetect.detect(path2Mat(path_jf01_rev), matKeyPts_jf01_rev);

System.out.println("matKeyPts_jf01_rev.size: " + matKeyPts_jf01_rev.size());

Mat mat_jf01_OutPut = new Mat();

Features2d.drawKeypoints(path2Mat(path_jf01), matKeyPts_jf01, mat_jf01_OutPut);

Highgui.imwrite(path_jf01_DetectedOutPut, mat_jf01_OutPut);

Mat mat_jf0_rev_OutPut = new Mat();

Features2d.drawKeypoints(path2Mat(path_jf01_rev), matKeyPts_jf01_rev, mat_jf0_rev_OutPut); // use the keypoints detected in the second image

Highgui.imwrite(path_jf01_rev_DetectedOutPut, mat_jf0_rev_OutPut);

/*DescriptorExtractor*/

DescriptorExtractor descExtract = DescriptorExtractor.create(DescriptorExtractor.SIFT);

Mat mat_jf01_Descriptor = new Mat();

descExtract.compute(path2Mat(path_jf01), matKeyPts_jf01, mat_jf01_Descriptor);

System.out.println("mat_jf01_Descriptor.size: " + mat_jf01_Descriptor.size());

Mat mat_jf01_rev_Descriptor = new Mat();

descExtract.compute(path2Mat(path_jf01_rev), matKeyPts_jf01_rev, mat_jf01_rev_Descriptor);

/*DescriptorMatcher*/

MatOfDMatch matDMatch = new MatOfDMatch();

DescriptorMatcher descripMatcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE);

descripMatcher.match(mat_jf01_Descriptor, mat_jf01_rev_Descriptor, matDMatch);//is queryDescriptor = mat_jf01_Descriptor

//what is trainDescriptor

Highgui.imwrite(path_jf01_Matches, matDMatch);

System.out.println("matDMatch.size: " + matDMatch.size());

System.out.println("matDMatch.dim: " + matDMatch.dims());

System.out.println("matDMatch.elemSize: " + matDMatch.elemSize());

System.out.println("matDMatch.elemSize1: " + matDMatch.elemSize1());

System.out.println("matDMatch.height: " + matDMatch.height());

System.out.println("matDMatch.width: " + matDMatch.width());

System.out.println("matDMatch.total: " + matDMatch.total());

System.out.println("matKeyPts_jf01.size: " + matKeyPts_jf01.size());

System.out.println("matKeyPts_jf01.dim: " + matKeyPts_jf01.dims());

System.out.println("matKeyPts_jf01.elemSize: " + matKeyPts_jf01.elemSize());

System.out.println("matKeyPts_jf01.elemSize1: " + matKeyPts_jf01.elemSize1());

System.out.println("matKeyPts_jf01.height: " + matKeyPts_jf01.height());

System.out.println("matKeyPts_jf01.width: " + matKeyPts_jf01.width());

System.out.println("matKeyPts_jf01.total: " + matKeyPts_jf01.total());

/*Draw Matches*/

Mat mat_jf01_DrawnMatches = new Mat();

Features2d.drawMatches(path2Mat(path_jf01), matKeyPts_jf01, path2Mat(path_jf01_rev), matKeyPts_jf01_rev, matDMatch, mat_jf01_DrawnMatches);

Highgui.imwrite(path_jf01_DrawnMatches, mat_jf01_DrawnMatches);

}

private static Mat path2Mat(String path2File) {

// TODO Auto-generated method stub

return Highgui.imread(path2File);

}

} |
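DescriptorMatcher.match returns one best match for every query descriptor, even when the two images share no content, so drawing all of them always produces a dense tangle of lines. A common remedy is Lowe's ratio test: request the two nearest neighbors (the Java API has DescriptorMatcher.knnMatch(queryDescriptors, trainDescriptors, List<MatOfDMatch>, k) for this) and keep a match only if the best distance is clearly smaller than the second best. The plain-Java sketch below implements the test on raw descriptor arrays; the class name is hypothetical, and ratios around 0.7 to 0.8 are typical choices rather than fixed rules.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch of Lowe's ratio test: keep a query descriptor's best match
// only if best distance < ratio * second-best distance.
final class RatioTest {

    // Returns {queryIdx, trainIdx} pairs that pass the test.
    static List<int[]> filter(float[][] query, float[][] train, double ratio) {
        List<int[]> good = new ArrayList<>();
        for (int q = 0; q < query.length; q++) {
            double best = Double.POSITIVE_INFINITY, second = Double.POSITIVE_INFINITY;
            int bestIdx = -1;
            for (int t = 0; t < train.length; t++) {
                double d = dist(query[q], train[t]);
                if (d < best) { second = best; best = d; bestIdx = t; }
                else if (d < second) { second = d; }
            }
            // ambiguous matches (two similar candidates) are rejected
            if (bestIdx >= 0 && best < ratio * second) good.add(new int[] {q, bestIdx});
        }
        return good;
    }

    // Euclidean (L2) distance between two descriptors of equal length.
    private static double dist(float[] a, float[] b) {
        double s = 0;
        for (int i = 0; i < a.length; i++) { double d = a[i] - b[i]; s += d * d; }
        return Math.sqrt(s);
    }
}
```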