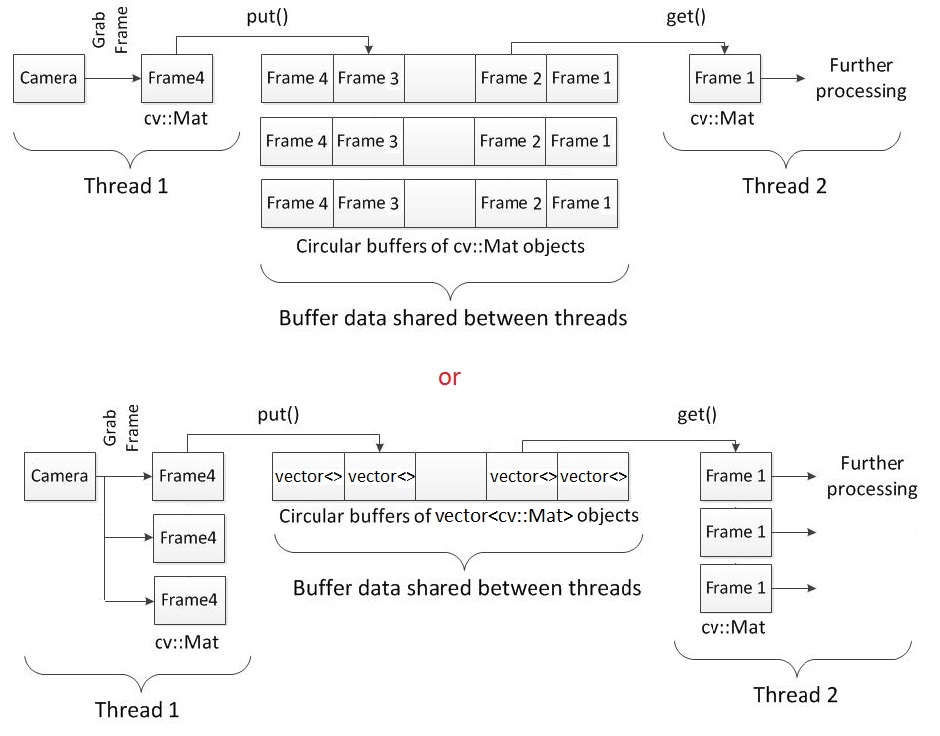

As the title says, I would like to grab and process multiple frames in different threads using one or more circular buffers, and I hope you can point me to the better option. For grabbing I am not using OpenCV's `VideoCapture` class but the libfreenect2 library and its frame listener, since I am working with the Kinect One (v2) sensor, which is not yet supported by `VideoCapture` + OpenNI2.

My intention is to have one thread grabbing frames continuously, so that the frame rate I get from the Kinect sensor is not affected. In this thread I might also add a viewer as a live monitor of what is happening (though I do not know how feasible this is or how it would affect the frame rate). A second thread would do all the processing.

From the libfreenect2 listener I obtain several views of the sensor at the same time: one frame from the RGB camera, one from the IR camera and one for depth, and with some processing (registration) I can also obtain an RGB-D frame. My question is how to share these frames between the two threads. After reading the questions "Time delay in VideoCapture opencv due to capture buffer" and "waitKey(1) timing issues causing frame rate slow down - fix?", I think a good approach is two threads plus a circular buffer. However, which is more logical: one circular buffer per view, or a single circular buffer whose elements are a container (e.g. an STL `vector<>`) holding the frames from every view?
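A minimal sketch of the single-buffer option, assuming C++17 (`std::optional`) and a mutex shared by the grabber and processing threads. `RingBuffer` is a hypothetical helper, not an OpenCV or libfreenect2 class. Each slot holds one complete set of views, so the IR, depth and RGB-D frames taken from the same `waitForNewFrame()` call cannot get out of step; with one buffer per view, the buffers would have to be kept in lockstep by hand.

```cpp
#include <cstddef>
#include <mutex>
#include <optional>
#include <stdexcept>
#include <utility>
#include <vector>

// Fixed-capacity circular buffer shared between threads (e.g. grabber and processor).
// T must be default-constructible and movable, e.g. std::vector<cv::Mat>.
template <typename T>
class RingBuffer {
public:
    explicit RingBuffer(std::size_t capacity) : slots_(capacity) {
        if (capacity == 0)
            throw std::invalid_argument("RingBuffer capacity must be > 0");
    }

    // Stores item. When full, the oldest element is overwritten so the
    // producer never blocks. Returns true if an element was dropped.
    bool push(T item) {
        std::lock_guard<std::mutex> lock(mtx_);
        bool dropped = false;
        if (count_ == slots_.size()) {
            head_ = (head_ + 1) % slots_.size(); // forget the oldest element
            --count_;
            dropped = true;
        }
        slots_[(head_ + count_) % slots_.size()] = std::move(item);
        ++count_;
        return dropped;
    }

    // Removes and returns the oldest element, or std::nullopt if empty.
    std::optional<T> try_pop() {
        std::lock_guard<std::mutex> lock(mtx_);
        if (count_ == 0) return std::nullopt;
        T item = std::move(slots_[head_]);
        head_ = (head_ + 1) % slots_.size();
        --count_;
        return item;
    }

    std::size_t size() const {
        std::lock_guard<std::mutex> lock(mtx_);
        return count_;
    }

private:
    std::vector<T> slots_;
    std::size_t head_ = 0;   // index of the oldest element
    std::size_t count_ = 0;  // number of stored elements
    mutable std::mutex mtx_;
};
```

The element type `T` could be the `vector<Mat>` used below, or a small hypothetical `struct FrameSet { cv::Mat ir, depth, rgbd; };`. Because `push()` overwrites the oldest slot when full, a slow consumer skips frames instead of stalling the grabber or growing memory; whether dropping frames is acceptable depends on the processing.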

At the moment I am using the second approach, a single queue of `vector<Mat>`, as can be seen below:

```cpp
//! [headers]
#include <iostream>
#include <stdio.h>
#include <cstdlib>   // EXIT_FAILURE
#include <iomanip>
#include <signal.h>
#include <string>
#include <vector>
#include <utility>   // std::move
#include <opencv2/opencv.hpp>
#include <thread>
#include <mutex>
#include <queue>
#include <atomic>
#include <libfreenect2/libfreenect2.hpp>
#include <libfreenect2/frame_listener_impl.h>
#include <libfreenect2/registration.h>
#include <libfreenect2/packet_pipeline.h>
#include <libfreenect2/logger.h>
//! [headers]

using namespace std;
using namespace cv;

enum Process { cl, gl, cpu };

// Shared FIFO between grabber() and main(). Note that std::queue is unbounded,
// not a circular buffer: it grows if processing is slower than grabbing.
std::queue<vector<cv::Mat> > buffer;
std::mutex mtxCam;           // protects buffer
std::atomic<bool> grabOn;    // stop flag, lock free

void grabber()
{
    //! [context]
    libfreenect2::Freenect2 freenect2;
    libfreenect2::Freenect2Device *dev = nullptr;
    libfreenect2::PacketPipeline *pipeline = nullptr;
    //! [context]

    //! [discovery]
    if (freenect2.enumerateDevices() == 0)
    {
        std::cout << "no device connected!" << endl;
        exit(EXIT_FAILURE); // terminates the whole process, not only this thread
    }

    string serial = freenect2.getDefaultDeviceSerialNumber();
    // string serial = "014947350647";
    std::cout << "SERIAL: " << serial << endl;
    //! [discovery]

    Process depthProcessor = Process::cl;

    if (depthProcessor == Process::cpu)
    {
        if (!pipeline)
        {
            //! [pipeline]
            pipeline = new libfreenect2::CpuPacketPipeline();
            //! [pipeline]
        }
    } else if (depthProcessor == Process::gl) {
#ifdef LIBFREENECT2_WITH_OPENGL_SUPPORT
        if (!pipeline)
            pipeline = new libfreenect2::OpenGLPacketPipeline();
#else
        std::cout << "OpenGL pipeline is not supported!" << std::endl;
#endif
    } else if (depthProcessor == Process::cl) {
#ifdef LIBFREENECT2_WITH_OPENCL_SUPPORT
        if (!pipeline)
            pipeline = new libfreenect2::OpenCLPacketPipeline();
#else
        std::cout << "OpenCL pipeline is not supported!" << std::endl;
#endif
    }

    if (pipeline)
    {
        //! [open]
        dev = freenect2.openDevice(serial, pipeline);
        //! [open]
    } else {
        dev = freenect2.openDevice(serial); // fall back to the default pipeline
    }

    if (dev == nullptr)
    {
        std::cout << "failure opening device!" << endl;
        exit(EXIT_FAILURE);
    }

    //! [listeners]
    libfreenect2::SyncMultiFrameListener listener(libfreenect2::Frame::Color |
                                                  libfreenect2::Frame::Depth |
                                                  libfreenect2::Frame::Ir);
    libfreenect2::FrameMap frames;
    dev->setColorFrameListener(&listener);
    dev->setIrAndDepthFrameListener(&listener);
    //! [listeners]

    //! [start]
    dev->start();
    std::cout << "device serial: " << dev->getSerialNumber() << endl;
    std::cout << "device firmware: " << dev->getFirmwareVersion() << endl;
    //! [start]

    //! [registration setup]
    libfreenect2::Registration* registration = new libfreenect2::Registration(dev->getIrCameraParams(), dev->getColorCameraParams());
    libfreenect2::Frame undistorted(512, 424, 4), rgb2depth(512, 424, 4), depth2rgb(1920, 1080 + 2, 4);
    //! [registration setup]

    Mat rgbmat, depthmat, irmat, rgbdmat, rgbdmat2, depthImg2;

    while (grabOn.load())
    {
        listener.waitForNewFrame(frames);
        libfreenect2::Frame *rgb = frames[libfreenect2::Frame::Color];
        libfreenect2::Frame *ir = frames[libfreenect2::Frame::Ir];
        libfreenect2::Frame *depth = frames[libfreenect2::Frame::Depth];

        //! [loop start]
        cv::Mat(rgb->height, rgb->width, CV_8UC4, rgb->data).copyTo(rgbmat);
        cv::Mat(ir->height, ir->width, CV_32FC1, ir->data).copyTo(irmat);
        // cv::Mat(depth->height, depth->width, CV_32FC1, depth->data).copyTo(depthmat);

        //! [registration]
        registration->apply(rgb, depth, &undistorted, &rgb2depth, true, &depth2rgb);
        //! [registration]

        cv::Mat(undistorted.height, undistorted.width, CV_32FC1, undistorted.data).copyTo(depthmat);
        cv::Mat(rgb2depth.height, rgb2depth.width, CV_8UC4, rgb2depth.data).copyTo(rgbdmat);
        cv::Mat(depth2rgb.height, depth2rgb.width, CV_32FC1, depth2rgb.data).copyTo(rgbdmat2);

        // clone() is needed: copyTo() reuses the same buffers on the next iteration
        // and putText() below draws into them, so without a deep copy the queued
        // Mats would be overwritten while main() is still using them.
        // Cloning before taking the lock keeps the critical section short.
        vector<Mat> matBuff{ irmat.clone(), depthmat.clone(), rgbdmat.clone() };
        {
            std::lock_guard<std::mutex> lock(mtxCam);
            buffer.push(std::move(matBuff));
        }

        // Live monitor. Caution: HighGUI is not guaranteed to be thread-safe and
        // main() also calls imshow()/waitKey(); on some backends this may misbehave,
        // so keeping all GUI calls in one thread is the safer choice.
        putText(irmat, "THREAD FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
        imshow("Image thread - ir", irmat / 4500.0f);
        putText(depthmat, "THREAD FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
        imshow("Image thread - depth", depthmat / 4500.0f);
        putText(rgbdmat, "THREAD FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
        imshow("Image thread - rgbd", rgbdmat);
        waitKey(1); // just for imshow

        //! [loop end]
        listener.release(frames);
    }

    //! [stop]
    dev->stop();
    dev->close();
    //! [stop]

    delete registration;
}

void processor(vector<Mat> &imgs /*const Mat &src*/)
{
    if (imgs.size() < 3) return; // expects {ir, depth, rgbd}

    putText(imgs[0], "PROCESS FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
    imshow("Image main - ir", imgs[0] / 4500.0f);
    putText(imgs[1], "PROCESS FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
    imshow("Image main - depth", imgs[1] / 4500.0f);
    putText(imgs[2], "PROCESS FRAME", Point(10, 10), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 255, 0));
    imshow("Image main - rgbd", imgs[2]);
}

int main() {
    std::cout << "Hello World!" << std::endl;

    vector<Mat> frames;
    grabOn.store(true);     // set the grabbing control variable
    thread t(grabber);      // start the grabbing task
    size_t bufSize;

    while (true) {
        {
            std::lock_guard<std::mutex> lock(mtxCam); // lock memory for exclusive access
            bufSize = buffer.size();                  // check how many sets are waiting
            if (bufSize > 0)
            {
                // Take the oldest set (queue = FIFO). Its Mats were cloned in
                // grabber(), so nothing else writes to them after the pop.
                frames = std::move(buffer.front());
                buffer.pop();
            }
        } // unlock before processing so grabbing is not blocked

        if (bufSize > 0) // if a new set is available
        {
            processor(frames); // process it
            bufSize--;
        }

        // If bufSize keeps increasing, processing is slower than grabbing
        // and the queue will eventually run out of memory.
        cout << endl << "frames to process: " << bufSize;

        if (waitKey(1) == 27) // press ESC to terminate
        {
            grabOn.store(false); // stop the grab loop
            t.join();            // grabber has exited, so buffer is safe to read without the lock
            cout << endl << "Flushing buffer of: " << buffer.size() << " frames...";
            while (!buffer.empty())
            {
                frames = std::move(buffer.front());
                buffer.pop();
                processor(frames);
            }
            cout << "done" << endl;
            break; // exit from process loop
        }
    }

    std::cout << "Goodbye World!" << std::endl;
    return 0;
}
```
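A sketch of how the polling loop in `main()` could instead wait for data, assuming C++17. `FrameQueue`, `wait_pop()` and `close()` are hypothetical names, not OpenCV or libfreenect2 API. A `std::condition_variable` lets the processing thread sleep until the grabber pushes a new set, and an optional size cap makes a slow consumer drop the oldest set instead of letting the unbounded `std::queue` above grow without limit.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Queue that lets the consumer sleep until data arrives instead of polling.
// maxSize > 0 caps memory by discarding the oldest item; maxSize == 0 means unbounded.
template <typename T>
class FrameQueue {
public:
    explicit FrameQueue(std::size_t maxSize) : maxSize_(maxSize) {}

    void push(T item) {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            if (closed_) return;                                        // ignore pushes after close()
            if (maxSize_ > 0 && q_.size() >= maxSize_) q_.pop_front();  // drop the oldest
            q_.push_back(std::move(item));
        }
        ready_.notify_one(); // wake the consumer outside the lock
    }

    // Waits up to `timeout` for an item. Returns std::nullopt on timeout,
    // or immediately when the queue has been closed and is empty.
    template <typename Rep, typename Period>
    std::optional<T> wait_pop(std::chrono::duration<Rep, Period> timeout) {
        std::unique_lock<std::mutex> lock(mtx_);
        ready_.wait_for(lock, timeout, [this] { return !q_.empty() || closed_; });
        if (q_.empty()) return std::nullopt;
        T item = std::move(q_.front());
        q_.pop_front();
        return item;
    }

    // Stops accepting items and wakes any waiting consumer.
    // Items already queued can still be popped (e.g. to flush at shutdown).
    void close() {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::deque<T> q_;
    std::size_t maxSize_;
    bool closed_ = false;
    std::mutex mtx_;
    std::condition_variable ready_;
};
```

With a hypothetical global `FrameQueue<vector<Mat>> queue(8);`, the grabber would call `queue.push(std::move(matBuff))`, and the locked block in `main()` could become `if (auto set = queue.wait_pop(std::chrono::milliseconds(10))) processor(*set);` followed by the existing `waitKey(1)` check. The short timeout keeps `waitKey()` running so the HighGUI windows stay responsive. At shutdown, calling `queue.close()` after `t.join()` and popping until `wait_pop()` returns `std::nullopt` flushes the remaining sets.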

Is this OK? What do you think, @pklab? To the moderators: although this is not a pure OpenCV question, I think it suits the forum and could be useful to others as well.