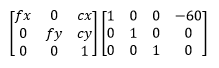

So, I have the projection matrix of the left camera:

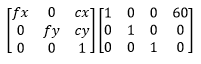

and the projection matrix of my right camera:
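For reference, a projection matrix in OpenCV's pinhole model has the form P = K [R | t], where R and t map world coordinates into the camera frame. The sketch below builds both matrices for a hypothetical pair of cameras. The intrinsics `K`, the 0.1-unit `baseline`, and the choice of the left camera as the world frame are all made-up assumptions, not the matrices from this question. A right camera whose center lies at +X gets a *negative* X translation, because t = -R C, and getting this sign wrong is one easy way to swap left and right.

```python
import numpy as np

# Hypothetical intrinsics (focal length and principal point are made up).
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])

def projection_matrix(K, R, t):
    """Build P = K [R | t], where R, t map world points into the camera frame."""
    return K @ np.hstack([R, t.reshape(3, 1)])

baseline = 0.1  # hypothetical: right camera sits 0.1 units along +X

# Assumption: the left camera defines the world frame (R = I, t = 0).
P_left = projection_matrix(K, np.eye(3), np.zeros(3))

# Right camera: same orientation, center C = (baseline, 0, 0).
# The extrinsic translation is t = -R @ C, so its X component is negative.
C_right = np.array([baseline, 0.0, 0.0])
t_right = -np.eye(3) @ C_right
P_right = projection_matrix(K, np.eye(3), t_right)

# Project a point 2 units in front of the left camera into both images.
X = np.array([0.0, 0.0, 2.0, 1.0])
x_left = P_left @ X
x_right = P_right @ X
u_left = x_left[0] / x_left[2]
u_right = x_right[0] / x_right[2]
```

With these values the point lands at u = 320 in the left image and u = 285 in the right image, a positive disparity, which is what a camera mounted to the right should see.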

When I call `triangulatePoints` on the two vectors of corresponding points, I get a collection of points in 3D space.
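One pitfall worth ruling out: `triangulatePoints` returns a 4xN array of *homogeneous* points, and each column is only defined up to scale and sign. Taking the first three rows without dividing by the fourth can therefore flip the sign of Z. The sketch below is a standard linear (DLT) triangulation written in NumPy, not OpenCV's own implementation, and the matrices in the usage part are hypothetical. It shows where that division happens.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one correspondence.

    P1, P2: 3x4 projection matrices; x1, x2: pixel coordinates (u, v).
    Returns a 3D point in whatever frame P1 and P2 are expressed in.
    """
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X_h = Vt[-1]              # homogeneous 4-vector: arbitrary scale AND sign
    return X_h[:3] / X_h[3]   # dividing by w removes that ambiguity

# Hypothetical usage: made-up K, right camera 0.1 units along +X.
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X = triangulate_dlt(P_left, P_right, (320.0, 240.0), (285.0, 240.0))
```

The same normalization applies to OpenCV's output, e.g. `points4D[:3] / points4D[3]` on the array returned by `triangulatePoints`.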

All of the triangulated points have a negative Z coordinate, even though I assumed that each camera initially faces along the positive Z axis.
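Negative Z can be checked directly against the camera model. In OpenCV's convention a point is in front of a camera only when its Z coordinate in that camera's frame, R X + t, is positive. A minimal cheirality check could look like this, assuming a hypothetical pair with identical orientation, a right camera 0.1 units along +X, and the left camera as the world frame:

```python
import numpy as np

def depth_in_camera(R, t, X):
    """Z of world point X in the camera frame, using X_cam = R @ X + t."""
    return (R @ X + t)[2]

def in_front_of_both(R1, t1, R2, t2, X):
    """True if X has positive depth in both cameras (OpenCV cameras look along +Z)."""
    return depth_in_camera(R1, t1, X) > 0 and depth_in_camera(R2, t2, X) > 0

# Hypothetical stereo pair (same orientation, right camera center at X = 0.1).
R = np.eye(3)
t_left = np.zeros(3)
t_right = np.array([-0.1, 0.0, 0.0])

ok = in_front_of_both(R, t_left, R, t_right, np.array([0.0, 0.0, 2.0]))
behind = in_front_of_both(R, t_left, R, t_right, np.array([0.0, 0.0, -2.0]))
```

If every triangulated point fails a check like this, the cloud has been reconstructed behind both cameras, which suggests a sign or convention issue in the projection matrices or in how the homogeneous output is converted, rather than something about the scene itself.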

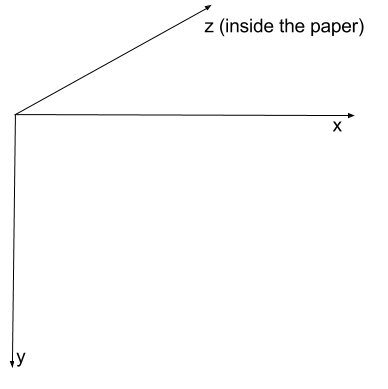

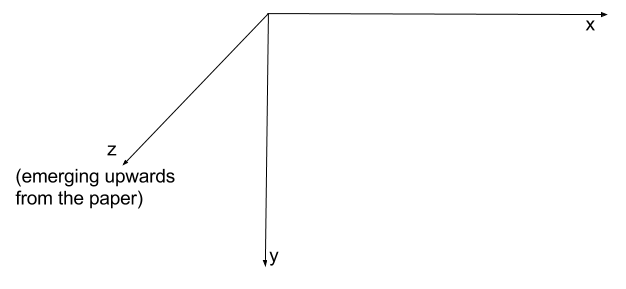

My assumption was that OpenCV uses coordinate axes like this:

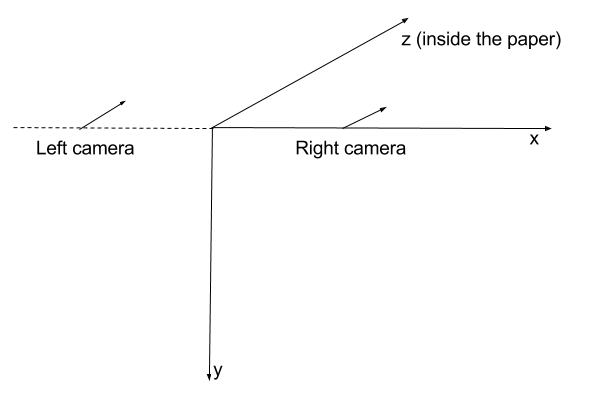

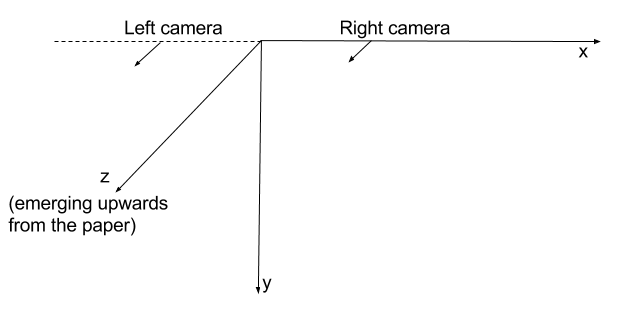
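For comparison, OpenCV's pinhole camera model, as drawn in the calib3d documentation, uses a camera frame with X pointing right, Y pointing down, and Z pointing forward along the optical axis. The sketch below, with made-up intrinsics `K`, checks this numerically. It also shows why negative-Z solutions are easy to miss: a point and its mirror image through the camera center project to the same pixel, so the projection alone does not reject points behind the camera.

```python
import numpy as np

# Hypothetical intrinsics: fx = fy = 700, principal point (320, 240).
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])

def project(K, X_cam):
    """Pinhole projection of a point given in camera coordinates."""
    x = K @ X_cam
    return x[:2] / x[2]

center = project(K, np.array([0.0, 0.0, 2.0]))   # on the optical axis
right = project(K, np.array([0.5, 0.0, 2.0]))    # +X in the camera frame
down = project(K, np.array([0.0, 0.5, 2.0]))     # +Y in the camera frame

# Mirror image of the "right" point through the camera center (Z < 0).
behind = project(K, np.array([-0.5, 0.0, -2.0]))
```

Here `right` lands to the right of the principal point (larger u), `down` lands below it (larger v), and `behind` lands on exactly the same pixel as `right`.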

So, after positioning my cameras with these projection matrices, I expected the complete picture to look like this:

However, my experiment suggests that the coordinate system actually looks like this:

and that my projection matrices have effectively mixed up left and right:
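One way to test the "mixed up left and right" hypothesis is to recover each camera's center from its projection matrix: the center C satisfies P [C; 1] = 0, so it is the right null vector of P. A small NumPy sketch follows, again with hypothetical matrices (made-up `K`, 0.1-unit baseline, left camera as the world frame). Under those assumptions, the camera labelled "right" should have the larger X coordinate; if it comes out smaller, the labels or the sign of the translation are swapped.

```python
import numpy as np

def camera_center(P):
    """Camera center in world coordinates: the right null vector of the 3x4 matrix P."""
    _, _, Vt = np.linalg.svd(P)
    C_h = Vt[-1]              # homogeneous, defined up to scale and sign
    return C_h[:3] / C_h[3]

# Hypothetical matrices: made-up K, right camera 0.1 units along +X.
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

C_left = camera_center(P_left)
C_right = camera_center(P_right)
right_is_right = C_right[0] > C_left[0]
```

Running the same function on the actual left and right projection matrices would show directly where each camera sits in the world frame.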

Is everything I've said correct? Is the latter coordinate system really the one that is used by OpenCV?