A new problem has arisen in my software :)

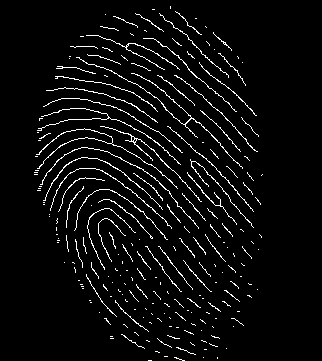

I have this input image, a skeletonized fingerprint.

Then I run Harris corner detection, as in this example, with the following code:

    cornerHarris(input_thinned, harris_corners, 2, 3, 0.04, BORDER_DEFAULT);
    normalize(harris_corners, harris_corners_normalised, 0, 255, NORM_MINMAX, CV_32FC1, Mat());
    convertScaleAbs(harris_corners_normalised, harris_corners_normalised);

Then I select all keypoints with a response higher than 125 like this:

    int threshold = 125;
    vector<KeyPoint> keypoints;

    // Make a color clone for visualisation purposes
    Mat harris_clone = harris_corners_normalised.clone();
    harris_clone.convertTo(harris_clone, CV_8UC1);
    cvtColor(harris_clone, harris_clone, CV_GRAY2BGR);

    for(int i=0; i<harris_corners_normalised.rows; i++){
        for(int j=0; j<harris_corners_normalised.cols; j++){
            if ( (int)harris_corners_normalised.at<float>(j, i) > threshold ){
                // Draw or store the keypoint location here, just like you decide. In our case we will store the location of the keypoint
                circle(harris_clone, Point(i, j), 5, Scalar(0,255,0), 1);
                circle(harris_clone, Point(i, j), 1, Scalar(0,0,255), 1);
                keypoints.push_back( KeyPoint (i, j, 1) );
            }
        }
    }

    imshow("temp", harris_clone);
    waitKey(0);

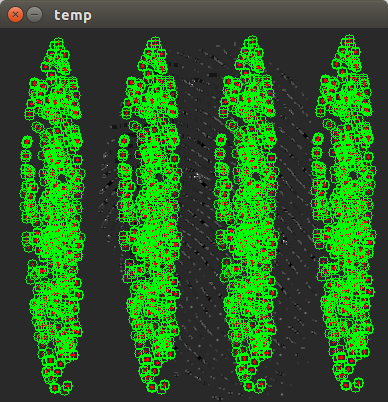

And this is the result

It seems that I do get the points, but somewhere along the way they appear to be multiplied four times. Does anyone have a clue what is going wrong?
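
A possible cause, offered as a guess rather than a confirmed diagnosis: `convertScaleAbs` writes an 8-bit result, so after that call `harris_corners_normalised` holds one byte per pixel, yet the loop reads it with `at<float>`, which assumes four bytes per element. In addition, `cv::Mat::at` takes `(row, col)` while `Point` takes `(x, y)`, and the loop passes `(j, i)` to `at` with `i` running over rows. The sketch below shows the intended access pattern on a plain buffer. `Gray8` and `strongResponses` are hypothetical names that stand in for an 8-bit `cv::Mat` so the example compiles without OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical stand-in for an 8-bit, single-channel cv::Mat: row-major bytes.
struct Gray8 {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;  // rows * cols elements, one byte each

    // Same argument order as cv::Mat::at<uchar>(row, col).
    std::uint8_t at(int row, int col) const {
        // Catches swapped indices on non-square images.
        assert(row >= 0 && row < rows && col >= 0 && col < cols);
        return data[static_cast<std::size_t>(row) * cols + col];
    }
};

// Collect (x, y) locations whose response exceeds the threshold.
// x is the column and y is the row, matching the order of cv::Point(x, y).
std::vector<std::pair<int, int>> strongResponses(const Gray8& img, int threshold) {
    std::vector<std::pair<int, int>> points;
    for (int row = 0; row < img.rows; ++row) {
        for (int col = 0; col < img.cols; ++col) {
            if (img.at(row, col) > threshold) {
                points.emplace_back(col, row);
            }
        }
    }
    return points;
}
```

If this guess is right, the equivalent change in the code above would be to read the matrix with `harris_corners_normalised.at<uchar>(i, j)` and to draw and store the keypoint at `Point(j, i)`.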