Problem: I have been trying to calibrate four cameras for 3D reconstruction in our lab, but my results so far are poor.

Physical Setup Details: The cameras are mounted on the ceiling of the lab and spaced along an arc. The camera pairs have a large baseline, ranging from 1.2 m to 1.4 m, and each camera is angled towards a common point near the centre of my desired capture volume. The cameras are 1440x1080 (4:3) and the frame rate is currently set to 60 FPS. Synchronisation of the cameras relative to a master camera has been tested and verified to be within 6 µs.

Software Details: As far as I can tell, my intrinsic parameter estimation with calibrateCameraCharuco is good. I am generally getting a root-mean-square (RMS) reprojection error of less than 0.5 px for each camera, and visual inspection of the undistorted images shows no visible distortion. Next, when I calibrate the stereo pairs with stereoCalibrate, I get OK results with an RMS reprojection error of about 0.9 px, and the translation vector matches the setup in the lab fairly well. My problems begin when I attempt to use stereoRectify to obtain the rectification and projection matrices for triangulation. The rectified images are mostly black and show a lot of warping. In the following sections I show my results at each stage.

calibrateCameraCharuco Method/Results: Here is a snippet of my code to show how I am using the functions provided in cv::aruco. Results are from 100 images.

for(int i = 0; i < nFrames; i++) {

int r = calibPositions.at(i);

// interpolate using camera parameters

Mat currentCharucoCorners;

vector < int > currentCharucoIds;

aruco::interpolateCornersCharuco(FD.allCorners[r], FD.allIds[r], FD.allImgs[r],

charucoboard, currentCharucoCorners, currentCharucoIds);

CD.allCharucoCorners.push_back(currentCharucoCorners);

CD.allCharucoIds.push_back(currentCharucoIds);

CD.filteredImages.push_back(FD.allImgs[r]);

}

// Create imaginary objectPoints for stereo calibration

WP.objPoints = charucoboard->chessboardCorners;

if(CD.allCharucoCorners.size() < 8) {

cerr << "Not enough corners for calibration" << endl;

}

// calibrate camera using charuco

cout << "Calculating reprojection error..." << endl;

double repError_all =

aruco::calibrateCameraCharuco(CD.allCharucoCorners, CD.allCharucoIds, charucoboard, FD.imgSize,

CP.cameraMatrix, CP.distCoeffs, CP.rvecs, CP.tvecs, CD.stdDevInt,

CD.stdDevExt, CD.repErrorPerView,

CALIB_RATIONAL_MODEL, TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 1000, 1e-8));

cout << "Reprojection error for all selected images --> " << repError_all << endl;

cout << "Calculating reprojection error based on best of calibration images (remove if repErr > thresh)..." << endl;

int count = 0;

for (int i = nFrames - 1; i >= 0; i--) { // iterate backwards so erase() does not shift frames that are still to be checked

if (CD.repErrorPerView.at<double>(i,0) > 0.8) {

CD.allCharucoCorners.erase(CD.allCharucoCorners.begin() + i);

CD.allCharucoIds.erase(CD.allCharucoIds.begin() + i);

CD.filteredImages.erase(CD.filteredImages.begin() + i);

cout << "Removed frame [ " << i << " ] due to poor reprojection error" << endl;

count++;

}

}

cout << count << " frames removed." << endl;

CD.repError =

aruco::calibrateCameraCharuco(CD.allCharucoCorners, CD.allCharucoIds, charucoboard, FD.imgSize,

CP.cameraMatrix, CP.distCoeffs, CP.rvecs, CP.tvecs, CD.stdDevInt,

CD.stdDevExt, CD.repErrorPerView, 0, TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 1000, 1e-8));

cout << "Reprojection error for best " << nFrames - count << " images --> " << CD.repError << endl;
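When frames are erased from several parallel vectors inside a forward index loop, every erase shifts the later elements down by one, so the index into the per-view error list and the index into the data vectors can drift apart. Below is a minimal sketch of index-safe filtering using only the standard library. `dropHighErrorFrames` is a hypothetical helper, not an OpenCV function, and it assumes the per-view errors have already been copied into a `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Removes, from both parallel vectors, every frame whose per-view error
// exceeds `threshold`. Returns the number of frames removed.
template <typename A, typename B>
std::size_t dropHighErrorFrames(const std::vector<double>& perViewError,
                                double threshold,
                                std::vector<A>& first,
                                std::vector<B>& second) {
    // All three containers must describe the same set of frames.
    assert(perViewError.size() == first.size());
    assert(perViewError.size() == second.size());

    std::size_t removed = 0;
    // Walk backwards: erasing element i only moves elements after i,
    // which have already been visited.
    for (std::size_t i = perViewError.size(); i-- > 0;) {
        if (perViewError[i] > threshold) {
            first.erase(first.begin() + static_cast<std::ptrdiff_t>(i));
            second.erase(second.begin() + static_cast<std::ptrdiff_t>(i));
            ++removed;
        }
    }
    return removed;
}
```

With errors {0.3, 0.9, 1.2, 0.5} and a threshold of 0.8, the second and third frames are dropped and the first and fourth are kept in their original order.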

Here is an example of my distorted and undistorted results.

Here are two of the intrinsic parameter .xml files that I save after calibration.

intrinsic calibration file 1:

<?xml version="1.0"?>

<opencv_storage>

<calibration_time>"Mon Sep 21 19:42:02 2020"</calibration_time>

<image_width>1440</image_width>

<image_height>1080</image_height>

<flags>0</flags>

<camera_matrix type_id="opencv-matrix">

<rows>3</rows>

<cols>3</cols>

<dt>d</dt>

<data>

8.0605929943849583e+02 0. 7.3480865246959092e+02 0.

8.1007034565182778e+02 5.1757622494227655e+02 0. 0. 1.</data></camera_matrix>

<distortion_coefficients type_id="opencv-matrix">

<rows>1</rows>

<cols>5</cols>

<dt>d</dt>

<data>

-3.4978195544034996e-01 1.2886581503437988e-01

-1.9665200615673575e-04 -1.4983018383788354e-03

-2.2248879341119590e-02</data></distortion_coefficients>

<avg_reprojection_error>3.5456431830182816e-01</avg_reprojection_error>

intrinsic calibration file 2:

<?xml version="1.0"?>

<opencv_storage>

<calibration_time>"Mon Sep 21 19:35:48 2020"</calibration_time>

<image_width>1440</image_width>

<image_height>1080</image_height>

<flags>0</flags>

<camera_matrix type_id="opencv-matrix">

<rows>3</rows>

<cols>3</cols>

<dt>d</dt>

<data>

8.0465978952959529e+02 0. 7.0834198622991266e+02 0.

8.0757265281475566e+02 4.9114869480837643e+02 0. 0. 1.</data></camera_matrix>

<distortion_coefficients type_id="opencv-matrix">

<rows>1</rows>

<cols>5</cols>

<dt>d</dt>

<data>

-3.6701403682828881e-01 1.5425867184023750e-01

6.9635465447631670e-05 -7.6979877001017848e-04

-3.2360458909053834e-02</data></distortion_coefficients>

<avg_reprojection_error>3.7534224198836619e-01</avg_reprojection_error>
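One quick plausibility check on intrinsics like these is to convert the focal lengths into a field of view and compare it with the lens datasheet. The sketch below assumes an ideal pinhole model with the principal point at the image centre and ignores lens distortion, so it is only a rough check. `fieldOfViewDeg` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cmath>

// Field of view in degrees along one image axis for an ideal pinhole camera,
// given the image extent and the focal length along that axis (both in pixels).
double fieldOfViewDeg(double extentPx, double focalPx) {
    assert(extentPx > 0.0 && focalPx > 0.0);
    const double pi = std::acos(-1.0);
    return 2.0 * std::atan(extentPx / (2.0 * focalPx)) * 180.0 / pi;
}
```

For the first file, `fieldOfViewDeg(1440.0, 806.06)` lands between 83° and 84° horizontally and `fieldOfViewDeg(1080.0, 810.07)` between 67° and 68° vertically.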

stereoCalibrate Method/Results: Here is a snippet of my code to show how I am using the functions provided in OpenCV. Results are from 100 images.

cout << "Loaded images..." << endl;

vector < int > currentCharucoIds_0, currentCharucoIds_1;

vector < Point2f > currentCharucoCorners_0, currentCharucoCorners_1;

vector < int > Vec_currentCharucoIds;

Mat Vec_currentCharucoCorners;

aruco::interpolateCornersCharuco(FD.first.allCorners[r], FD.first.allIds[r], currImgGray_0,

charucoboard, currentCharucoCorners_0, currentCharucoIds_0);

aruco::interpolateCornersCharuco(FD.second.allCorners[r], FD.second.allIds[r], currImgGray_1,

charucoboard, currentCharucoCorners_1, currentCharucoIds_1);

aruco::interpolateCornersCharuco(FD.first.allCorners[r], FD.first.allIds[r], currImgGray_0,

charucoboard, Vec_currentCharucoCorners, Vec_currentCharucoIds);

// need to [0] [1] [2] --> [a,b,c,d; ...;...;]

auto result_0 = std::remove_if(boost::make_zip_iterator( boost::make_tuple( currentCharucoIds_0.begin(), currentCharucoCorners_0.begin() ) ),

boost::make_zip_iterator( boost::make_tuple( currentCharucoIds_0.end(), currentCharucoCorners_0.end() ) ),

[=](boost::tuple<int, Point2f > const& elem) {

return !Match(boost::get<0>(elem), currentCharucoIds_1);

});

currentCharucoIds_0.erase(boost::get<0>(result_0.get_iterator_tuple()), currentCharucoIds_0.end());

currentCharucoCorners_0.erase(boost::get<1>(result_0.get_iterator_tuple()), currentCharucoCorners_0.end());

auto result_1 = std::remove_if(boost::make_zip_iterator( boost::make_tuple( currentCharucoIds_1.begin(), currentCharucoCorners_1.begin() ) ),

boost::make_zip_iterator( boost::make_tuple( currentCharucoIds_1.end(), currentCharucoCorners_1.end() ) ),

[=](boost::tuple<int, Point2f> const& elem) {

return !Match(boost::get<0>(elem), currentCharucoIds_0);

});

currentCharucoIds_1.erase(boost::get<0>(result_1.get_iterator_tuple()), currentCharucoIds_1.end());

currentCharucoCorners_1.erase(boost::get<1>(result_1.get_iterator_tuple()), currentCharucoCorners_1.end());

cout << "Calculating matches..." << endl;

int matches;

if (currentCharucoIds_0.size() == currentCharucoIds_1.size()) {

matches = currentCharucoIds_0.size();

cout << matches << " matches" << endl;

} else {

cout << "IDs not matched correctly, or not enough IDs" << endl;

exit(EXIT_FAILURE);

}

if (currentCharucoCorners_0.size() == currentCharucoCorners_1.size()) {

cout << "Adding current corners for cameras to charuco data..." << endl;

CD.first.allCharucoCorners.push_back(currentCharucoCorners_0);

CD.first.allCharucoIds.push_back(currentCharucoIds_0);

CD.first.filteredImages.push_back(currImgGray_0);

CD.second.allCharucoCorners.push_back(currentCharucoCorners_1);

CD.second.allCharucoIds.push_back(currentCharucoIds_1);

CD.second.filteredImages.push_back(currImgGray_1);

vector < Point3f > objPoints;

vector < int > objPoints_id;

objPoints = charucoboard->chessboardCorners;

for (unsigned int i = 0; i < charucoboard->chessboardCorners.size(); i++){

objPoints_id.push_back(i);

}

auto result_3 = std::remove_if(boost::make_zip_iterator( boost::make_tuple( objPoints_id.begin(), objPoints.begin() ) ),

boost::make_zip_iterator( boost::make_tuple( objPoints_id.end(), objPoints.end() ) ),

[=](boost::tuple<int, Point3f> const& elem) {

return !Match(boost::get<0>(elem), currentCharucoIds_0);

});

objPoints_id.erase(boost::get<0>(result_3.get_iterator_tuple()), objPoints_id.end());

objPoints.erase(boost::get<1>(result_3.get_iterator_tuple()), objPoints.end());

WP.first.objPoints.push_back(objPoints);

} else {

nBadFrames++;

cout << "Refined corners/ID count does not match or count is less than 8" << endl;

cout << currentCharucoCorners_0.size() << endl;

cout << currentCharucoIds_0.size() << endl;

}

}

cout << "Completed interpolation on " << count - nBadFrames << " images. "<< endl;

cout << "Beginning stereo calibration for current pair..." << endl;

Mat repErrorPerView;

double rms = stereoCalibrate(WP.first.objPoints, CD.first.allCharucoCorners, CD.second.allCharucoCorners,

intParam.first.cameraMatrix, intParam.first.distCoeffs,

intParam.second.cameraMatrix, intParam.second.distCoeffs, Size(1440,1080),

extParam.rotation, extParam.translation, extParam.essential, extParam.fundamental, repErrorPerView,

CALIB_USE_INTRINSIC_GUESS | CALIB_FIX_FOCAL_LENGTH | CALIB_FIX_PRINCIPAL_POINT |

CALIB_FIX_K1 | CALIB_FIX_K2 | CALIB_FIX_K3 | CALIB_FIX_K4 | CALIB_FIX_K5 | CALIB_RATIONAL_MODEL,

TermCriteria(TermCriteria::COUNT + TermCriteria::EPS, 1000, 1e-8));

cout << repErrorPerView << endl;

//cout << "Reprojection error for best " << numFrames - badFrames << " images --> " << rms << endl;

cout << rms << endl;

Mat Q;

// in stereo calib make struct Transformation {Rect and Proj}

stereoRectify(intParam.first.cameraMatrix, intParam.first.distCoeffs,

intParam.second.cameraMatrix, intParam.second.distCoeffs,

FD.first.imgSize, extParam.rotation, extParam.translation,

intParam.first.rectificationMatrix, intParam.second.rectificationMatrix,

intParam.first.projectionMatrix, intParam.second.projectionMatrix, extParam.dispToDepth,

0, -1, FD.first.imgSize, &intParam.first.regionOfInterest, &intParam.second.regionOfInterest);

Mat rot0, rot1, trans0, trans1, cameraMatrix0, cameraMatrix1;

decomposeProjectionMatrix(intParam.first.projectionMatrix, cameraMatrix0, rot0, trans0);

decomposeProjectionMatrix(intParam.second.projectionMatrix, cameraMatrix1, rot1, trans1);

cout << "Camera 1: " << endl << "Rotation: " << endl << rot0 << endl << "Translation: " << endl << trans0 << endl;

cout << "Camera 1: ROI H: " << endl << intParam.first.regionOfInterest.height << " W: " << intParam.first.regionOfInterest.width << endl;

cout << "Camera 2: " << endl << "Rotation: " << endl << rot1 << endl << "Translation: " << endl << trans1 << endl;

cout << "Camera 2: ROI H: " << endl << intParam.second.regionOfInterest.height << " W: " << intParam.second.regionOfInterest.width << endl;

Mat map1_1, map1_2, map2_1, map2_2;

initUndistortRectifyMap(intParam.first.cameraMatrix, intParam.first.distCoeffs,

intParam.first.rectificationMatrix, intParam.first.projectionMatrix,

Size(1440,1080), CV_16SC2, map1_1, map1_2);

initUndistortRectifyMap(intParam.second.cameraMatrix, intParam.second.distCoeffs,

intParam.second.rectificationMatrix, intParam.second.projectionMatrix,

Size(1440,1080), CV_16SC2, map2_1, map2_2);

Mat img1, img2; // Images to be read

Mat img1u, img2u; // Undistorted Imgs

Mat img1r, img2r; // Rectified Imgs

Mat img1u_Resize, img2u_Resize; // Undistorted resize

Mat img1r_Resize, img2r_Resize; // Rectified resize

Mat imgCombined, imgCombined_Resize; // Combined result for epilines

for (unsigned int i = 0; i < FD.first.allImgs.size(); i++) {

img1 = FD.first.allImgs[i];

img2 = FD.second.allImgs[i];

undistort(img1, img1u, intParam.first.cameraMatrix, intParam.first.distCoeffs);

undistort(img2, img2u, intParam.second.cameraMatrix, intParam.second.distCoeffs);

remap(img1, img1r, map1_1, map1_2, INTER_LINEAR);

remap(img2, img2r, map2_1, map2_2, INTER_LINEAR);

hconcat(img1r, img2r, imgCombined);

// draw horizontal line

for(int j = 0; j < imgCombined.rows; j += 16 ) {

line(imgCombined, Point(0, j), Point(imgCombined.cols, j), Scalar(0, 255, 0), 1, 8);

}

Size zero (0, 0);

resize(img1u, img1u_Resize, zero, 0.25, 0.25, INTER_LINEAR);

resize(img2u, img2u_Resize, zero, 0.25, 0.25, INTER_LINEAR);

resize(imgCombined, imgCombined_Resize, zero, 0.25, 0.25, INTER_LINEAR);

imshow("Undistorted 1", img1u_Resize);

imshow("Undistorted 2", img2u_Resize);

imshow("imgCombined", imgCombined_Resize);

char key = waitKey();

cout << "q/Q to quit, enter to see next image." << endl;

if ( key == 'q' || key == 'Q') {

break;

}

}
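The ID-matching step in the snippet above keeps only the ChArUco corners seen by both cameras, so that the i-th image point in each view refers to the same board corner. Here is a sketch of the same idea using only the standard library, assuming the IDs within a single view are unique. `Pt` is a hypothetical stand-in for cv::Point2f, and `MatchedCorners` and `keepCommon` are illustrative names, not part of OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

struct Pt { float x, y; };  // hypothetical stand-in for cv::Point2f

struct MatchedCorners {
    std::vector<int> ids;  // IDs seen in both views, in view-0 order
    std::vector<Pt> pts0;  // corners from view 0
    std::vector<Pt> pts1;  // corners from view 1, in the same order as pts0
};

MatchedCorners keepCommon(const std::vector<int>& ids0, const std::vector<Pt>& pts0,
                          const std::vector<int>& ids1, const std::vector<Pt>& pts1) {
    assert(ids0.size() == pts0.size());
    assert(ids1.size() == pts1.size());

    // Remember where each ID sits in view 1.
    std::unordered_map<int, std::size_t> where1;
    for (std::size_t j = 0; j < ids1.size(); ++j) where1[ids1[j]] = j;

    MatchedCorners out;
    for (std::size_t i = 0; i < ids0.size(); ++i) {
        auto it = where1.find(ids0[i]);
        if (it == where1.end()) continue;  // corner not visible in view 1
        out.ids.push_back(ids0[i]);
        out.pts0.push_back(pts0[i]);
        out.pts1.push_back(pts1[it->second]);
    }
    return out;
}
```

Because the output is built by ID lookup rather than by filtering each list separately, the two point lists stay paired even if the detector returns the IDs in a different order in each view. The surviving `ids` can also be used to pick the corresponding object points from the board's corner list.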

Here is an example of the output from decomposeProjectionMatrix. I'm not sure whether it is correct, and it may be the cause of the problem.

pair1

rms=0.463218

Camera 1:

Rotation:

[1, 0, 0;

0, 1, 0;

0, 0, 1]

Translation:

[0;

0;

0;

1]

Camera 1: ROI H:

0 W: 0

Camera 2:

Rotation:

[1, 0, 0;

0, 1, 0;

0, 0, 1]

Translation:

[0.8238847064740386;

6.715809563857897e-18;

-4.940656458412465e-324;

0.5667574352738454]

Camera 2: ROI H:

0 W: 0

pair2

rms=0.783253

Camera 1:

Rotation:

[0.9999999999999999, 0, 0;

0, 0.9999999999999999, 0;

0, 0, 1]

Translation:

[0;

0;

0;

1]

Camera 1: ROI H:

760 W: 1440

Camera 2:

Rotation:

[0.9999999999999999, 0, 0;

0, 0.9999999999999999, 0;

0, 0, 1]

Translation:

[0.766146582958673;

-8.866293832486486e-17;

0;

0.6426658645211748]

Camera 2: ROI H:

932 W: 830

pair3

rms=0.760754

Camera 1:

Rotation:

[1, 0, 0;

0, 1, 0;

0, 0, 1]

Translation:

[0;

0;

0;

1]

Camera 1: ROI H:

0 W: 0

Camera 2:

Rotation:

[1, 0, 0;

0, 1, 0;

0, 0, 1]

Translation:

[0.8221122187051663;

-6.908022583387919e-17;

-0;

0.5693254779611295]

Camera 2: ROI H:

0 W: 0
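The 4x1 "Translation" vectors printed above have a fourth component that is not 1, which suggests they are homogeneous coordinates. As far as I understand, decomposeProjectionMatrix returns the camera position in homogeneous form, but treat that interpretation as an assumption. A small sketch of converting such a vector to Euclidean coordinates so it can be compared with the measured baseline (`dehomogenise` is a hypothetical helper):

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Converts a homogeneous 4-vector (x, y, z, w) to Euclidean (x/w, y/w, z/w).
std::array<double, 3> dehomogenise(const std::array<double, 4>& h) {
    assert(std::abs(h[3]) > 1e-12);  // w == 0 would be a point at infinity
    return {{h[0] / h[3], h[1] / h[3], h[2] / h[3]}};
}
```

Applied to the Camera 2 vectors of the three pairs, the x component comes out at roughly 1.45, 1.19 and 1.44, in whatever unit the board's square length was given. If that unit is metres, these values are close to the 1.2 m to 1.4 m baselines of the physical setup.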

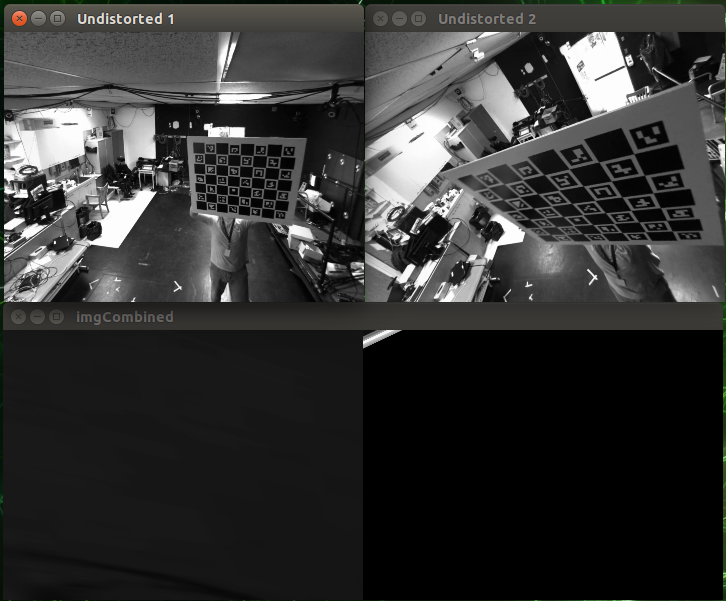

Here is an example of the rectified image pairs.

This is getting quite long, so I will stop here, but I can post any other information if needed. Thanks for the help!