Hello,

CONTEXT: I am doing some image analysis for a project and need to locate certain areas in my image. Those areas contain symbols I need later on, so I want to find the positions of the black rectangles. I currently have the black rectangle image (the template) and a mask that excludes the interior of the pattern. I list all the contours of the image. For each contour, I check whether its size is approximately what I am looking for; if it is, I crop the original image using the contour's bounding rect, resize the crop to the template size, and match the two. If the match score is suitable, I accept it and add the contour to the list of black rectangles.
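To make the size check concrete, here is a minimal sketch of a relative-tolerance filter, written in plain C++ so it does not depend on any OpenCV type. `Box`, `isCandidateSize`, and the `tolerance` parameter are hypothetical names used for illustration only; the actual criterion in the project may differ.

```cpp
#include <cassert>
#include <cstdlib>

// Hypothetical stand-in for a contour's bounding box (sizes in pixels).
struct Box {
    int width;
    int height;
};

// Returns true when both sides of `candidate` deviate from the template's
// sides by at most `tolerance`, given as a fraction (0.2 means +/-20 %).
bool isCandidateSize(const Box& candidate, const Box& templ, double tolerance) {
    assert(templ.width > 0 && templ.height > 0 && tolerance >= 0.0);
    const double dw = std::abs(candidate.width - templ.width) /
                      static_cast<double>(templ.width);
    const double dh = std::abs(candidate.height - templ.height) /
                      static_cast<double>(templ.height);
    return dw <= tolerance && dh <= tolerance;
}
```

Only contours that pass such a filter would then be cropped, resized to the template size, and matched.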

ISSUE: My problem is with the scores. I struggle to determine what an appropriate threshold would be. When the template is smaller than the image, you simply take the best location, but in my case (the crop and the template have the same size) I get a single score that is not obviously good or bad. I am currently exploring SQDIFF, so my idea was to compute the worst (highest) possible score and use it as a scale (0 to worst) to judge my matches. For that worst score I did the following:

    Mat imageWorstChannel = imageChannel.clone();

    for (int i = 0; i < imageWorstChannel.rows; i++) {
        Vec3b tempVec3b;
        for (int j = 0; j < imageWorstChannel.cols; j++) {
            tempVec3b = imageWorstChannel.at<Vec3b>(i, j);
            if (tempVec3b.val[0] < middleValue) tempVec3b.val[0] = 255 - tempVec3b.val[0];
            else tempVec3b.val[0] = tempVec3b.val[0];
            if (tempVec3b.val[1] < middleValue) tempVec3b.val[1] = 255 - tempVec3b.val[1];
            else tempVec3b.val[1] = tempVec3b.val[1];
            if (tempVec3b.val[2] < middleValue) tempVec3b.val[2] = 255 - tempVec3b.val[2];
            else tempVec3b.val[2] = tempVec3b.val[2];
            imageWorstChannel.at<Vec3b>(i, j) = tempVec3b;
        }
    }

My problem is that some matches get scores above this supposedly "worst" score. Is there something I misunderstand about how OpenCV computes the match? Is this the correct way to do what I want?
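For comparison, an upper bound on a plain sum of squared differences can be derived per sample: for an 8-bit template value t, the farthest possible image value is 0 when t >= 128 and 255 otherwise, so the largest difference is max(t, 255 - t). The sketch below sums the squares of those differences. It is plain C++ on flattened sample buffers rather than OpenCV code, `worstSqdiff` and `normalizedScore` are hypothetical helper names, and it assumes the mask acts as a binary weight (non-zero means "use this sample"), which may not match how a given OpenCV version applies the mask.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Upper bound on sum((T - I)^2) over all 8-bit patches I, for a fixed
// template T given as a flat buffer of samples (one entry per channel value).
// Assumption: samples with mask == 0 are ignored, any other mask value
// counts with weight 1.
double worstSqdiff(const std::vector<std::uint8_t>& templ,
                   const std::vector<std::uint8_t>& mask) {
    assert(templ.size() == mask.size());
    double worst = 0.0;
    for (std::size_t k = 0; k < templ.size(); ++k) {
        if (mask[k] == 0) continue;
        const int t = templ[k];
        // Largest distance from t to either end of the range [0, 255].
        const int farthest = std::max(t, 255 - t);
        worst += static_cast<double>(farthest) * farthest;
    }
    return worst;
}

// Rescales a raw score by the bound; returns 0 when no sample is unmasked.
double normalizedScore(double rawScore, double worst) {
    return worst > 0.0 ? rawScore / worst : 0.0;
}
```

The rescaled value stays within 0 to 1 only if the raw score was computed with the same conventions (8-bit samples, same mask weighting). For a 3-channel template, the mask would need one entry per channel sample in this layout.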

ADDITIONAL INFO:

- I use imread(..., cv::IMREAD_COLOR) for my template and my source image, and imread(..., cv::IMREAD_GRAYSCALE) for my mask.

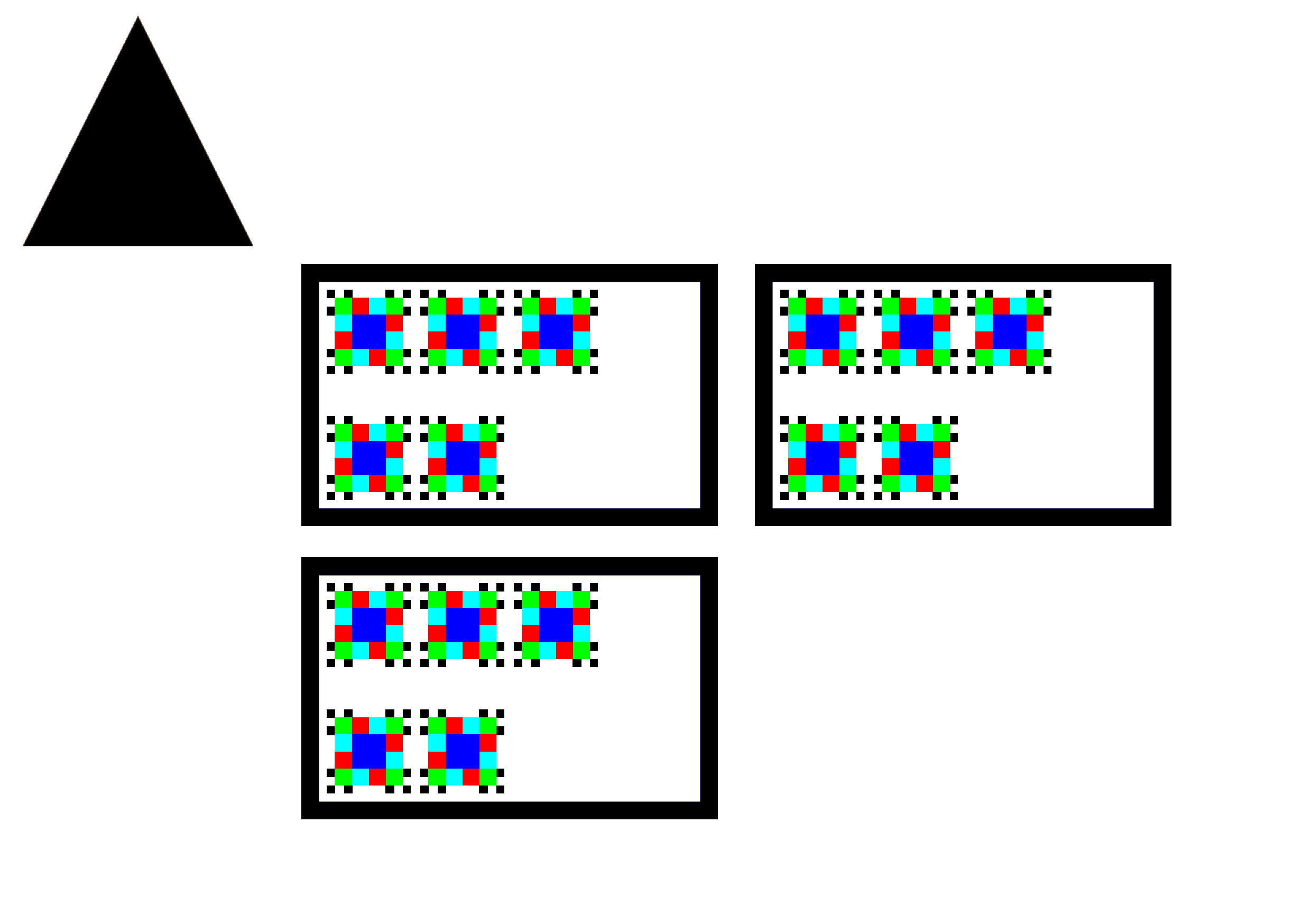

The Black Rectangle Image, i.e. the pattern I am searching for
