Hi, I am looking at tracking and triangulating the position of a flying target that is a couple of kilometres away (large portions of the camera view are sky!). This means that the camera pair used for triangulation needs quite a large baseline, at least hundreds of metres, to get any kind of accuracy in the triangulation. However, I am a bit confused about how to perform this task, especially the calibration part.
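As a rough sanity check on the baseline requirement, here is a small sketch using the usual rectified-stereo approximation, depth error ≈ Z² · δd / (f · B). The focal length (2000 px) and the matching error (0.5 px) are assumed values for illustration, not measurements from my setup, and the real cameras will not be perfectly parallel.

```python
def depth_error(range_m, baseline_m, focal_px, disparity_err_px):
    """Approximate depth uncertainty for a rectified, parallel stereo pair.

    Uses Z = f * B / d, so a disparity error dd maps to dZ ~ Z**2 * dd / (f * B).
    This is a first-order approximation, not a full error model.
    """
    return range_m ** 2 * disparity_err_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Assumed values: target at 2 km, 2000 px focal length, 0.5 px matching error.
    for baseline in (1.0, 10.0, 100.0, 300.0):
        err = depth_error(2000.0, baseline, 2000.0, 0.5)
        print(f"baseline {baseline:6.1f} m -> depth error ~ {err:8.2f} m")
```

Under these assumptions the error scales as 1/B, which is why I think a baseline of hundreds of metres is needed.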

For calibration of the camera pair, I thought I could use block matching and use the resulting correspondences to estimate the fundamental matrix (OpenCV findFundamentalMat). Since I know the camera intrinsics, I could determine the essential matrix from this, and since I also know the translation vector, I could calculate the rotation matrix. This would yield the relation between the cameras but not the world coordinates, so the final step would be to use a few known points (about 5-7 in world coordinates) with solvePnP to find the orientation of one of the cameras relative to the world frame. With this I could form the projection matrices for my cameras used in the triangulation step.
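To make the steps above concrete, here is a minimal NumPy sketch of the part between the fundamental matrix and the projection matrices. The function names are my own, and I assume the convention x2ᵀ F x1 = 0 with the first camera at the origin. As far as I understand, the decomposition gives two rotation candidates, and a check that triangulated points lie in front of both cameras is still needed to pick one (I believe OpenCV's recoverPose does this); the known translation then fixes the scale.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def essential_from_fundamental(F, K1, K2):
    """E = K2^T F K1, assuming x2^T F x1 = 0 in pixel coordinates."""
    return K2.T @ F @ K1


def rotation_candidates(E):
    """Return the two rotations consistent with E = [t]_x R (E known up to scale)."""
    U, _, Vt = np.linalg.svd(E)
    # Flip signs if needed so both factors are proper rotations (det = +1).
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
    return U @ W @ Vt, U @ W.T @ Vt


def projection_matrices(K1, K2, R, t):
    """P1 = K1 [I | 0] and P2 = K2 [R | t], with camera 1 as the reference frame."""
    P1 = K1 @ np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = K2 @ np.hstack([R, np.asarray(t, dtype=float).reshape(3, 1)])
    return P1, P2
```

Note that `t` here has to be expressed in the first camera's frame, which is one of the things I am unsure about when the baseline is only known in world coordinates.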

My questions are:

Is the general idea correct or is there something I am missing?

I have also looked at using stereoCalibrate, but my understanding is that it requires a calibration pattern, which is a bit unrealistic at this scale. Or could I use it for calibration instead?

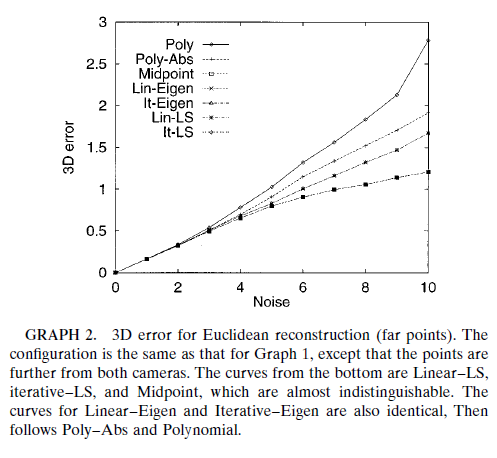

Finally, a short question on triangulation. There are multiple algorithms out there [Triangulation, Richard I. Hartley]. My understanding is that L2-norm solutions such as the polynomial algorithm are optimal for projection problems, but that for my task, where 3D-point accuracy is what matters, Linear-LS performs better (see GRAPH 2 from the paper)?
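For reference, this is how I currently understand the Linear-LS method: each image point gives two linear equations in the 3D point, the homogeneous coordinate is fixed to 1, and the resulting 4x3 system is solved by least squares. The sketch below is my own reading of it, not code from the paper, and the function name is made up.

```python
import numpy as np


def triangulate_linear_ls(P1, P2, x1, x2):
    """Triangulate one point from two views with an inhomogeneous linear solve.

    P1, P2: 3x4 projection matrices. x1, x2: (u, v) pixel coordinates.
    From u = (p1 . X) / (p3 . X) we get (u * p3 - p1) . X = 0, and similarly
    for v. Setting X = (x, y, z, 1) turns this into A3 @ (x, y, z) = -a4.
    """
    rows = []
    for P, (u, v) in ((P1, x1), (P2, x2)):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.asarray(rows, dtype=float)  # shape (4, 4)
    X, *_ = np.linalg.lstsq(A[:, :3], -A[:, 3], rcond=None)
    return X


def project(P, X):
    """Project a 3D point with a 3x4 matrix and return (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]
```

With noise-free projections this recovers the point exactly (up to floating-point error); my question is about how it behaves with noisy measurements compared to the polynomial method.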