Hello everyone, I have a video (video.avi) and a set of images of books to be detected in the video. My code doesn't detect the right book: it always seems to detect the same book, without distinguishing between them, and it also doesn't draw the rectangle around the book (not even around the one it always detects). I'm posting my code and a screenshot of the result I get:

    #include <opencv2/core.hpp>
    #include <opencv2/highgui.hpp>
    #include <opencv2/imgproc.hpp>
    #include <opencv2/opencv.hpp>
    #include <iostream>
    #include <opencv2/core/utils/filesystem.hpp>
    #include <opencv2/core/types_c.h>

    using namespace std;
    using namespace cv;

    int main() {
        vector<Mat> data;
        vector<string> fn;
        glob("C:/Users/albma/Desktop/Università/Computer Vision/labs/lab6/Lab 6 data/objects/*.png", fn, true);
        for (size_t k = 0; k < fn.size(); ++k)
        {
            Mat im = imread(fn[k]);
            if (im.empty()) continue; // only proceed if successful
            data.push_back(im);
        }

        cvtColor(data[0], data[0], COLOR_BGR2GRAY);
        Mat img_object = data[0].clone();

        Ptr<FeatureDetector> detector;
        vector<KeyPoint> keypoints_object, keypoints_scene;
        Mat descriptors_object;
        Mat descriptors_scene;

        VideoCapture cap = VideoCapture("video.mov");
        if (cap.isOpened()) {
            Mat img_scene;
            cap >> img_scene;
            cvtColor(img_scene, img_scene, COLOR_BGR2GRAY);

            Ptr<FeatureDetector> detector = ORB::create();
            Ptr<DescriptorExtractor> descriptor = ORB::create();
            detector->detect(img_object, keypoints_object);
            detector->detect(img_scene, keypoints_scene);
            descriptor->compute(img_object, keypoints_object, descriptors_object);
            descriptor->compute(img_object, keypoints_scene, descriptors_scene);

            cv::Ptr<cv::BFMatcher> matcher = cv::BFMatcher::create(cv::NORM_HAMMING);
            vector< std::vector<DMatch> > knn_matches;
            matcher->knnMatch(descriptors_object, descriptors_scene, knn_matches, 2);

            //-- Filter matches using the Lowe's ratio test
            const float ratio_thresh = 1.75f;
            std::vector<DMatch> good_matches;
            for (size_t i = 0; i < knn_matches.size(); i++)
            {
                if (knn_matches[i][0].distance < ratio_thresh * knn_matches[i][1].distance)
                {
                    good_matches.push_back(knn_matches[i][0]);
                }
            }

            //-- Draw matches
            Mat img_matches;
            drawMatches(img_object, keypoints_object, img_scene, keypoints_scene, good_matches, img_matches, Scalar::all(-1),
                Scalar::all(-1), std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

            //-- Localize the object
            std::vector<Point2f> obj;
            std::vector<Point2f> scene;
            for (size_t i = 0; i < good_matches.size(); i++)
            {
                //-- Get the keypoints from the good matches
                obj.push_back(keypoints_object[good_matches[i].queryIdx].pt);
                scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt);
            }

            Mat H = findHomography(obj, scene, RANSAC);

            //-- Get the corners from the image_1 ( the object to be "detected" )
            std::vector<Point2f> obj_corners(4);
            obj_corners[0] = Point2f(0, 0);
            obj_corners[1] = Point2f((float)img_object.cols, 0);
            obj_corners[2] = Point2f((float)img_object.cols, (float)img_object.rows);
            obj_corners[3] = Point2f(0, (float)img_object.rows);
            std::vector<Point2f> scene_corners(4);
            perspectiveTransform(obj_corners, scene_corners, H);

            //-- Draw lines between the corners (the mapped object in the scene - image_2 )
            line(img_matches, scene_corners[0] + Point2f((float)img_object.cols, 0),
                scene_corners[1] + Point2f((float)img_object.cols, 0), Scalar(0, 255, 0), 4);
            line(img_matches, scene_corners[1] + Point2f((float)img_object.cols, 0),
                scene_corners[2] + Point2f((float)img_object.cols, 0), Scalar(0, 255, 0), 4);
            line(img_matches, scene_corners[2] + Point2f((float)img_object.cols, 0),
                scene_corners[3] + Point2f((float)img_object.cols, 0), Scalar(0, 255, 0), 4);
            line(img_matches, scene_corners[3] + Point2f((float)img_object.cols, 0),
                scene_corners[0] + Point2f((float)img_object.cols, 0), Scalar(0, 255, 0), 4);

            //-- Show detected matches
            resize(img_matches, img_matches, Size(img_matches.cols / 2, img_matches.rows / 2));
            imshow("Good Matches & Object detection", img_matches);
        }

        waitKey(0);
        return -1;
    }

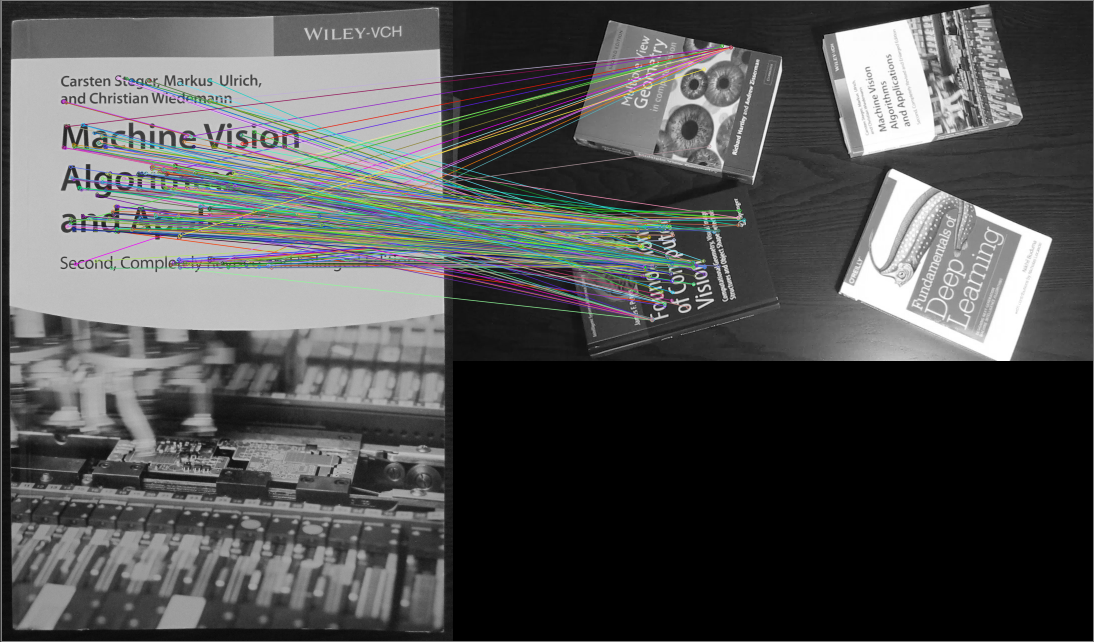

And that's the result:

I don't understand what the problem with my code may be. Can anybody help me? Thank you!
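A few details in the code above look like plausible culprits and may be worth checking first. The scene descriptors are computed from `img_object` rather than `img_scene`, only `data[0]` and the first video frame are ever used, and `ratio_thresh` is 1.75. Because `knnMatch` returns neighbours sorted by increasing distance, the best distance is never larger than the second best, so any ratio of 1 or more lets essentially every match through. The sketch below illustrates just that last point in plain C++ without OpenCV; `NeighbourPair` and `ratioTest` are hypothetical names introduced for illustration, and 0.75 is only an assumed, commonly used starting value.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the two distances knnMatch(k = 2) yields per query:
// `best` is the nearest neighbour's distance, `second` the next one (best <= second).
struct NeighbourPair {
    float best;
    float second;
};

// Returns the indices of the pairs that pass Lowe's ratio test,
// i.e. whose best distance is clearly smaller than the second best.
std::vector<std::size_t> ratioTest(const std::vector<NeighbourPair>& pairs, float ratio) {
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < pairs.size(); ++i) {
        if (pairs[i].best < ratio * pairs[i].second) {
            kept.push_back(i);
        }
    }
    return kept;
}
```

With the pairs (10, 40), (30, 32) and (20, 21), a ratio of 1.75 keeps all three, while 0.75 keeps only the first, which is the only one whose best match is distinctly better than its runner-up. If the filter keeps ambiguous matches, `findHomography` may receive mostly outliers, which could explain a missing or degenerate rectangle.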