Hi,

I am a junior college student who is new to computer vision. My classmates and I are currently working on a self-driving electric car project.

We are planning to use a stereo vision system to detect obstacles at a distance of at least 10 meters.

Our idea is to use YOLO to find possible obstacles on the road (e.g. pedestrians, cars, bicycles), use the center point of each bounding box as the object's pixel point in the image, and then use this image point to find the 3D point of the object.
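
As a minimal sketch of the bounding-box step (not our actual code), assuming the detector reports each box as a top-left corner plus width and height in pixels; the function name `bbox_center` and the sample box are hypothetical:

```python
def bbox_center(x, y, w, h):
    """Return the (u, v) pixel center of a box given as top-left corner plus size."""
    return (x + w / 2.0, y + h / 2.0)


# Hypothetical detection: top-left corner at (100, 50), 40 px wide, 80 px tall.
u, v = bbox_center(100, 50, 40, 80)
print(u, v)
```

If the detector returns normalized or center-based boxes instead, the conversion would need to be adapted accordingly.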

After searching the internet, I found that I can first get the projection matrices of the two cameras with solvePnP, and then find the 3D point of the object by calling triangulatePoints with the two projection matrices and the object's pixel points in the two images taken by the two cameras.

Next, I wanted to know how the error of the 3D coordinate estimate changes with the distance of the object, so I did an experiment.

In the experiment:

- I use a Microsoft LifeCam HD-3000 as the right camera and a Microsoft LifeCam VX-800 as the left camera

- the two cameras have different focal lengths

- both images have a resolution of 640 x 480

- the chessboard squares are 3 cm x 3 cm

- the baseline is 60 cm

Here is what I did.

First, I calibrate the two cameras separately to get the camera matrix and distortion coefficients of each camera. After that, I use the getOptimalNewCameraMatrix function to get the new camera matrix and ROI of each camera.

new_cameraMatrixLeft, roi_left = cv2.getOptimalNewCameraMatrix(cameraMatrixLeft, distCoeffsLeft, (width, height), 1, (width, height))

new_cameraMatrixRight, roi_right = cv2.getOptimalNewCameraMatrix(cameraMatrixRight, distCoeffsRight, (width, height), 1, (width, height))

Second, I place a chessboard 120 cm in front of the cameras. Here is the left camera image:

C:\fakepath\120cm_left_image.jpg

Here is the right camera image:

C:\fakepath\120cm_right_image.jpg

I set the upper-right corner of the chessboard as the origin of the world coordinate system, with the z-axis pointing into the image, and use the chessboard corners as the object points.

C:\fakepath\a.jpg

The world coordinates of the object points are (x, y, z):

C:\fakepath\b.jpg

Third, I undistort the images with the undistort function. Afterwards, the left image is 637 x 476 and the right image is 604 x 444.

undistort_left_image = cv2.undistort(frame_left, cameraMatrixLeft, distCoeffsLeft, None, new_cameraMatrixLeft)

undistort_right_image = cv2.undistort(frame_right, cameraMatrixRight, distCoeffsRight, None, new_cameraMatrixRight)

Then I use OpenCV's findChessboardCorners and cornerSubPix functions to find the image points in the undistorted images of the two cameras.

x, y, w, h = roi_left

undistort_left_image = undistort_left_image[y:y+h, x:x+w]

x, y, w, h = roi_right

undistort_right_image = undistort_right_image[y:y+h, x:x+w]

gray_l = cv2.cvtColor(undistort_left_image, cv2.COLOR_BGR2GRAY)

gray_r = cv2.cvtColor(undistort_right_image, cv2.COLOR_BGR2GRAY)

ret_l, corners_l = cv2.findChessboardCorners(gray_l, (column, row), None)

ret_r, corners_r = cv2.findChessboardCorners(gray_r, (column, row), None)

corners2_l = cv2.cornerSubPix(gray_l, corners_l, (11, 11), (-1, -1), criteria)

corners2_r = cv2.cornerSubPix(gray_r, corners_r, (11, 11), (-1, -1), criteria)

imgpoints_l.append(corners2_l)

imgpoints_r.append(corners2_r)

imgpoints_l_undistort = imgpoints_l[0]

imgpoints_r_undistort = imgpoints_r[0]

Fourth, I use the solvePnP function to get the projection matrices of the two cameras from the 63 object points and the 63 corresponding left and right image points.

_, rotationLeft, translationLeft = cv2.solvePnP(objpoints[0], imgpoints_l_undistort, cameraMatrixLeft, distCoeffsLeft)

_, rotationRight, translationRight = cv2.solvePnP(objpoints[0], imgpoints_r_undistort, cameraMatrixRight, distCoeffsRight)

rmtxLeft, _ = cv2.Rodrigues(rotationLeft)

rmtxRight, _ = cv2.Rodrigues(rotationRight)

rtLeft = np.hstack((rmtxLeft, translationLeft))

rtRight = np.hstack((rmtxRight, translationRight))

projectionMatrixLeft = np.dot(cameraMatrixLeft, rtLeft)

projectionMatrixRight = np.dot(cameraMatrixRight, rtRight)
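
One way to sanity-check projection matrices like these is to project the object points back into the image and compare them with the detected corners. The following is only a sketch under assumptions: the helper name `reprojection_rmse`, the camera matrix, and the pose are made up for illustration, and the check ignores lens distortion, so it is only meaningful when the image points are already undistorted and the projection matrix is built from the matching camera matrix.

```python
import numpy as np


def reprojection_rmse(P, object_points, image_points):
    """RMS pixel distance between projected object points and measured image points.

    P is a 3x4 projection matrix, object_points is N x 3, image_points is N x 2
    (an N x 1 x 2 array as returned by findChessboardCorners is also accepted).
    """
    object_points = np.asarray(object_points, dtype=float).reshape(-1, 3)
    image_points = np.asarray(image_points, dtype=float).reshape(-1, 2)
    ones = np.ones((len(object_points), 1))
    projected = (P @ np.hstack([object_points, ones]).T).T
    projected = projected[:, :2] / projected[:, 2:3]  # divide by the third row
    residuals = projected - image_points
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


# Synthetic example with made-up intrinsics and a board 120 cm in front of the camera.
K = np.array([[700.0, 0.0, 320.0], [0.0, 700.0, 240.0], [0.0, 0.0, 1.0]])
Rt = np.hstack([np.eye(3), np.array([[0.0], [0.0], [120.0]])])
P = K @ Rt

# 9 x 7 = 63 corners on a 3 cm grid in the z = 0 plane.
grid = np.array([[3.0 * i, 3.0 * j, 0.0] for j in range(7) for i in range(9)])
homogeneous = np.hstack([grid, np.ones((len(grid), 1))])
pixels = (P @ homogeneous.T).T
pixels = pixels[:, :2] / pixels[:, 2:3]

rmse = reprojection_rmse(P, grid, pixels)
print(rmse)
```

With real data, a value well above about one pixel would suggest that the camera matrix, distortion handling, or image points used for solvePnP are not consistent with each other.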

Fifth, I move the chessboard 60 cm farther away, so the world coordinate of its upper-right corner is now (0, 0, 60) cm and the chessboard is 180 cm in front of the cameras. Here is the left camera image:

C:\fakepath\d.jpg

Sixth, I take the images and manually pick the image points of the upper-right corner in both cameras. Then I use undistortPoints to get the undistorted image points of that corner, and use triangulatePoints to estimate its world coordinate from the projection matrices and undistorted image points of the two cameras.

imgpoints_l_undistort = cv2.undistortPoints( np.array([[[imgpoints_x_l, imgpoints_y_l]]], np.float32), cameraMatrixLeft, distCoeffsLeft, None, new_cameraMatrixLeft)

imgpoints_r_undistort = cv2.undistortPoints( np.array([[[imgpoints_x_r, imgpoints_y_r]]], np.float32), cameraMatrixRight, distCoeffsRight, None, new_cameraMatrixRight)

imgpoints_l_undistort = np.array([[[imgpoints_x_l, imgpoints_y_l]]], np.float32)

imgpoints_r_undistort = np.array([[[imgpoints_x_r, imgpoints_y_r]]], np.float32)

l = imgpoints_l_undistort.reshape(imgpoints_l_undistort.shape[1], 2).T

r = imgpoints_r_undistort.reshape(imgpoints_r_undistort.shape[1], 2).T

p4d = cv2.triangulatePoints(projectionMatrixLeft, projectionMatrixRight, l, r)
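
triangulatePoints returns 4 x N homogeneous coordinates, so each column has to be divided by its fourth element to get (x, y, z). The sketch below illustrates that step with a plain NumPy linear (DLT) triangulation used as a stand-in; it is not necessarily what OpenCV does internally. The function names, the camera matrix, and the test point are all assumptions for illustration.

```python
import numpy as np


def triangulate_dlt(P1, P2, pt1, pt2):
    """Linear (DLT) triangulation of one point; returns homogeneous (X, Y, Z, W)."""
    u1, v1 = pt1
    u2, v2 = pt2
    # Each view contributes two linear constraints on the homogeneous 3D point.
    A = np.vstack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    return vt[-1]


def to_euclidean(X):
    """Convert homogeneous (X, Y, Z, W) to (x, y, z) by dividing by W."""
    return X[:3] / X[3]


def project(P, X):
    """Project a homogeneous 3D point to pixel coordinates."""
    x = P @ X
    return x[:2] / x[2]


# Made-up intrinsics; the second camera sits 60 cm to the right of the first.
K = np.array([[700.0, 0.0, 320.0], [0.0, 700.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-60.0], [0.0], [0.0]])])

point = np.array([10.0, 5.0, 300.0, 1.0])  # hypothetical point 3 m away, in cm
X = to_euclidean(triangulate_dlt(P1, P2, project(P1, point), project(P2, point)))
print(X)
```

For the OpenCV call above, the equivalent conversion would be `p4d[:3] / p4d[3]`.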

Seventh, I repeat the fifth and sixth steps until the distance between the chessboard and the cameras is 1020 cm.

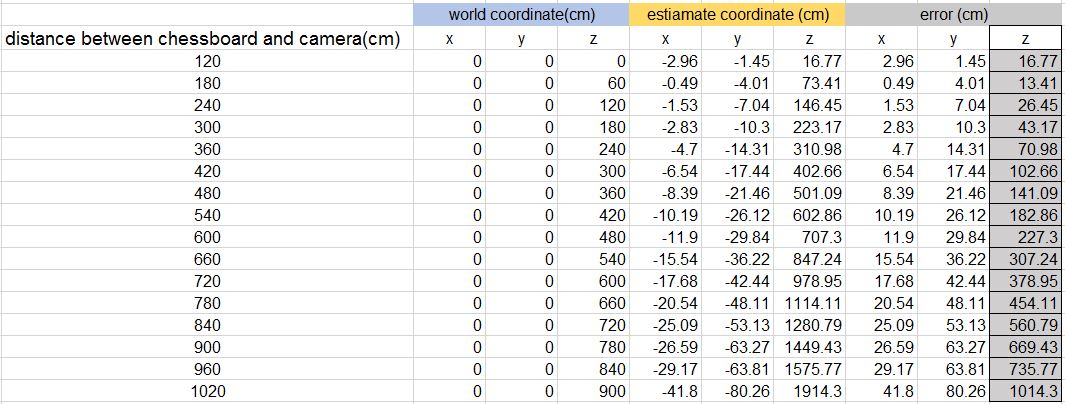

Here is the result:

C:\fakepath\c.JPG

As you can see, when the distance is 420 cm, the z (depth) estimation error is already 1 meter, and at 1020 cm the error reaches 10 meters, which is too large.
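
For comparison, here is a rough sketch of how depth uncertainty is usually expected to grow with distance in an idealized stereo pair. It assumes rectified, parallel cameras with the same focal length, where Z = f * B / d, so a disparity error of Δd pixels gives a first-order depth error of about Z² * Δd / (f * B). The setup in this experiment is not rectified and uses different focal lengths, so this is only a guide. The focal length of 700 px and the 1 px disparity error are made-up values, not measured ones.

```python
def depth_error(z, focal_px, baseline, disparity_error_px=1.0):
    """First-order depth error for an idealized rectified stereo pair.

    z and baseline share one length unit (meters here); focal_px is in pixels.
    """
    return z ** 2 * disparity_error_px / (focal_px * baseline)


# Baseline of 0.6 m as in the experiment; focal length of 700 px is an assumption.
for z in (1.2, 4.2, 10.2):
    print(f"z = {z:5.1f} m -> expected depth error ~ {depth_error(z, 700.0, 0.6):.3f} m")
```

The quadratic growth with distance is the main point; the absolute numbers depend entirely on the assumed focal length and matching accuracy.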

Our goal is a coordinate error of at most 1 meter when estimating an object 10 meters away, so my questions are:

- Did I do anything wrong in the experiment? Or is there something that can be adjusted to improve the result?

- Or is my idea actually wrong? I have never seen a paper or anything on the internet that estimates 3D coordinates or depth the way I did.

- How does triangulatePoints work?

- I have seen many people create a depth map when estimating depth, and I know I can use the reprojectImageTo3D function to transform the depth map into 3D coordinates. But I only have webcams with different focal lengths. Will this work? The depth map model seems to assume cameras with the same focal length.

- Will increasing the image resolution improve the accuracy significantly?

Sorry, the question isn't complete yet; I will finish it soon. Any advice is welcome, thanks.