I have many hours of video captured by an infrared camera that marine biologists placed in a canal. Their research goal is to count the herring that swim past the camera. Watching every video is too time-consuming, so they'd like to use computer vision to filter out the frames that do not contain fish. They can tolerate some false positives and false negatives, so they don't need a sophisticated machine-learning approach at this point.

I am using a process that looks like this for each frame:

- Load the frame from the video

- Apply a Gaussian (or median) blur

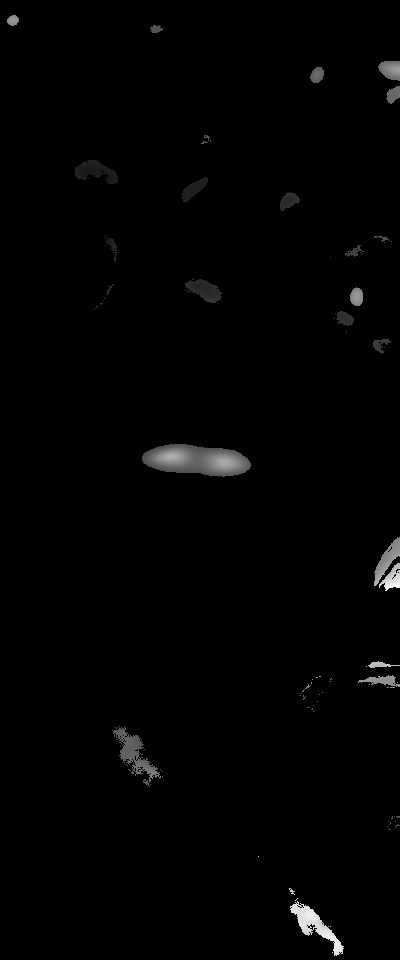

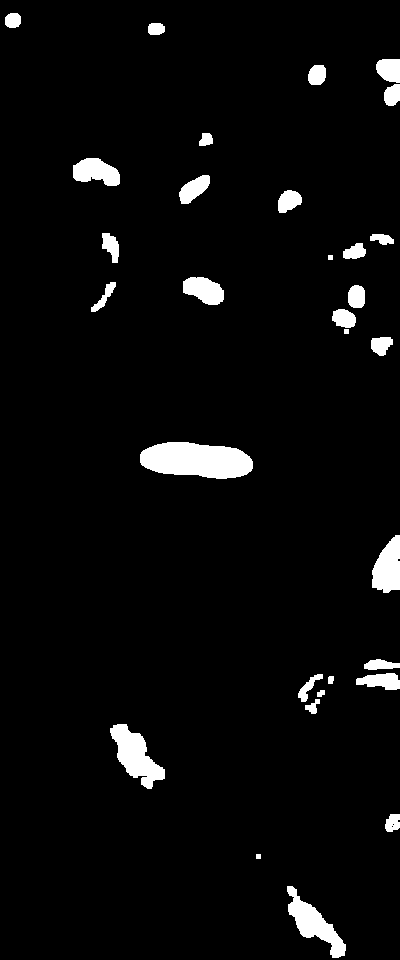

- Subtract the background using the `BackgroundSubtractorMOG2` class

- Apply a brightness threshold (the fish tend to reflect sunlight by day, or an infrared light that is turned on at night), then dilate the result

- Compute the total area of all of the contours in the image

- If this area is greater than a certain percentage of the frame, the frame may contain fish, so extract it

To find optimal parameters for these operations (the blur algorithm and its kernel size, the brightness threshold, etc.), I took a manually tagged video and ran many versions of the detector over it, using an evolutionary algorithm to search for the best parameter set. However, even the best set I have found still produces many false negatives (about two-thirds of the fish are not detected) and many false positives (about 80% of the detected frames actually contain no fish).
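The fitness function that the evolutionary search maximizes decides which trade-off it finds. One option is an F-score computed per frame against the manual tags. Plugging in the rough figures above (precision ≈ 0.2, recall ≈ 1/3, which mixes a per-frame and a per-fish rate) gives F1 = 2 · 0.2 · (1/3) / (0.2 + 1/3) = 0.25. A minimal sketch, assuming one boolean tag and one boolean prediction per frame; the function names are hypothetical.

```python
from typing import Sequence, Tuple


def confusion_counts(predicted: Sequence[bool],
                     labeled: Sequence[bool]) -> Tuple[int, int, int]:
    """Count per-frame true positives, false positives and false negatives."""
    if len(predicted) != len(labeled):
        raise ValueError("predicted and labeled must have the same length")
    tp = sum(p and l for p, l in zip(predicted, labeled))
    fp = sum(p and not l for p, l in zip(predicted, labeled))
    fn = sum(l and not p for p, l in zip(predicted, labeled))
    return tp, fp, fn


def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (beta > 1 favors recall)."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def fitness(predicted: Sequence[bool], labeled: Sequence[bool],
            beta: float = 2.0) -> float:
    """Score one parameter set on the tagged video; higher is better."""
    tp, fp, fn = confusion_counts(predicted, labeled)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return f_beta(precision, recall, beta)
```

Setting `beta` above 1 weights recall more heavily. That may suit this task if a missed fish costs more than the few seconds spent reviewing an empty frame. The value 2 is only an example.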

I'm looking for ways to improve the algorithm. Could I identify fish by fitting an ellipse to each contour and checking its angle? (They tend to swim horizontally, or at an upward or downward angle, but not vertically or head-on.) Should I normalize the lighting conditions somehow, so that the same brightness threshold works both day and night?