Hey everyone,

initially I created an issue for this question, but apparently it is no bug (sorry for that), so I am hoping that you can help me to get it right :)

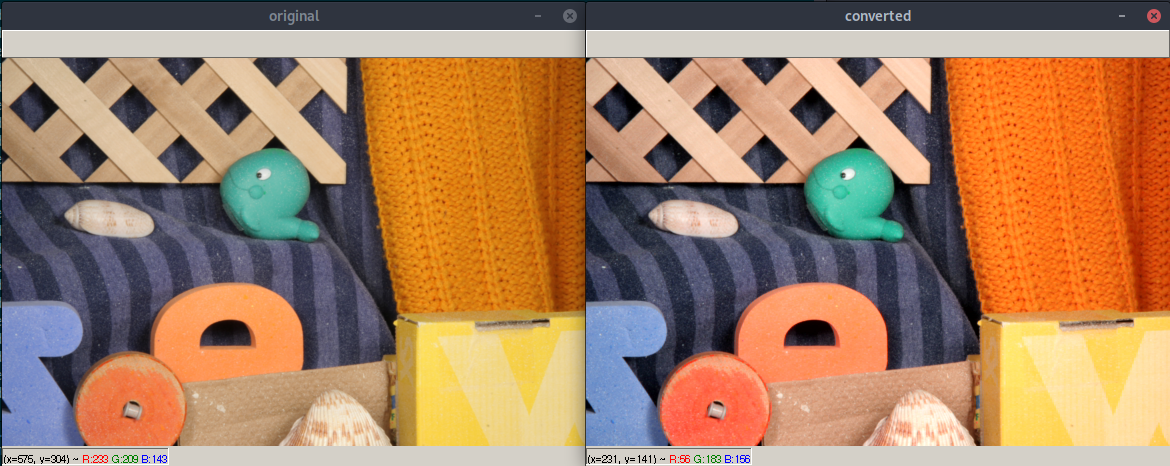

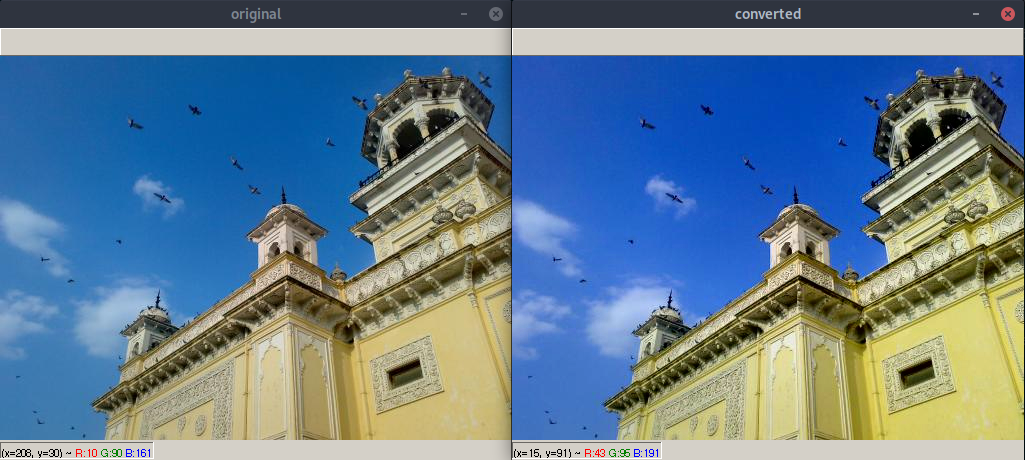

I have an image dataset stored as RGB images. I need to convert these RGB images to YUYV images. While it is possible to convert images from YUYV (also known as YUV422) into RGB via COLOR_YUV2RGB_YUYV, there is no reverse operation for this. I thought it should be simple to implement this with the YUV conversion and subsampling, but when I attempted that and afterwards converted the image back to RGB, it looks slightly different and more saturated.

I used this sample image. This snipped is a stand alone working sample of what I did and what seems to go wrong.

import cv2

import numpy as np

# Load sample image

img_bgr = cv2.imread("home.jpg")

cv2.imshow("original", img_bgr)

cv2.waitKey(0)

# Convert from BGR to YUV

img_yuv = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2YUV)

# Converting directly back from YUV to BGR results in an (almost) identical image

img_bgr_restored = cv2.cvtColor(img_yuv, cv2.COLOR_YUV2BGR)

cv2.imshow("converted", img_bgr_restored)

cv2.waitKey(0)

diff = img_bgr.astype(np.int16) - img_bgr_restored

print("mean/stddev diff (BGR => YUV => BGR)", np.mean(diff), np.std(diff))

# Create YUYV from YUV

y0 = np.expand_dims(img_yuv[...,0][::,::2], axis=2)

u = np.expand_dims(img_yuv[...,1][::,::2], axis=2)

y1 = np.expand_dims(img_yuv[...,0][::,1::2], axis=2)

v = np.expand_dims(img_yuv[...,2][::,::2], axis=2)

img_yuyv = np.concatenate((y0, u, y1, v), axis=2)

img_yuyv_cvt = img_yuyv.reshape(img_yuyv.shape[0], img_yuyv.shape[1] * 2, int(img_yuyv.shape[2] / 2))

# Convert back to BGR results in more saturated image.

img_bgr_restored = cv2.cvtColor(img_yuyv_cvt, cv2.COLOR_YUV2BGR_YUYV)

cv2.imshow("converted", img_bgr_restored)

cv2.waitKey(0)

diff = img_bgr.astype(np.int16) - img_bgr_restored

print("mean/stddev diff (BGR => YUV => YUYV => BGR)", np.mean(diff), np.std(diff))

Output:

mean/stddev diff (BGR => YUV => BGR) 0.044821845160590276 0.539779183532531

mean/stddev diff (BGR => YUV => YUYV => BGR) -0.3019137912326389 11.760396080102238

The high standard deviation shows that the resulting image differs quiet significantly from the original image. I would expect this to be a lot lower, in the order of converting to and from plain YUV.

So my conversion from YUV to YUYV is probably wrong. How would I do it properly?

Thanks and kind regards,

Jan