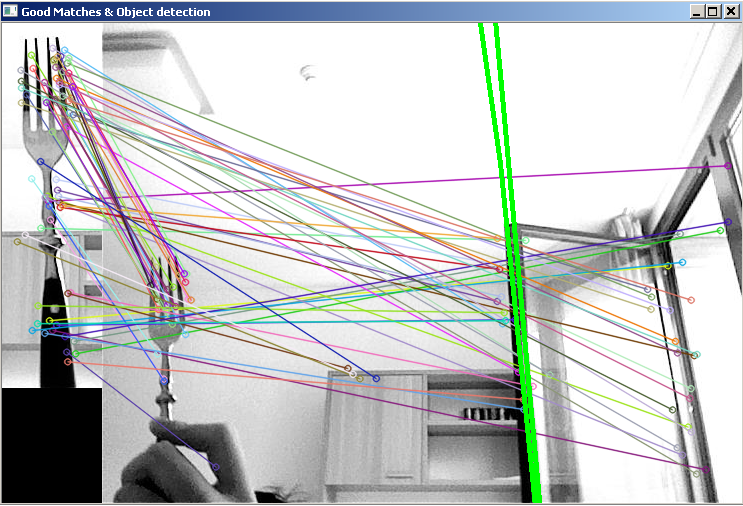

Hi all, I need to improve the robustness of my object detection. I need very reliable detection and have no time constraints. As you can see in the image, it produces false positives and gives wrong results.

Do you have any ideas on how I can increase the robustness? I use the brute-force matcher because I thought it would find the best matches, but it doesn't.
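To make concrete what I expect from the brute-force matcher: for each object descriptor it returns the nearest scene descriptor, and with `crossCheck` set to true it keeps a pair only when each descriptor is the other's nearest neighbour. The sketch below reproduces that idea on toy 1-D "descriptors" without OpenCV. The names `nearest` and `crossCheckMatch` are made up for this illustration and are not OpenCV functions.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Index of the value in `pool` nearest to `x` (pool must be non-empty).
static std::size_t nearest(float x, const std::vector<float>& pool)
{
    std::size_t best = 0;
    for (std::size_t j = 1; j < pool.size(); ++j)
        if (std::fabs(pool[j] - x) < std::fabs(pool[best] - x)) best = j;
    return best;
}

// Mutual nearest-neighbour pairs as (query index, train index).
// Hypothetical helper: a toy version of brute-force matching with cross-check.
std::vector<std::pair<std::size_t, std::size_t> >
crossCheckMatch(const std::vector<float>& query, const std::vector<float>& train)
{
    std::vector<std::pair<std::size_t, std::size_t> > pairs;
    if (query.empty() || train.empty()) return pairs;

    for (std::size_t i = 0; i < query.size(); ++i) {
        const std::size_t j = nearest(query[i], train);   // query -> train
        if (nearest(train[j], query) == i)                // train -> query
            pairs.push_back(std::make_pair(i, j));
    }
    return pairs;
}
```

Even a mutual nearest neighbour is only the closest descriptor available, not necessarily a correct correspondence, which may be part of why I still get false positives.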

Here is the code:

    #include <stdio.h>
    #include <iostream>
    #include <opencv2/core/core.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <opencv2/objdetect/objdetect.hpp>
    #include <opencv2/legacy/legacy.hpp>
    #include <opencv2/legacy/compat.hpp>
    #include <opencv2/flann/flann.hpp>
    #include <opencv2/nonfree/features2d.hpp>
    #include <opencv2/nonfree/nonfree.hpp>

    using namespace cv;

    void readme();

    /** @function main */
    int main( int argc, char** argv )
    {
      if( argc != 3 )
      { readme(); return -1; }

      Mat img_object = imread( argv[1], CV_LOAD_IMAGE_GRAYSCALE );
      Mat img_scene = imread( argv[2], CV_LOAD_IMAGE_GRAYSCALE );

      if( !img_object.data || !img_scene.data )
      { std::cout<< " --(!) Error reading images " << std::endl; return -1; }

      //-- Step 1: Detect the keypoints using SURF Detector
      int minHessian = 400;//20000
      SurfFeatureDetector detector( minHessian );
      std::vector<KeyPoint> keypoints_object, keypoints_scene;
      detector.detect( img_object, keypoints_object );
      detector.detect( img_scene, keypoints_scene );

      //-- Step 2: Calculate descriptors (feature vectors)
      SurfDescriptorExtractor extractor;
      Mat descriptors_object, descriptors_scene;
      extractor.compute( img_object, keypoints_object, descriptors_object );
      extractor.compute( img_scene, keypoints_scene, descriptors_scene );

      //-- Step 3: Matching descriptor vectors using a brute-force matcher.
      //   crossCheck = true keeps only mutual nearest neighbours, so
      //   matches.size() can be smaller than descriptors_object.rows.
      //FlannBasedMatcher matcher;
      BFMatcher matcher(NORM_L2,true);
      std::vector< DMatch > matches;
      matcher.match( descriptors_object, descriptors_scene, matches );

      double max_dist = 0; double min_dist = 100;

      //-- Quick calculation of max and min distances between keypoints
      for( size_t i = 0; i < matches.size(); i++ )
      {
        double dist = matches[i].distance;
        if( dist < min_dist ) min_dist = dist;
        if( dist > max_dist ) max_dist = dist;
      }

      printf("-- Max dist : %f \n", max_dist );
      printf("-- Min dist : %f \n", min_dist );

      //-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )
      std::vector< DMatch > good_matches;
      for( size_t i = 0; i < matches.size(); i++ )
      {
        if( matches[i].distance < 3*min_dist )
        { good_matches.push_back( matches[i]); }
      }

      Mat img_matches;
      drawMatches( img_object, keypoints_object, img_scene, keypoints_scene,
                   good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                   std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

      //-- Localize the object
      std::vector<Point2f> obj;
      std::vector<Point2f> scene;
      for( size_t i = 0; i < good_matches.size(); i++ )
      {
        //-- Get the keypoints from the good matches
        obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );
        scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );
      }

      //-- A homography needs at least 4 point pairs
      if( good_matches.size() < 4 )
      { std::cout << " --(!) Not enough good matches " << std::endl; return -1; }

      Mat H = findHomography( obj, scene, CV_RANSAC );

      //-- Get the corners from the image_1 ( the object to be "detected" )
      std::vector<Point2f> obj_corners(4);
      obj_corners[0] = Point2f( 0, 0 );
      obj_corners[1] = Point2f( img_object.cols, 0 );
      obj_corners[2] = Point2f( img_object.cols, img_object.rows );
      obj_corners[3] = Point2f( 0, img_object.rows );
      std::vector<Point2f> scene_corners(4);
      perspectiveTransform( obj_corners, scene_corners, H);

      //-- Draw lines between the corners (the mapped object in the scene - image_2 )
      line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

      //-- Show detected matches
      imshow( "Good Matches & Object detection", img_matches );
      waitKey(0);
      return 0;
    }

    /** @function readme */
    void readme()
    { std::cout << " Usage: ./SURF_descriptor <img1> <img2>" << std::endl; }

And this is the image of the output:

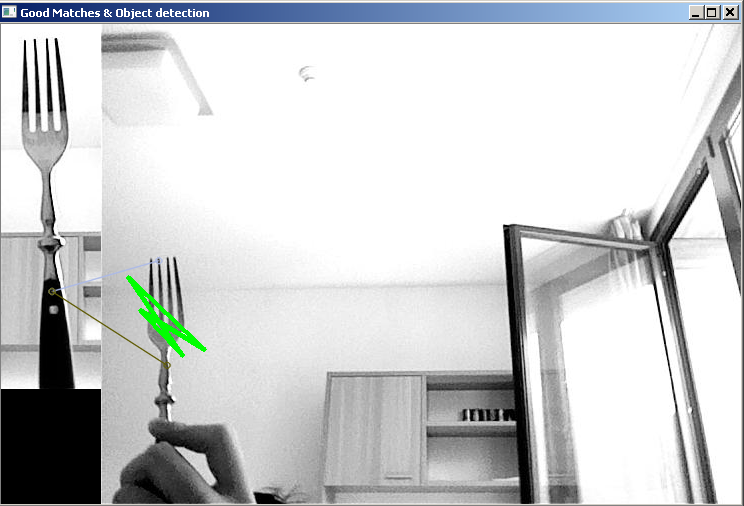
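To isolate the filtering step, the `3*min_dist` rule from the listing can be reproduced without OpenCV. This is only a sketch: `filterByMinDistance` and its `floor` parameter are hypothetical names introduced here, and plain `float` values stand in for `DMatch::distance`. It shows that the cutoff depends entirely on the single best match.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Return the indices of the distances below max(factor * min_dist, floor).
// `floor` is a hypothetical safeguard (not in my listing) that stops the
// cutoff from collapsing when the best distance is close to zero.
std::vector<std::size_t> filterByMinDistance(const std::vector<float>& distances,
                                             float factor, float floor = 0.0f)
{
    std::vector<std::size_t> kept;
    if (distances.empty()) return kept;

    const float minDist = *std::min_element(distances.begin(), distances.end());
    const float cutoff = std::max(factor * minDist, floor);

    for (std::size_t i = 0; i < distances.size(); ++i)
        if (distances[i] < cutoff) kept.push_back(i);
    return kept;
}
```

With `factor = 3` and no floor, a best distance of exactly zero gives a cutoff of zero and rejects every match, while a weak best match raises the cutoff and lets poor matches through. So this filter alone may not be a reliable way to suppress false positives.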

I've modified the code as you suggested, but now it is far too restrictive!

New code:

    #include <stdio.h>
    #include <iostream>
    #include <opencv2/core/core.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <opencv2/calib3d/calib3d.hpp>
    #include <opencv2/objdetect/objdetect.hpp>
    #include <opencv2/legacy/legacy.hpp>
    #include <opencv2/legacy/compat.hpp>
    #include <opencv2/flann/flann.hpp>
    #include <opencv2/nonfree/features2d.hpp>
    #include <opencv2/nonfree/nonfree.hpp>

    using namespace cv;

    void readme();

    /** @function main */
    int main( int argc, char** argv )
    {
      if( argc != 3 )
      { readme(); return -1; }

      Mat img_object = imread( argv[1], CV_LOAD_IMAGE_GRAYSCALE );
      Mat img_scene = imread( argv[2], CV_LOAD_IMAGE_GRAYSCALE );

      if( !img_object.data || !img_scene.data )
      { std::cout<< " --(!) Error reading images " << std::endl; return -1; }

      //-- Step 1: Detect the keypoints using the SIFT detector.
      //   Note: in OpenCV 2.4 the first SIFT constructor argument is nfeatures
      //   (the number of best keypoints to retain), not a Hessian threshold.
      int minHessian = 400;//20000
      //SurfFeatureDetector detector( minHessian );
      SiftFeatureDetector detector( minHessian );
      std::vector<KeyPoint> keypoints_object, keypoints_scene;
      detector.detect( img_object, keypoints_object );
      detector.detect( img_scene, keypoints_scene );

      //-- Step 2: Calculate descriptors (feature vectors)
      //SurfDescriptorExtractor extractor;
      SiftDescriptorExtractor extractor;
      Mat descriptors_object, descriptors_scene;
      extractor.compute( img_object, keypoints_object, descriptors_object );
      extractor.compute( img_scene, keypoints_scene, descriptors_scene );

      //-- Step 3: Matching descriptor vectors using FLANN matcher
      FlannBasedMatcher matcher;
      //BFMatcher matcher(NORM_L2,true);
      std::vector< std::vector< DMatch > > matches;
      //matcher.match( descriptors_object, descriptors_scene, matches );
      matcher.knnMatch( descriptors_object, descriptors_scene, matches, 2 );

      //-- Ratio test: keep the best match only if it is clearly closer
      //   than the second-best one
      std::vector< DMatch > good_matches;
      double RatioT = 0.75;
      for( size_t i = 0; i < matches.size(); i++ )
      {
        if( matches[i].empty() ) continue;
        if( (matches[i].size() == 1) ||
            (matches[i][0].distance < RatioT * matches[i][1].distance) )
        { good_matches.push_back( matches[i][0] ); }
      }

      /*
      double max_dist = 0; double min_dist = 100;

      //-- Quick calculation of max and min distances between keypoints
      for( unsigned int i = 0; i < descriptors_object.rows; i++ )
      {
        if(i==matches.size()) break;
        double dist = matches[i].distance;
        if( dist < min_dist ) min_dist = dist;
        if( dist > max_dist ) max_dist = dist;
      }

      printf("-- Max dist : %f \n", max_dist );
      printf("-- Min dist : %f \n", min_dist );

      //-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )
      std::vector< DMatch > good_matches;
      for( unsigned int i = 0; i < descriptors_object.rows; i++ )
      {
        if(i==matches.size()) break;
        if( matches[i].distance < 3*min_dist )
        { good_matches.push_back( matches[i]); }
      }
      */

      //-- Draw all good matches in one call (calling drawMatches once per
      //   match would overwrite img_matches each time)
      Mat img_matches;
      drawMatches( img_object, keypoints_object, img_scene, keypoints_scene,
                   good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                   std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

      //-- Localize the object
      std::vector<Point2f> obj;
      std::vector<Point2f> scene;
      for( size_t i = 0; i < good_matches.size(); i++ )
      {
        //-- Get the keypoints from the good matches
        obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );
        scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );
      }

      //-- A homography needs at least 4 point pairs
      if( good_matches.size() < 4 )
      { std::cout << " --(!) Not enough good matches " << std::endl; return -1; }

      Mat H = findHomography( obj, scene, CV_RANSAC );

      //-- Get the corners from the image_1 ( the object to be "detected" )
      std::vector<Point2f> obj_corners(4);
      obj_corners[0] = Point2f( 0, 0 );
      obj_corners[1] = Point2f( img_object.cols, 0 );
      obj_corners[2] = Point2f( img_object.cols, img_object.rows );
      obj_corners[3] = Point2f( 0, img_object.rows );
      std::vector<Point2f> scene_corners(4);
      perspectiveTransform( obj_corners, scene_corners, H);

      //-- Draw lines between the corners (the mapped object in the scene - image_2 )
      line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
      line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

      //-- Show detected matches
      imshow( "Good Matches & Object detection", img_matches );
      waitKey(0);
      return 0;
    }

    /** @function readme */
    void readme()
    { std::cout << " Usage: ./SURF_descriptor <img1> <img2>" << std::endl; }

And this is the result:

Any idea on how to improve the code?
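To check how restrictive the ratio test really is, one option is to count how many matches survive at several thresholds before settling on 0.75. A minimal sketch, assuming a hypothetical `NeighbourPair` struct that stands in for the two `DMatch` distances returned by `knnMatch` with k = 2; `countSurvivors` is likewise a name made up for this example.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one knnMatch result with k = 2:
// the distance to the best and to the second-best neighbour.
struct NeighbourPair {
    float best;
    float second;
};

// Count the pairs that pass the ratio test best < ratio * second.
// Written as a multiplication so a zero second distance cannot divide by zero.
std::size_t countSurvivors(const std::vector<NeighbourPair>& pairs, float ratio)
{
    std::size_t count = 0;
    for (std::size_t i = 0; i < pairs.size(); ++i)
        if (pairs[i].best < ratio * pairs[i].second) ++count;
    return count;
}
```

Calling `countSurvivors` with ratios such as 0.6, 0.75 and 0.9 on the real distances would show how many matches each threshold leaves. A homography needs at least 4 point pairs, so a threshold that leaves fewer than that cannot locate the object at all.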