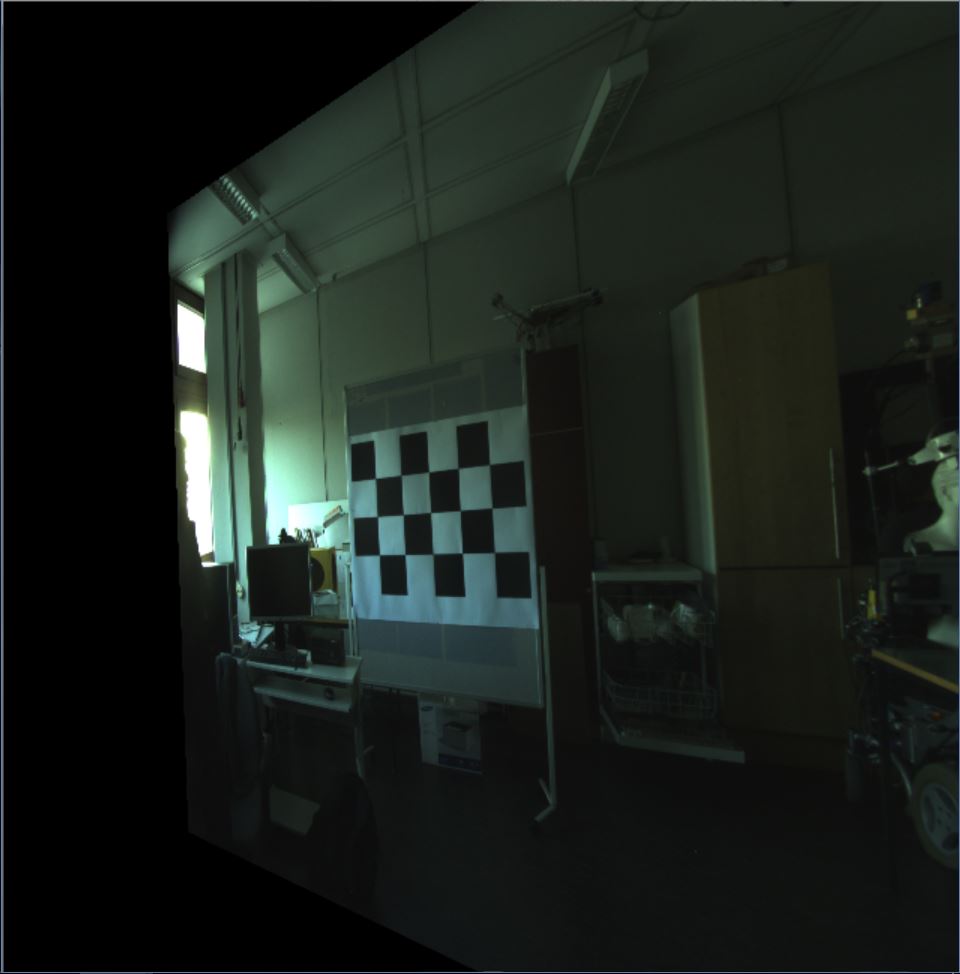

I am using cv::stereoRectifyUncalibrated() to rectify two images, but I am getting poor results, including a lot of shearing. These are the steps I am following:

- SURF to detect keypoints and compute their descriptors, then a brute-force matcher to match them

- cv::findFundamentalMat() to compute the fundamental matrix

- cv::stereoRectifyUncalibrated() to get the homography matrices H1 and H2

- cv::warpPerspective() to get the rectified images

I want to use the rectified images to compute a disparity map, but I can't because the rectification results are so poor. My questions:

- Is the fundamental matrix causing the problem?

- Or is the warpPerspective() transform responsible?

- Or is there something else I need to take care of?

Below are my code and sample result images. I am new to OpenCV and would appreciate any help.

```cpp
#include <iostream>
#include <stdio.h>

#include "opencv2/core.hpp"
#include "opencv2/features2d.hpp"
#include "opencv2/xfeatures2d.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/imgcodecs.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/calib3d.hpp"
#include "opencv2/core/affine.hpp"

using namespace cv;
using namespace cv::xfeatures2d;

int main()
{
    // Loading the stereo images
    Mat leftImage = imread("left.jpg", IMREAD_COLOR);
    Mat rightImage = imread("right.jpg", IMREAD_COLOR);

    // Checking if the image files were successfully opened
    if (leftImage.empty() || rightImage.empty())
    {
        std::cout << " --(!) Error reading images " << std::endl;
        return -1;
    }

    /* Showing the input stereo images
    namedWindow("Left image original", WINDOW_FREERATIO);
    namedWindow("Right image original", WINDOW_FREERATIO);
    imshow("Left image original", leftImage);
    imshow("Right image original", rightImage);
    */

    //::::::::::::::::::::::::::::::::::::::::::::::::
    // Step 1: Detect the keypoints using the SURF detector
    int minHessian = 420;
    Ptr<SURF> detector = SURF::create(minHessian); // smart pointer to a SURF detector/extractor
    std::vector<KeyPoint> keypointsLeft, keypointsRight; // keypoints of the two images
    detector->detect(leftImage, keypointsLeft);
    detector->detect(rightImage, keypointsRight);

    //::::::::::::::::::::::::::::::::
    // Step 2: Descriptors of the keypoints
    Mat descriptorsLeft;
    Mat descriptorsRight;
    detector->compute(leftImage, keypointsLeft, descriptorsLeft);
    detector->compute(rightImage, keypointsRight, descriptorsRight);
    //std::cout << "descriptor matrix size: " << descriptorsLeft.rows << " by " << descriptorsLeft.cols << std::endl;

    //::::::::::::::::::::::::::::::::::::::::::::::::::::::::::
    // Step 3: Match keypoints between the left and right images
    // according to their descriptors (brute-force or FLANN-based approaches)
    // Construction of the matcher (default NORM_L2, suitable for SURF descriptors)
    std::vector<cv::DMatch> matches;
    Ptr<BFMatcher> matcher = cv::BFMatcher::create();
    // Match the two image descriptors
    matcher->match(descriptorsLeft, descriptorsRight, matches);
    //std::cout << "Number of matched points: " << matches.size() << std::endl;

    //::::::::::::::::::::::::::::::::
    // Step 4: Find the fundamental matrix
    // Convert the vector of matches into two vectors of Point2f
    // for computing the F matrix with cv::findFundamentalMat()
    std::vector<int> pointIndexesLeft;  // keypoint indexes for the Point2f conversion
    std::vector<int> pointIndexesRight; // keypoint indexes for the Point2f conversion
    for (std::vector<cv::DMatch>::const_iterator it = matches.begin(); it != matches.end(); ++it) {
        // Get the indexes of the selected matched keypoints
        pointIndexesLeft.push_back(it->queryIdx);
        pointIndexesRight.push_back(it->trainIdx);
    }

    // Convert the keypoint vectors into Point2f vectors,
    // as needed by findFundamentalMat()
    std::vector<cv::Point2f> matchingPointsLeft, matchingPointsRight;
    cv::KeyPoint::convert(keypointsLeft, matchingPointsLeft, pointIndexesLeft);
    cv::KeyPoint::convert(keypointsRight, matchingPointsRight, pointIndexesRight);

    // Creating clone Mats to draw the keypoints on
    Mat drawKeyLeft = leftImage.clone(), drawKeyRight = rightImage.clone();

    // Check by drawing the points
    std::vector<cv::Point2f>::const_iterator it = matchingPointsLeft.begin();
    while (it != matchingPointsLeft.end()) {
        // Draw a circle at each matched keypoint location
        cv::circle(drawKeyLeft, *it, 3, cv::Scalar(0, 0, 0), 1);
        ++it;
    }
    it = matchingPointsRight.begin();
    while (it != matchingPointsRight.end()) {
        // Draw a circle at each matched keypoint location
        cv::circle(drawKeyRight, *it, 3, cv::Scalar(0, 0, 0), 1);
        ++it;
    }

    namedWindow("Left Image Keypoints", WINDOW_FREERATIO);
    namedWindow("Right Image Keypoints", WINDOW_FREERATIO);
    imshow("Left Image Keypoints", drawKeyLeft);
    imshow("Right Image Keypoints", drawKeyRight);

    // Compute the F matrix from n >= 8 matches
    cv::Mat fundamental = cv::findFundamentalMat(matchingPointsLeft,  // selected Point2f points in the first image
                                                 matchingPointsRight, // selected Point2f points in the second image
                                                 cv::FM_RANSAC);      // RANSAC-based robust estimation
    std::cout << std::endl << "F-Matrix " << fundamental << std::endl << std::endl;

    // Drawing epipolar lines
    //drawEpipolarLines(matchingPointsLeft, matchingPointsRight, fundamental, leftImage, rightImage);

    //:::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::::
    // Step 5: Getting homography matrices H1 & H2 using stereoRectifyUncalibrated()
    // H1 and H2 are used to get the rectified images
    cv::Mat H1(3, 3, fundamental.type()); // H1 for the left image
    cv::Mat H2(3, 3, fundamental.type()); // H2 for the right image
    cv::stereoRectifyUncalibrated(matchingPointsLeft, matchingPointsRight, fundamental, leftImage.size(), H1, H2, 2);
    std::cout << "H1 matrix" << H1 << std::endl;
    std::cout << std::endl << "H2 matrix" << H2 << std::endl << std::endl;

    // Creating Mats to hold the rectified images
    Mat rectifiedLeft, rectifiedRight;

    // Getting the rectified images using the transformation matrices above
    warpPerspective(leftImage, rectifiedLeft, H1, leftImage.size(), INTER_LINEAR);
    warpPerspective(rightImage, rectifiedRight, H2, leftImage.size(), INTER_LINEAR);

    namedWindow("Rectified Left Image", WINDOW_FREERATIO);
    namedWindow("Rectified Right Image", WINDOW_FREERATIO);
    imshow("Rectified Left Image", rectifiedLeft);
    imshow("Rectified Right Image", rectifiedRight);
    waitKey(0);
    //system("pause");

    return 0;
}
```