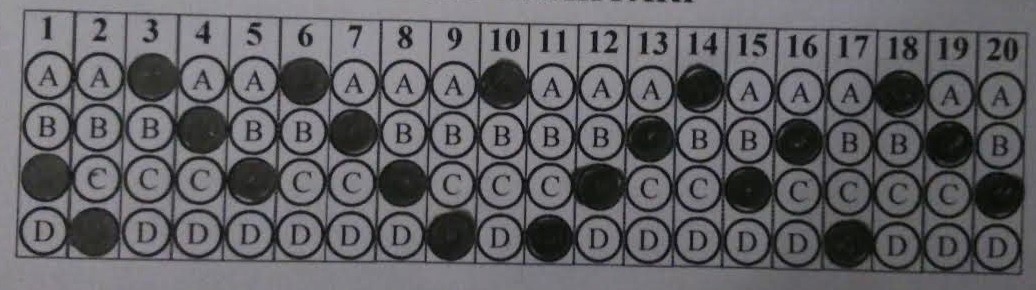

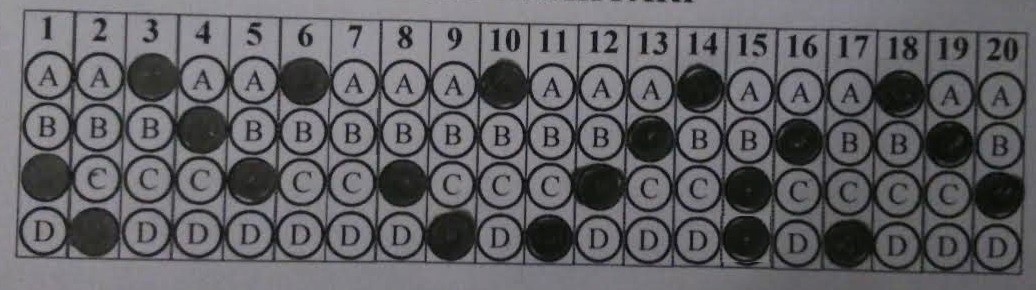

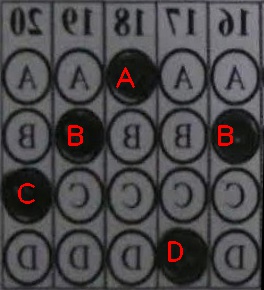

How to detect marked black regions inside the largest rectangle contour?

I can detect the largest contour of the answer sheet (20 questions, each with 4 alternatives).

After drawing the largest contour, what should I do next? Divide the rectangle into a 20x4 matrix of cells? Or find contours again, but this time inside the rectangle? I don't know which approach I need. I just want to find out which answers are marked.

public Mat onCameraFrame(CameraBridgeViewBase.CvCameraViewFrame inputFrame) {

return findLargestRectangle(inputFrame.rgba());

}

private Mat findLargestRectangle(Mat original_image) {

Mat imgSource = original_image;

hierarchy = new Mat();

//convert the image to grayscale

Imgproc.cvtColor(imgSource, imgSource, Imgproc.COLOR_BGR2GRAY);

//detect edges with Canny (output is an 8-bit single-channel edge map)

Imgproc.Canny(imgSource, imgSource, 50, 50);

//apply Gaussian blur to smooth the dotted edge lines

Imgproc.GaussianBlur(imgSource, imgSource, new Size(5, 5), 5);

//find the contours

List<MatOfPoint> contours = new ArrayList<MatOfPoint>();

Imgproc.findContours(imgSource, contours, hierarchy, Imgproc.RETR_LIST, Imgproc.CHAIN_APPROX_SIMPLE);

hierarchy.release();

double maxArea = -1;

int maxAreaIdx = -1;

MatOfPoint temp_contour = contours.get(0); //start from index 0 as the initial candidate

MatOfPoint2f approxCurve = new MatOfPoint2f();

Mat largest_contour = contours.get(0);

List<MatOfPoint> largest_contours = new ArrayList<MatOfPoint>();

for (int idx = 0; idx < contours.size(); idx++) {

temp_contour = contours.get(idx);

double contourarea = Imgproc.contourArea(temp_contour);

//compare this contour to the previous largest contour found

if (contourarea > maxArea) {

//check if this contour approximates to a quadrilateral (4 vertices)

MatOfPoint2f new_mat = new MatOfPoint2f( temp_contour.toArray() );

int contourSize = (int)temp_contour.total();

Imgproc.approxPolyDP(new_mat, approxCurve, contourSize*0.05, true);

if (approxCurve.total() == 4) {

maxArea = contourarea;

maxAreaIdx = idx;

largest_contours.add(temp_contour);

largest_contour = temp_contour;

}

}

}

MatOfPoint temp_largest = largest_contours.get(largest_contours.size()-1);

largest_contours = new ArrayList<MatOfPoint>();

largest_contours.add(temp_largest);

Imgproc.cvtColor(imgSource, imgSource, Imgproc.COLOR_GRAY2RGB);

Imgproc.drawContours(imgSource, contours, maxAreaIdx, new Scalar(0, 255, 0), 1);

Log.d(TAG, "Largest contour: " + contours.get(maxAreaIdx).toString());

return imgSource;

}

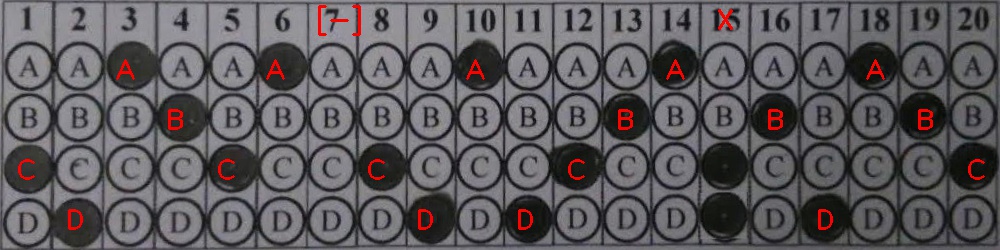

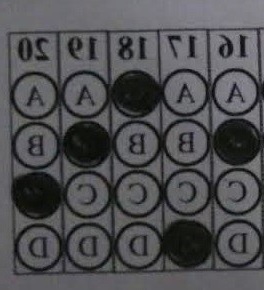

UPDATE 1: Thank you @sturkmen for the answer. I can now read the sheet and find the black regions. Here is the Android code:

public View onCreateView(LayoutInflater inflater, ViewGroup container,

Bundle savedInstanceState) {

View _view = inflater.inflate(R.layout.fragment_main, container, false);

// Inflate the layout for this fragment

Button btnTest = (Button) _view.findViewById(R.id.btnTest);

btnTest.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

Mat img = Imgcodecs.imread(mediaStorageDir().getPath() + "/" + "test2.jpg");

if (img.empty()) {

Log.d("Fragment", "IMG EMPTY");

}

Mat gray = new Mat();

Mat thresh = new Mat();

//convert the image to grayscale

Imgproc.cvtColor(img, gray, Imgproc.COLOR_BGR2GRAY);

//binarize with an inverted Otsu threshold (dark marks become white, 8-bit)

Imgproc.threshold(gray, thresh, 0, 255, Imgproc.THRESH_BINARY_INV + Imgproc.THRESH_OTSU);

Mat temp = thresh.clone();

//find the contours

Mat hierarchy = new Mat();

Mat corners = new Mat(4,1, CvType.CV_32FC2);

List<MatOfPoint> contours = new ArrayList<MatOfPoint>();

Imgproc.findContours(temp, contours,hierarchy, Imgproc.RETR_EXTERNAL, Imgproc.CHAIN_APPROX_SIMPLE);

hierarchy.release();

for (int idx = 0; idx < contours.size(); idx++)

{

MatOfPoint contour = contours.get(idx);

MatOfPoint2f contour_points = new MatOfPoint2f(contour.toArray());

RotatedRect minRect = Imgproc.minAreaRect( contour_points );

Point[] rect_points = new Point[4];

minRect.points( rect_points );

if(minRect.size.height > img.width() / 2)

{

List<Point> srcPoints = new ArrayList<Point>(4);

srcPoints.add(rect_points[2]);

srcPoints.add(rect_points ...

Don't try to detect circles; look for black regions instead.

Another way is to use a mask with a perfect grid that fits the sheet's grid and estimate the fill level in each answer cell.
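A minimal sketch of the grid idea, assuming the sheet has already been binarized and perspective-corrected so that the 20x4 answer area fills the whole image. To keep it runnable without OpenCV, a plain `boolean[][]` (true = dark pixel) stands in for the thresholded `Mat`; the class name, method names, and the `minFill` threshold are hypothetical and not part of any library. With a real `Mat`, the per-cell count could probably be done with `Mat.submat` and `Core.countNonZero` instead of the inner loops.

```java
/**
 * Sketch only: reads a rows x cols answer grid from a binarized,
 * perspective-corrected sheet. A boolean[][] stands in for an OpenCV Mat
 * (true = dark pixel). All names here are hypothetical.
 */
final class AnswerGridReader {

    /** Fraction of dark pixels in each cell of a rows x cols grid laid over the image. */
    static double[][] fillRatios(boolean[][] dark, int rows, int cols) {
        int height = dark.length;
        int width = dark[0].length;
        double[][] ratios = new double[rows][cols];
        for (int r = 0; r < rows; r++) {
            // Integer arithmetic spreads any remainder pixels across the cells.
            int y0 = r * height / rows;
            int y1 = (r + 1) * height / rows;
            for (int c = 0; c < cols; c++) {
                int x0 = c * width / cols;
                int x1 = (c + 1) * width / cols;
                int count = 0;
                for (int y = y0; y < y1; y++) {
                    for (int x = x0; x < x1; x++) {
                        if (dark[y][x]) {
                            count++;
                        }
                    }
                }
                int area = (y1 - y0) * (x1 - x0);
                ratios[r][c] = area == 0 ? 0.0 : (double) count / area;
            }
        }
        return ratios;
    }

    /**
     * For each question (row), returns the index of the darkest cell,
     * or -1 when no cell reaches minFill (question treated as blank).
     */
    static int[] markedAnswers(double[][] ratios, double minFill) {
        int[] answers = new int[ratios.length];
        for (int r = 0; r < ratios.length; r++) {
            int best = -1;
            double bestRatio = 0.0;
            for (int c = 0; c < ratios[r].length; c++) {
                double ratio = ratios[r][c];
                if (ratio >= minFill && (best == -1 || ratio > bestRatio)) {
                    best = c;
                    bestRatio = ratio;
                }
            }
            answers[r] = best;
        }
        return answers;
    }
}
```

For the sheet in the question this would be called as `fillRatios(dark, 20, 4)` followed by `markedAnswers(ratios, minFill)`. A suitable `minFill` depends on how much of each cell the printed bubble outline covers, so it has to be tuned on real scans.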

Thank you @LBerger. I take it this is a list of possible methods. First I need to work through them.