Barrel distortion with LUT from PNG

Hi.

I would like to apply barrel distortion to a camera stream to display the video on a Zeiss VR One. In the VR One Unity3D SDK I found lookup tables (LUTs) for the distortion stored as PNG files. I did not expect to find something like that, so I was very happy. I would like to use them for the barrel distortion, since a LUT is fast and I think it is the best way to get this working in real time.
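
For reference, `cv::remap` computes every output pixel by reading the source image at the position stored in two maps. With one X table and one Y table per color channel, the lookup would be applied per channel $c$ as below. This assumes the VR One tables are stored in this destination-to-source form, which I have not confirmed:

$$\mathrm{dst}_c(x, y) = \mathrm{src}_c\big(\mathrm{map}^{x}_c(x, y),\ \mathrm{map}^{y}_c(x, y)\big), \qquad c \in \{R, G, B\}$$

`remap` expects the map values as absolute pixel coordinates in the source image, not normalized ones.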

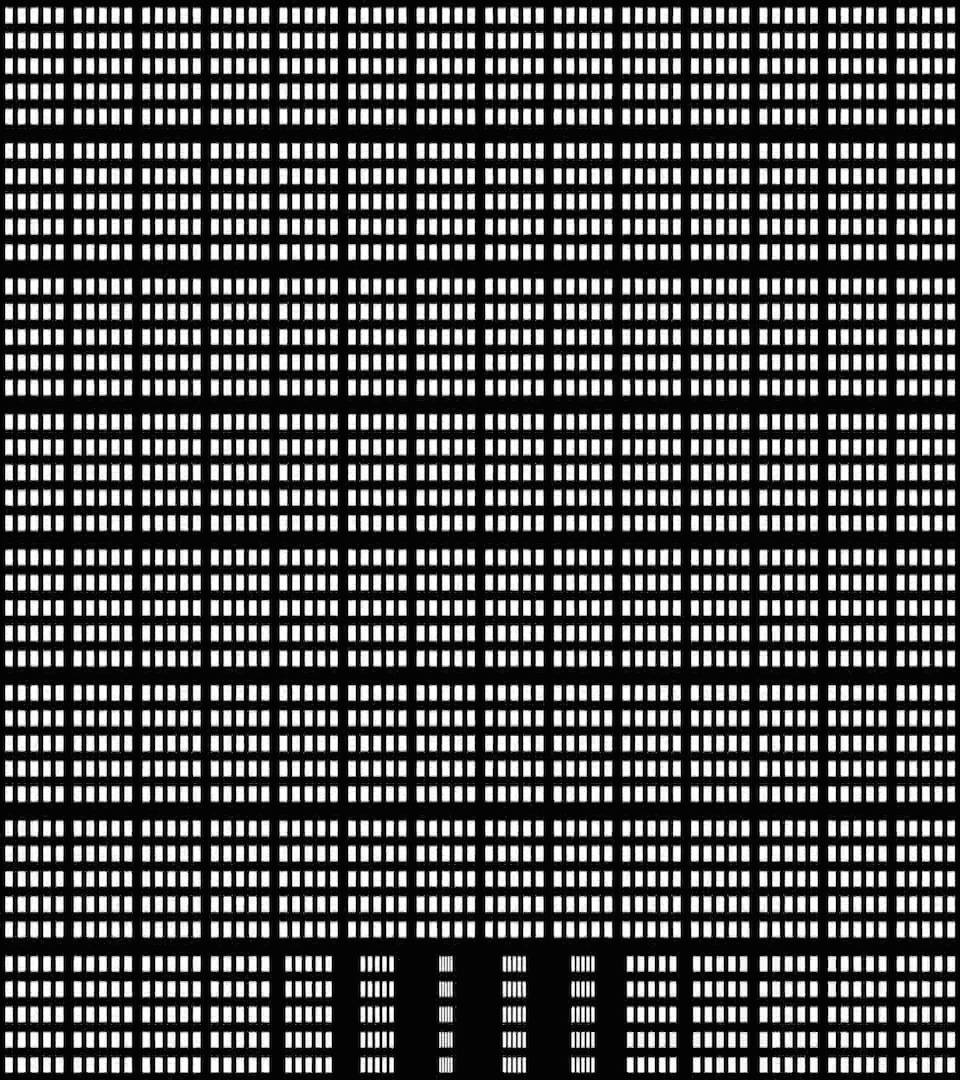

My problem is that I have no idea on how to use the tables. There are 6 tables, one for every color and for the X and Y axes. Does anybody have an idea how I can read the files and apply the distortion? This is the .png file for the blue color on the X axis:

I tried the following code, as suggested by Tetragramm:

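```cpp
#include <opencv2/opencv.hpp>
#include <string>
#include <vector>

using namespace cv;

// Decodes one LUT PNG into a single-channel float map (CV_32FC1).
Mat prepareLUT(const std::string& filename) {
    // imread returns B, G, R; IMREAD_COLOR replaces the deprecated
    // CV_LOAD_IMAGE_COLOR constant.
    Mat first = imread(filename, IMREAD_COLOR);
    CV_Assert(!first.empty());  // fail loudly if the file was not found

    Mat floatmat;
    first.convertTo(floatmat, CV_32F);

    // std::vector instead of malloc: malloc never runs the Mat constructors,
    // so split() would write into uninitialized objects.
    std::vector<Mat> channels;
    split(floatmat, channels);

    // channels[0] (blue) + channels[1] (green)/255 + channels[2] (red)/255^2
    Mat res = channels[0] + channels[1] / 255.0 + channels[2] / 65025.0;

    //scaleAdd(channels[1], 1.0/255, channels[0], res);
    //scaleAdd(channels[2], 1.0/65025, res, res);

    return res;
}
```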
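
To sanity-check a decoded table, it can help to look at the range of values it produces: since `remap` expects absolute source-pixel coordinates, an X table should roughly span 0 to the source width minus 1. The sketch below is plain C++ (no OpenCV) so the arithmetic is easy to test. `decodeLutPixel`, `decodedRange` and `normalizedToPixel` are hypothetical helper names, and the idea that a table might hold normalized [0, 1] coordinates is only an assumption to check, not something I know the SDK documents.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical helpers (plain C++, not OpenCV API).
// Same arithmetic as prepareLUT(): c0 + c1/255 + c2/255^2, where c0..c2
// are the channels in split() order (B, G, R for a PNG read by imread).
float decodeLutPixel(std::uint8_t c0, std::uint8_t c1, std::uint8_t c2) {
    return c0 + c1 / 255.0f + c2 / 65025.0f;
}

// Min and max decoded value over a table given as (c0, c1, c2) triples.
std::pair<float, float> decodedRange(
        const std::vector<std::array<std::uint8_t, 3>>& pixels) {
    assert(!pixels.empty());
    float lo = decodeLutPixel(pixels[0][0], pixels[0][1], pixels[0][2]);
    float hi = lo;
    for (const auto& p : pixels) {
        const float v = decodeLutPixel(p[0], p[1], p[2]);
        lo = std::min(lo, v);
        hi = std::max(hi, v);
    }
    return {lo, hi};
}

// HYPOTHETICAL: if a table turned out to hold normalized coordinates in
// [0, 1], they would need scaling to pixels before remap could use them.
float normalizedToPixel(float normalized, int sourceSize) {
    return normalized * static_cast<float>(sourceSize - 1);
}
```

On the actual `Mat` returned by `prepareLUT`, `cv::minMaxLoc(res, &minVal, &maxVal)` (with `double minVal, maxVal;`) reports the same range. With this decoding formula the largest possible value is just above 256, so if the X tables are meant to address a 960-pixel-wide image, the decoded values cannot be pixel coordinates yet.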

I do this for all 6 LUTs, and then I split my image and apply the remap like this:

```cpp
// Split the frame (assuming OpenCV's default B, G, R order) and remap
// each channel with its own pair of LUTs.
std::vector<Mat> channels(3);
split(big, channels);

std::vector<Mat> remapped;

Mat m1;  // blue
remap(channels[0], m1, data->lut_xb, data->lut_yb, INTER_LINEAR);
remapped.push_back(m1);

Mat m2;  // green
remap(channels[1], m2, data->lut_xg, data->lut_yg, INTER_LINEAR);
remapped.push_back(m2);

Mat m3;  // red
remap(channels[2], m3, data->lut_xr, data->lut_yr, INTER_LINEAR);
remapped.push_back(m3);

Mat merged;
merge(remapped, merged);
```
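
To see why the range of the LUT values matters, here is a minimal plain-C++ sketch of the per-channel lookup that `remap(..., INTER_LINEAR)` performs: each output pixel reads the source at the fractional position stored in the two maps and blends the four neighboring pixels bilinearly. `Image`, `sampleBilinear` and `remapChannel` are hypothetical names; the border handling (taps outside the image read as 0) only approximates OpenCV's default `BORDER_CONSTANT`, and this is not OpenCV's actual implementation, so results may differ slightly in precision.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel float image, row-major (not an OpenCV type).
struct Image {
    int width;
    int height;
    std::vector<float> data;
    float at(int x, int y) const {
        // Taps outside the image read as 0, similar in spirit to
        // remap's default BORDER_CONSTANT with a border value of 0.
        if (x < 0 || y < 0 || x >= width || y >= height) return 0.0f;
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

// Bilinear sample at the fractional source position (sx, sy).
float sampleBilinear(const Image& src, float sx, float sy) {
    const int x0 = static_cast<int>(std::floor(sx));
    const int y0 = static_cast<int>(std::floor(sy));
    const float fx = sx - x0;
    const float fy = sy - y0;
    const float top = src.at(x0, y0) * (1 - fx) + src.at(x0 + 1, y0) * fx;
    const float bottom =
        src.at(x0, y0 + 1) * (1 - fx) + src.at(x0 + 1, y0 + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}

// dst(x, y) = src(mapX(x, y), mapY(x, y)); the output takes the maps' size.
Image remapChannel(const Image& src, const Image& mapX, const Image& mapY) {
    assert(mapX.width == mapY.width && mapX.height == mapY.height);
    Image dst{mapX.width, mapX.height,
              std::vector<float>(mapX.data.size(), 0.0f)};
    for (int y = 0; y < dst.height; ++y)
        for (int x = 0; x < dst.width; ++x)
            dst.data[static_cast<std::size_t>(y) * dst.width + x] =
                sampleBilinear(src, mapX.at(x, y), mapY.at(x, y));
    return dst;
}
```

As in OpenCV, the output has the size of the maps, so the 960x1080 LUTs produce a 960x1080 result regardless of the input size, and map values that are too small make every output pixel sample the top-left corner of the frame.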

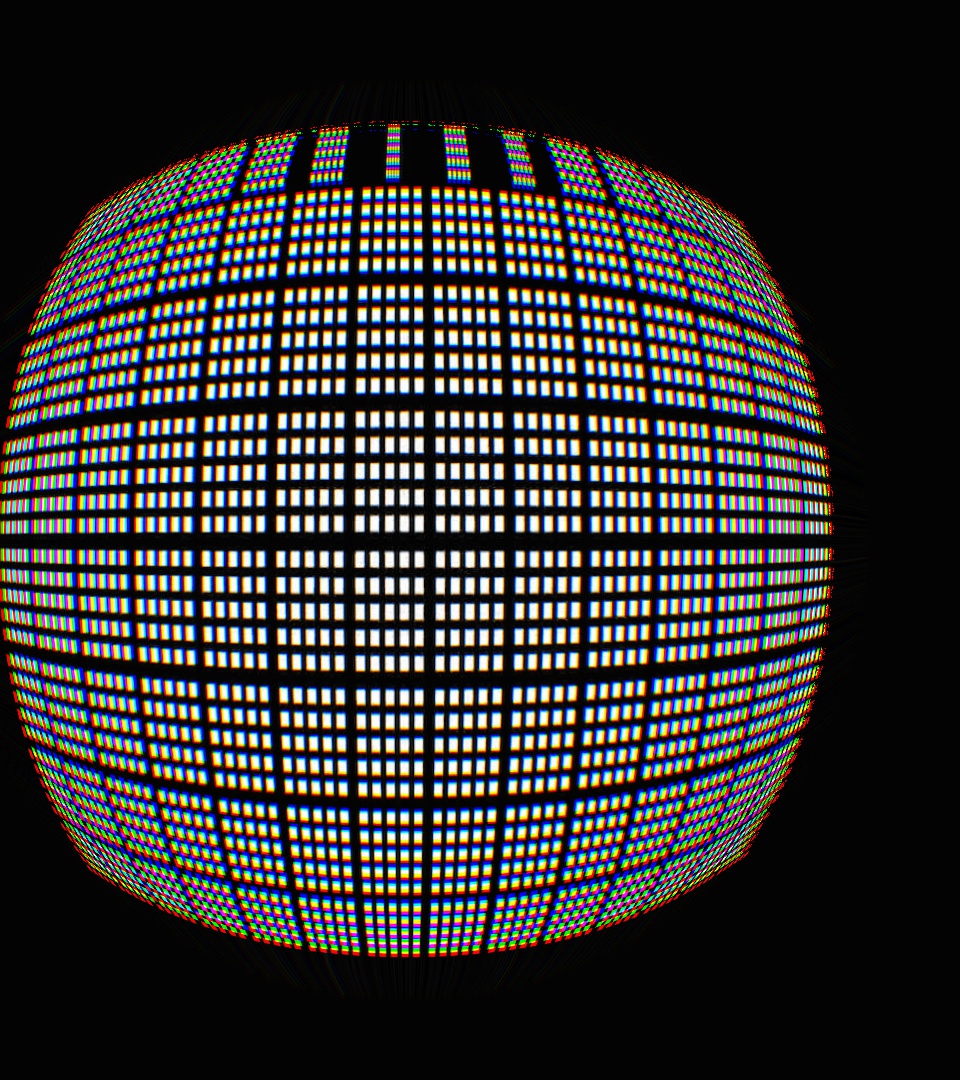

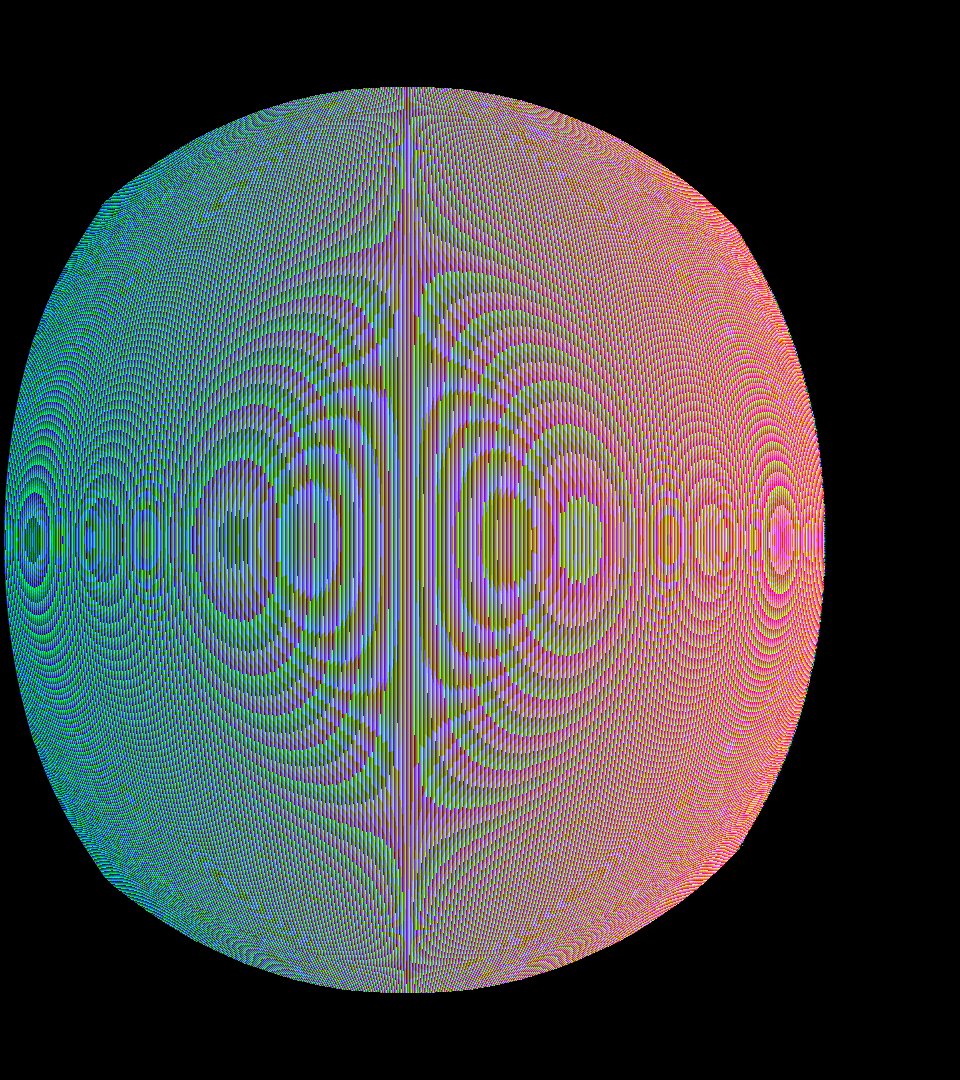

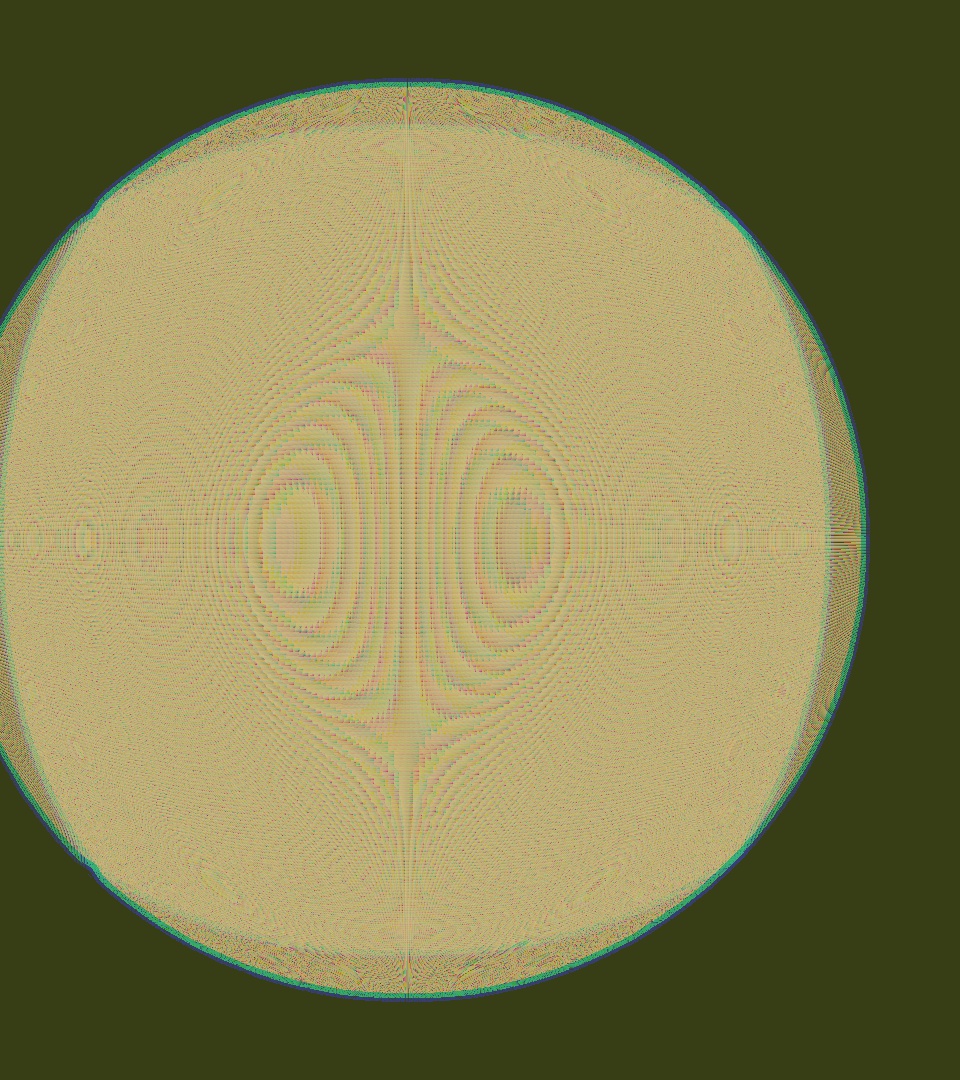

But the image does not turn out right. This is supposed to be an image of my hand:

Any idea what might be wrong?