I'm moving this to an answer so I can write longer.

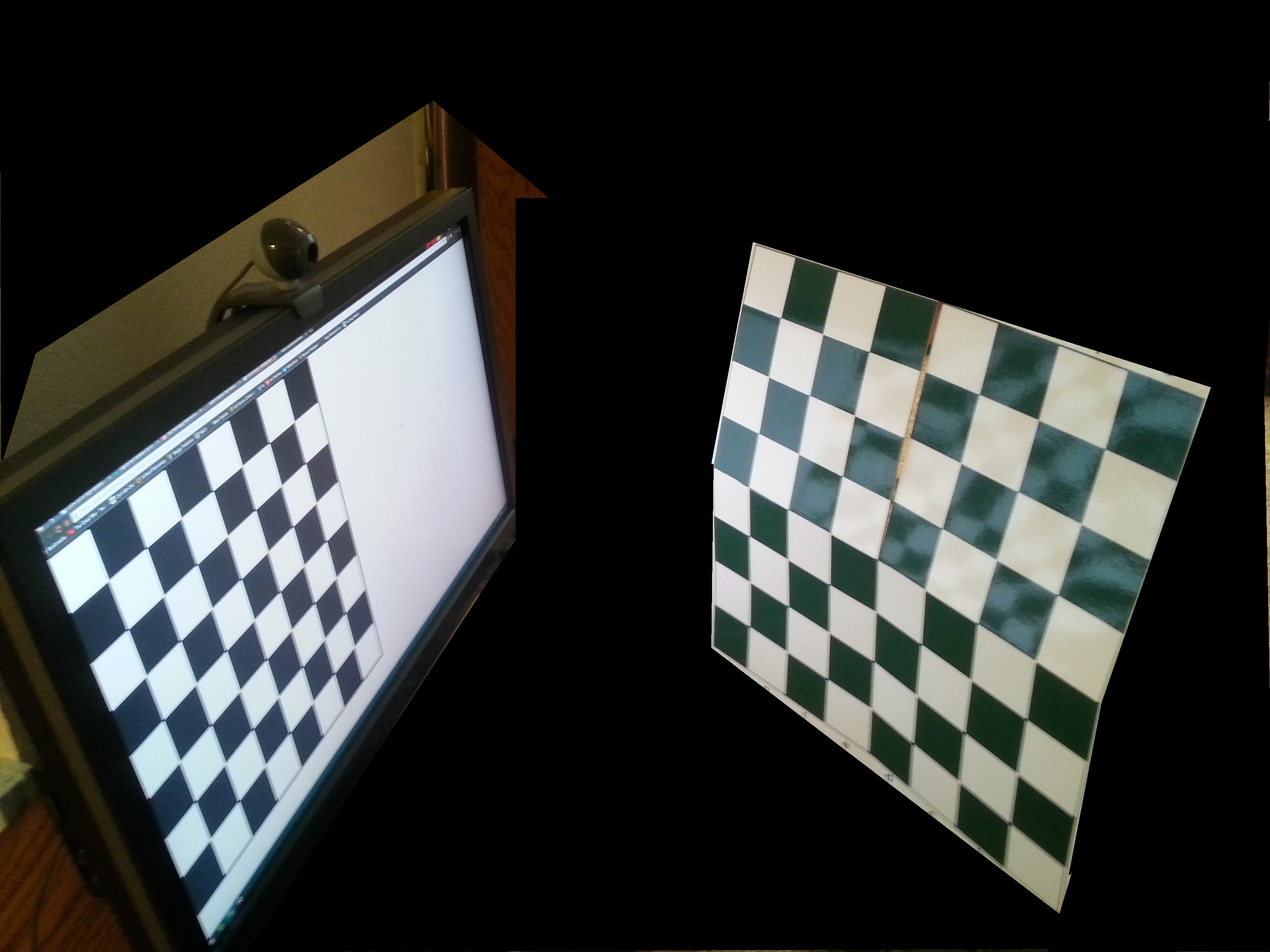

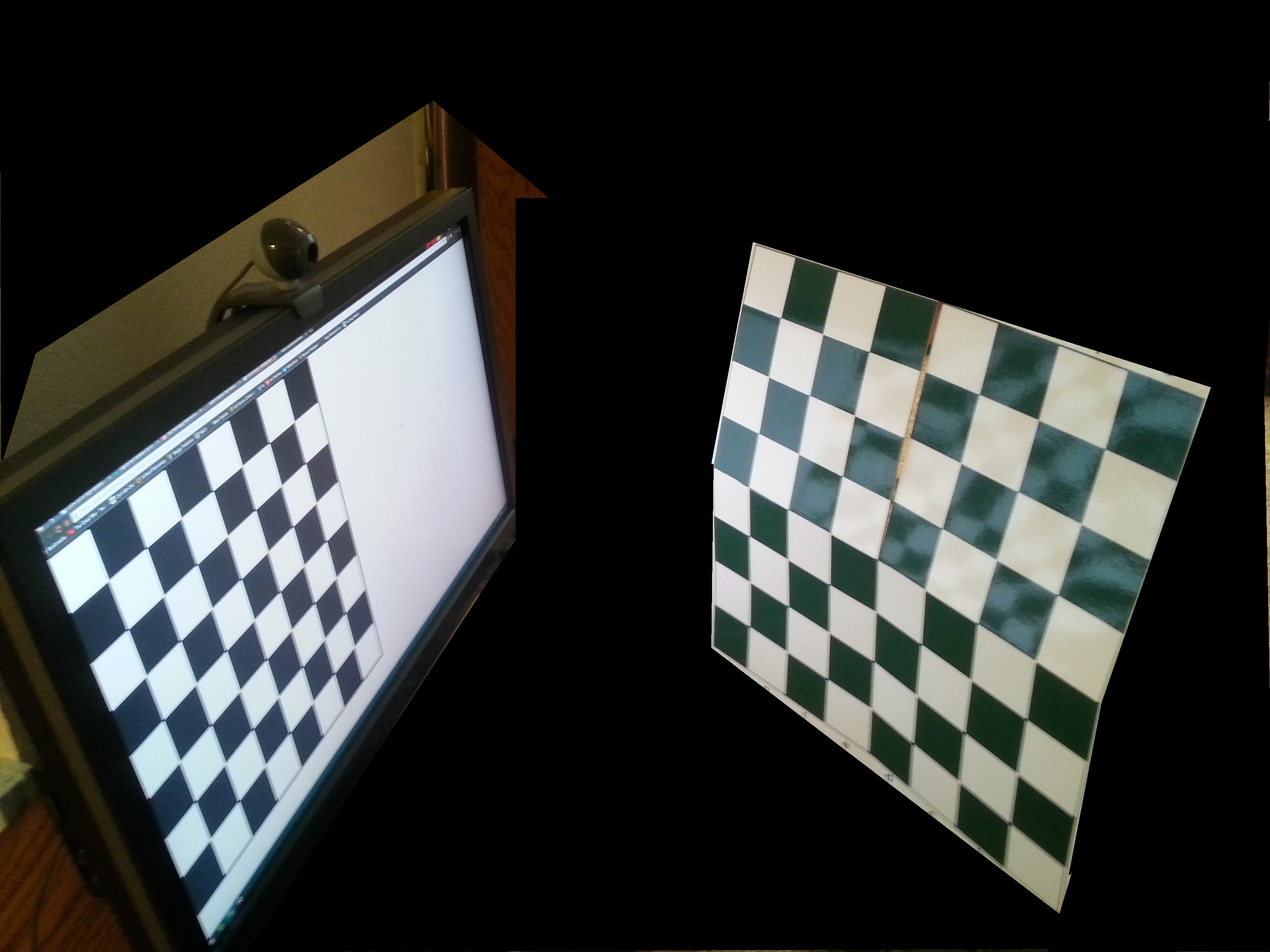

Here is the setup you should be using, except that the "real" chessboard should be flatter than that one; I just grabbed what I had. Also, the picture on the screen should be shown in full-screen mode so that the corner of the board matches the corner of the screen.

You need to do it this way because when you find the location of a chessboard, you get the position of its top-left corner and the orientation of its plane. Since you are trying to find the top-left corner of the screen and the plane of the screen, the two need to match up. So you display the screen chessboard flat on the screen, with its corner in the corner of the screen.
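
One detail worth checking: `findChessboardCorners` only detects the inner corners, and the pose origin is whatever object point you assigned as (0, 0, 0). With the common `(i*s, j*s, 0)` object points, that is the first detected inner corner, one square in from the board's outer corner. Here's a rough sketch of the screen corners in screen-chessboard coordinates under those assumptions. The square size `s` and the screen size `W` x `H` are hypothetical measured inputs, all in the same units as your object points (e.g. mm). It also assumes the board's x axis runs right and its y axis runs down the screen, so check the detected corner order on your own images.

```cpp
#include <array>
#include <cassert>

struct Vec3 { double x, y, z; };

// Screen corners expressed in the screen-chessboard frame.
// Assumptions (hypothetical, verify against your own detection):
//  - object points were built as (i*s, j*s, 0), so the origin is the first
//    inner corner, one square in from the board's outer corner;
//  - the board's outer corner is drawn flush with the screen's top-left corner;
//  - the board x axis points right and the y axis points down the screen.
std::array<Vec3, 4> screenCornersInBoardFrame(double s, double W, double H) {
    assert(s > 0 && W > 0 && H > 0);
    const double x0 = -s, y0 = -s;  // top-left screen corner
    return {{
        {x0,     y0,     0.0},  // top-left
        {x0 + W, y0,     0.0},  // top-right
        {x0,     y0 + H, 0.0},  // bottom-left
        {x0 + W, y0 + H, 0.0},  // bottom-right
    }};
}
```

Mapping these points through the chain of transformations gives the corners in primary-camera coordinates.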

So you have two cameras and two chessboards. Take a picture from both cameras with both chessboards in the same spot. For better accuracy, repeat this with the secondary camera (the one taking this picture) in several different positions and average the results.
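
One simple way to average the results is to average the final estimates (for example the top-left screen corner in primary-camera coordinates) rather than the individual rotations, since averaging rotation matrices element-wise does not give a valid rotation. A minimal sketch, with a hypothetical `averagePoints` helper and the estimates assumed to already be in the same frame:

```cpp
#include <cassert>
#include <vector>

struct Vec3 { double x, y, z; };

// Average several estimates of the same point, one per secondary-camera
// placement. Assumes all estimates are expressed in the same coordinate frame.
Vec3 averagePoints(const std::vector<Vec3>& estimates) {
    assert(!estimates.empty());
    Vec3 sum{0.0, 0.0, 0.0};
    for (const Vec3& p : estimates) {
        sum.x += p.x;
        sum.y += p.y;
        sum.z += p.z;
    }
    const double n = static_cast<double>(estimates.size());
    return {sum.x / n, sum.y / n, sum.z / n};
}
```

A plain mean is sensitive to one bad pose; a median or simple outlier rejection may be more robust if some pictures calibrate poorly.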

From these pictures you will get three transformations.

- From primary camera to real chessboard.

- From secondary camera to real chessboard.

- From secondary camera to screen chessboard.

What you want is the transformation from the primary camera to the screen chessboard. So invert the transformation from the secondary camera to the real chessboard, and you have the three you need: primary->real->secondary->screen. From that you can also get the inverse, if you need it.
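
To make the order of operations explicit, here's a minimal plain-C++ sketch of that chain (no OpenCV; the `Vec3`, `Mat3`, `Pose` types and function names are made up for the example). It assumes the OpenCV convention that an `rvec`/`tvec` pair from `calibrateCamera` or `solvePnP`, after `Rodrigues`, maps board coordinates into camera coordinates: `X_cam = R*X_board + t`. Given the three board poses, it takes a point in screen-chessboard coordinates (for example the screen's top-left corner) and expresses it in primary-camera coordinates.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };
struct Mat3 { double m[3][3]; };

// A board pose as returned by calibrateCamera/solvePnP (after Rodrigues):
// it maps board coordinates into camera coordinates, X_cam = R*X_board + t.
struct Pose { Mat3 R; Vec3 t; };

Vec3 mul(const Mat3& A, const Vec3& v) {
    return {A.m[0][0] * v.x + A.m[0][1] * v.y + A.m[0][2] * v.z,
            A.m[1][0] * v.x + A.m[1][1] * v.y + A.m[1][2] * v.z,
            A.m[2][0] * v.x + A.m[2][1] * v.y + A.m[2][2] * v.z};
}

Mat3 transpose(const Mat3& A) {
    Mat3 T{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) T.m[i][j] = A.m[j][i];
    return T;
}

Vec3 apply(const Pose& P, const Vec3& v) {
    const Vec3 r = mul(P.R, v);
    return {r.x + P.t.x, r.y + P.t.y, r.z + P.t.z};
}

// Inverse of a rigid transform: R' = R^T, t' = -R^T t.
Pose invert(const Pose& P) {
    const Mat3 Rt = transpose(P.R);
    const Vec3 t = mul(Rt, P.t);
    return {Rt, {-t.x, -t.y, -t.z}};
}

// Screen-board point -> primary-camera coordinates.
//   primary:   pose of the real board seen by the primary camera
//   secReal:   pose of the real board seen by the secondary camera
//   secScreen: pose of the screen board seen by the secondary camera
Vec3 screenToPrimary(const Pose& primary, const Pose& secReal,
                     const Pose& secScreen, const Vec3& pScreen) {
    const Vec3 pSec = apply(secScreen, pScreen);       // screen board -> secondary camera
    const Vec3 pReal = apply(invert(secReal), pSec);   // secondary camera -> real board
    return apply(primary, pReal);                      // real board -> primary camera
}
```

Composing the inverses in the opposite order gives the primary->real->secondary->screen direction instead.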

EDIT: Code I used.

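    // Secondary camera -> space chessboard
    // <Snip Calculation>
    // rvec/tvec from calibrateCamera map chessboard coordinates into camera coordinates
    Rodrigues(rvecs[0], R2);
    tvecs[0].copyTo(T2);

    // Secondary camera -> screen chessboard
    Rodrigues(rvecs[0], R3);
    R3 = R3.t();          // invert the rigid transform: R' = R^T ...
    T3 = -R3*tvecs[0];    // ... and t' = -R^T t

    // Primary camera -> space chessboard
    flip(image, image, 1);    // mirror the primary camera image horizontally
    Rodrigues(rvecs[0], R1);
    R1 = R1.t();
    T1 = -R1*tvecs[0];

    // Push the origin (0, 0, 0) through the three transforms
    Mat translation(3, 1, CV_64F);
    translation.setTo(0);
    translation = R3*translation + T3;
    translation = R2*translation + T2;
    translation = R1*translation + T1;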
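
`Rodrigues` converts each 3x1 rotation vector into a 3x3 rotation matrix: the vector's direction is the rotation axis and its length is the angle in radians. For reference, here's a standalone sketch of that formula, R = I + sin(theta) K + (1 - cos(theta)) K^2, where K is the skew-symmetric matrix of the unit axis. The `rodrigues` function is a hypothetical plain-C++ illustration, not OpenCV's implementation (which also handles the matrix-to-vector direction and can return a Jacobian).

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Rotation vector (axis * angle, radians) -> rotation matrix, using
// R = I + sin(theta) K + (1 - cos(theta)) K^2 with K = skew(unit axis).
Mat3 rodrigues(double rx, double ry, double rz) {
    const double theta = std::sqrt(rx * rx + ry * ry + rz * rz);
    Mat3 R{{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}};
    if (theta < 1e-12) return R;  // (near) zero rotation: identity
    const double kx = rx / theta, ky = ry / theta, kz = rz / theta;
    const Mat3 K{{{0, -kz, ky}, {kz, 0, -kx}, {-ky, kx, 0}}};
    const double s = std::sin(theta), c = 1.0 - std::cos(theta);
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            double kk = 0.0;  // (K*K)[i][j]
            for (int n = 0; n < 3; ++n) kk += K[i][n] * K[n][j];
            R[i][j] += s * K[i][j] + c * kk;
        }
    return R;
}
```

Because the result is orthonormal, its inverse is just its transpose, which is why `R.t()` is enough to invert the rotation part.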

This gives the result below, which is a little off, probably because I only used one image for the secondary camera and got a poor camera matrix and distortion coefficients. The primary camera needs more images too, but I mention that below.

    [-96.78414539354907;
     -471.6594258771706;
     16.61072172695242]

Could you add a little more information? Do you mean you are displaying an image and want to know where in the image the ray hits? Or is the ray coming from something in front of the screen, like an eye? Or something else?

I have a video of a person and a gaze vector originating from that person's eye. The eye center and the gaze vector are in 3D world coordinates. I want to figure out where the user is looking, i.e., where the gaze ray intersects the computer screen.
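
Once three screen corners are known in the same world (camera) coordinates as the eye center and gaze vector, the look-at point is a ray-plane intersection. A minimal sketch, assuming the corners `tl`, `tr`, `bl` (top-left, top-right, bottom-left; hypothetical names) are already available and the screen is a rectangle, so its top and left edges are perpendicular. It also returns the hit as fractions `u`, `v` along those edges, which scale directly to pixel coordinates.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Intersect the gaze ray eye + t*dir (t > 0) with the plane through the screen
// corners tl, tr and bl. On success, u and v are the hit position as fractions
// of the top and left edges: 0 <= u, v <= 1 means the user is looking at the
// screen, and (u * widthPx, v * heightPx) is the pixel being looked at.
bool gazeOnScreen(Vec3 eye, Vec3 dir, Vec3 tl, Vec3 tr, Vec3 bl, double& u, double& v) {
    const Vec3 ex = sub(tr, tl), ey = sub(bl, tl);
    const Vec3 n = cross(ex, ey);                    // screen-plane normal
    const double denom = dot(n, dir);
    if (std::abs(denom) < 1e-12) return false;       // ray parallel to the screen
    const double t = dot(n, sub(tl, eye)) / denom;
    if (t <= 0.0) return false;                      // screen is behind the eye
    const Vec3 hit{eye.x + t * dir.x, eye.y + t * dir.y, eye.z + t * dir.z};
    const Vec3 d = sub(hit, tl);
    u = dot(d, ex) / dot(ex, ex);
    v = dot(d, ey) / dot(ey, ey);
    return true;
}
```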

Alright, first step: do you know the coordinates of the screen corners in world coordinates?

No, that's where I'm struggling. I'm trying to figure out how to get those so that I can construct a plane and intersect the gaze vector (or ray) with it. Any ideas/pointers would be much appreciated!

OK, where is the origin of your world coordinate system? The camera? There are a couple of ways to do this. One is to carefully align the camera with the screen and measure the distance to the corners.

If you have another camera, you can do something more precise. Place a chessboard at a forty-five-degree angle in front of your primary camera and find the transformation from world coordinates to chessboard coordinates. Then use the second camera to take a picture (or pictures) showing both that chessboard and a chessboard pattern displayed on the screen. You can then find the location of the screen in chessboard coordinates and transform that into world coordinates.

Are you comfortable enough with the transformations to do that?

The camera is the origin of my world system. If I'm using a laptop with a built-in webcam, the z coordinate of the screen corners would be 0, correct?

For the second method, I'm assuming you're referring to OpenCV's findChessboardCorners() function. But I'm confused about how the second camera's picture would help determine the location of the screen.

If you're using a laptop, then yes, you can likely assume zero, at least for the top corners. If the camera's focal plane is tilted with respect to the screen, the bottom corners could be pretty far off.
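
To see how much the tilt matters, here's a rough model. It assumes OpenCV camera axes (x right, y down, z forward), the camera lens lying in the plane of the top edge, a measured offset `ox`, `oy` from the camera to the top-left screen corner, and the screen rotated by an angle `tilt` about its top edge relative to the camera's image plane. All names, and the sign of the tilt, are hypothetical choices for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Screen corners in camera coordinates (OpenCV axes: x right, y down, z forward).
// Hypothetical inputs, lengths in mm:
//   ox, oy - offset from the camera to the top-left screen corner
//            (corner left of the camera => ox > 0; below it => oy > 0)
//   W, H   - visible screen width and height
//   tilt   - angle (radians) between the screen and the camera's image plane,
//            rotating about the top edge; positive moves the bottom edge toward +z
std::array<Vec3, 4> laptopScreenCorners(double ox, double oy, double W, double H, double tilt) {
    const double dy = H * std::cos(tilt), dz = H * std::sin(tilt);
    return {{
        {-ox,     oy,      0.0},  // top-left
        {-ox + W, oy,      0.0},  // top-right
        {-ox,     oy + dy, dz},   // bottom-left
        {-ox + W, oy + dy, dz},   // bottom-right
    }};
}
```

With illustrative numbers, a 10 degree tilt on a 200 mm tall screen moves the bottom corners about 35 mm off the z = 0 plane, while the top corners stay at z = 0.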

The calibrateCamera function returns, for each view, an rvec and tvec that transform points from the chessboard's coordinate system into the camera's coordinate system (i.e., the pose of the chessboard relative to the camera). So once you use multiple cameras, with one chessboard in space and one on the screen, you have Primary Camera -> Chessboard in Space, Secondary Camera -> Chessboard in Space, and Secondary Camera -> Chessboard on Screen. Just invert the second one, and then apply them all in sequence to get Primary Camera -> Chessboard on Screen.

Thanks! Could you just clarify how I would get the bottom corners using the first method? I have the intrinsic camera matrix.

Honestly, I can't think of a way without simply carefully measuring.

Wait, I have an idea. Get a mirror and set it up so that the camera is looking straight back at itself. Then show a chessboard on the screen and get its rotation and translation. Then measure the distance from the mirror to the camera, and you have everything you need. Holding the mirror in place is a bit hard, but not impossible: set the laptop in front of the bathroom mirror and carefully arrange the screen.
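
In the mirror setup, the pose you get from the screen chessboard is that of its reflection, a virtual board behind the mirror, so the corners need to be reflected back. A minimal sketch, assuming a flat mirror whose plane is `dot(n, p) = d` in camera coordinates; in the straight-back setup described here, `n` is roughly the optical axis (0, 0, 1) and `d` is the measured camera-to-mirror distance, so a virtual point (x, y, z) maps to (x, y, 2d - z). The function name is hypothetical. Note that a reflection also reverses handedness, so the detected corner order may come out mirrored.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };

// Reflect a point across the mirror plane {p : dot(n, p) = d}, where n is the
// plane's unit normal in camera coordinates and d its distance from the camera.
// Applied to points (e.g. screen corners) computed from the reflected
// chessboard pose, this maps the virtual board back onto the real screen.
Vec3 reflectAcrossPlane(const Vec3& p, const Vec3& n, double d) {
    const double k = 2.0 * (n.x * p.x + n.y * p.y + n.z * p.z - d);
    return {p.x - k * n.x, p.y - k * n.y, p.z - k * n.z};
}
```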

How would getting the rvecs and tvecs help with the screen corners? I'm still a bit confused.

One other point came up: I have fx and fy in pixel units. Is there a conversion to mm? I've looked this up, but the answers have been inconsistent.
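
fx and fy are the focal length expressed in pixels, i.e. the focal length divided by the pixel size, so converting to mm needs the physical pixel pitch (sensor width / image width in pixels). Calibration alone can't recover that; you'd normally take the sensor size from the camera's datasheet, which is an assumption here. OpenCV's `calibrationMatrixValues` does the same conversion if you pass the sensor size as `apertureWidth`/`apertureHeight`. For the screen-corner problem itself you may not need it, since tvec comes out in whatever units you used for the chessboard square size. A minimal sketch with a hypothetical helper:

```cpp
#include <cassert>
#include <cmath>

// Convert a focal length from pixels to millimetres:
// f_mm = f_px * (sensorWidthMm / imageWidthPx), where the ratio is the pixel pitch.
// sensorWidthMm is an assumed datasheet value, and imageWidthPx must be the
// resolution the camera was calibrated at (use the height for fy).
double focalPxToMm(double fPx, double sensorWidthMm, double imageWidthPx) {
    assert(imageWidthPx > 0);
    return fPx * sensorWidthMm / imageWidthPx;
}
```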