solvePnP large (~100) pixel re-projection error

Hi,

I have been trying to find the camera pose relative to an object frame, but I'm getting unstable results and large re-projection errors (100 pixels or more in total).

I know that the object points and image points are correct. The intrinsic parameters and distortion coefficients were obtained with OpenCV's calibrateCamera with a small re-projection error (0.5 pixel).

I have also tried CV_EPNP and solvePnPRansac; both return about the same results, or worse.

The code:

    cv::Mat intrinsic_matrix = (cv::Mat_<double>(3, 3) <<
        502.21, 0,      476.11,
        0,      502.69, 360.73,
        0,      0,      1);

    cv::Mat distortion_coeffs = (cv::Mat_<double>(1, 5) <<
        -3.2587021051876525e-01, 1.1137886872576558e-01, -8.0030372520954252e-04,
        1.4677531243862570e-03, -1.6824659875846807e-02);
    // Intrinsic matrix and distortion coefficients are read from a file

    vector<cv::Point3f> objectPoints;
    vector<cv::Point2f> imagePoints;

    // Load the 3D object points
    if (pcl::io::loadPCDFile<pcl::PointXYZ> ("lms1.pcd", *Lms1PointCloud) == -1)
    {
        PCL_ERROR ("Couldn't read file lms1.pcd \n");
        return (-1);
    }

    // Load the 2D image points (stored in the x and y fields of the cloud)
    if (pcl::io::loadPCDFile<pcl::PointXYZ> ("singleCamCloud.pcd", *SingleCamCloud) == -1)
    {
        PCL_ERROR ("Couldn't read file singleCamCloud.pcd \n");
        return (-1);
    }

    lms1PointCloud.points = Lms1PointCloud->points;
    singleCamCloud.points = SingleCamCloud->points;

    // Fill vectors objectPoints and imagePoints
    for (int i = 0; i < singleCamCloud.points.size(); i++)
    {
        imagePoints.push_back(cv::Point2f(singleCamCloud.points[i].x, singleCamCloud.points[i].y));
        objectPoints.push_back(cv::Point3f(lms1PointCloud.points[i].x, lms1PointCloud.points[i].y, lms1PointCloud.points[i].z));
    }

    cv::Mat rotation_vector;
    cv::Mat translation_vector;

    // No distortion coefficients are passed (cv::noArray())
    solvePnP(objectPoints, imagePoints, intrinsic_matrix, cv::noArray(), rotation_vector, translation_vector, false, CV_ITERATIVE);

    // Projection of objectPoints according to solvePnP
    cv::Mat test_image = cv::Mat::zeros( 720, 960, CV_8UC3 );
    vector<cv::Point2f> reprojectPoints;
    cv::projectPoints(objectPoints, rotation_vector, translation_vector, intrinsic_matrix, cv::noArray(), reprojectPoints);

    // Total re-projection error over all points
    float sum = 0.;
    sum = cv::norm(reprojectPoints, imagePoints);
    std::cout << "sum=" << sum << std::endl;

    // Draw projected points (red) and real image points (green)
    int myradius = 5;
    for (int i = 0; i < reprojectPoints.size(); i++)
    {
        cv::circle(test_image, cv::Point(reprojectPoints[i].x, reprojectPoints[i].y), myradius, cv::Scalar(0,0,255), -1, 8, 0);
        cv::circle(test_image, cv::Point(imagePoints[i].x, imagePoints[i].y), myradius, cv::Scalar(0,255,0), -1, 8, 0);
    }

    imwrite( "test_image.jpg", test_image );

Object points file and image points file (dropbox links).

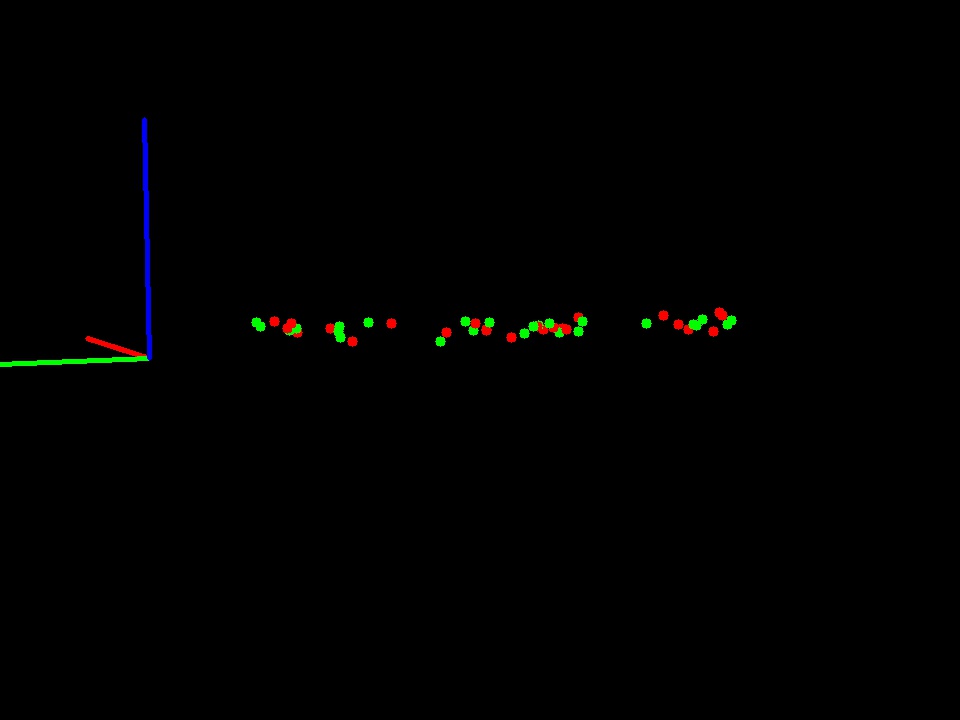

In these conditions I get a re-projection error of 94.43. The image shows the original image points (green) and the re-projected image points (red).
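One thing worth keeping in mind about the 94.43 figure: with its default `NORM_L2`, `cv::norm(reprojectPoints, imagePoints)` returns the square root of the summed squared coordinate differences over all points, so it is a total rather than a per-point value. The sketch below shows how that total relates to the per-point RMS, mean, and worst-case error. It uses a hypothetical `Pixel` struct and `reprojectionStats` helper (neither is part of OpenCV) so that it compiles on its own.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f, so the sketch has no OpenCV dependency.
struct Pixel { double x; double y; };

struct ReprojectionStats {
    double total_l2;  // sqrt of summed squared distances (same quantity as the default cv::norm(a, b))
    double rms;       // root-mean-square distance per point
    double mean;      // mean Euclidean distance per point
    double worst;     // largest single-point distance
};

// Hypothetical helper: compares projected and measured pixel positions pairwise.
ReprojectionStats reprojectionStats(const std::vector<Pixel>& projected,
                                    const std::vector<Pixel>& measured)
{
    assert(projected.size() == measured.size() && !projected.empty());

    double sum_sq = 0.0, sum_dist = 0.0, worst = 0.0;
    for (std::size_t i = 0; i < projected.size(); ++i) {
        const double dx = projected[i].x - measured[i].x;
        const double dy = projected[i].y - measured[i].y;
        const double sq = dx * dx + dy * dy;
        const double dist = std::sqrt(sq);
        sum_sq += sq;
        sum_dist += dist;
        if (dist > worst) worst = dist;
    }

    const double n = static_cast<double>(projected.size());
    return { std::sqrt(sum_sq), std::sqrt(sum_sq / n), sum_dist / n, worst };
}
```

For example, four points that are each 5 pixels off give a total of 10 but an RMS and mean of 5, so the total alone says little without the number of points.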

I'm also not sure how I should use the distortion coefficients. Since the image points were already obtained from an undistorted image, I opted not to use them in solvePnP and projectPoints. Is this correct? I don't think this is where the large re-projection error comes from, though, since the error doesn't change much whether I use them or not.
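To make the distortion question concrete: passing `cv::noArray()` as the distortion coefficients makes OpenCV assume zero distortion, i.e. the ideal pinhole model. The sketch below shows that model using the intrinsics from the code above. It assumes the point is already expressed in the camera frame and that the image was undistorted with the same intrinsic matrix; if a different new camera matrix was used for undistortion, that matrix would be the one to pass instead. `Intrinsics`, `CameraPoint`, `PixelUV` and `projectPinhole` are hypothetical names for illustration, not OpenCV types.

```cpp
#include <cassert>

// Hypothetical plain structs, used so the sketch compiles without OpenCV.
struct Intrinsics  { double fx, fy, cx, cy; };
struct CameraPoint { double x, y, z; };   // 3D point in the camera frame
struct PixelUV     { double u, v; };      // pixel coordinates

// Ideal pinhole projection with no lens distortion:
//   u = fx * X / Z + cx,   v = fy * Y / Z + cy
PixelUV projectPinhole(const Intrinsics& k, const CameraPoint& p)
{
    assert(p.z > 0.0);  // the point must be in front of the camera
    return { k.fx * p.x / p.z + k.cx,
             k.fy * p.y / p.z + k.cy };
}
```

With `Intrinsics k{502.21, 502.69, 476.11, 360.73}`, a point on the optical axis such as `{0.0, 0.0, 2.0}` lands on the principal point (476.11, 360.73).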

I can't seem to find an explanation for such a large error...

If you need any more details feel free to ask. Thanks in advance.

EDIT: An image to help visualize the problem. See comments below.

Green is the Z camera axis, orange with the frame overlapped is my reference frame ...