"Train dataset for temp stage can not be filled. Branch training terminated."

I am trying to detect logos in flat, two-dimensional images (advertisements).

Setup- Positive Image #1 and #2

Negative Images (600 random advertisements) Sample #1 and Sample #2

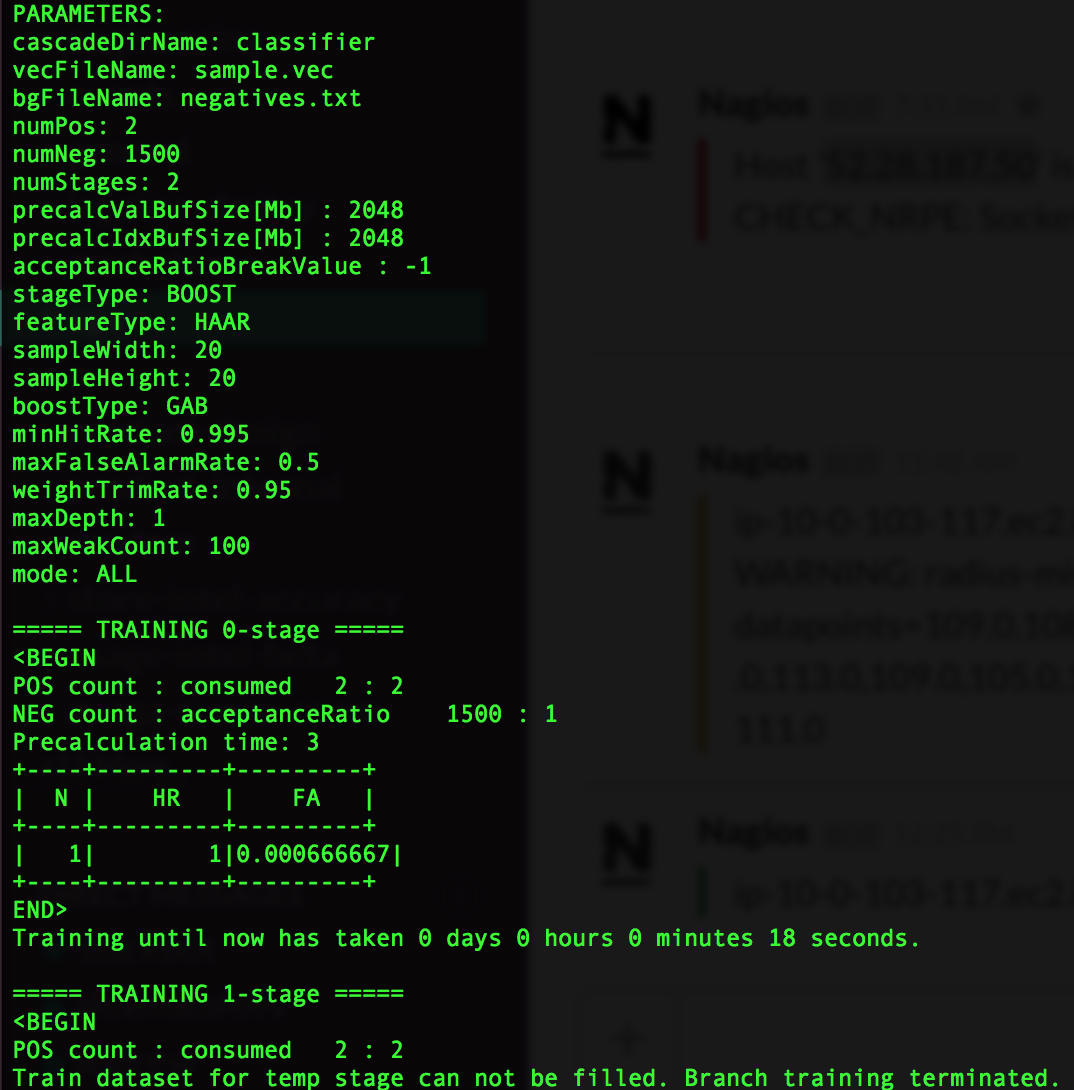

Running the command

`opencv_traincascade -data classifier -vec sample.vec -bg negatives.txt -numStages 2 -minHitRate 0.995 -maxFalseAlarmRate 0.5 -numPos 2 -numNeg 1500 -w 20 -h 20 -mode ALL -precalcValBufSize 2048 -precalcIdxBufSize 2048`

gives me this error:

"Train dataset for temp stage can not be filled. Branch training terminated."

Any help would be greatly appreciated!

Simply said, you have the maximum performance that you can get from using 2 positive samples. You need more training data for the training to continue.

Thanks, Steven, for helping! I only have 2 samples in there because I read this in the documentation:

"For example you may need only one positive sample for absolutely rigid object like an OpenCV logo"

Should I just make different sizes/rotations of the same logo?

Oh wow, I completely misread your training output. Getting the positive samples was no problem at all. The problem lies in your negative samples. I am guessing you have exactly 1500 samples at the model size and that's it? Keep in mind that -numNeg is the number of samples that must be wrongly classified by the previous stages in order to be valid as new negative samples for the next stage. This means that, for example, to create 3 stages you might need 2000 negative windows while -numNeg is only around 700. You get it?
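To make that arithmetic concrete, here is a rough Python sketch. It is only an estimate under a simplifying assumption: every earlier stage lets through exactly -maxFalseAlarmRate of the negative windows it sees (real stages often do better, which means even more windows are needed). The function name `windows_to_scan` is made up for illustration and is not part of OpenCV.

```python
import math


def windows_to_scan(num_neg: int, max_false_alarm: float, stage: int) -> int:
    """Estimate how many negative windows must be examined to collect
    num_neg samples that all earlier stages misclassify.

    stage is 0-based. Assumes (hypothetically) that each earlier stage
    passes exactly max_false_alarm of the negatives shown to it.
    """
    # Fraction of raw negative windows that survive all earlier stages.
    acceptance = max_false_alarm ** stage
    return math.ceil(num_neg / acceptance)


if __name__ == "__main__":
    # Values taken from the command in the question.
    for stage in range(3):
        print(f"stage {stage}: ~{windows_to_scan(1500, 0.5, stage)} windows")
```

With -numNeg 1500 and -maxFalseAlarmRate 0.5, the estimate doubles with every stage, so a negative pool that only just covers -numNeg runs dry as soon as the first stage is trained. Lowering -numNeg or adding more negative images both help.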

Thanks! That actually helped a lot. This is my result so far. Currently it can detect 3 out of the 4 images. Any thoughts on how I can improve? I'm using the commands here. I'm confused about why the algorithm still has trouble identifying the app logo when there are so many examples of the logo in the training set.

Well, it seems that you now need to play with the parameters. Please use the search button above to look up cascade classifier parameters. I think I have explained them over 1000 times by now.