Features2D example

Hi,

I have started writing an example about Features2D and matching, and I have some questions. First question: is it a good program (calls in the right order, graphical result, dynamicCast, ...)? Second question, about the create method: I use ORB::create and BRISK::create. Is it possible to write something like create("ORB")? Third question: the matches are saved to a file. On screen I can see

0 237 0 808.622

1 208 0 676.574

2 220 0 558.299

3 15 0 297.963

but in the file I get

<Matches>

0 237 0 1145710537 1 208 0 1143547076 2 220 0 1141609254 3 15 0

I don't understand. Do you get the same results?

Thanks for your help.

    #include <opencv2/opencv.hpp>
    #include <vector>
    #include <iostream>

    using namespace std;
    using namespace cv;

    int main(void)
    {
        vector<String> typeAlgoMatch;
        typeAlgoMatch.push_back("BruteForce");
        typeAlgoMatch.push_back("BruteForce-Hamming");
        typeAlgoMatch.push_back("BruteForce-Hamming(2)");
        vector<String> typeDesc;
        typeDesc.push_back("AKAZE");
        typeDesc.push_back("ORB");
        typeDesc.push_back("BRISK");
        String dataFolder("../data/");
        vector<String> fileName;
        fileName.push_back("basketball1.png");
        fileName.push_back("basketball2.png");
        Mat img1 = imread(dataFolder+fileName[0], IMREAD_GRAYSCALE);
        Mat img2 = imread(dataFolder+fileName[1], IMREAD_GRAYSCALE);
        Ptr<Feature2D> b;
        vector<double> desMethCmp; // one score per (descriptor, matcher) pair; declaration was missing
        vector<String>::iterator itDesc;
        // Descriptor loop
        for (itDesc = typeDesc.begin(); itDesc != typeDesc.end(); itDesc++){
            Ptr<DescriptorMatcher> descriptorMatcher;
            vector<DMatch> matches; /*<! Match between img and img2*/
            vector<KeyPoint> keyImg1; /*<! keypoint for img1 */
            vector<KeyPoint> keyImg2; /*<! keypoint for img2 */
            Mat descImg1, descImg2; /*<! Descriptor for img1 and img2 */
            vector<String>::iterator itMatcher = typeAlgoMatch.end();
            if (*itDesc == "AKAZE"){
                b = AKAZE::create();
            }
            else if (*itDesc == "ORB"){
                b = ORB::create();
            }
            else if (*itDesc == "BRISK"){
                b = BRISK::create();
            }
            try {
                // Two ways to do the same thing: detect + compute, or detectAndCompute
                b->detect(img1, keyImg1, Mat());
                b->compute(img1, keyImg1, descImg1);
                b->detectAndCompute(img2, Mat(),keyImg2, descImg2,false);
                // Match method loop
                for (itMatcher = typeAlgoMatch.begin(); itMatcher != typeAlgoMatch.end(); itMatcher++){
                    descriptorMatcher = DescriptorMatcher::create(*itMatcher);
                    descriptorMatcher->match(descImg1, descImg2, matches, Mat());
                    // Keep best matches only to have a nice drawing
                    Mat index;
                    int nbMatch = (int)matches.size();
                    Mat tab(nbMatch, 1, CV_32F);
                    for (int i = 0; i<nbMatch; i++)
                        tab.at<float>(i, 0) = matches[i].distance;
                    sortIdx(tab, index, SORT_EVERY_COLUMN + SORT_ASCENDING);
                    vector<DMatch> bestMatches; /*<! best match */
                    // Never read past the end when fewer than 30 matches are found
                    int nbBest = nbMatch < 30 ? nbMatch : 30;
                    for (int i = 0; i<nbBest; i++)
                        bestMatches.push_back(matches[index.at<int>(i, 0)]);
                    Mat result;
                    drawMatches(img1, keyImg1, img2, keyImg2, bestMatches, result);
                    namedWindow(*itDesc+": "+*itMatcher, WINDOW_AUTOSIZE);
                    imshow(*itDesc + ": " + *itMatcher, result);
                    FileStorage fs(*itDesc+"_"+*itMatcher+"_"+fileName[0]+"_"+fileName[1]+".xml", FileStorage::WRITE);
                    fs<<"Matches"<<matches;
                    vector<DMatch>::iterator it;
                    cout << "Index \tIndex \tdistance\n";
                    cout << "in img1\tin img2\n";
                    double cumSumDist2=0;
                    for (it = bestMatches.begin(); it != bestMatches.end(); it++)
                    {
                        cout << it->queryIdx << "\t" << it->trainIdx << "\t" << it->distance << "\n";
                        Point2d p=keyImg1[it->queryIdx].pt-keyImg2[it->trainIdx].pt;
                        // Accumulate the squared distance (the original overwrote it on each pass)
                        cumSumDist2+=p.x*p.x+p.y*p.y;
                    }
                    desMethCmp.push_back(cumSumDist2);
                    waitKey();
                }
            }
            catch (Exception& e){
                cout << "Feature : " << *itDesc << "\n";
                if (itMatcher != typeAlgoMatch.end())
                    cout << "Matcher : " << *itMatcher << "\n";
                cout<<e.msg<<endl;
            }
        }
        int i=0;
        cout << "\n\t";
        for (vector<String>::iterator itMatcher = typeAlgoMatch.begin(); itMatcher != typeAlgoMatch.end(); itMatcher++)
            cout<<*itMatcher<<"\t";
        cout << "\n";
        for (itDesc = typeDesc.begin(); itDesc != typeDesc.end(); itDesc++){
        cout ...

Does this version work for you instead of using dynamicCast?

@Eduardo Yes it does

In OpenCV 3.0 it is no longer possible to use something like (link here and here):

So your code is correct.
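On the third question (the distances in the file): the integers in the XML look like the raw 32-bit patterns of the float distances, written as integers. I have not traced why the writer stores them this way, so treat that as my reading of the numbers, not as documented behavior. A minimal sketch to check it, assuming a 32-bit IEEE-754 `float` (`bitsToFloat` is a helper name I made up, not an OpenCV function):

```cpp
#include <cstdint>
#include <cstring>

// Reinterpret a 32-bit integer as the float that has the same bit pattern.
// Assumes float is a 32-bit IEEE-754 value, which holds on common platforms.
inline float bitsToFloat(std::uint32_t bits)
{
    static_assert(sizeof(float) == sizeof(std::uint32_t), "float must be 32 bits wide");
    float value;
    std::memcpy(&value, &bits, sizeof(value)); // well-defined way to copy the bits
    return value;
}
```

With the values from the question, `bitsToFloat(1145710537u)` is about 808.62, `bitsToFloat(1143547076u)` about 676.57 and `bitsToFloat(1141609254u)` about 558.30, which are the distances printed on screen.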

Are you trying to add a sample? Good ;)

issue: http://code.opencv.org/issues/4308

@berak I have modified source file.

Good! It might also be better to write the XML files to your local folder, not to samples/data.

A few more comments:

@Eduardo About the two methods: it is only to show that there are two ways to do the same thing. About your last remark: I catch exceptions, and no exception is thrown on my computer; the methods can be called for all descriptors. Looking at the source of this method, maybe you are right that hamming = hamming(2); I don't know. In the result images I can see some differences between hamming and hamming(2) for all descriptors. Maybe the results differ for another reason. I have created a pull request for this sample; must I delete hamming(2)?

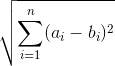

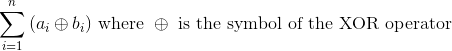
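To make the hamming vs hamming(2) question concrete, here is a small sketch of the two distances as I understand them: plain Hamming counts differing bits, while the Hamming(2) variant takes the bits in pairs and counts each differing pair once. The function names are made up for illustration and this is not OpenCV's implementation, only the idea behind it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Plain Hamming distance: number of bits that differ between two descriptors.
inline int hammingDistance(const std::vector<std::uint8_t>& a,
                           const std::vector<std::uint8_t>& b)
{
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        std::uint8_t x = static_cast<std::uint8_t>(a[i] ^ b[i]);
        while (x) {            // count the set bits of the XOR
            dist += x & 1;
            x >>= 1;
        }
    }
    return dist;
}

// Hamming(2)-style distance: bits are grouped in pairs, and a pair counts
// as one difference if either of its two bits differs.
inline int hamming2Distance(const std::vector<std::uint8_t>& a,
                            const std::vector<std::uint8_t>& b)
{
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const unsigned x = static_cast<unsigned>(a[i] ^ b[i]);
        for (int shift = 0; shift < 8; shift += 2) {
            if ((x >> shift) & 3u)  // any difference inside this 2-bit group
                ++dist;
        }
    }
    return dist;
}
```

For the single bytes `0x03` and `0x00`, the first function returns 2 and the second returns 1, so the two matchers can rank candidates differently on the same binary descriptors. That would be one possible reason for the differences seen in the result images.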

Some late comments after seeing the pull request. I still don't see the point of testing the different binary descriptors with all the matching methods. For me, it makes no sense to use a matching method other than `BruteForce-Hamming` with these binary descriptors, as we will always end up with an intrinsically wrong result. It will only confuse a novice user.

Another point: you have the cumulative distance (train/query point distance) for each matching method. How could you decide which one is the most appropriate? If you really want to show the possible matching methods, I think the best approach is to choose two images with a known homography and to show that the distance error between the train match point and the true corresponding point is bigger with the inappropriate matching methods.
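The homography-based check suggested above could be sketched roughly like this. It is plain C++ without OpenCV types so that it stays self-contained; `Point2`, `Homography`, `applyHomography` and `matchError` are hypothetical names, and the 3x3 matrix is assumed to be stored row-major and to map points of the first image into the second one.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Point2 { double x; double y; };

// Row-major 3x3 homography mapping image 1 coordinates to image 2 (assumption).
using Homography = std::array<double, 9>;

// Project a point of image 1 into image 2 using homogeneous coordinates.
inline Point2 applyHomography(const Homography& H, const Point2& p)
{
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(w != 0.0); // point must not map to infinity
    return { (H[0] * p.x + H[1] * p.y + H[2]) / w,
             (H[3] * p.x + H[4] * p.y + H[5]) / w };
}

// Euclidean distance between the matched (train) point and the position
// where the query point should land according to the known homography.
inline double matchError(const Homography& H, const Point2& query, const Point2& train)
{
    const Point2 expected = applyHomography(H, query);
    return std::hypot(train.x - expected.x, train.y - expected.y);
}
```

Summing or averaging `matchError` over the matches of each matcher would give a number that can actually be compared between matching methods, which the cumulative query/train distance alone does not provide.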