Thresholding + masking image erratic results

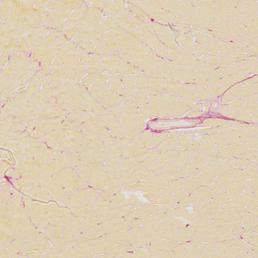

Hi! I'm trying to threshold + mask this image:

However, the code below produces erratic, seemingly random images.

I'm guessing it has something to do with the binary header imprinted at the beginning of the produced images; however, I'm not sure where it is coming from. Also, since the images change randomly, the header is to some extent unique for each image. (EDIT: To clarify: with the same code and the same input image, the output differs from one conversion to the next.)

<UPDATE>: This is the part of the code which errs:

Mask (`a`):

    [255 255 0 0]

Image (`b`):

    [200 150 100 50]

Result of `cv2.bitwise_and(b, b, mask=a)`:

    [[200]
     [150]
     [145]
     [  1]]

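Now I'd assume the operation for, e.g., the element at index 1 (150) is evaluated as follows. First the image is ANDed with itself:

$$150 \land 150 = 150 \qquad (\texttt{0b10010110} \land \texttt{0b10010110} = \texttt{0b10010110})$$

$$\Rightarrow \text{image} \land \text{image} = \text{image}$$

Then that result is ANDed with the mask:

$$150 \land 255 = 150 \qquad (\texttt{0b10010110} \land \texttt{0b11111111} = \texttt{0b10010110})$$

$$\Rightarrow \text{image} \land \text{image} \land \text{mask} = \text{image} \land \text{mask}$$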
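The per-element arithmetic above can be checked directly in Python, where `&` is the bitwise AND on integers and `format(x, '#010b')` prints the value as an 8-bit binary literal. This only verifies the expected model for one pixel; it does not call OpenCV:

```python
# Check the expected per-element result for the pixel at index 1 (value 150, mask 255).
image_px = 150
mask_px = 255

step1 = image_px & image_px  # image AND image
step2 = step1 & mask_px      # (image AND image) AND mask

print(format(image_px, '#010b'), format(step1, '#010b'))  # 0b10010110 0b10010110
print(format(step2, '#010b'))                             # 0b10010110
print(step1 == image_px, step2 == image_px)               # True True
```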

And that holds true. However, for the element at index 2 (100), where the mask is 0, I'd expect $100 \land 0 = 0$, but this is what I get from different function calls with identical parameters:

$$100 \land 0 = 184 \qquad (\texttt{0b01100100} \land \texttt{0b00000000} = \texttt{0b10111000})$$

and

$$100 \land 0 = 153 \qquad (\texttt{0b01100100} \land \texttt{0b00000000} = \texttt{0b10011001})$$

and

$$100 \land 0 = 49 \qquad (\texttt{0b01100100} \land \texttt{0b00000000} = \texttt{0b00110001})$$

What am I missing here?

</UPDATE>
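A likely explanation, going by the OpenCV documentation for `bitwise_and`: the mask does not take part in the AND at all. It only specifies which elements of the output array are changed, i.e. `dst(I) = src1(I) ∧ src2(I)` where `mask(I) ≠ 0`, and elements where the mask is 0 keep whatever `dst` already held. If no `dst` is passed and the freshly allocated buffer is not zero-filled (this may depend on the OpenCV version and its Python bindings), those elements contain leftover memory, which would fit values like 184, 153 and 49 that change from call to call. The sketch below models that documented rule in plain NumPy; `masked_and` is a hypothetical helper, not an OpenCV function. In OpenCV itself, passing a zeroed destination, e.g. `cv2.bitwise_and(b, b, dst=numpy.zeros_like(b), mask=a)`, should make the masked-out elements come out as 0.

```python
import numpy as np

def masked_and(src1, src2, mask, dst):
    """Hypothetical NumPy model of a masked bitwise AND:
    dst[i] = src1[i] & src2[i] where mask[i] != 0; all other entries of dst are left as they were."""
    out = dst.copy()
    selected = mask != 0
    out[selected] = src1[selected] & src2[selected]
    return out

a = np.array([255, 255, 0, 0], dtype=np.uint8)    # mask
b = np.array([200, 150, 100, 50], dtype=np.uint8)  # image

# Zero-initialised destination: masked-out elements come out as 0.
print(masked_and(b, b, a, np.zeros_like(b)))       # [200 150   0   0]

# Destination holding leftover values (simulated here with fixed numbers):
leftover = np.array([7, 7, 184, 49], dtype=np.uint8)
print(masked_and(b, b, a, leftover))               # [200 150 184  49]
```

The mask value never changes the pixel value here; it only decides whether the destination element is overwritten, which is why "100 ∧ 0" can appear to return anything.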

The input image is RGB (no alpha channel), and the thresholds are all positive.

Any insights are most welcome!

(EDIT: As per the comments, the full code is a bit verbose, so the relevant pieces are listed first and the full code follows below.)

    def threshold_image(self, image):
        # image = PIL RGB image
        assert image.size == (258, 258)
        assert len(image.getbands()) == 3
        image = numpy.array(image)  # Still RGB, not BGR.
        assert image.shape == (258, 258, 3)
        # Single-channel mask: 255 where all three channels lie within the bounds, 0 elsewhere
        mask = cv2.inRange(image,
                           numpy.array([0, 0, 0], dtype=numpy.uint8),
                           numpy.array([200, 250, 251], dtype=numpy.uint8))
        # The erratic values appear in the output pixels where mask == 0
        im = cv2.bitwise_and(image, image, mask=mask)
        assert im.shape == (258, 258, 3)
        return Image.fromarray(im)
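If the goal is simply to zero every pixel outside the bounds, the masking can also be done without depending on what `bitwise_and` leaves in the unmasked pixels. A minimal NumPy sketch, assuming the same inclusive bounds as the `cv2.inRange` call above (`threshold_array` is a hypothetical name; it accepts a NumPy array or a PIL RGB image via `numpy.asarray`, returns an array, and does not enforce the 258×258 size):

```python
import numpy as np

def threshold_array(image, lower=(0, 0, 0), upper=(200, 250, 251)):
    """Return a copy of an H x W x 3 uint8 RGB image with every pixel that has a channel
    outside [lower, upper] (inclusive) set to 0."""
    arr = np.asarray(image, dtype=np.uint8)  # H x W x 3, RGB order
    lo = np.array(lower, dtype=np.uint8)
    hi = np.array(upper, dtype=np.uint8)
    # Same test as cv2.inRange: a pixel is kept only if all three channels are in range.
    keep = np.all((arr >= lo) & (arr <= hi), axis=-1)  # H x W boolean mask
    # Broadcast the 2-D mask over the channel axis; rejected pixels become exactly 0.
    return np.where(keep[..., None], arr, 0).astype(np.uint8)

px = np.array([[[10, 20, 30], [255, 20, 30], [200, 250, 251]]], dtype=np.uint8)
print(threshold_array(px).tolist())
# [[[10, 20, 30], [0, 0, 0], [200, 250, 251]]]
```

Wrapping the result with `Image.fromarray(...)` gives back a PIL image, as `threshold_image` does. Because `numpy.where` builds a new array, no element is ever left uninitialised.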

Full code:

    from PIL import Image, ImageMath
    import cv2, numpy, math, os, copy

    A = 0
    B = 1
    C = 2
    MIN = 0
    MAX = 1
    U = 0
    L = 1

    class Thresholder():
        """Class for performing thresholding and/or conversions."""

        # _thresholds=[]
        # bounds=[[],[]]
        # conversion_factor = None
        # neg_test = False
        # pos_test = False

        def __init__(self, thresholds, from_cs="rgb", to_cs="rgb"):
            """Instantiates a new thresholding engine.

            Args:
                thresholds: 2d array of the form
                    [ [cs_val_min_a, cs_val_max_a],
                      [cs_val_min_b, cs_val_max_b],
                      [cs_val_min_c, cs_val_max_c] ]
                    ...where a, b and c are the channels of
                    the target (threshold) colorspace.
                    A negative value in either max or min of a channel
                    will trigger a NOT threshold (i.e. inverted).
                from_cs: Originating test set colorspace.
                to_cs: Colorspace of threshold values.

            Returns:
                A new Thresholder object.
            """
            self._thresholds = thresholds
            from_cs = from_cs.upper()
            to_cs = to_cs.upper()
            self.conversion_factor = self._check_cs_conversion(from_cs, to_cs)
            upper = (thresholds[A][MAX], thresholds[B][MAX], thresholds[C][MAX])
            lower = (thresholds[A][MIN], thresholds[B][MIN], thresholds[C][MIN])
            self.bounds = (upper, lower)
            upper = []
            lower = []
            self.inverts = []
            for i in range(3):
                u = self.bounds ...
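The docstring says a negative min or max turns a channel into a NOT threshold, but the code that fills `self.inverts` is cut off above. One possible reading, purely an assumption: the absolute values give the range, and a negative sign on either bound means "keep values outside the range". A hypothetical per-channel sketch (`channel_mask` and `combined_mask` are illustrative names, not part of the original class):

```python
import numpy as np

def channel_mask(channel, lo, hi):
    """ASSUMED semantics: keep values in [abs(lo), abs(hi)]; if lo or hi is negative,
    invert the test and keep values outside that range instead."""
    invert = lo < 0 or hi < 0
    lo, hi = abs(lo), abs(hi)
    inside = (channel >= lo) & (channel <= hi)
    return ~inside if invert else inside

def combined_mask(image, thresholds):
    """AND the per-channel masks of an H x W x 3 image.
    thresholds = [[min_a, max_a], [min_b, max_b], [min_c, max_c]], as in the docstring."""
    image = np.asarray(image)
    keep = np.ones(image.shape[:2], dtype=bool)
    for i, (lo, hi) in enumerate(thresholds):
        keep &= channel_mask(image[..., i], lo, hi)
    return keep

img = np.array([[[10, 100, 5], [150, 100, 5]]], dtype=np.uint8)
print(combined_mask(img, [[0, 100], [0, 255], [0, 255]]))   # [[ True False]]
print(combined_mask(img, [[0, -100], [0, 255], [0, 255]]))  # [[False  True]]
```

One consequence of this encoding is that a bound of 0 cannot carry a sign, so a range starting at 0 can only be inverted through its max. With all-positive thresholds, as in the question, the inversion path is never taken.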

Since this code is not standard OpenCV functionality but rather a function you copy-pasted from somewhere without knowing exactly what it does, I am afraid the support for this will be minimal. Why not contact the original author?

From my point of view, it is a problem of Mat types/channels/sizes.

@StevenPuttemans: Copy-pasted from my code, yes. I am the 'original author'. How is this not standard functionality? Not saying it definitely isn't, just wondering why it wouldn't be. I'll edit the post to show precisely which methods/operations are relevant, just to be safe :+) @thdrksdfthmn: Yes, dimensionality may be the cause, but where does it get the hiccups?

You can do a debugging with

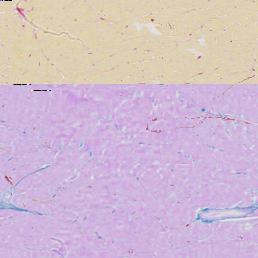

imshow()s to see each step. I have not used python :)@thdrksdfthmn: Thnx for tips. The corruption appears when applying the mask - at the bitwise_and. None image instances ahead of that are corrupt.

So, maybe the two images are not of the same type? (You have two bitwise_and() calls.)

image.shape == (258, 258, 3) and mask.shape == (258, 258). Since the mask is binary, its colorspace shouldn't matter, no?

No, the mask is always one channel. See this: you have two sources and a destination Mat.

Yes, the mask is one channel: mask.shape == (258, 258), which OpenCV treats as a single-channel (258, 258, 1) Mat. Sorry for not being clear. If I can supply any other helpful information, let me know. I've solved the issue for now by using a min/max operation on the image and a 3-channel version of the 1-channel mask mentioned above.
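The workaround described in the last comment can be sketched as follows, assuming "min/max operation" means an element-wise minimum with the mask (the exact code was not posted). Since an `inRange` mask holds only 0 or 255, `min(pixel, 255)` keeps the pixel and `min(pixel, 0)` zeroes it, and stacking the single-channel mask three times gives it the image's shape (`apply_mask_min` is a hypothetical name):

```python
import numpy as np

def apply_mask_min(image, mask):
    """image: H x W x 3 uint8; mask: H x W uint8 holding only 0 or 255 (as from cv2.inRange)."""
    mask3 = np.dstack([mask, mask, mask])  # H x W x 3 copy of the single-channel mask
    return np.minimum(image, mask3)        # 255 keeps the pixel value, 0 forces it to 0

img = np.array([[[200, 150, 100], [50, 60, 70]]], dtype=np.uint8)
mask = np.array([[255, 0]], dtype=np.uint8)
print(apply_mask_min(img, mask).tolist())  # [[[200, 150, 100], [0, 0, 0]]]
```

Unlike a masked `bitwise_and` without an explicit destination, every output element is computed here, so nothing depends on the previous contents of a buffer.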