Strange behavior of findFundamentalMat + RANSAC

I'm using findFundamentalMat with RANSAC to estimate the fundamental matrix of a stereo rig. However, the output is not stable.

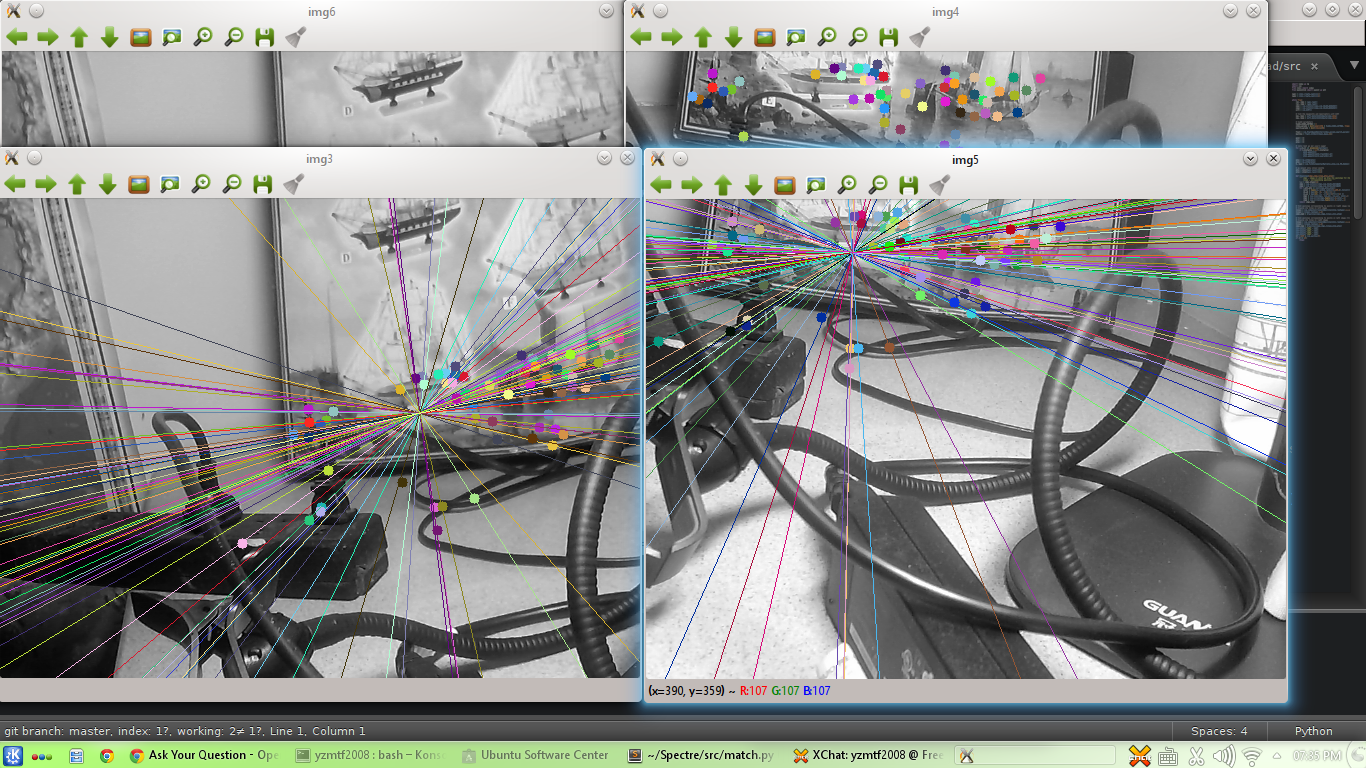

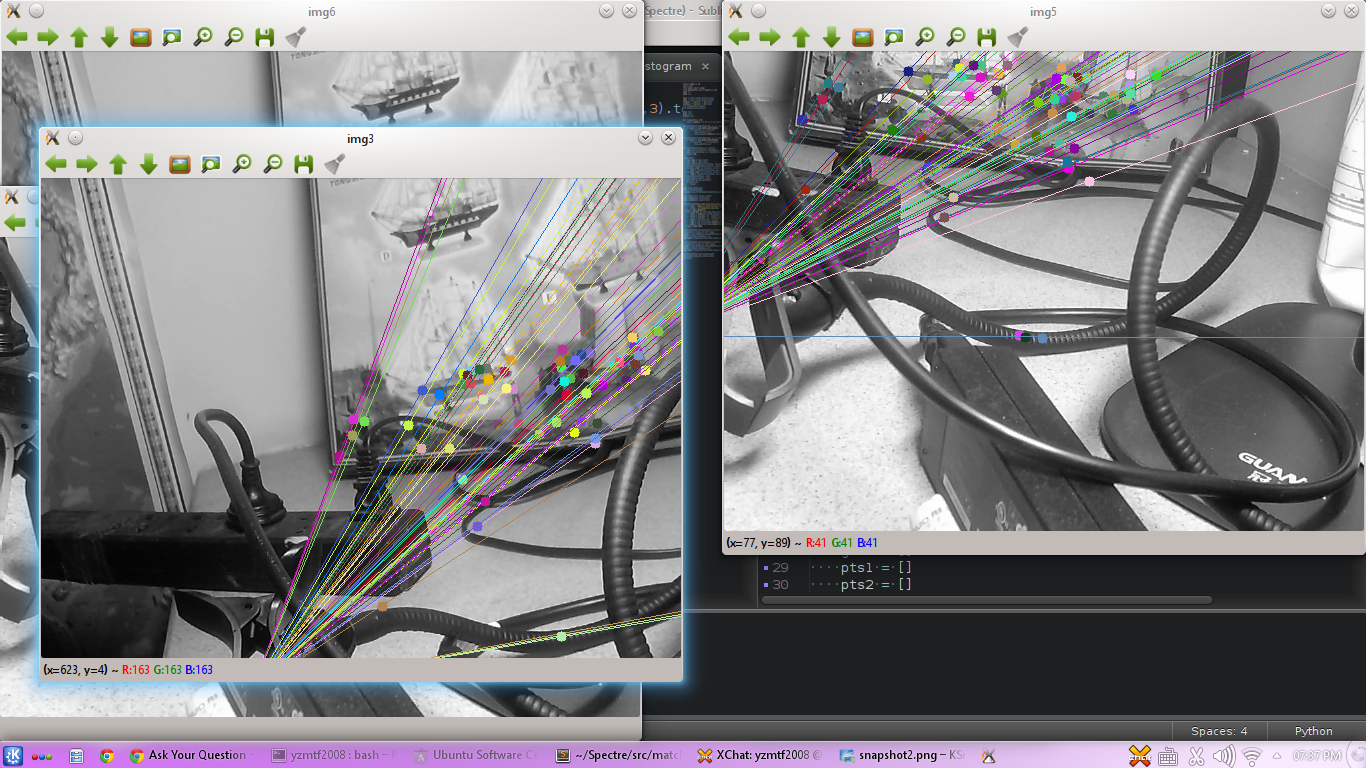

For every run on the same scene, it gives wildly different outputs. The epipoles drift rapidly, and they sometimes appear inside the image. However, when both epipoles are inside the image, the two points appear to be corresponding points.

Why is this happening?

[screenshot]

    import numpy as np
    import cv2
    from libcv import video
    from matplotlib import pyplot as plt

    cap1 = video.create_capture(1)
    cap2 = video.create_capture(2)

    while True:
        ret, img1 = cap1.read()
        ret, img2 = cap2.read()

        img1 = cv2.cvtColor(img1,cv2.COLOR_BGR2GRAY)
        img2 = cv2.cvtColor(img2,cv2.COLOR_BGR2GRAY)

        sift = cv2.SIFT()

        # find the keypoints and descriptors with SIFT
        kp1, des1 = sift.detectAndCompute(img1,None)
        kp2, des2 = sift.detectAndCompute(img2,None)

        # FLANN parameters
        FLANN_INDEX_KDTREE = 0
        index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)
        search_params = dict(checks=50)

        flann = cv2.FlannBasedMatcher(index_params,search_params)
        matches = flann.knnMatch(des1,des2,k=2)

        good = []
        pts1 = []
        pts2 = []

        # ratio test as per Lowe's paper
        for i,(m,n) in enumerate(matches):
            if m.distance < 0.6*n.distance:
                good.append(m)
                pts2.append(kp2[m.trainIdx].pt)
                pts1.append(kp1[m.queryIdx].pt)

        pts1 = np.int32(pts1)
        pts2 = np.int32(pts2)
        F, mask = cv2.findFundamentalMat(pts1,pts2,cv2.FM_RANSAC)

        # We select only inlier points
        pts1 = pts1[mask.ravel()==1]
        pts2 = pts2[mask.ravel()==1]

        def drawlines(img1,img2,lines,pts1,pts2):
            ''' img1 - image on which we draw the epilines for the points in img2
                lines - corresponding epilines '''
            r,c = img1.shape[:2]
            img1 = cv2.cvtColor(img1,cv2.COLOR_GRAY2BGR)
            img2 = cv2.cvtColor(img2,cv2.COLOR_GRAY2BGR)
            for r,pt1,pt2 in zip(lines,pts1,pts2):
                color = tuple(np.random.randint(0,255,3).tolist())
                x0,y0 = map(int, [0, -r[2]/r[1] ])
                x1,y1 = map(int, [c, -(r[2]+r[0]*c)/r[1] ])
                img1 = cv2.line(img1, (x0,y0), (x1,y1), color,1)
                img1 = cv2.circle(img1,tuple(pt1),5,color,-1)
                img2 = cv2.circle(img2,tuple(pt2),5,color,-1)
            return img1,img2

        # Find epilines corresponding to points in right image (second image) and
        # drawing its lines on left image
        lines1 = cv2.computeCorrespondEpilines(pts2.reshape(-1,1,2), 2,F)
        lines1 = lines1.reshape(-1,3)
        img5,img6 = drawlines(img1,img2,lines1,pts1,pts2)

        # Find epilines corresponding to points in left image (first image) and
        # drawing its lines on right image
        lines2 = cv2.computeCorrespondEpilines(pts1.reshape(-1,1,2), 1,F)
        lines2 = lines2.reshape(-1,3)
        img3,img4 = drawlines(img2,img1,lines2,pts2,pts1)

        cv2.imshow('img6', img6)
        cv2.imshow('img5', img5)
        cv2.imshow('img4', img4)
        cv2.imshow('img3', img3)

        ch = 0xFF & cv2.waitKey(5)
        if ch == 27:
            break

A small guess: clone the images before applying the SIFT operator. Right now you keep reassigning the same pointer on every iteration, so the operator may be working on data that is being overwritten, possibly leading to unexpected behaviour.
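A minimal sketch of what I mean, assuming the frames arrive as NumPy arrays (as they do with OpenCV's Python bindings). The helper name `snapshot` is made up for illustration and is not part of OpenCV; whether this actually cures the instability is untested.

```python
import numpy as np

def snapshot(frame):
    """Return an independent copy of a frame (hypothetical helper).

    The copy owns its own memory, so later writes to the capture
    buffer cannot change the data handed to the feature detector.
    """
    return np.array(frame, copy=True)

# Stand-in for a captured BGR frame; in the question's loop this
# would be the array returned by cap1.read().
frame = np.zeros((4, 4, 3), dtype=np.uint8)
safe = snapshot(frame)

# Simulate the capture buffer being overwritten by the next frame.
frame[:] = 255
```

In the loop from the question this would be used as `img1 = cv2.cvtColor(snapshot(img1), cv2.COLOR_BGR2GRAY)`, and likewise for `img2`.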