It can be done, and here are my results. This routine combines a simple threshold with my custom color-component-dependent threshold (Java code):

Code

```java
import org.opencv.core.Mat;

// initialization
// the code assumes a 4-channel, 8-bit RGBA Mat (CV_8UC4), e.g. a camera frame
Mat colorsub = ...

byte[] data = new byte[4];
byte[] black = new byte[] { 0, 0, 0, -1 };    // opaque black (-1 == 0xff)
byte[] white = new byte[] { -1, -1, -1, -1 }; // opaque white

int thresh = 100; // per-channel threshold for the first, simple test
int maxdev = 80;  // maximum total deviation from gray that still counts as text

// iterate over the image
for (int y = 0; y < colorsub.height(); y++) {
    for (int x = 0; x < colorsub.width(); x++) {
        // extract color component values as unsigned integers (byte is a signed type in Java)
        colorsub.get(y, x, data);
        int r = data[0] & 0xff;
        int g = data[1] & 0xff;
        int b = data[2] & 0xff;

        // do simple threshold first: any bright channel means background
        if (r > thresh || g > thresh || b > thresh) {
            colorsub.put(y, x, white);
            continue;
        }

        // adjust the blue component
        b = (int) (b * 1.3);

        // quantify how far the color components deviate from their mean
        // (gray pixels deviate little, colored pixels a lot)
        int mean = (r + g + b) / 3;
        int diffr = Math.abs(mean - r);
        int diffg = Math.abs(mean - g);
        int diffb = Math.abs(mean - b);

        if ((diffr + diffg + diffb) > maxdev)
            colorsub.put(y, x, white);
        else
            colorsub.put(y, x, black);
    }
}
```
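For experimenting without OpenCV (for example in a desktop unit test), the same per-pixel logic can be ported to an array of packed pixels such as those returned by `BufferedImage.getRGB`. This is a minimal sketch assuming the 0xAARRGGBB packing; the class and method names are hypothetical, and the constants are the values from the routine above.

```java
import java.util.Arrays;

/** Hypothetical pure-Java port of the color-dependent threshold above. */
public class ColorThreshold {
    static final int BLACK = 0xFF000000; // opaque black
    static final int WHITE = 0xFFFFFFFF; // opaque white

    /** Returns true if the pixel should become black (dark and nearly gray). */
    static boolean isTextPixel(int r, int g, int b, int thresh, int maxdev) {
        // simple threshold: any bright channel means background
        if (r > thresh || g > thresh || b > thresh) {
            return false;
        }
        // same crude blue correction as in the routine above
        b = (int) (b * 1.3);
        int mean = (r + g + b) / 3;
        int dev = Math.abs(mean - r) + Math.abs(mean - g) + Math.abs(mean - b);
        return dev <= maxdev;
    }

    /** Thresholds packed 0xAARRGGBB pixels into a new array. */
    static int[] apply(int[] argb, int thresh, int maxdev) {
        int[] out = new int[argb.length];
        for (int i = 0; i < argb.length; i++) {
            int p = argb[i];
            int r = (p >> 16) & 0xff;
            int g = (p >> 8) & 0xff;
            int b = p & 0xff;
            out[i] = isTextPixel(r, g, b, thresh, maxdev) ? BLACK : WHITE;
        }
        return out;
    }

    public static void main(String[] args) {
        int[] pixels = {
            0xFF3C3C37, // dark gray text
            0xFFF0F0F0, // light paper
            0xFF5A1414, // dark red
        };
        System.out.println(Arrays.toString(apply(pixels, 100, 80)));
    }
}
```

Keeping the decision in a separate `isTextPixel` method makes it easy to tune `thresh` and `maxdev` on individual sample colors before running the routine on whole frames.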

On my camera, I noticed that the blue channel is too low in dark-gray text, so I crudely boost it a little (by a factor of 1.3). This could be improved with a proper histogram correction.
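One simple form of histogram correction, instead of the fixed factor of 1.3, is a per-channel contrast stretch: each channel's observed minimum and maximum are mapped to 0 and 255, so a channel that is systematically too low is rescaled on its own. This is only a sketch of one possible correction, not what the routine above does; the class name and the choice of a plain min/max stretch (rather than, for example, percentile clipping or full histogram equalization) are assumptions.

```java
import java.util.Arrays;

/** Hypothetical per-channel min/max contrast stretch on one 8-bit channel. */
public class ChannelStretch {
    /** Linearly maps the channel's observed [min, max] range onto [0, 255]. */
    static int[] stretch(int[] channel) {
        int min = 255, max = 0;
        for (int v : channel) {
            min = Math.min(min, v);
            max = Math.max(max, v);
        }
        int[] out = new int[channel.length];
        if (max == min) {
            // flat channel: there is no range to stretch, copy it unchanged
            System.arraycopy(channel, 0, out, 0, channel.length);
            return out;
        }
        for (int i = 0; i < channel.length; i++) {
            out[i] = (channel[i] - min) * 255 / (max - min);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] blue = {40, 60, 80, 200}; // example blue-channel values
        System.out.println(Arrays.toString(stretch(blue)));
    }
}
```

Note that stretching changes absolute channel values, so the fixed thresholds (100 and 80) would likely need retuning after such a correction.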

Also, the first threshold operation is not really based on pixel intensity (a pixel becomes white as soon as any single channel exceeds 100), but I think the effect is negligible for this demonstration.
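To illustrate the difference, the any-channel test can be compared with an intensity-based one that combines the channels first. This sketch assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which are also the weights OpenCV documents for its RGB-to-gray conversion; the class and method names are hypothetical.

```java
/** Compares the any-channel pre-threshold with a luma-based alternative. */
public class PreThreshold {
    /** The test used in the routine above: any bright channel means background. */
    static boolean anyChannelBright(int r, int g, int b, int thresh) {
        return r > thresh || g > thresh || b > thresh;
    }

    /** An intensity-based alternative using BT.601 luma weights. */
    static boolean lumaBright(int r, int g, int b, int thresh) {
        double luma = 0.299 * r + 0.587 * g + 0.114 * b;
        return luma > thresh;
    }

    public static void main(String[] args) {
        // a saturated dark blue: one channel above 100, but low overall intensity
        int r = 20, g = 20, b = 150;
        System.out.println(anyChannelBright(r, g, b, 100));
        System.out.println(lumaBright(r, g, b, 100));
    }
}
```

The two tests only disagree for saturated colors like this one. For that pixel, the deviation test that follows in the routine would mark it white anyway (after the blue boost, its deviation score is 233, well above 80), which is consistent with the effect being small here.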

Results

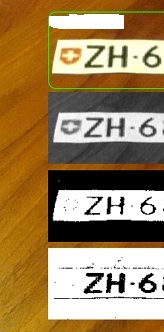

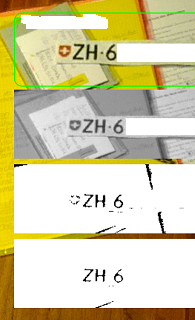

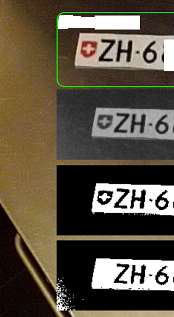

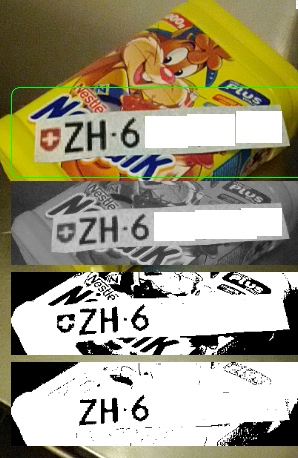

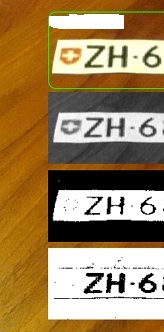

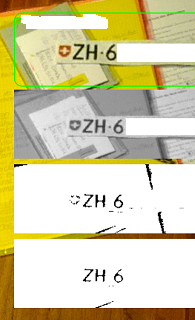

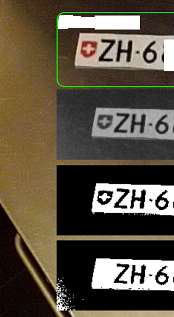

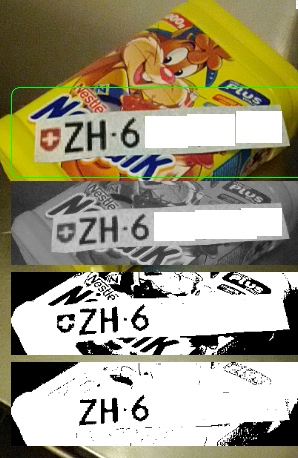

- The first image is the original image region detected by the classifier, shown with a green border.

- The second image is OpenCV's grayscale image, for reference.

- The third image is OpenCV's binary threshold.

- The fourth image is the output of my custom threshold function above.
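For comparison with the custom function, the baseline in the second and third images can be approximated in plain Java as a grayscale conversion followed by a binary threshold. This sketch assumes BT.601 weights for the gray conversion and `THRESH_BINARY` semantics (a value above `thresh` becomes `maxval`, otherwise 0). OpenCV's own 8-bit implementation may round individual pixels slightly differently, and the threshold value 100 is an assumption, since the post does not state the one used.

```java
/** Hypothetical pure-Java approximation of grayscale + binary threshold. */
public class BinaryBaseline {
    /** Gray value of one RGB pixel, rounded to the nearest integer. */
    static int gray(int r, int g, int b) {
        return (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    /** THRESH_BINARY semantics: src > thresh ? maxval : 0. */
    static int binary(int src, int thresh, int maxval) {
        return src > thresh ? maxval : 0;
    }

    public static void main(String[] args) {
        int text = gray(60, 60, 55);     // dark gray text
        int paper = gray(240, 240, 240); // light paper
        System.out.println(binary(text, 100, 255) + " " + binary(paper, 100, 255));
    }
}
```

Unlike the custom routine, this baseline only sees intensity, so a dark colored pixel and a dark gray pixel of the same brightness end up identical.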

On a regular desk. This doesn't look too bad.

On a sofa in low-light conditions. Here the threshold performs better, and I had to correct the values of my custom threshold after this one.

On a stack of paper. Fewer artifacts.

On the kitchen bar. Since the metal is gray, we obviously cannot filter it out.

I think this is the most interesting image: far fewer and smaller segments.

Conclusion

As with every thresholding algorithm, fine-tuning is paramount. Even on top of a thresholded image with finely tuned parameters, a color-dependent threshold can further improve the picture.
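The idea of refining an already thresholded image can be sketched as a second pass over a binary mask: a pixel stays black only if the plain threshold marked it black and its colors are close to gray. This is a minimal sketch under stated assumptions: the mask uses 0 for text and 255 for background, the colors are stored as consecutive r, g, b triples, the class name is hypothetical, and the blue boost from the routine above is omitted to keep the idea isolated.

```java
import java.util.Arrays;

/** Hypothetical refinement of an existing binary mask with a color test. */
public class MaskRefine {
    /**
     * binary[i] is 0 (text) or 255 (background) from any tuned threshold;
     * rgb holds r, g, b triples for the same pixels.
     * Black pixels whose colors deviate too much from gray become white.
     */
    static int[] refine(int[] binary, int[] rgb, int maxdev) {
        int[] out = binary.clone();
        for (int i = 0; i < binary.length; i++) {
            if (binary[i] != 0) continue; // already background
            int r = rgb[3 * i], g = rgb[3 * i + 1], b = rgb[3 * i + 2];
            int mean = (r + g + b) / 3;
            int dev = Math.abs(mean - r) + Math.abs(mean - g) + Math.abs(mean - b);
            if (dev > maxdev) out[i] = 255;
        }
        return out;
    }

    public static void main(String[] args) {
        int[] mask = {0, 0, 255};
        int[] rgb = {60, 60, 60, 10, 90, 20, 240, 240, 240};
        System.out.println(Arrays.toString(refine(mask, rgb, 80)));
    }
}
```

Because the second pass can only turn black pixels white, it never undoes the tuning of the first threshold for background areas.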

It also appears to be useful for removing inline emblems and pictures from the text, e.g. smileys or colored bullet points.
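The emblem-removal effect comes from the deviation score in the routine: gray pixels have channels close to their mean, while colored pixels do not. This sketch computes that score, including the same blue boost, for a few example colors; the colors and the class name are hypothetical illustrations, not measurements from the images above.

```java
/** Hypothetical illustration of the color-deviation score from the routine above. */
public class DeviationScore {
    /** Sum of absolute deviations of r, g and the boosted b from their mean. */
    static int score(int r, int g, int b) {
        b = (int) (b * 1.3); // same crude blue correction as in the routine
        int mean = (r + g + b) / 3;
        return Math.abs(mean - r) + Math.abs(mean - g) + Math.abs(mean - b);
    }

    public static void main(String[] args) {
        System.out.println(score(60, 60, 55)); // dark gray text
        System.out.println(score(90, 70, 10)); // dark yellow, e.g. a smiley in shadow
        System.out.println(score(10, 90, 20)); // dark green bullet point
    }
}
```

With `maxdev = 80`, the gray text pixel (score 14) stays black, while the two colored pixels (scores 90 and 96) are turned white.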

Maybe the information in this post will be useful to someone else.