Projecting a point cloud onto the image plane to estimate camera pose

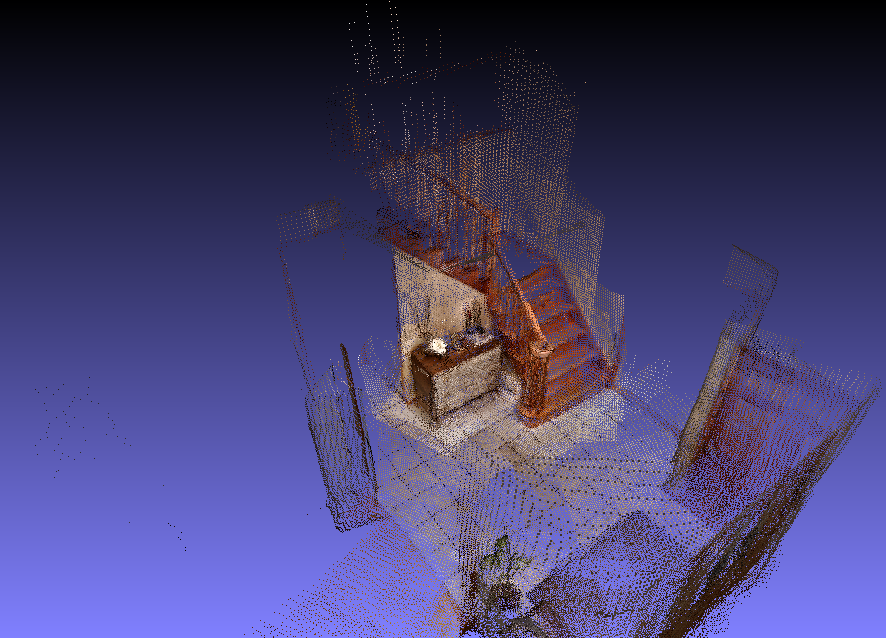

I am working on registering an image against a point cloud of a scene. I have an image from a camera, a 3D point cloud of the scene, and camera calibration data (i.e. the focal length). The image and the point cloud cover the same space. Here is a snapshot of my scene point cloud

and the query image:

From my previous question here, I have learnt that OpenCV's solvePnP method would work in this case, but there is no obvious way to find robust correspondences between the query image and the scene point cloud.

My idea now is to project the point cloud onto the image plane, match keypoints computed on the resulting image against those computed on the query image, and pass the robust point correspondences to solvePnP to get the camera pose. I have found here a way to project a point cloud onto the image plane.
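As a minimal sketch of the projection step, assuming a distortion-free pinhole camera and a known world-to-camera pose (R, t), each 3D point is transformed into camera coordinates and then divided by its depth. The helper name project_points and the intrinsic parameters fx, fy, cx, cy are illustrative assumptions; with only a focal length available, fx = fy = f (in pixels) with the principal point at the image centre is a common approximation. Plain Python keeps the arithmetic visible; OpenCV's cv2.projectPoints does the same job on arrays and also handles lens distortion.

```python
def project_points(points, R, t, fx, fy, cx, cy):
    """Project 3D scene points to pixel coordinates with a pinhole camera.

    points: list of (X, Y, Z) scene coordinates
    R: 3x3 rotation as a list of rows, t: (tx, ty, tz); together they map
       scene coordinates into camera coordinates (assumed convention)
    Returns one (u, v, depth) tuple per point, or None if the point is
    behind the camera.
    """
    projected = []
    for X, Y, Z in points:
        # Scene -> camera coordinates: Xc = R * X + t
        xc = R[0][0] * X + R[0][1] * Y + R[0][2] * Z + t[0]
        yc = R[1][0] * X + R[1][1] * Y + R[1][2] * Z + t[1]
        zc = R[2][0] * X + R[2][1] * Y + R[2][2] * Z + t[2]
        if zc <= 0:
            projected.append(None)  # behind the camera, cannot be seen
            continue
        # Perspective division, then apply the intrinsics (no distortion)
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        projected.append((u, v, zc))
    return projected


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
pts = [(0.0, 0.0, 2.0), (0.5, -0.25, 1.0), (0.0, 0.0, -1.0)]
print(project_points(pts, identity, (0, 0, 0), 500, 500, 320, 240))
```

Points behind the camera come back as None. The depth is kept because a rendering step needs it to decide which point is visible when several land on the same pixel.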

My question is: could this work, and is it possible to preserve the transformation between the resulting scene image and the original point cloud?
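One way to keep the link between a rendered image and the original cloud, sketched here under the assumption that a pinhole projection has already produced (u, v, depth) per point, is to store for every pixel the index of the nearest 3D point that landed on it (a z-buffer plus an index map). A keypoint detected at pixel (u, v) of the rendered image can then be traced back to points[index_map[v * width + u]], which is exactly the 2D-3D pair solvePnP needs. The function name render_index_map and the row-major list layout are hypothetical choices for this sketch.

```python
def render_index_map(projected, width, height):
    """Rasterize projected points with a z-buffer and remember which point won.

    projected: list of (u, v, depth) or None, one entry per 3D point.
    Returns (depth_map, index_map), both row-major lists of length
    width * height. index_map[v * width + u] is the index of the nearest
    3D point that landed on pixel (u, v), or -1 if none did.
    """
    inf = float("inf")
    depth_map = [inf] * (width * height)
    index_map = [-1] * (width * height)
    for i, p in enumerate(projected):
        if p is None:
            continue
        # Snap the sub-pixel position to the nearest pixel
        u, v = int(round(p[0])), int(round(p[1]))
        # Pixel indices are 0-based: valid columns 0..width-1, rows 0..height-1
        if not (0 <= u < width and 0 <= v < height):
            continue
        k = v * width + u
        # z-buffer test: keep only the closest point per pixel
        if p[2] < depth_map[k]:
            depth_map[k] = p[2]
            index_map[k] = i
    return depth_map, index_map
```

Pixels whose index is -1 have no 3D point behind them, so keypoints detected there have to be discarded. The bounds check also matters: writing outside the image buffer is a typical source of crashes in hand-written projection code.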

to speed this up a bit, do you have any other means to render the cloud to an image, like MeshLab, Blender, etc.?

Do you have point cloud information with intensity (pcl::PointXYZI) or RGB (e.g. pcl::PointXYZRGB) data? If you have at least intensity information, you should indeed be able to:

- use solvePnP to estimate the relative transformation ([R | t]) between the color camera and the depth camera (the 2D points come from the color camera, the 3D points from the point cloud).

Without at least intensity information for the point cloud, the only information the image and the point cloud have in common should be object contours, since the point cloud only encodes the spatial structure of the scene.
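For reference, this is the standard pinhole model (ignoring lens distortion) that solvePnP inverts. A scene point (X, Y, Z) maps to the pixel (u, v), where s is the point's depth in camera coordinates and K holds the intrinsics; given enough 2D-3D pairs and K, solvePnP solves for R and t:

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \,[R \mid t] \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$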

Keypoints would not be useful here because they need texture information, and your point cloud doesn't have any (when you project the raw point cloud onto the image plane, the pixel intensity is simply the depth value).
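To illustrate, here is a small sketch of what a rendered raw point cloud looks like as an 8-bit image, assuming a row-major depth map with float('inf') for empty pixels (depth_to_gray is a hypothetical helper). The gray level is nothing but rescaled depth, so a keypoint detector run on it responds to depth discontinuities, not to the surface texture visible in the query photo.

```python
def depth_to_gray(depth_map):
    """Convert a depth map to 8-bit gray levels: nearest point -> 255,
    farthest point -> 1, empty pixel (float('inf')) -> 0."""
    inf = float("inf")
    valid = [d for d in depth_map if d != inf]
    if not valid:
        return [0] * len(depth_map)
    near, far = min(valid), max(valid)
    # Avoid dividing by zero; if all depths are equal, every point maps to 1
    span = (far - near) or 1.0
    gray = []
    for d in depth_map:
        if d == inf:
            gray.append(0)  # no point projected here: a "hole"
        else:
            # Linear rescale of depth only; no colour or texture is involved
            gray.append(1 + round(254 * (far - d) / span))
    return gray


print(depth_to_gray([2.0, float("inf"), 4.0, 3.0]))
```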

@Eduardo, see the other question for a better description of the point cloud

@berak I have MeshLab. Is it possible to render the cloud to an image using MeshLab?

file -> save snapshot

just try and add it to your question along with your other 2d image

in the meantime, we can work out a better way to read your ply into opencv

I added a snapshot of my scene point cloud and other 2d image.

^^ well done, thanks ;)

unfortunately, this already shows some problems:

the 3d point cloud contains far more than your 2d image, e.g. the back wall. this might even obscure the view you have in 2d (you could probably move the camera to the viewpoint your 2d image was taken from, but then you have one more transformation in the way)

it is probably far too sparse to be rendered into an image useful for 2d feature matching (yea, you could try to use larger points for rendering, but hmmm, a lot of "holes" in there)
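The "larger points" idea can be sketched as splatting: instead of writing one pixel per projected point, each point fills a small square, and a z-buffer keeps the nearest point wherever squares overlap. The function splat_depth and its radius parameter are hypothetical, and the input is assumed to be (u, v, depth) tuples from a pinhole projection.

```python
def splat_depth(projected, width, height, radius=1):
    """Render each projected point as a (2*radius+1) x (2*radius+1) square.

    projected: list of (u, v, depth) or None.
    Returns a row-major depth map with float('inf') where nothing was
    drawn; where squares overlap, the nearer point wins.
    """
    inf = float("inf")
    depth = [inf] * (width * height)
    for p in projected:
        if p is None:
            continue
        cu, cv = int(round(p[0])), int(round(p[1]))
        # Clip the square to the image so indices stay inside 0..size-1
        for v in range(max(0, cv - radius), min(height, cv + radius + 1)):
            for u in range(max(0, cu - radius), min(width, cu + radius + 1)):
                k = v * width + u
                if p[2] < depth[k]:
                    depth[k] = p[2]
    return depth
```

A larger radius closes more holes, but it also fattens object edges and smears fine structure, which can create corners and edges that do not exist in the query photo.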

even if you can read in the point cloud from opencv, and do your own projection / rendering -- the problems will be the same.

btw, that's a real nice cloud, how did you acquire that ?

and btw, what's the purpose of all of this ?

Thank you for the answer. It's a point cloud reconstructed from photos taken with a phone camera. My task is to recover the pose of the camera that captured the query image. I can ask for another scene cloud in OBJ format if it helps. Maybe I could use the PCL library to obtain an image projection of the point cloud? Or something else?

so, some "structure from motion" approach ? tell us more, this is important (or at least, interesting) !

sure, PCL has support for rendering images (via VTK). also, opencv_contrib has a viz (also VTK-based) and an ovis (Ogre-based) module to render point clouds (but i never used either)

I think so. That's all I can tell. The project is about reconstructing a 3D point cloud of a scene from photos taken with a phone camera. I need to estimate the pose from which the query image was taken. Can my approach work, or is there a better solution to this problem?

I also tried this method to project an unorganized point cloud to an image. However, it failed with a segmentation fault.

probably because your point cloud is in a different format (normals, colors, etc.)

you can't simply copy/paste SO answers without fact checking or doing any serious research on your own here

both answers to the SO question are simply wrong! (using 1 as the 1st index is bs, indices start at 0)

Thank you. I see. So is there a way to effectively match the query image with the point cloud of scene?

i'm somehow smelling an XY problem here.

how are those 2 things connected ?

sorry, but this seems to be the major problem, now.

What do you mean? Is it more related to images?

Ok. The scene point cloud is actually a reconstruction of the scene from multiple photos taken with a phone camera (a panoramic view). I am given a query image and the full reconstructed point cloud of the scene. My task is to estimate the pose of the query image (i.e. localize it) in this point cloud. I hope it's clear now.

https://en.wikipedia.org/wiki/XY_problem

but yea, ok, i'm wrong, then ;)

Ok, I see. Btw, I will get a dense point cloud of the scene, so no worries about holes. But what about projecting the point cloud to a 2D image? Is there a simple way to match the query image to the scene point cloud?

sad as it is, NO, it's anything but simple.

maybe you can try the solvePnP() approach again, with a little cheating, like manually marking 3d corners (e.g. from the staircase) in meshlab, and corresponding 2d points in your image, just "to get the feel of it" ? a dozen points should be enough
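Once a dozen 3D-2D pairs have been marked and solvePnP has returned a pose, a quick sanity check is the reprojection error: project the marked 3D points with the estimated pose and measure how far they land from the marked 2D points. The sketch assumes R is a 3x3 rotation (for example obtained from solvePnP's rvec via cv2.Rodrigues), t is the translation, and the camera is a distortion-free pinhole; reprojection_rmse is a hypothetical helper name.

```python
import math


def reprojection_rmse(points_3d, points_2d, R, t, fx, fy, cx, cy):
    """Root-mean-square pixel distance between marked 2D points and the
    pinhole reprojection of their 3D counterparts under pose (R, t)."""
    if not points_3d or len(points_3d) != len(points_2d):
        raise ValueError("need the same non-zero number of 3D and 2D points")
    total = 0.0
    for (X, Y, Z), (u_obs, v_obs) in zip(points_3d, points_2d):
        # Scene -> camera coordinates
        xc = R[0][0] * X + R[0][1] * Y + R[0][2] * Z + t[0]
        yc = R[1][0] * X + R[1][1] * Y + R[1][2] * Z + t[1]
        zc = R[2][0] * X + R[2][1] * Y + R[2][2] * Z + t[2]
        # Camera coordinates -> pixels (no lens distortion)
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(total / len(points_3d))
```

A single large residual usually points to a mislabeled pair; a large error on every point suggests wrong intrinsics (for example, a focal length in millimetres where pixels are expected).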

Ok. I will try this. But this approach will not be automatic: it will require marking points manually every time we get a new query image.

I have another idea: match the query image against images of the scene with known poses, and then use the solvePnP method. This approach is discussed here. Would it work?
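A sketch of how that idea turns into solvePnP input, assuming the reconstruction exports, for each reference image, which keypoints were triangulated into which 3D points (structure-from-motion pipelines generally keep such tracks, but the exact export format is an assumption here): match query keypoints to reference keypoints in 2D, then replace each matched reference keypoint by its 3D point. The function correspondences_from_matches and its argument layout are hypothetical.

```python
def correspondences_from_matches(matches, query_kps, ref_kp_to_point, points_3d):
    """Turn query<->reference 2D matches into 2D-3D pairs for solvePnP.

    matches: list of (query_idx, ref_idx) keypoint index pairs
             (e.g. from descriptor matching plus a ratio test).
    query_kps: list of (u, v) keypoint positions in the query image.
    ref_kp_to_point: dict mapping a reference keypoint index to the index
                     of the 3D point it observes.
    points_3d: list of (X, Y, Z) scene points.
    Returns (object_points, image_points) as two parallel lists.
    """
    object_points, image_points = [], []
    seen = set()
    for q, r in matches:
        pid = ref_kp_to_point.get(r)
        if pid is None or pid in seen:
            # Reference keypoint was never triangulated, or this 3D point
            # already has a match (the first one is kept)
            continue
        seen.add(pid)
        object_points.append(points_3d[pid])
        image_points.append(query_kps[q])
    return object_points, image_points
```

Because descriptor matches always contain some outliers, the resulting pairs are better fed to cv2.solvePnPRansac than to plain cv2.solvePnP.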

@Eduardo Thank you for the algorithm! How can I project the point cloud using an intrinsic matrix, and what data should I pass to the solvePnP method in the third step? I see that solvePnP takes point correspondences between an image and a point cloud.
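A minimal sketch of both parts, assuming the focal length is already expressed in pixels, pixels are square, and the principal point sits at the image centre (the calibration data mentioned above does not guarantee any of this). solvePnP expects objectPoints as an N x 3 array of scene coordinates, imagePoints as the matching N x 2 array of pixel positions (row i of one corresponds to row i of the other), the 3x3 camera matrix K, and distortion coefficients. With the default SOLVEPNP_ITERATIVE flag, non-planar points need at least 6 correspondences (planar ones at least 4). The helper names camera_matrix and estimate_pose are hypothetical; NumPy and OpenCV are imported only inside estimate_pose.

```python
def camera_matrix(focal_px, width, height):
    """3x3 intrinsic matrix K, assuming fx = fy = focal_px and the
    principal point at the image centre."""
    return [[focal_px, 0.0, width / 2.0],
            [0.0, focal_px, height / 2.0],
            [0.0, 0.0, 1.0]]


def estimate_pose(object_points, image_points, K):
    """Estimate the camera pose with OpenCV's solvePnP.

    object_points: N x 3 scene coordinates, image_points: N x 2 pixels.
    Returns (R, tvec) mapping scene coordinates to camera coordinates.
    """
    import numpy as np  # imported lazily so camera_matrix works without them
    import cv2

    obj = np.asarray(object_points, dtype=np.float64).reshape(-1, 3)
    img = np.asarray(image_points, dtype=np.float64).reshape(-1, 2)
    dist = np.zeros(5)  # assumption: no lens distortion
    ok, rvec, tvec = cv2.solvePnP(obj, img, np.asarray(K, dtype=np.float64), dist)
    if not ok:
        raise RuntimeError("solvePnP did not find a pose")
    R, _ = cv2.Rodrigues(rvec)  # rotation vector -> 3x3 rotation matrix
    return R, tvec


print(camera_matrix(500.0, 640, 480))
```

The returned R and tvec map scene coordinates into camera coordinates, so the camera centre in the scene frame is -R^T t. If the focal length is given in millimetres, it can be converted with f_px = f_mm * image_width_px / sensor_width_mm.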