Why does cv::imencode give a similar output buffer size for bgr8 and mono8 images?

Hello

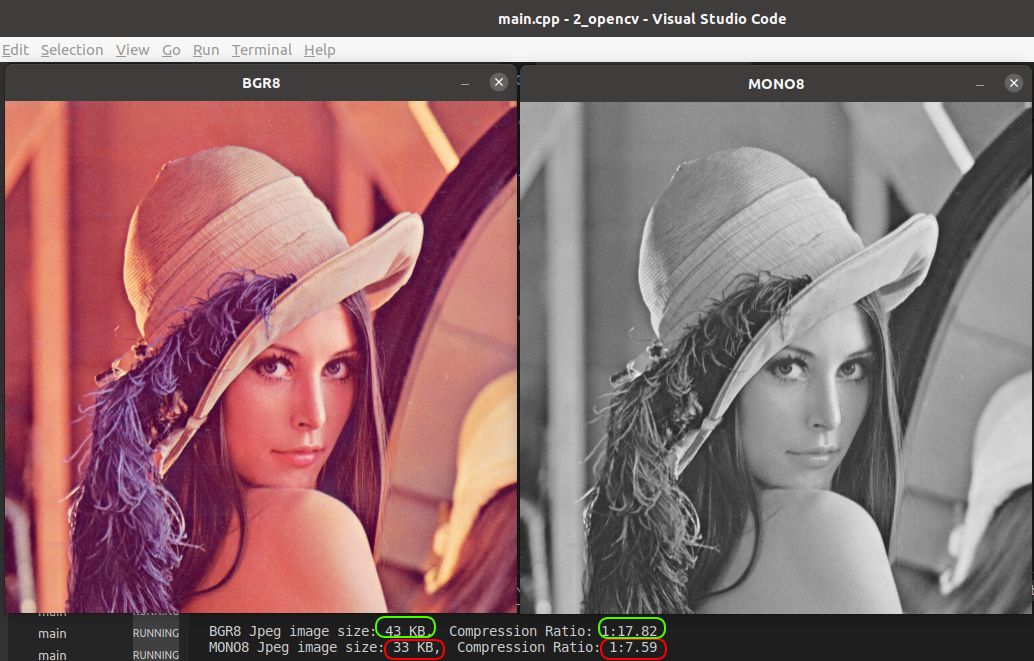

I measure 4.1 MB/s at 50 fps, 1280x720, bgr8, and 3.7 MB/s at 50 fps, 1280x720, mono8.

Both tests were performed with the same camera looking at the same scene under the same ambient light conditions.

I was expecting roughly 3 times lower bandwidth consumption for grayscale.

    jai@jai:~$ opencv_version
    4.2.0
    jai@jai:~$ opencv_version -v | grep jpeg
    JPEG: /usr/lib/x86_64-linux-gnu/libjpeg.so (ver 80)
    jai@jai:~$ ldconfig -p | grep jpeg
    libmjpegutils-2.1.so.0 (libc6,x86-64) => /lib/x86_64-linux-gnu/libmjpegutils-2.1.so.0
    libjpegxr.so.0 (libc6,x86-64) => /lib/x86_64-linux-gnu/libjpegxr.so.0
    libjpeg.so.8 (libc6,x86-64) => /lib/x86_64-linux-gnu/libjpeg.so.8
    libjpeg.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libjpeg.so
    libgdcmjpeg16.so.3.0 (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg16.so.3.0
    libgdcmjpeg16.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg16.so
    libgdcmjpeg12.so.3.0 (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg12.so.3.0
    libgdcmjpeg12.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg12.so
    libgdcmjpeg8.so.3.0 (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg8.so.3.0
    libgdcmjpeg8.so (libc6,x86-64) => /lib/x86_64-linux-gnu/libgdcmjpeg8.so
    jai@jai:~$

FYI: I am using the ros::compressed_image_transport plugin. I checked with both OpenCV 4 and OpenCV 3.4.9.

I tried the same thing with a simple C++ program and found the same pattern again. Below is my simple C++ program and its output.

    #include <cstdio>
    #include <iostream>
    #include <vector>

    #include <opencv2/core.hpp>
    #include <opencv2/highgui.hpp>   // imshow, waitKey
    #include <opencv2/imgcodecs.hpp> // imread, imencode, imwrite

    using namespace cv;
    using namespace std;

    int main()
    {
        // Load the same file twice: once as 3-channel BGR, once as 1-channel grayscale.
        Mat im     = imread( "lenna.png", IMREAD_COLOR );
        Mat imGray = imread( "lenna.png", IMREAD_GRAYSCALE );

        if( im.empty() || imGray.empty() ) // Check for invalid input
        {
            cout << "Could not open or find the image" << std::endl;
            return -1;
        }

        // Encoder parameters are flat (key, value) pairs, so keep the vector length even.
        std::vector<int> params = {
            cv::IMWRITE_JPEG_QUALITY,      80,
            cv::IMWRITE_JPEG_PROGRESSIVE,  0,
            cv::IMWRITE_JPEG_OPTIMIZE,     0,
            cv::IMWRITE_JPEG_RST_INTERVAL, 0
        };

        std::vector<uchar> buffer, bufferGray;

        if (cv::imencode(".jpeg", im, buffer, params)) {
            // Raw size in bytes divided by encoded size in bytes.
            float cRatio = (float)(im.rows * im.cols * im.elemSize())
                           / (float)buffer.size();
            printf("BGR8 Jpeg image size: %zu KB, Compression Ratio: 1:%.2f\n", buffer.size()/1024, cRatio);
            imwrite("im.jpg", im, params);
        }
        else
        {
            printf("cv::imencode (jpeg) failed on bgr8 image\n");
        }

        if (cv::imencode(".jpeg", imGray, bufferGray, params)) {
            // elemSize() is 1 for mono8, so the raw size is a third of the BGR case.
            float cRatio = (float)(imGray.rows * imGray.cols * imGray.elemSize())
                           / (float)bufferGray.size();
            printf("MONO8 Jpeg image size: %zu KB, Compression Ratio: 1:%.2f\n\n", bufferGray.size()/1024, cRatio);
            imwrite("imGray.jpg", imGray, params);
        }
        else
        {
            printf("cv::imencode (jpeg) failed on mono8 image\n");
        }

        imshow( "BGR8", im );
        imshow( "MONO8", imGray );
        waitKey(0);

        return 0;
    }

My concern is the low compression ratio for gray-scale.

Do you expect us to know what that is? (Or that anyone here can reproduce your findings?)

Maybe it's a good idea to write a simple test program that just loads a single image and saves it again, and show it to us here.

Thanks @berak, I followed your suggestion and added a simple program to reproduce my findings (appended to my question).