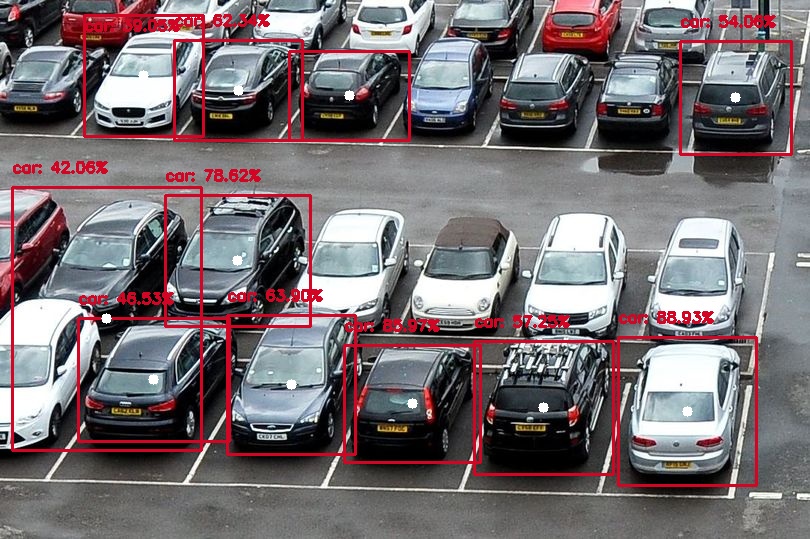

How to get the number of centroids in a rectangle? [SOLVED]

My current `if` statement checks whether the centroid coordinates (x, y) are inside the red rectangle and prints those coordinates. Instead of getting the coordinates of each centroid inside the red rectangle, how can I get the total number of centroids inside it?

My while loop:

```python
import cv2
import imutils
import numpy as np

# vs, net, CLASSES, COLORS, args, color and box (the red rectangle,
# with .top and .bottom corner points) are defined earlier in the script
while True:
    frame = vs.read()
    frame = imutils.resize(frame, width=720)

    # Draw the red rectangle
    cv2.rectangle(frame, box.top, box.bottom, color, 2)

    (h, w) = frame.shape[:2]

    # Run the detector on a 300x300 input blob
    blob = cv2.dnn.blobFromImage(cv2.resize(frame, (300, 300)), 0.007843, (300, 300), 127.5)
    net.setInput(blob)
    detections = net.forward()

    for i in np.arange(0, detections.shape[2]):
        confidence = detections[0, 0, i, 2]

        if confidence > args["confidence"]:
            idx = int(detections[0, 0, i, 1])

            # Only keep cars
            if CLASSES[idx] != "car":
                continue

            # Use a separate name so the red rectangle `box` is not overwritten
            det_box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
            (startX, startY, endX, endY) = det_box.astype("int")

            label = "{}: {:.2f}%".format(CLASSES[idx], confidence * 100)
            cv2.rectangle(frame, (startX, startY), (endX, endY), COLORS[idx], 2)

            # Centroid of the detection box
            center = ((startX + endX) / 2, (startY + endY) / 2)
            x = int(center[0])
            y = int(center[1])
            cv2.circle(frame, (x, y), 5, (255, 255, 255), -1)

            # Is the centroid strictly inside the red rectangle?
            if box.top[0] < x < box.bottom[0] and box.top[1] < y < box.bottom[1]:
                print(x, y)

    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF
```
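One way to get the count, sketched under the assumption that each car detection has already been reduced to its `(startX, startY, endX, endY)` pixel box and that the red rectangle is given by its top-left and bottom-right corners. The names `count_centroids_in_rect`, `rect_top` and `rect_bottom` are hypothetical. The counter is reset once per frame and incremented for every centroid that passes the same strict inequality test as the question's `if` statement.

```python
def count_centroids_in_rect(boxes, rect_top, rect_bottom):
    """Count boxes whose centroid lies strictly inside the rectangle.

    boxes       -- iterable of (startX, startY, endX, endY) pixel boxes
    rect_top    -- (x, y) of the rectangle's top-left corner
    rect_bottom -- (x, y) of the rectangle's bottom-right corner
    """
    count = 0  # reset for every frame
    for startX, startY, endX, endY in boxes:
        # Same centroid computation as in the question's loop
        x = int((startX + endX) / 2)
        y = int((startY + endY) / 2)
        # Same strict test as the question's if statement
        if rect_top[0] < x < rect_bottom[0] and rect_top[1] < y < rect_bottom[1]:
            count += 1
    return count


# Hypothetical boxes: the first three have the centroids from the sample
# output, the last one lies outside the rectangle
boxes = [(101, 227, 121, 247), (522, 237, 542, 257), (297, 239, 317, 259), (10, 10, 30, 30)]
print(count_centroids_in_rect(boxes, rect_top=(50, 200), rect_bottom=(600, 300)))  # prints 3
```

In the while loop itself this amounts to setting `count = 0` just before `for i in np.arange(...)`, replacing `print(x, y)` with `count += 1`, and printing `count` once the `for` loop has finished.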

Output picture:

Current output (x and y coordinates of each centroid inside the red rectangle):

```
111 237
532 247
307 249
```

Desired output (total number of centroids inside the red rectangle):

```
3
```
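If the per-detection loop is not needed for drawing, the same count could be computed in one vectorized NumPy pass. This sketch assumes `detections` has the `(1, 1, N, 7)` layout implied by the question's indexing (column 1 is the class id, column 2 the confidence, columns 3 to 6 the normalized box corners). The function name `count_cars_in_rect`, the `class_names` list and the sample detections below are hypothetical.

```python
import numpy as np


def count_cars_in_rect(detections, w, h, class_names, min_conf, rect_top, rect_bottom):
    """Count 'car' detections whose centroid lies strictly inside the rectangle."""
    dets = detections[0, 0]  # shape (N, 7)
    class_ids = dets[:, 1].astype(int)
    is_car = np.array([class_names[c] == "car" for c in class_ids], dtype=bool)
    keep = (dets[:, 2] > min_conf) & is_car

    # Scale the normalized corners to pixels, as in the question's loop
    boxes = (dets[keep, 3:7] * np.array([w, h, w, h])).astype("int")

    # Centroids, truncated to int like int(center[0]) in the question
    cx = ((boxes[:, 0] + boxes[:, 2]) / 2).astype(int)
    cy = ((boxes[:, 1] + boxes[:, 3]) / 2).astype(int)

    inside = ((cx > rect_top[0]) & (cx < rect_bottom[0]) &
              (cy > rect_top[1]) & (cy < rect_bottom[1]))
    return int(np.count_nonzero(inside))


# Hypothetical class list and detections: rows are
# [batch_id, class_id, confidence, x1, y1, x2, y2]
class_names = ["background", "car", "person"]
detections = np.array([
    [0, 1, 0.90, 0.125, 0.5, 0.25, 0.75],    # car, centroid (150, 250): inside
    [0, 1, 0.80, 0.5, 0.5, 0.625, 0.625],    # car, centroid (450, 225): inside
    [0, 2, 0.95, 0.25, 0.5, 0.375, 0.625],   # person: ignored
    [0, 1, 0.30, 0.25, 0.5, 0.375, 0.625],   # car below threshold: ignored
    [0, 1, 0.90, 0.0, 0.0, 0.125, 0.125],    # car, centroid (50, 25): outside
], dtype=np.float32).reshape(1, 1, -1, 7)

print(count_cars_in_rect(detections, 800, 400, class_names, 0.5, (60, 100), (700, 350)))  # prints 2
```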
