The problem has been solved, with two limitations: the boxes are drawn on the un-resized image, and it works on still images only.
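On the un-resizing point: the script feeds the network a 300x300 copy of the picture, but the detection output of this kind of Caffe SSD model gives the box corners as fractions (0 to 1) of the input width and height. Multiplying them by the original `w` and `h`, as the script does with `np.array([w, h, w, h])`, therefore places the boxes directly on the full-size image. A minimal stdlib-only sketch of that step; `scale_box` and the sample numbers are hypothetical, chosen only for illustration:

```python
def scale_box(rel_box, width, height):
    """Map a relative (x1, y1, x2, y2) box in the 0..1 range to integer
    pixel coordinates of an image that is `width` x `height` pixels.
    int() truncates like numpy's astype("int") does for positive values."""
    x1, y1, x2, y2 = rel_box
    return (int(x1 * width), int(y1 * height),
            int(x2 * width), int(y2 * height))


# hypothetical detection on a 1280x720 photo (made-up values)
print(scale_box((0.25, 0.5, 0.75, 1.0), 1280, 720))
```

Because the coordinates are relative, the same detection maps correctly onto any image size, so the 300x300 resize only affects what the network sees, not where the boxes are drawn.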

```python
#!/usr/bin/python3.7
# OpenCV 4.2, Raspberry Pi 2B/3/3B/4B, Raspbian Buster (version 10)
# Date: 2nd February, 2020.

import argparse

import cv2
import numpy as np

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True,
                help="path to input image")
ap.add_argument("-p", "--prototxt", required=True,
                help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
                help="path to Caffe pre-trained model")
ap.add_argument("-c", "--confidence", type=float, default=0.2,
                help="minimum probability to filter weak detections")
args = vars(ap.parse_args())

# class labels the model was trained on (list index = class id)
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]
# one random colour per class for drawing
COLORS = np.random.uniform(0, 255, size=(len(CLASSES), 3))

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

# load the input image; boxes are drawn on this original (un-resized) image
image = cv2.imread(args["image"])
if image is None:
    raise SystemExit("could not read image: " + args["image"])
(h, w) = image.shape[:2]

# the network sees a 300x300 copy: 127.5 is subtracted, then scaled by 0.007843
blob = cv2.dnn.blobFromImage(cv2.resize(image, (300, 300)),
                             0.007843, (300, 300), 127.5)

print("[INFO] computing object detections...")
net.setInput(blob)
detections = net.forward()

counter = 0

# loop over the detections
for i in range(detections.shape[2]):
    confidence = detections[0, 0, i, 2]
    if confidence > args["confidence"]:
        idx = int(detections[0, 0, i, 1])
        # compare string values with !=, not object identity with "is not"
        if CLASSES[idx] != "car":
            continue

        # box corners are relative (0..1); scale them to the original image
        box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])
        (startX, startY, endX, endY) = box.astype("int")
        label = "{}: {:.2f}%".format(CLASSES[idx], confidence * 100)
        cv2.rectangle(image, (startX, startY), (endX, endY), COLORS[idx], 2)

        # mark and print the centre of the box
        x = int((startX + endX) / 2)
        y = int((startY + endY) / 2)
        cv2.circle(image, (x, y), 5, (255, 255, 255), -1)
        print(x, y)

        # draw the label above the box, or inside it when too close to the top
        label_y = startY - 15 if startY - 15 > 15 else startY + 15
        cv2.putText(image, label, (startX, label_y),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, COLORS[idx], 2)
        counter += 1

print("counter", counter)

# show the output image
cv2.imshow("Output", image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
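The two numbers passed to `blobFromImage` are a normalisation: OpenCV subtracts the mean (127.5) from every pixel and then multiplies by the scale factor (0.007843, roughly 1/127.5), so 8-bit values in 0..255 end up roughly in -1..1, presumably the range the model was trained with. The arithmetic can be checked without OpenCV; `normalize_pixel` is a hypothetical helper, not an OpenCV function:

```python
MEAN = 127.5
SCALE = 0.007843  # approximately 1 / 127.5, as passed to blobFromImage


def normalize_pixel(value, mean=MEAN, scale=SCALE):
    """Per-pixel transform applied by blobFromImage: (value - mean) * scale."""
    return (value - mean) * scale


# darkest, middle and brightest 8-bit values
for v in (0, 127.5, 255):
    print(v, round(normalize_pixel(v), 4))
```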

Output:

```
[INFO] loading model...
[INFO] computing object detections...
636 542
986 518
272 542
counter 3
```

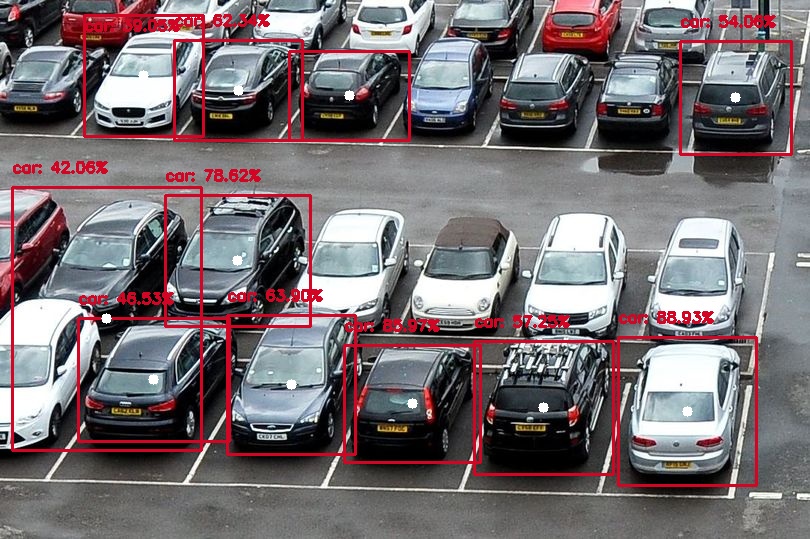
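Each `x y` pair printed above is the centre of one car box. The label goes 15 px above the box unless that would leave it within 15 px of the top edge, in which case it moves inside the box. Both rules, pulled out into a hypothetical helper (the sample boxes are made up, not taken from the run above):

```python
def center_and_label_y(start_x, start_y, end_x, end_y, offset=15):
    """Return the box centre (as printed by the script) and the y position
    used for the text label."""
    center_x = int((start_x + end_x) / 2)
    center_y = int((start_y + end_y) / 2)
    # label above the box, unless that would put it too close to the top edge
    label_y = start_y - offset if start_y - offset > offset else start_y + offset
    return (center_x, center_y), label_y


print(center_and_label_y(100, 200, 301, 401))  # box well below the top edge
print(center_and_label_y(100, 10, 300, 110))   # box touching the top edge
```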

Output:

```
[INFO] loading model...
[INFO] computing object detections...
687 411
412 403
237 260
291 384
238 90
543 407
735 97
153 379
106 318
143 75
349 95
counter 11
```
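The `counter` only counts rows of the detection output that pass both filters: confidence above `--confidence` and class `car`. Each row of `detections[0, 0]` has the layout `[image_id, class_id, confidence, x1, y1, x2, y2]`, so the same filter can be written over plain lists and extended to tally every class with `collections.Counter`. A sketch on made-up rows (not the model's real output); `count_classes` is hypothetical:

```python
from collections import Counter

CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]


def count_classes(rows, min_confidence=0.2, wanted=None):
    """Count detections per class name.

    rows: iterable of [image_id, class_id, confidence, x1, y1, x2, y2]
    wanted: optional set of class names to keep, e.g. {"car"}
    """
    counts = Counter()
    for _, class_id, confidence, *_box in rows:
        if confidence <= min_confidence:
            continue  # same strict '>' threshold test as the script
        name = CLASSES[int(class_id)]
        if wanted is not None and name not in wanted:
            continue
        counts[name] += 1
    return counts


# made-up rows for illustration only
rows = [
    [0, 7, 0.95, 0.1, 0.2, 0.3, 0.4],   # car
    [0, 7, 0.15, 0.5, 0.5, 0.6, 0.6],   # car, below the threshold
    [0, 15, 0.80, 0.0, 0.0, 0.2, 0.9],  # person
    [0, 7, 0.60, 0.7, 0.1, 0.9, 0.3],   # car
]
print(count_classes(rows, wanted={"car"}))
print(count_classes(rows))
```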


| 2 | No.2 Revision |

The problem has been solved. Unfortunately, un-resizing and using image only.

#!/usr/bin/python3.7

#OpenCV 4.2, Raspberrypy 3/2b/4b, Buster ver 10

#Date: 2nd February, 2020.

import numpy as np

import argparse

import cv2

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required=True,

help="path to input image")

ap.add_argument("-p", "--prototxt", required=True,

help="path to Caffe 'deploy' prototxt file")

ap.add_argument("-m", "--model", required=True,

help="path to Caffe pre-trained model")

ap.add_argument("-c", "--confidence", type=float, default=0.2,

help="minimum probability to filter weak detections")

args = vars(ap.parse_args())

CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",

"bottle", "bus", "car", "cat", "chair", "cow", "diningtable",

"dog", "horse", "motorbike", "person", "pottedplant", "sheep",

"sofa", "train", "tvmonitor"]

COLORS = np.random.uniform(0, 255, size=(len(CLASSES), 3))

# load our serialized model from disk

print("[INFO] loading model...")

net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

image = cv2.imread(args["image"])

(h, w) = image.shape[:2]

blob = cv2.dnn.blobFromImage(cv2.resize(image, (300, 300)),

0.007843, (300, 300), 127.5)

print("[INFO] computing object detections...")

net.setInput(blob)

detections = net.forward()

counter = 0

# loop over the detections

for i in np.arange(0, detections.shape[2]):

confidence = detections[0, 0, i, 2]

if confidence > args["confidence"]:

idx = int(detections[0, 0, i, 1])

if CLASSES[idx] is not "car":

continue

box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])

(startX, startY, endX, endY) = box.astype("int")

label = "{}: {:.2f}%".format(CLASSES[idx],

confidence * 100)

cv2.rectangle(image, (startX, startY),

(endX, endY), COLORS[idx], 2)

center = ((startX+endX)/2, (startY+endY)/2)

x = int(center[0])

y = int(center[1])

cv2.circle(image, (x, y), 5, (255,255,255), -1)

print(x, y)

y = startY - 15 if startY - 15 > 15 else startY + 15

cv2.putText(image, label, (startX, y),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, COLORS[idx], 2)

counter += 1

print(f'counter', counter)

# show the output image

cv2.imshow("Output", image)

cv2.waitKey(0)

[INFO] loading model...

[INFO] computing object detections...

636 542

986 518

272 542

counter 3

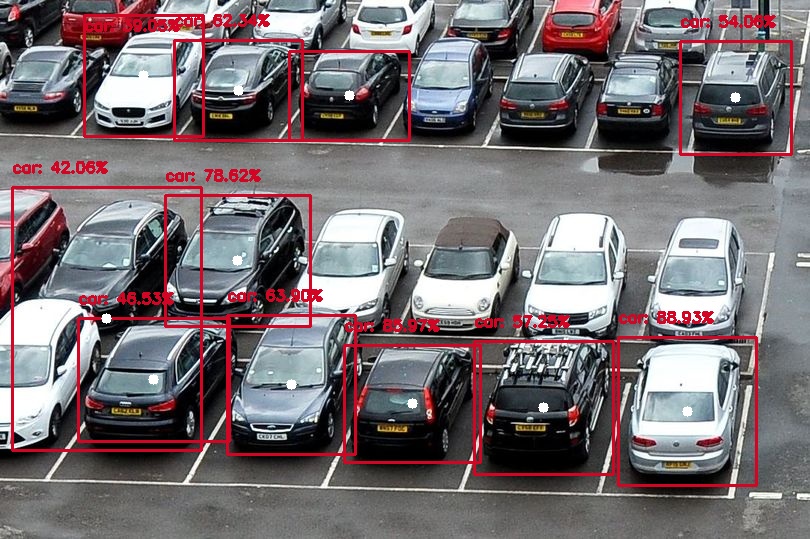

Output:

Output:

[INFO] loading model...

[INFO] computing object detections...

687 411

412 403

237 260

291 384

238 90

543 407

735 97

153 379

106 318

143 75

349 95

counter 11

output:

output:

| 3 | No.3 Revision |

The problem has been solved. Unfortunately, un-resizing and using image only.

#!/usr/bin/python3.7

#OpenCV 4.2, Raspberrypy 3/2b/4b, Raspberry py 3/3b/4b, Buster ver 10

#Date: 2nd February, 2020.

import numpy as np

import argparse

import cv2

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required=True,

help="path to input image")

ap.add_argument("-p", "--prototxt", required=True,

help="path to Caffe 'deploy' prototxt file")

ap.add_argument("-m", "--model", required=True,

help="path to Caffe pre-trained model")

ap.add_argument("-c", "--confidence", type=float, default=0.2,

help="minimum probability to filter weak detections")

args = vars(ap.parse_args())

CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",

"bottle", "bus", "car", "cat", "chair", "cow", "diningtable",

"dog", "horse", "motorbike", "person", "pottedplant", "sheep",

"sofa", "train", "tvmonitor"]

COLORS = np.random.uniform(0, 255, size=(len(CLASSES), 3))

# load our serialized model from disk

print("[INFO] loading model...")

net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

image = cv2.imread(args["image"])

(h, w) = image.shape[:2]

blob = cv2.dnn.blobFromImage(cv2.resize(image, (300, 300)),

0.007843, (300, 300), 127.5)

print("[INFO] computing object detections...")

net.setInput(blob)

detections = net.forward()

counter = 0

# loop over the detections

for i in np.arange(0, detections.shape[2]):

confidence = detections[0, 0, i, 2]

if confidence > args["confidence"]:

idx = int(detections[0, 0, i, 1])

if CLASSES[idx] is not "car":

continue

box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])

(startX, startY, endX, endY) = box.astype("int")

label = "{}: {:.2f}%".format(CLASSES[idx],

confidence * 100)

cv2.rectangle(image, (startX, startY),

(endX, endY), COLORS[idx], 2)

center = ((startX+endX)/2, (startY+endY)/2)

x = int(center[0])

y = int(center[1])

cv2.circle(image, (x, y), 5, (255,255,255), -1)

print(x, y)

y = startY - 15 if startY - 15 > 15 else startY + 15

cv2.putText(image, label, (startX, y),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, COLORS[idx], 2)

counter += 1

print(f'counter', counter)

# show the output image

cv2.imshow("Output", image)

cv2.waitKey(0)

[INFO] loading model...

[INFO] computing object detections...

636 542

986 518

272 542

counter 3

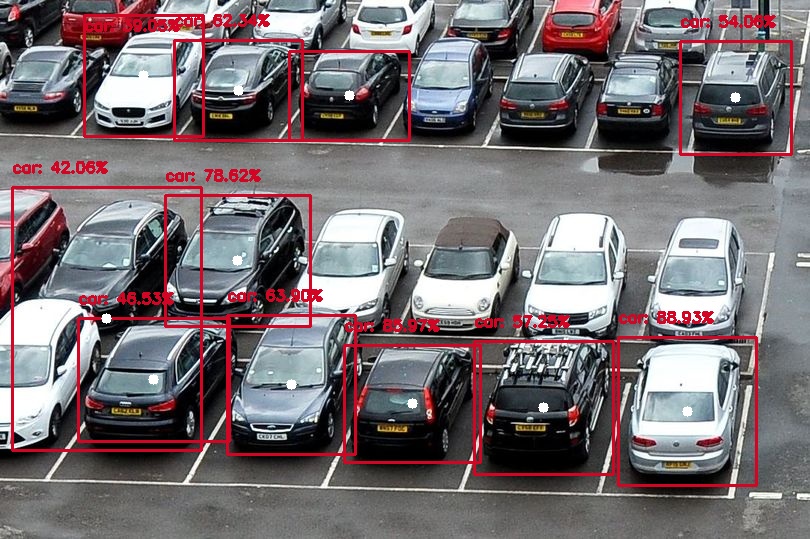

Output:

[INFO] loading model...

[INFO] computing object detections...

687 411

412 403

237 260

291 384

238 90

543 407

735 97

153 379

106 318

143 75

349 95

counter 11

output: