Calculate the distance between the center of the ArUco marker and the center of the camera

Hello

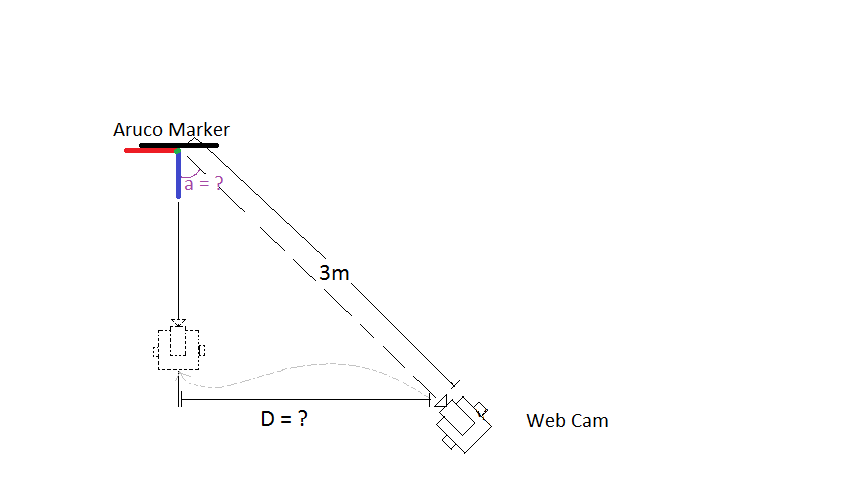

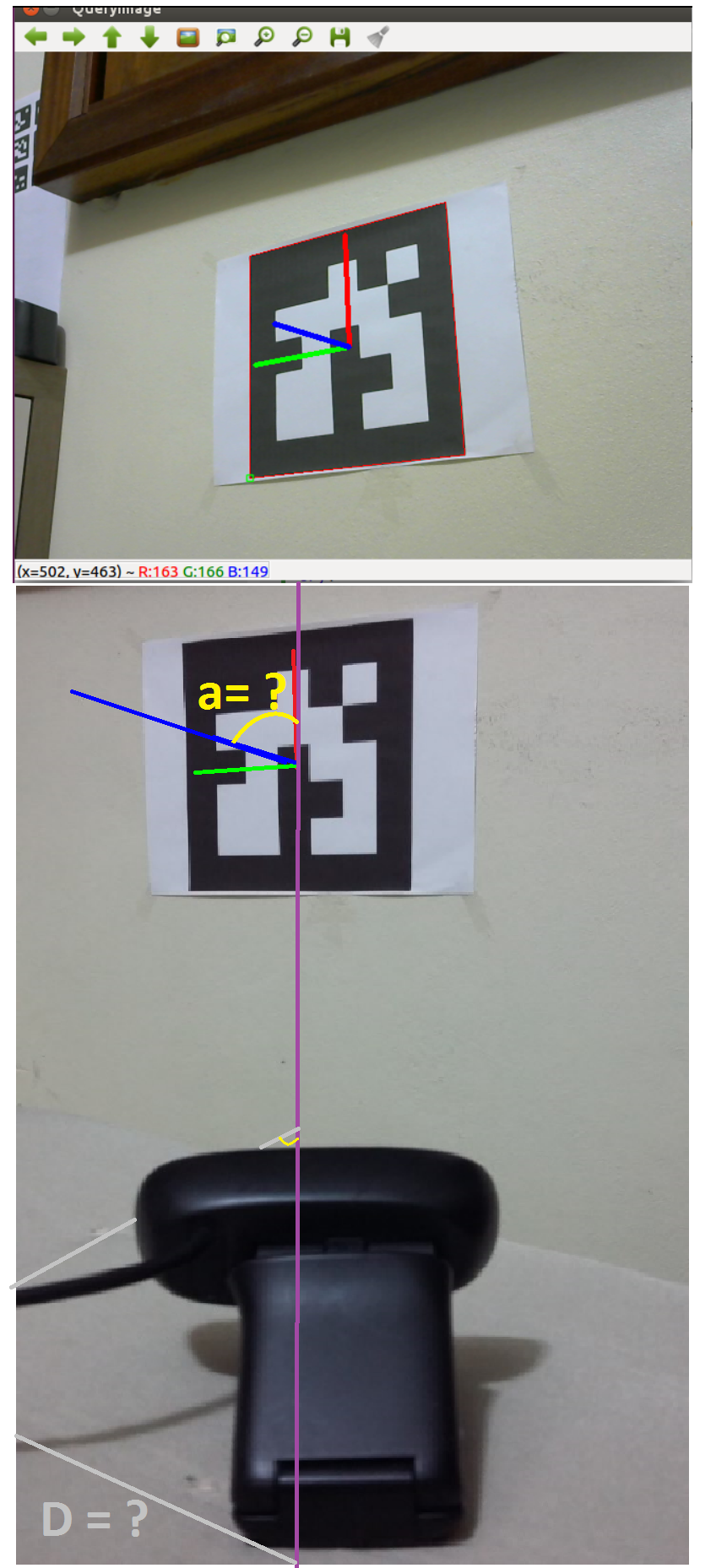

I can currently identify an ArUco marker with my webcam. I would like to calculate the distance "D" between the webcam and the ArUco marker. Is it possible to find the angle "a" between the blue axis of the ArUco marker and the center of the camera image?

Note: the 3 m distance is just an example. I can get it from a rangefinder that measures the distance from the center of the camera to the center of the ArUco marker. The camera is fixed at the center of a differential-drive robot, and the robot can align itself with the center of the ArUco marker. I would like to calculate the distance "D" so I can position the robot (approximately) centered on the ArUco marker.

I'm using kyle-bersani's code (https://github.com/kyle-bersani/openc...):

```python
import os
import pickle

import numpy
import cv2
import cv2.aruco as aruco

# Written against the legacy cv2.aruco API (opencv-contrib-python < 4.7).
# In 4.7+ DetectorParameters_create, Dictionary_get, GridBoard_create and
# drawAxis were replaced (axes are drawn with cv2.drawFrameAxes instead).

# Check for camera calibration data
if not os.path.exists('./calibration.pckl'):
    print("You need to calibrate the camera you'll be using. See calibration project directory for details.")
    exit()
else:
    with open('calibration.pckl', 'rb') as f:
        (cameraMatrix, distCoeffs, _, _) = pickle.load(f)
    if cameraMatrix is None or distCoeffs is None:
        print("Calibration issue. Remove ./calibration.pckl and recalibrate your camera with CalibrateCamera.py.")
        exit()

# Constant parameters used in Aruco methods
ARUCO_PARAMETERS = aruco.DetectorParameters_create()
# ARUCO_DICT = aruco.Dictionary_get(aruco.DICT_6X6_1000)  # original
ARUCO_DICT = aruco.Dictionary_get(aruco.DICT_5X5_1000)

# Create grid board object we're using in our stream
board = aruco.GridBoard_create(
    markersX=2,
    markersY=2,
    markerLength=0.09,
    markerSeparation=0.01,
    dictionary=ARUCO_DICT)

# Create vectors we'll be using for rotations and translations for postures
rvecs, tvecs = None, None

cam = cv2.VideoCapture(0)

while cam.isOpened():
    # Capturing each frame of our video stream
    ret, QueryImg = cam.read()
    if ret:
        # grayscale image
        gray = cv2.cvtColor(QueryImg, cv2.COLOR_BGR2GRAY)

        # Detect Aruco markers
        corners, ids, rejectedImgPoints = aruco.detectMarkers(gray, ARUCO_DICT, parameters=ARUCO_PARAMETERS)

        # Refine detected markers
        # Eliminates markers not part of our board, adds missing markers to the board
        corners, ids, rejectedImgPoints, recoveredIds = aruco.refineDetectedMarkers(
            image=gray,
            board=board,
            detectedCorners=corners,
            detectedIds=ids,
            rejectedCorners=rejectedImgPoints,
            cameraMatrix=cameraMatrix,
            distCoeffs=distCoeffs)

        QueryImg = aruco.drawDetectedMarkers(QueryImg, corners, borderColor=(0, 0, 255))

        if ids is not None:
            try:
                # 10.5 is the marker side length; tvec comes out in the same unit
                # (e.g. centimetres if the printed marker is 10.5 cm wide).
                rvec, tvec, _objPoints = aruco.estimatePoseSingleMarkers(corners, 10.5, cameraMatrix, distCoeffs)
                # rvec/tvec have shape (N, 1, 3): draw the axes of each marker separately
                for i in range(len(ids)):
                    QueryImg = aruco.drawAxis(QueryImg, cameraMatrix, distCoeffs, rvec[i], tvec[i], 5)
            except Exception as e:
                print("Pose estimation failed, carrying on:", e)

        cv2.imshow('QueryImage', QueryImg)

    # Exit at the end of the video on the 'q' keypress
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cam.release()
cv2.destroyAllWindows()
```

Thanks

I think I need to find the angle between the normal vector (blue axis) and the center of the camera. Then I could calculate the distance "D" with trigonometry: `D = 3 * sin(a)`.
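A minimal sketch of that trigonometry in plain Python, assuming (the images aren't visible here) that "a" is the angle between the marker's z-axis (the blue axis) and the line from the marker center to the camera, and that "D" is the perpendicular distance from the camera to the line running along that axis. Under those assumptions the camera position expressed in the marker frame, p = -Rᵀ·tvec, gives both directly: D = √(p_x² + p_y²) = ‖tvec‖·sin(a). `rodrigues` and `angle_and_offset` are hypothetical helper names; `rodrigues` reproduces what `cv2.Rodrigues(rvec)[0]` returns, written out so the sketch has no dependencies.

```python
import math


def rodrigues(rvec):
    """Axis-angle vector (OpenCV rvec) -> 3x3 rotation matrix.

    Same result as cv2.Rodrigues(rvec)[0], written out in plain Python.
    """
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # R = cos(theta) I + sin(theta) [k]x + (1 - cos(theta)) k k^T
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def angle_and_offset(rvec, tvec):
    """Return (a_deg, D, r) for one marker pose (rvec/tvec map marker -> camera).

    r: camera-to-marker distance, same unit as tvec
    a: angle between the marker's z-axis (blue axis) and the marker->camera line
    D: perpendicular distance from the camera to the line along the marker's z-axis
    """
    R = rodrigues(rvec)
    # Camera center expressed in the marker frame: p = -R^T t
    p = [-sum(R[i][j] * tvec[i] for i in range(3)) for j in range(3)]
    r = math.sqrt(sum(x * x for x in p))
    D = math.hypot(p[0], p[1])  # lateral offset in the marker plane = r * sin(a)
    a = math.atan2(D, p[2])     # angle measured from the marker's z-axis
    return math.degrees(a), D, r
```

Inside the detection loop this would be called per marker as `angle_and_offset(rvec[i][0], tvec[i][0])`. Since r comes from the pose itself, the rangefinder reading mostly serves as a cross-check.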

Note: maybe there is another way to calculate the distance "D" that I don't know about.

Have a look at `estimatePoseSingleMarkers()`; see this tutorial. It returns the rotation and translation vectors that transform from the ArUco frame to the camera frame. The norm of the translation vector is the distance between the tag frame and the camera frame.
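Why the norm is the distance, written out as a short derivation. This assumes the usual OpenCV convention that `rvec`/`tvec` map a point from the marker frame to the camera frame, with R the rotation matrix obtained from `cv2.Rodrigues(rvec)` and t = `tvec`; C_m denotes the camera center expressed in the marker frame.

```latex
\begin{aligned}
X_c &= R\,X_m + t \\
X_m = (0,0,0)^\top \;&\Rightarrow\; X_c = t, \qquad \text{distance} = \lVert t \rVert \\
X_c = (0,0,0)^\top \;&\Rightarrow\; C_m = -R^\top t, \qquad \lVert C_m \rVert = \lVert t \rVert
\end{aligned}
```

The last equality holds because R is a rotation and preserves lengths, so the distance is the same whichever frame you measure it in.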

Hello, thanks for your attention. I added some more images and the code I'm using. Could you help me find the angle "a" based on this code?

No need to calculate the angle.

`tvec` is directly the translation between the camera frame and the tag frame. I suggest you read this and this, or a computer vision course on the camera projection model and camera pose estimation.

OK, if I print `tvec` I get this output:

`[[[-20.96593757 -9.13860749 92.81840327]]]`

What do these values mean? Is it possible to convert them to meters or angles?
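A small sketch for reading those numbers, assuming the 10.5 passed to `estimatePoseSingleMarkers` is the printed marker's side length in centimetres. `tvec` is expressed in whatever unit that marker length uses, so `unit_to_m` would be 1.0 if you passed the length in metres. `describe_tvec` is a hypothetical helper; the axis directions follow OpenCV's camera convention (+x right, +y down, +z along the optical axis). These bearings are measured from the camera's optical axis, not from the marker's blue axis, so they are not the angle "a".

```python
import math


def describe_tvec(tvec, unit_to_m=0.01):
    """Interpret one ArUco tvec (marker center in the camera frame).

    unit_to_m converts tvec's unit to metres; 0.01 assumes the marker
    length given to estimatePoseSingleMarkers was in centimetres.
    """
    x, y, z = tvec
    dist_m = math.sqrt(x * x + y * y + z * z) * unit_to_m
    # Horizontal bearing: negative means the marker is left of the optical axis
    yaw_deg = math.degrees(math.atan2(x, z))
    # Vertical bearing: negative means the marker is above the optical axis
    pitch_deg = math.degrees(math.atan2(y, z))
    return dist_m, yaw_deg, pitch_deg


dist_m, yaw_deg, pitch_deg = describe_tvec((-20.96593757, -9.13860749, 92.81840327))
print(f"{dist_m:.3f} m, yaw {yaw_deg:.1f} deg, pitch {pitch_deg:.1f} deg")
# prints: 0.956 m, yaw -12.7 deg, pitch -5.6 deg
```

So, under the centimetre assumption, the printed `tvec` says the marker center is about 0.96 m away, slightly to the left of and above the image center.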

It is explained in the previously mentioned links...

If you are not familiar with computer vision, have a look at these materials:

If you don't make the effort to learn, you end up randomly copy-pasting code until it works or until someone else does the job for you.

OK, I think `solvePnP()` is the way to go.
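If you go the `solvePnP()` route, a minimal single-marker sketch could look like the following. `estimatePoseSingleMarkers` is itself built on `solvePnP`, so this mainly gives you control over the solver flag. `SOLVEPNP_IPPE_SQUARE` (available in recent OpenCV releases) is designed for this four-corner square case and expects the corner order used below, which matches the clockwise-from-top-left order `detectMarkers` returns. The helper names are hypothetical, and `marker_length` should be in the unit you want `tvec` to come out in.

```python
def marker_object_points(marker_length):
    """3D corners of a square marker in its own frame (z = 0).

    Order: top-left, top-right, bottom-right, bottom-left, as
    SOLVEPNP_IPPE_SQUARE expects and detectMarkers returns.
    """
    h = marker_length / 2.0
    return [[-h, h, 0.0], [h, h, 0.0], [h, -h, 0.0], [-h, -h, 0.0]]


def estimate_marker_pose(marker_corners, marker_length, camera_matrix, dist_coeffs):
    """Pose of one detected marker via cv2.solvePnP.

    marker_corners: one element of the `corners` list from detectMarkers,
    shape (1, 4, 2). Returns (ok, rvec, tvec); tvec is in marker_length's unit.
    """
    # Imported here so marker_object_points() can be used without OpenCV installed
    import numpy as np
    import cv2

    obj = np.array(marker_object_points(marker_length), dtype=np.float32)
    img = np.asarray(marker_corners, dtype=np.float32).reshape(4, 2)
    return cv2.solvePnP(obj, img, camera_matrix, dist_coeffs,
                        flags=cv2.SOLVEPNP_IPPE_SQUARE)
```

In the detection loop this would be something like `ok, rvec_i, tvec_i = estimate_marker_pose(corners[i], 10.5, cameraMatrix, distCoeffs)`.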
