ORB pyramid not working

I am using the following detector and descriptor for the features I want to track:

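    // ORB(nfeatures, scaleFactor, nlevels, edgeThreshold,
    //     firstLevel, WTA_K, scoreType, patchSize)
    Ptr<FeatureDetector> detector = Ptr<FeatureDetector>(
        new ORB(500, 2, 9, 31, 0, 2, ORB::HARRIS_SCORE, 31));

    // created by name, so it uses ORB's default parameters
    Ptr<DescriptorExtractor> descriptorExtractor = DescriptorExtractor::create("ORB");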

So I have 9 pyramid levels with a scale factor of 2, which means every smaller pyramid image is 50% the size of the previous one, right? (I also tried other values, e.g. the default scale factor of 1.2.)

Still, if I want to detect a marker image in the current frame and the marker appears at only 50% of its original size, there are no matches anymore. Matches are only found for scale changes between roughly 0.8 and 1.2 relative to the original marker.

However, if I manually scale the image down to 50% of its size, the marker is found again. So a manual pyramid approach works, but the pyramid built internally by the ORB detector does not. Am I doing something wrong here?

Here are some images I took to explain the problem:

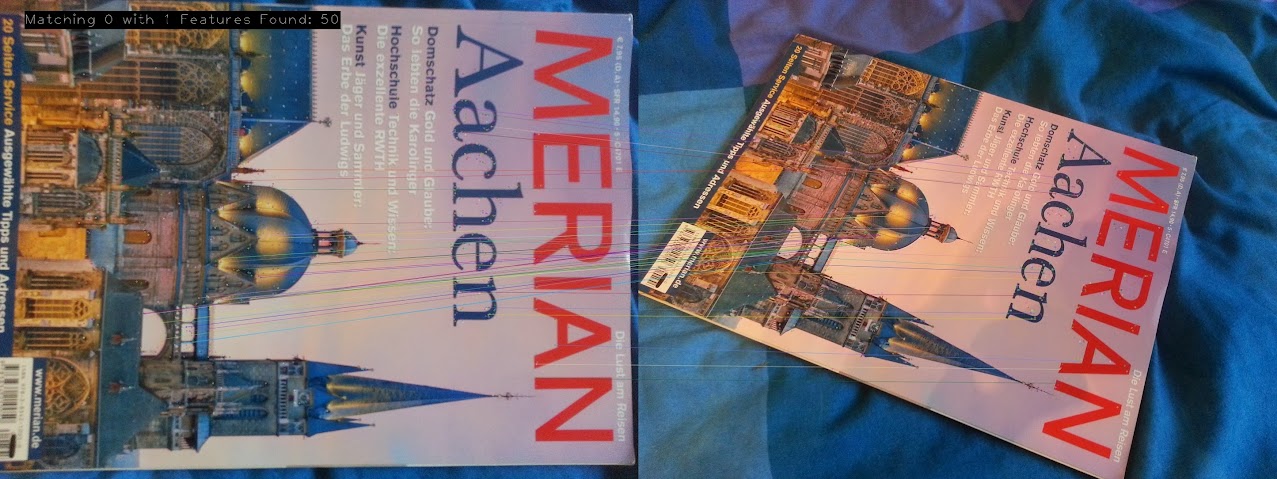

Marker and scene have same size, everything is fine:

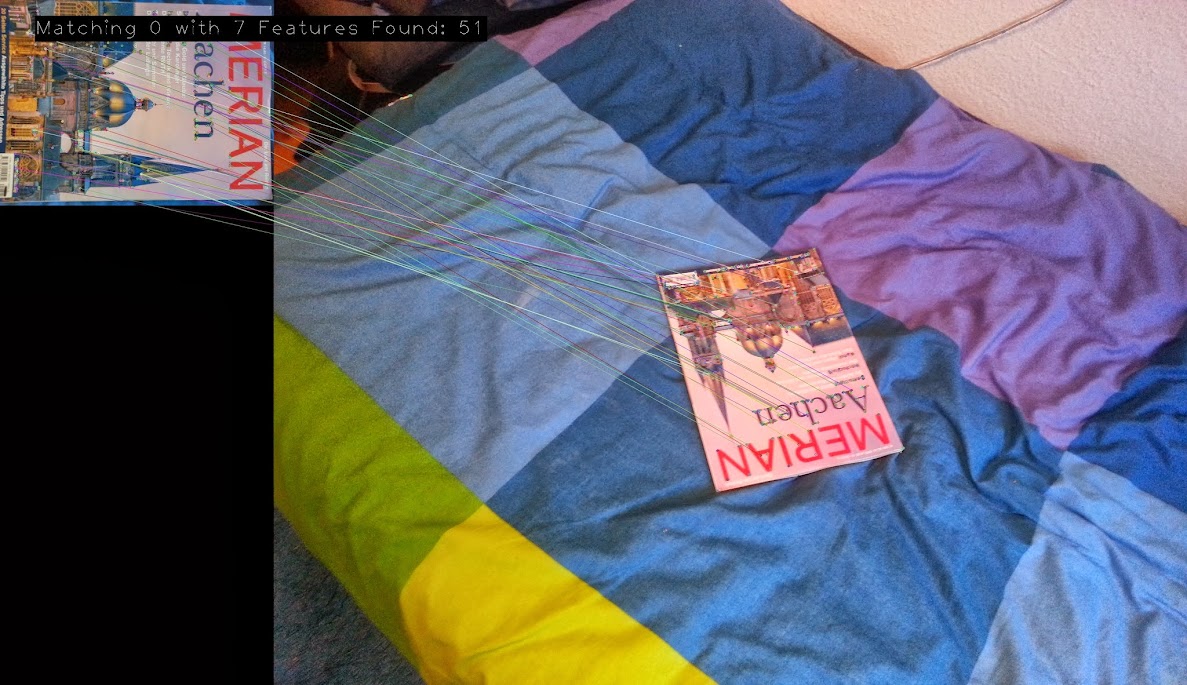

Different scale of marker and current scene, no matches anymore:

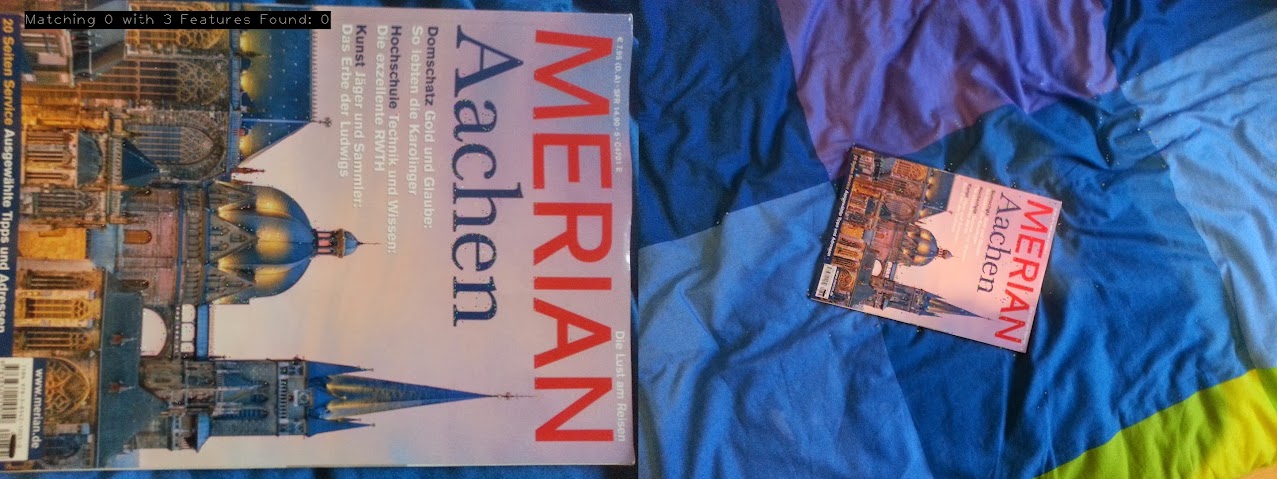

Two images where I resized the marker image manually, and then it is found again as expected: