Get Rotation and Translation Matrix

I'm programming an Asus Xtion depth camera, which gives me depth information instead of an RGB image.

I already have the camera matrix and the distortion coefficients of the depth camera. Now I want to calibrate the vision system by finding the rotation and translation matrices (the extrinsic parameters).
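For context, the camera matrix is what turns a depth pixel into a 3D point in the camera frame, and the rotation `R` and translation `t` are what then move that point into the world frame, `p_world = R @ p_cam + t`. The following is a minimal sketch assuming a pinhole model, a depth value measured along the optical axis (not along the ray), and pixels that are already undistorted. The function names and the intrinsic values in `K` are hypothetical placeholders, not taken from the question.

```python
import numpy as np

def depth_pixel_to_camera(u, v, z, K):
    """Back-project pixel (u, v) with depth z into camera coordinates.

    Assumes a pinhole model, z measured along the optical axis, and an
    already undistorted pixel. Output units match the units of z.
    """
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.array([x, y, z], dtype=float)

def camera_to_world(p_cam, R, t):
    """Apply the extrinsic transform p_world = R @ p_cam + t."""
    return R @ np.asarray(p_cam, dtype=float) + np.asarray(t, dtype=float)

# Hypothetical intrinsics for a 640x480 depth image; use your own camera matrix.
K = np.array([[570.0, 0.0, 320.0],
              [0.0, 570.0, 240.0],
              [0.0, 0.0, 1.0]])

p_cam = depth_pixel_to_camera(320, 240, 1.5, K)   # pixel at the principal point
p_world = camera_to_world(p_cam, np.eye(3), np.zeros(3))
print(p_cam, p_world)
```

With identity `R` and zero `t`, the world frame coincides with the camera frame. The calibration problem is finding the `R` and `t` that make the world frame meaningful, for example with the floor at `z = 0`.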

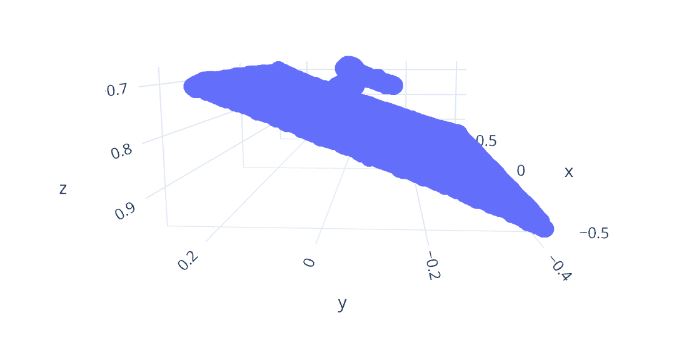

I already have the 3D coordinates of the points in the camera's local frame, but now I need to convert them to world (global) coordinates. Since this camera only provides depth information, I was wondering: is it possible to calibrate this vision system by specifying where the ground plane is? How should I proceed to make the blue plane the ground plane of my vision system?

(Note: in addition to the ground plane, there is also an object on the plane.)
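One way to use the ground plane, sketched here as an assumption rather than a confirmed method: fit a plane to the 3D points robustly with RANSAC, so the object sitting on the plane is rejected as outliers, then build `R` and `t` so that the plane becomes `z = 0` and its normal becomes world +Z. Note that a plane only fixes the height and tilt; the rotation about the vertical axis (and the X/Y origin) stays arbitrary unless you add more information. The function names, the `threshold` and `iterations` values, and the synthetic scene are hypothetical.

```python
import numpy as np

def fit_ground_plane_ransac(points, threshold=0.01, iterations=500, seed=0):
    """Robustly fit a plane to an (N, 3) point cloud that also contains an object.

    Returns (centroid, unit_normal, inlier_mask). threshold is the maximum
    point-to-plane distance, in the same units as points, for an inlier.
    """
    pts = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_mask, best_count = None, -1
    for _ in range(iterations):
        a, b, c = pts[rng.choice(len(pts), size=3, replace=False)]
        normal = np.cross(b - a, c - a)
        length = np.linalg.norm(normal)
        if length < 1e-12:                      # collinear sample, no unique plane
            continue
        normal /= length
        mask = np.abs((pts - a) @ normal) < threshold
        if mask.sum() > best_count:
            best_mask, best_count = mask, int(mask.sum())
    if best_mask is None:
        raise ValueError("no non-degenerate 3-point sample found")
    # Least-squares refit on the inliers: normal = direction of least variance.
    inliers = pts[best_mask]
    centroid = inliers.mean(axis=0)
    _, _, vt = np.linalg.svd(inliers - centroid)
    return centroid, vt[-1], best_mask

def ground_plane_extrinsics(centroid, normal):
    """Return (R, t) with p_world = R @ p_cam + t and the plane mapped to z = 0.

    World +Z is the plane normal, flipped if needed so the camera sits at
    positive height. The in-plane X/Y directions are arbitrary, because a
    plane alone does not fix the rotation about its normal.
    """
    c = np.asarray(centroid, dtype=float)
    n = np.asarray(normal, dtype=float)
    if np.dot(n, -c) < 0:                       # camera origin must be on the +Z side
        n = -n
    helper = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    x_axis = np.cross(helper, n)
    x_axis /= np.linalg.norm(x_axis)
    y_axis = np.cross(n, x_axis)                # completes a right-handed frame
    R = np.vstack([x_axis, y_axis, n])          # rows: world axes in camera coordinates
    t = -R @ c                                  # plane centroid becomes the world origin
    return R, t

# Synthetic scene in camera coordinates (OpenCV-style, +Y pointing down):
# a floor at y = 1.0 and the top of a box 0.3 units above it.
xs, zs = np.meshgrid(np.linspace(-1, 1, 11), np.linspace(1, 3, 11))
floor = np.column_stack([xs.ravel(), np.full(xs.size, 1.0), zs.ravel()])
bx, bz = np.meshgrid(np.linspace(-0.2, 0.2, 5), np.linspace(1.8, 2.2, 5))
box_top = np.column_stack([bx.ravel(), np.full(bx.size, 0.7), bz.ravel()])
cloud = np.vstack([floor, box_top])

centroid, normal, mask = fit_ground_plane_ransac(cloud)
R, t = ground_plane_extrinsics(centroid, normal)
world = cloud @ R.T + t
print("floor inliers:", int(mask.sum()), "of", len(cloud))
print("camera height:", t[2])
print("box top height:", world[~mask][:, 2].mean())
```

In this synthetic case the floor points should all land at `z = 0`, the box top at a height of about 0.3, and `t[2]` gives the camera's height above the floor. On real Xtion data the threshold has to match the sensor noise and the depth units.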

I already tried using OpenCV's `solvePnP` to get the rotation and translation matrices, but with no luck. Thanks in advance.
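A possible reason `solvePnP` is awkward here: it estimates pose from 2D image points paired with 3D object points, and its `rvec`/`tvec` map world points into the camera frame (`p_cam = R @ p_world + t`), so the camera-to-world transform is `R.T` and `-R.T @ t`. With a depth camera you already have 3D points, so if you can measure the world coordinates of at least three non-collinear points (for example, marks on the floor), a 3D-to-3D rigid alignment is an alternative. The sketch below uses the Kabsch (SVD) method. This is a swapped-in technique, not something from the question, and the function name `kabsch` is hypothetical.

```python
import numpy as np

def kabsch(p_cam, p_world):
    """Rigid transform (R, t) minimising sum ||R @ p_cam_i + t - p_world_i||^2.

    p_cam and p_world are (N, 3) arrays of corresponding points, N >= 3 and
    not all collinear. Uses the SVD-based Kabsch method with a reflection fix.
    """
    P = np.asarray(p_cam, dtype=float)
    Q = np.asarray(p_world, dtype=float)
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)                   # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0   # avoid a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cq - R @ cp
    return R, t

# Self-check with a known pose: rotate 30 degrees about Z, then translate.
rng = np.random.default_rng(1)
p_cam = rng.normal(size=(10, 3))
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 1.0])
p_world = p_cam @ R_true.T + t_true
R, t = kabsch(p_cam, p_world)
print(np.allclose(R, R_true), np.allclose(t, t_true))
```

Unlike the ground-plane approach, known world points also pin down the heading and the X/Y origin, so the resulting `R` and `t` are fully determined.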