Correctly interpreting the Pose (Rotation and Translation) after 'recoverPose' from Essential matrix

Hi,

I have been breaking my head trying to correctly interpret the results of `recoverPose` from the Essential matrix. Here are the high-level steps I am using:

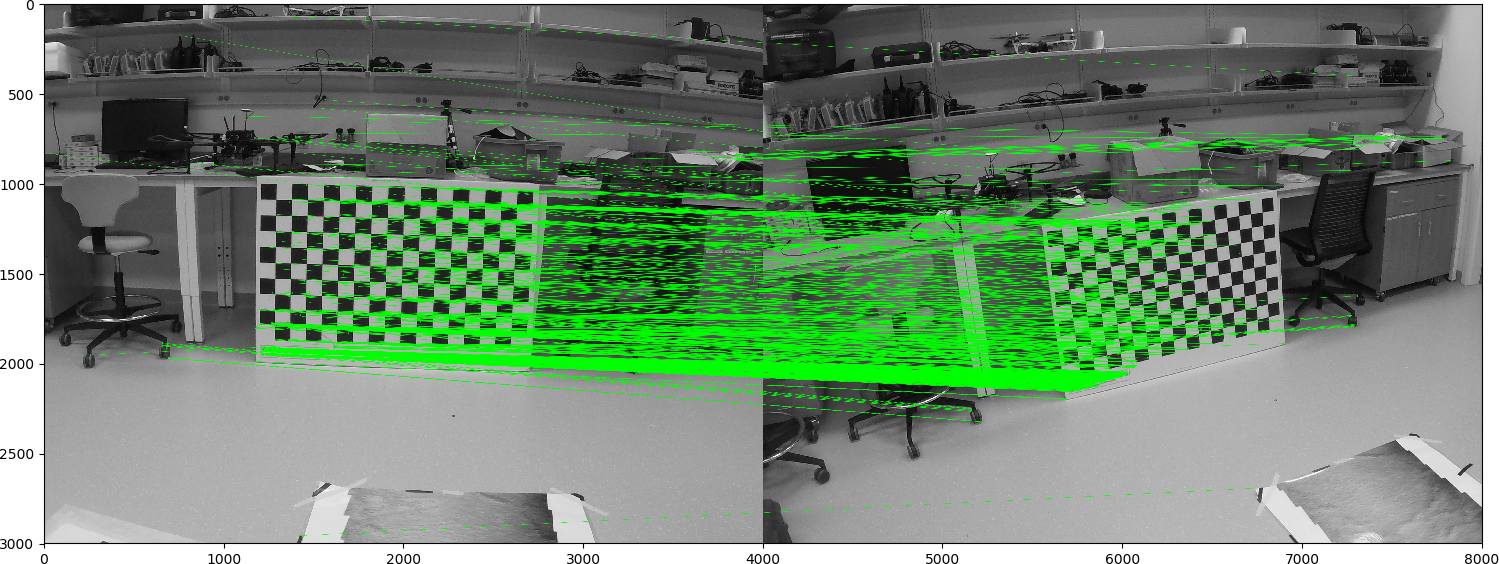

1. Detect ORB features in two images

2. Match features using BFMatcher

3. Estimate the Essential matrix between the two images (`findEssentialMat`)

4. Recover the pose, i.e. R, T, between the two images with `recoverPose`

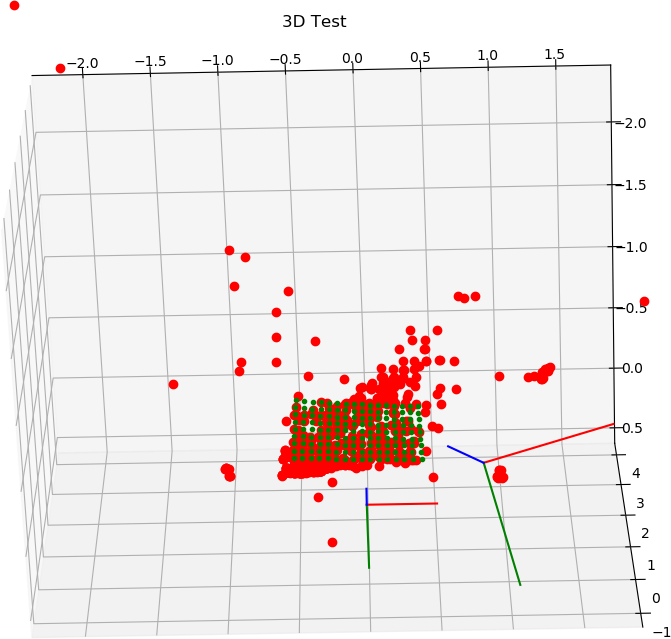

5. Triangulate the good features (the inliers in the mask returned by `recoverPose`) using R, T to create 3D point clouds (landmarks)

6. As a ground-truth check, I also extract the chessboard corners from the images and triangulate them using the R, T calculated above. If the triangulated corners form a clean plane, R, T are accurate enough for triangulation.

7. Plot everything
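Steps 5 and 6 can be sanity-checked without the images. The sketch below is a NumPy-only illustration under made-up assumptions. A synthetic 7 x 5 planar grid stands in for the chessboard corners, and the pose follows OpenCV's convention `X2 = R @ X1 + t`. Triangulation is a plain linear (DLT) method re-implemented here, not a call to `cv2.triangulatePoints`. Planarity is measured as the RMS distance to a least-squares plane. The helpers `rot_y`, `triangulate_dlt` and `plane_rms` are hypothetical names.

```python
import numpy as np

def rot_y(deg):
    """Rotation matrix about the y axis (hypothetical helper for synthetic data)."""
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation; x1, x2 are N x 2 normalized image points."""
    pts = []
    for (u1, v1), (u2, v2) in zip(x1, x2):
        # Each view contributes two rows of A, with A @ X = 0 for homogeneous X
        A = np.vstack([u1 * P1[2] - P1[0],
                       v1 * P1[2] - P1[1],
                       u2 * P2[2] - P2[0],
                       v2 * P2[2] - P2[1]])
        _, _, Vt = np.linalg.svd(A)
        X = Vt[-1]                # singular vector of the smallest singular value
        pts.append(X[:3] / X[3])  # de-homogenize
    return np.array(pts)

def plane_rms(X):
    """RMS distance of 3D points (N x 3) to their least-squares plane."""
    centered = X - X.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return s[-1] / np.sqrt(len(X))  # residual along the plane normal

# Synthetic "chessboard": a 7 x 5 grid (spacing 0.1) on the plane z = 4 in camera 1
gx, gy = np.meshgrid(np.arange(7) * 0.1, np.arange(5) * 0.1)
X1 = np.column_stack([gx.ravel(), gy.ravel(), np.full(gx.size, 4.0)])

R = rot_y(10.0)                 # made-up relative rotation
t = np.array([-0.5, 0.1, 0.0])  # made-up relative translation
X2 = X1 @ R.T + t               # OpenCV convention: X2 = R @ X1 + t

# Normalized image coordinates (pixel coordinates with K and distortion removed)
x1 = X1[:, :2] / X1[:, 2:]
x2 = X2[:, :2] / X2[:, 2:]

# Projection matrices in normalized coordinates: P1 = [I | 0], P2 = [R | t]
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([R, t.reshape(3, 1)])

X_tri = triangulate_dlt(P1, P2, x1, x2)
print("max reconstruction error:", np.abs(X_tri - X1).max())
print("plane RMS:", plane_rms(X_tri))
```

With real data, `x1` and `x2` would be the undistorted, normalized corner coordinates, and `R`, `t` would come from `recoverPose`. An RMS that is large relative to the square size would suggest that the pose or the correspondences are off.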

As we can see from images 1488 and 1490, the camera moves to the left and up, and it is pointing down and to the right. However, the plot of R and T for the second position shows something completely different.

I have tried inverting the pose using R' and -(R')*T, but that doesn't plot correctly either. I have tried a bunch of other combinations, but none of them make sense.
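OpenCV's `recoverPose` documentation describes `R`, `t` as a change of basis from the first camera's coordinate system to the second's, so a point maps as `X2 = R @ X1 + t`. Assuming that convention, the second camera's pose expressed in the first camera's frame (what a pose plot usually wants) has orientation `R.T` and center `-R.T @ t`. The columns of `R.T` are camera 2's x, y, z axes. Because `t` has unit length, the center is only known up to scale. The NumPy-only sketch below checks this on a made-up motion. `camera_pose_in_first_frame` is a hypothetical helper name.

```python
import numpy as np

def camera_pose_in_first_frame(R, t):
    """Given R, t with X2 = R @ X1 + t (the convention recoverPose documents),
    return camera 2's orientation and optical center in camera-1 coordinates."""
    t = np.asarray(t, dtype=float).reshape(3)
    R_12 = R.T          # columns: camera-2 x, y, z axes expressed in camera 1
    center = -R.T @ t   # camera-2 optical center expressed in camera 1
    return R_12, center

# Made-up ground truth: camera 2 is 1 unit left and 0.5 units up of camera 1
# (OpenCV camera axes: x right, y down, z forward), rotated 20 degrees about y.
a = np.deg2rad(20.0)
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
C_true = np.array([-1.0, -0.5, 0.0])

# What recoverPose would return for this motion (t has unit length)
R = R_true.T
t = -R @ C_true
t = t / np.linalg.norm(t)

R_12, center = camera_pose_in_first_frame(R, t)
print(R_12)
print(center)
```

With camera 1 as the plot's reference frame, `R_12` and `center` are what `plot_pose3_on_axes` takes as `gRp` and `origin`. Since y points down in OpenCV's camera frame, "up" in the image appears as negative y in the plot unless the axes are flipped for display.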

So what gives???

The Python script and test images can be found here. For reference, the Python code is:

```python
import numpy as np
import cv2
from matplotlib import pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection

def plot_pose3_on_axes(axes, gRp, origin, axis_length=0.1):
    """Plot a 3D pose on given axis 'axes' with given 'axis_length'."""
    # get rotation and translation (center)
    #gRp = pose.rotation().matrix()  # rotation from pose to global
    #t = pose.translation()
    #origin = np.array([t.x(), t.y(), t.z()])

    # draw the camera axes: columns of gRp are the camera x, y, z axes
    # expressed in the global (plot) frame
    x_axis = origin + gRp[:, 0] * axis_length
    line = np.vstack([origin, x_axis])  # 2 x 3 array: start and end point
    axes.plot(line[:, 0], line[:, 1], line[:, 2], 'r-')

    y_axis = origin + gRp[:, 1] * axis_length
    line = np.vstack([origin, y_axis])
    axes.plot(line[:, 0], line[:, 1], line[:, 2], 'g-')

    z_axis = origin + gRp[:, 2] * axis_length
    line = np.vstack([origin, z_axis])
    axes.plot(line[:, 0], line[:, 1], line[:, 2], 'b-')

img1 = cv2.imread('/home/vik748/data/chess_board/GOPR1488.JPG', 1)  # queryImage
img2 = cv2.imread('/home/vik748/data/chess_board/GOPR1490.JPG', 1)

# Camera intrinsics
fx = 3551.342810
fy = 3522.689669
cx = 2033.513326
cy = 1455.489194

K = np.array([[fx, 0, cx],
              [0, fy, cy],
              [0, 0, 1]], dtype=np.float64)

# Distortion coefficients
D = np.array([-0.276796, 0.113400, -0.000349, -0.000469], dtype=np.float64)

print(K, D)

# Convert images to greyscale
gr1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
gr2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# Initiate ORB detector
detector = cv2.ORB_create(nfeatures=25000, edgeThreshold=15, patchSize=125, nlevels=32,
                          fastThreshold=20, scaleFactor=1.2, WTA_K=2,
                          scoreType=cv2.ORB_HARRIS_SCORE, firstLevel=0)

# find the keypoints and descriptors with ORB
kp1, des1 = detector.detectAndCompute(gr1, None)
kp2, des2 = detector.detectAndCompute(gr2, None)
print("Points detected: ", len(kp1), " and ", len(kp2))

# Brute-force Hamming matching with cross-check
bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
matches = bf.match(des1, des2)

kp1_match = np.array([kp1[mat.queryIdx].pt for mat in matches])
kp2_match = np.array([kp2[mat.trainIdx].pt for mat in matches])

kp1_match_ud = cv2.undistortPoints(np.expand_dims ...
```

I wrote some code to test the Essential matrix here.

If the Essential matrix is correctly estimated (keep in mind that estimating it requires points in a general configuration, not all on a planar surface), its decomposition gives you the transformation that goes from one camera frame to the other. Also, the translation is only estimated up to a scale factor.
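The relation behind this follows from `X2 = R @ X1 + t`. The Essential matrix is $E = [t]_\times R$, and homogeneous normalized image points satisfy $x_2^{T} E x_1 = 0$. Scaling `t` only scales `E`, so the constraint cannot recover the baseline length, which is why the translation comes back only up to scale. The NumPy-only sketch below uses a made-up pose. `skew` is a hypothetical helper name.

```python
import numpy as np

def skew(v):
    """Cross-product matrix: skew(v) @ w equals np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

a = np.deg2rad(15.0)
R = np.array([[np.cos(a), -np.sin(a), 0.0],
              [np.sin(a), np.cos(a), 0.0],
              [0.0, 0.0, 1.0]])        # made-up rotation about z
t = np.array([0.3, -0.2, 0.1])         # made-up translation, X2 = R @ X1 + t
E = skew(t) @ R

# Random points in front of camera 1, expressed in both camera frames
rng = np.random.default_rng(0)
X1 = rng.uniform([-1.0, -1.0, 3.0], [1.0, 1.0, 6.0], size=(20, 3))
X2 = X1 @ R.T + t
x1 = X1 / X1[:, 2:]    # homogeneous normalized coordinates (u, v, 1)
x2 = X2 / X2[:, 2:]

# Epipolar residuals x2_i^T E x1_i, for the true t and for a 5x longer baseline
res = np.einsum('ij,jk,ik->i', x2, E, x1)
res_scaled = np.einsum('ij,jk,ik->i', x2, skew(5.0 * t) @ R, x1)

# A valid Essential matrix has singular values (|t|, |t|, 0)
s = np.linalg.svd(E, compute_uv=False)
print(np.abs(res).max(), np.abs(res_scaled).max(), s)
```

Both residuals vanish, so the point correspondences alone cannot distinguish `t` from `5 * t`.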


@Eduardo: A different question on the same topic: is it necessary to normalize the image point coordinates as described in Hartley and Zisserman?

@Grillteller

Yes.
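Hartley and Zisserman's normalization is applied to pixel coordinates for the 8-point algorithm. It translates each image's points so their centroid is at the origin and scales them so the mean distance from the origin is sqrt(2). The transform is then undone on the estimated matrix. The NumPy-only sketch below uses made-up sample points. `hartley_normalize` is a hypothetical name.

```python
import numpy as np

def hartley_normalize(pts):
    """Return (normalized homogeneous points, T) for N x 2 pixel points:
    centroid moved to the origin, mean distance to the origin scaled to sqrt(2)."""
    pts = np.asarray(pts, dtype=float)
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return (T @ pts_h.T).T, T

# Made-up pixel coordinates
pix = np.array([[2033.5, 1455.5], [100.0, 200.0], [3900.0, 2800.0], [1500.0, 300.0]])
norm_pts, T = hartley_normalize(pix)

# If F_hat is estimated from normalized points of image 1 (transform T1) and
# image 2 (transform T2), the pixel-space matrix is F = T2.T @ F_hat @ T1.
print(norm_pts)
```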

For the Fundamental matrix, you are working with 2D image points in pixels. The Essential matrix is $E = K^{T} F K$, and you are working with points in the normalized camera frame ($z = 1$).
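The relation can be checked numerically, assuming the same camera `K` for both images. Normalized coordinates are $x_n = K^{-1} x_{pix}$, so $F = K^{-T} E K^{-1}$, or equivalently $E = K^{T} F K$. The epipolar constraint then holds in pixels with $F$ and in normalized coordinates with $E$. This NumPy-only sketch uses the `K` from the question and a made-up pose. It ignores lens distortion (the question's `D`), which would have to be removed first, for example with `cv2.undistortPoints`.

```python
import numpy as np

K = np.array([[3551.342810, 0.0, 2033.513326],
              [0.0, 3522.689669, 1455.489194],
              [0.0, 0.0, 1.0]])

def skew(v):
    """Cross-product matrix: skew(v) @ w equals np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

a = np.deg2rad(5.0)
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(a), -np.sin(a)],
              [0.0, np.sin(a), np.cos(a)]])   # made-up rotation about x
t = np.array([-0.6, -0.3, 0.05])
t = t / np.linalg.norm(t)                     # unit length, as recoverPose returns

E = skew(t) @ R
K_inv = np.linalg.inv(K)
F = K_inv.T @ E @ K_inv                       # pixel-space Fundamental matrix

# Synthetic points seen by both cameras, projected to homogeneous pixels
rng = np.random.default_rng(1)
X1 = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(10, 3))
X2 = X1 @ R.T + t
pix1 = (K @ (X1 / X1[:, 2:]).T).T
pix2 = (K @ (X2 / X2[:, 2:]).T).T

# Back to the normalized camera frame (z = 1)
norm1 = (K_inv @ pix1.T).T
norm2 = (K_inv @ pix2.T).T

res_F = np.einsum('ij,jk,ik->i', pix2, F, pix1)    # x2_pix^T F x1_pix
res_E = np.einsum('ij,jk,ik->i', norm2, E, norm1)  # x2_n^T E x1_n
print(np.abs(res_F).max(), np.abs(res_E).max())
```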