Centering an OpenCV rotation [closed]

I'm having difficulty getting OpenCV rotations to come out centered.

The rotation must retain all of the data, so no clipping is allowed.

My first test cases use 90 and -90 degrees because those angles simplify the transformation matrix (see https://docs.opencv.org/3.0-beta/doc/...).
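
For reference, the OpenCV documentation describes `getRotationMatrix2D(center, angle, scale)` as returning the matrix below, where $(c_x, c_y)$ is `center`, $\theta$ is `angle` (positive means counter-clockwise) and $s$ is `scale`:

$$
M = \begin{bmatrix} \alpha & \beta & (1-\alpha)\,c_x - \beta\,c_y \\ -\beta & \alpha & \beta\,c_x + (1-\alpha)\,c_y \end{bmatrix},
\qquad \alpha = s\cos\theta,\quad \beta = s\sin\theta .
$$

With $s = 1$, the two test angles reduce to

$$
M_{+90^\circ} = \begin{bmatrix} 0 & 1 & c_x - c_y \\ -1 & 0 & c_x + c_y \end{bmatrix},
\qquad
M_{-90^\circ} = \begin{bmatrix} 0 & -1 & c_x + c_y \\ 1 & 0 & c_y - c_x \end{bmatrix},
$$

so at ±90° the translation column, and therefore where the rotated pixels land, depends only on the choice of $(c_x, c_y)$.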

I also thought the best way to observe the rotations was a simple test image whose border pixels are set, so I can see how the box rotates.
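
A border test image like this is easy to generate. A minimal sketch in plain Python, assuming a 10x10 image, a border value of 255 and a grey marker value of 128 placed at (x, y) = (4, 9); the function name and these values are illustrative and not taken from the original script:

```python
def make_border_test_image(w=10, h=10, border=255, marker=128, marker_xy=(4, 9)):
    """Build an h x w grayscale image as a list of rows: black inside, a
    one-pixel border of value `border`, and one grey `marker` pixel at
    marker_xy = (x, y), so the effect of a rotation on the box is easy to see."""
    img = [[0] * w for _ in range(h)]
    for x in range(w):
        img[0][x] = border          # top edge
        img[h - 1][x] = border      # bottom edge
    for y in range(h):
        img[y][0] = border          # left edge
        img[y][w - 1] = border      # right edge
    mx, my = marker_xy
    img[my][mx] = marker            # rows are indexed by y, columns by x
    return img

img = make_border_test_image()
print(img[9][4], img[0][0], img[5][5])  # 128 255 0
```

Wrapping the result as `np.array(img, dtype=np.uint8)` gives an array that `cv2` functions and `plt.imshow` accept.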

The Python code I tried came from Fremlin's blog post on rotation (http://john.freml.in/opencv-rotation).

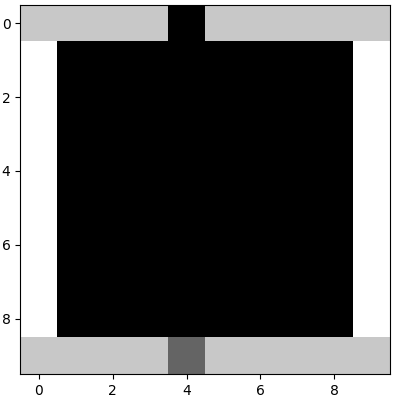

Below is a picture of the original, unrotated image displayed in Python. Use the grey point at (4,9) as a reference.

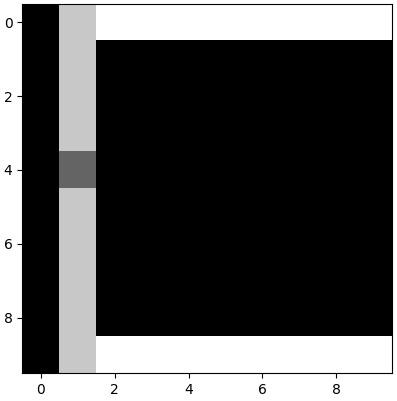

After running the Python script below, the rotated image is shifted one column to the right: the reference point ends up at (1,4) when it should be at (0,4).
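
The one-column shift can be reproduced by hand. A minimal sketch, assuming that (4,9) is read as (x, y) = (column, row), as matplotlib's cursor readout reports it, that the case shown is -90 degrees, and that with the offsets at 0 the script's effective center at ±90 degrees is (cols/2, rows/2), since nw ≈ w and nh ≈ h make the extra move negligible. `rotate_point_minus_90` is a hypothetical helper that applies the -90° form of the documented `getRotationMatrix2D` matrix to a single point:

```python
def rotate_point_minus_90(x, y, cx, cy):
    # getRotationMatrix2D(center=(cx, cy), angle=-90, scale=1) reduces to
    # [[0, -1, cx + cy],
    #  [1,  0, cy - cx]], so the point (x, y) maps to:
    return (-y + cx + cy, x + cy - cx)

w, h = 10, 10
ref = (4, 9)  # grey reference point, assumed to be (x, y) = (column, row)

print(rotate_point_minus_90(*ref, w / 2, h / 2))              # (1.0, 4.0)
print(rotate_point_minus_90(*ref, (w - 1) / 2, (h - 1) / 2))  # (0.0, 4.0)
```

Under these assumptions, the (cols/2, rows/2) center puts the point one column too far right, while the half-pixel-shifted center puts it at (0,4).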

Below is the Python script. I added width and height offsets to the function so I could experiment with offsets to the tx and ty translation parameters. Setting the width offset to 1 made the 90-degree rotation match Matlab, but it didn't fix the -90-degree case.

UPDATE 1/19 9AM: I tried setting the offsets (wOffset and hOffset) to -0.5 in rotate_about_center() below, and the 90 and -90 degree rotations now center as expected. For a 10x10 image, the reason this may work is that (cols/2, rows/2) gives (5,5), whereas the actual center of the pixel grid is (4.5, 4.5). The same logic applies to an 11x11 image: the center is not (5.5, 5.5) but (5, 5). Rotations of 45 and -45 degrees still don't center, meaning they don't look visually centered in the nw x nh box. So I think I understand why a center of (cols/2 - 0.5, rows/2 - 0.5) works and a center of (cols/2, rows/2) does not; however, most examples I've found do not subtract the 0.5.
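
The half-pixel reasoning can be checked exhaustively for the ±90° cases. A small sketch in plain Python (no OpenCV needed) that rebuilds the documented `getRotationMatrix2D` matrix and tests whether every pixel of an n x n grid lands back on a valid pixel of the same grid. `rotation_matrix` and `maps_grid_onto_itself` are hypothetical helpers; the check assumes pixel centers sit at integer coordinates and a square image, so the output grid equals the input grid at ±90°:

```python
import math

def rotation_matrix(cx, cy, angle_deg, scale=1.0):
    # 2x3 matrix in the layout the OpenCV docs give for getRotationMatrix2D.
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return [[a, b, (1 - a) * cx - b * cy],
            [-b, a, b * cx + (1 - a) * cy]]

def maps_grid_onto_itself(n, angle_deg, c):
    """True if every pixel (x, y) of an n x n grid, rotated about (c, c),
    lands on a valid pixel of the same grid (rounding away float noise)."""
    m = rotation_matrix(c, c, angle_deg)
    for y in range(n):
        for x in range(n):
            nx = round(m[0][0] * x + m[0][1] * y + m[0][2])
            ny = round(m[1][0] * x + m[1][1] * y + m[1][2])
            if not (0 <= nx < n and 0 <= ny < n):
                return False
    return True

# Compare the (cols/2, rows/2) center with the (cols/2 - 0.5, rows/2 - 0.5) one.
for n in (10, 11):
    for angle in (90, -90):
        print(n, angle,
              maps_grid_onto_itself(n, angle, n / 2),
              maps_grid_onto_itself(n, angle, n / 2 - 0.5))
# Every line ends in "False True": only the half-pixel-shifted center works.
```

This grid test is only meaningful at multiples of 90°. At 45° the rotated pixels fall between grid points and the output canvas is larger than the input, so the translation that places the rotated box on the nw x nh canvas matters as well.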

    import cv2
    from matplotlib import pyplot as plt
    import functools
    import math
    import numpy as np

    bwimshow = functools.partial(plt.imshow, vmin=0, vmax=255,
                                 cmap=plt.get_cmap('gray'))

    def rotate_about_center(src, angle, widthOffset=0., heightOffset=0, scale=1.):
        w = src.shape[1]
        h = src.shape[0]
        # Add offset to correct for the center of the image.
        wOffset = -0.5
        hOffset = -0.5
        rangle = np.deg2rad(angle)  # angle in radians
        # now calculate the new image width and height
        nw = (abs(np.sin(rangle)*h) + abs(np.cos(rangle)*w))*scale
        nh = (abs(np.cos(rangle)*h) + abs(np.sin(rangle)*w))*scale
        print("nw = ", nw, "nh = ", nh)
        # ask OpenCV for the rotation matrix
        rot_mat = cv2.getRotationMatrix2D((nw*0.5 + wOffset, nh*0.5 + hOffset), angle, scale)
        # calculate the move from the old center to the new center combined
        # with the rotation
        rot_move = np.dot(rot_mat, np.array([(nw-w)*0.5 + widthOffset, (nh-h)*0.5 + heightOffset, 0]))
        # the move only affects the translation, so update the translation
        # part of the transform
        rot_mat[0 ...

This is not a Matlab forum (don't expect anyone here to understand what you're doing there),

but things will get better (promise!) if you can abstract away from Matlab and clearly state what you want to happen on the OpenCV side.

(For example, flip() or rotate() might do just what you want.)
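
On that suggestion: exact multiples of 90 degrees need no interpolation at all. Recent OpenCV versions provide `cv2.rotate(src, cv2.ROTATE_90_CLOCKWISE)` (with `ROTATE_90_COUNTERCLOCKWISE` and `ROTATE_180` as the other flags), and `cv2.flip(cv2.transpose(src), 1)` gives the same clockwise result. As a plain-Python illustration of the equivalent index remapping, assuming a list-of-rows image (the helper name is hypothetical):

```python
def rotate90_clockwise(img):
    """Exact 90-degree clockwise rotation of a list-of-rows image.

    Pure index remapping with no interpolation, so nothing can shift by half
    a pixel; the output's width is the input's height and vice versa."""
    h, w = len(img), len(img[0])
    # Output pixel (row r, col c) comes from input (row h - 1 - c, col r).
    return [[img[h - 1 - c][r] for c in range(h)] for r in range(w)]

print(rotate90_clockwise([[1, 2, 3],
                          [4, 5, 6]]))  # [[4, 1], [5, 2], [6, 3]]
```

Because the output size simply swaps width and height, this approach also satisfies the no-clipping requirement for ±90 degrees without any centering arithmetic.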

Point taken. I rephrased the question as a rotation-centering problem and removed the Matlab reference.