Projecting Rays using OpenCV and Camera Matrix

I would like to cast rays from the camera and check the intersected points on the table, which is I assume at (0,0,0) with normal (0,1,0).. so for example I choose a point on a table by mouse, then I cast rays from the origin (0,0,0) to the plane, but the problem is I get a false point, that is not on the plane but still positive.

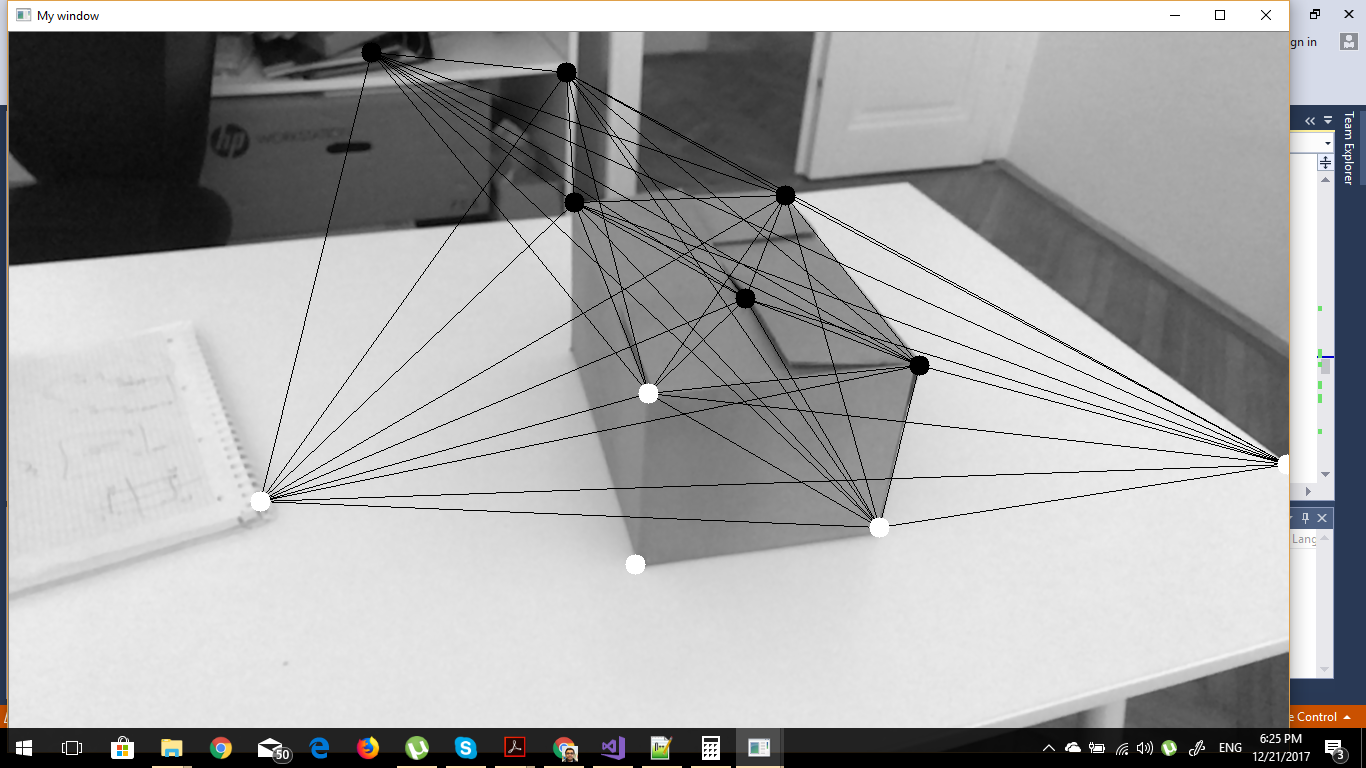

here is a picture to show what I'm doing

//Generate a camera intrinsic matrix

Mat K = (Mat_<double>(3, 3)

<< 1600, 0,src.cols/2,

0, 1600,src.rows/2,

0, 0, 1);

Mat invCameraIntrinsics = K.inv();

cout << "inv" << invCameraIntrinsics;

std::vector<cv::Vec3d> pointsTransformed3D;

std::vector<cv::Vec3d> points3D;

for (int i = 0; i < corners.size(); i++)

{

cv::Vec3d pt;

pt[2] = 1.0f;

pt[0] = (corners[i].x );

pt[1] = (corners[i].y );

points3D.push_back(pt);

}

cv::transform(points3D, pointsTransformed3D, invCameraIntrinsics);

Mat rot = (Mat_<double>(3, 3)

<< 0.8744617, 0.2258282, -0.4293233,

0.0608088, 0.8270180, 0.5588771,

0.4812683, -0.5148232, 0.7094631);

std::vector<cv::Point3d> pointsRotated;

Mat translVec(3, 1, CV_64F);

translVec.at<double>(0, 0) = 21.408294677734375;

translVec.at<double>(1, 0) = 531.1319580078125;

translVec.at<double>(2, 0) = 705.74224853515625;

const Mat camera_translation = (-rot * translVec);

cv::transform(pointsTransformed3D, pointsRotated, rot.inv());

std::vector<Ray> rays;

for (int i = 0; i < pointsRotated.size(); i++)

{

Ray ray;

ray.origin = Vec3f(camera_translation.at<double>(0,0), camera_translation.at<double>(1,0),

camera_translation.at<double>(2,0));

ray.direction = Vec3f(pointsRotated[i][0], pointsRotated[i][1], pointsRotated[i][2]);

rays.push_back(ray);

}

std::vector<cv::Vec3f> contacts;

for (int i = 0; i < pointsRotated.size(); i++)

{

Vec3f pt(0, 0,0);

cv::Vec3f contact;

std::pair<bool, double> test = linePlaneIntersection(rays[i].direction, rays[i].origin, Vec3f(0, 1, 0), pt);

if (test.first == true)

{

cv::Vec3f contact(rays[i].origin + (rays[i].direction) * test.second);

contacts.push_back(contact);

}

}

It looks like you're generating the rays correctly, but you do know that the camera is (0,0,0) in that coordinate system, right? So the table would be somewhere at a positive z, which does not match what you said.

I would expect all of the Rays there to have a +z axis.

Thanks for your comment. I know the position of the camera... should I generate the the ray from the origin (position) of the camera ?

Yes. The line of sight is from the camera, therefore your ray should be too.

How about transforming the 2D points into 3D points ? is the above code correct ? or should I use the ViewMatrix and get the inverse of it then transform the 2D points into 3D using that inverse matrix ?

That's correct. Of course, there's no depth information, but that's what your plane is for.

Thanks. So the problem of measuring the cube is reduced to Finding 3D positions, generate rays from the Camera position into the plane(the table) and see intersected points, then find perpendicular lines ?

Yep. The hardest part of that is probably the camera position relative to the table, but ARUCO markers provide an easy solution to that if you don't already have one.

Thanks. How would I convert those corner points into 3D positions ? I'm having problem with that