Template matching: finding the rotation of an object in a scene

Hi,

I'm trying to detect objects in a scene and find their angles of rotation relative to the axis.

Let me explain in more detail.

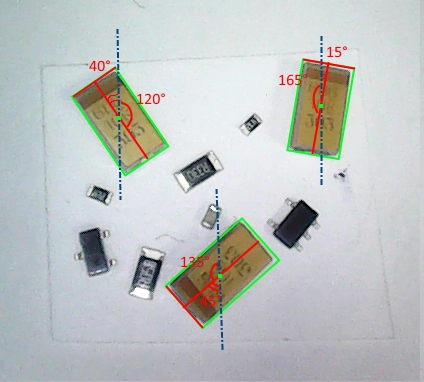

I get pictures from a camera that look something like this (they show electronic components, which may be mixed together and rotated randomly):

I tried template matching, using the following image as the template:

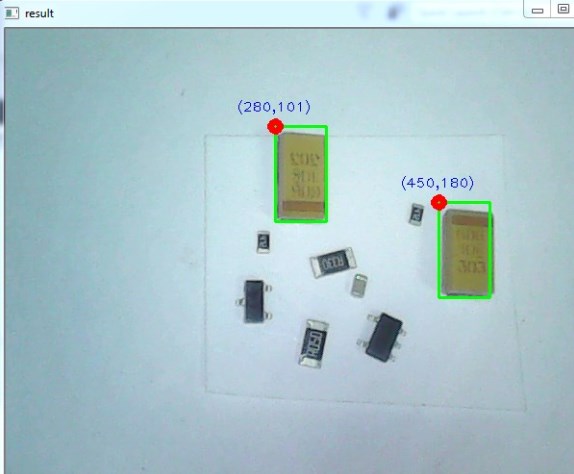

After processing, I got the following result (with some annotations drawn over it):

How can I detect that one of them is rotated by 180 degrees?

I'm using the template matching tutorial as a starting point.

Here is what the image looks like after template matching (CV_TM_SQDIFF_NORMED):

I'm not sure it is possible to tell which side of a matched region is the top and which is the bottom. Maybe I'm heading in the wrong direction and need a different algorithm?
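One possible way to tell 0° from 180° for a part that is not symmetric end to end: once a match has been found, score the same location again with the template rotated by 180° (with OpenCV, `cv::flip(templ, templ180, -1)` flips both axes, which is a 180° rotation) and keep whichever orientation gives the lower TM_SQDIFF_NORMED value. This is a sketch, not tested on these images; to run without OpenCV it uses a small hypothetical `Gray` struct in place of `cv::Mat` and computes the SQDIFF_NORMED formula from the OpenCV documentation by hand. `Gray`, `rotate180`, `sqdiffNormed` and `orientationAt` are illustrative names, not OpenCV API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical stand-in for a single-channel cv::Mat: row-major pixels.
struct Gray {
    int rows, cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[r * cols + c]; }
};

// Rotating by 180 degrees just reverses the row-major pixel order
// (cv::flip(src, dst, -1) does the same on a cv::Mat).
Gray rotate180(const Gray& g) {
    Gray out = g;
    std::reverse(out.px.begin(), out.px.end());
    return out;
}

// TM_SQDIFF_NORMED score of template t with its top-left corner at (y, x):
// sum (T - I)^2 / sqrt(sum T^2 * sum I^2). Lower is better; 0 is a perfect match.
double sqdiffNormed(const Gray& img, const Gray& t, int y, int x) {
    assert(y >= 0 && x >= 0 && y + t.rows <= img.rows && x + t.cols <= img.cols);
    double diff = 0, tt = 0, ii = 0;
    for (int r = 0; r < t.rows; ++r)
        for (int c = 0; c < t.cols; ++c) {
            double a = t.at(r, c), b = img.at(y + r, x + c);
            diff += (a - b) * (a - b);
            tt += a * a;
            ii += b * b;
        }
    double denom = std::sqrt(tt * ii);
    // An all-black patch or template has no defined score; treat it as the worst match.
    return denom > 0 ? diff / denom : std::numeric_limits<double>::infinity();
}

// Given a match at (y, x), report whether the upright (0) or the flipped (180)
// template explains that patch better.
int orientationAt(const Gray& img, const Gray& t, int y, int x) {
    double upright = sqdiffNormed(img, t, y, x);
    double flipped = sqdiffNormed(img, rotate180(t), y, x);
    return flipped < upright ? 180 : 0;
}
```

If the two scores come out nearly equal, the component probably looks the same both ways up, and the answer should not be trusted.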

One more thing:

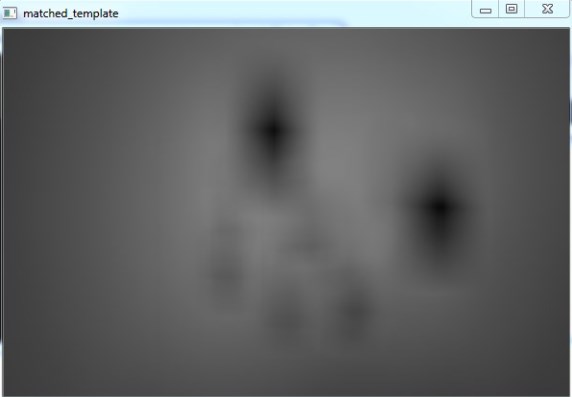

As you can see, when the object is rotated by 90 degrees, it is harder to find a match (even with normalization):
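When the part is turned by 90°, the upright template no longer lines up with it (its width and height are swapped), so a poor match is expected. A common workaround, sketched here as an assumption rather than taken from the tutorial, is to repeat the search with the template rotated in 90° steps and keep the best score overall; recent OpenCV versions provide `cv::rotate(templ, dst, cv::ROTATE_90_CLOCKWISE)` for producing the rotated templates. This library-free version uses plain TM_SQDIFF for brevity, and `Gray`, `rotate90cw`, `sqdiff`, `Match` and `bestRotatedMatch` are hypothetical names.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical stand-in for a single-channel cv::Mat: row-major pixels.
struct Gray {
    int rows, cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[r * cols + c]; }
};

// Rotate 90 degrees clockwise; rows and cols swap
// (cv::rotate(src, dst, cv::ROTATE_90_CLOCKWISE) on a cv::Mat).
Gray rotate90cw(const Gray& g) {
    Gray out{g.cols, g.rows, std::vector<double>(g.px.size())};
    for (int r = 0; r < out.rows; ++r)
        for (int c = 0; c < out.cols; ++c)
            out.px[r * out.cols + c] = g.at(g.rows - 1 - c, r);
    return out;
}

// Plain TM_SQDIFF at one position: sum of squared differences (lower is better).
double sqdiff(const Gray& img, const Gray& t, int y, int x) {
    assert(y + t.rows <= img.rows && x + t.cols <= img.cols);
    double s = 0;
    for (int r = 0; r < t.rows; ++r)
        for (int c = 0; c < t.cols; ++c) {
            double d = t.at(r, c) - img.at(y + r, x + c);
            s += d * d;
        }
    return s;
}

struct Match { int angle, y, x; double score; };

// Try the template at 0, 90, 180 and 270 degrees (clockwise) at every position
// where it fits, and keep the lowest score overall.
Match bestRotatedMatch(const Gray& img, Gray t) {
    Match best{0, -1, -1, std::numeric_limits<double>::infinity()};
    for (int angle = 0; angle < 360; angle += 90, t = rotate90cw(t))
        for (int y = 0; y + t.rows <= img.rows; ++y)
            for (int x = 0; x + t.cols <= img.cols; ++x) {
                double s = sqdiff(img, t, y, x);
                if (s < best.score) best = {angle, y, x, s};
            }
    return best;
}
```

Angles that are not multiples of 90° would need finer steps, for example rotating the template with `cv::getRotationMatrix2D` and `cv::warpAffine`, at a proportional cost in run time.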

UPDATE

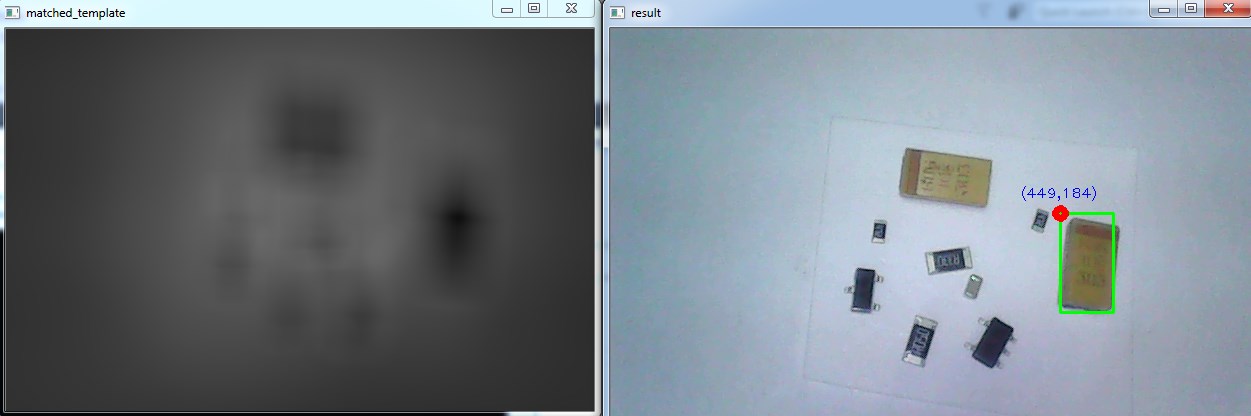

Here is what I want to get in the end:

If I can find the rotation, I can automatically find the top of the component. (I should note that for this particular component it might be fine to use color detection on the wide brown stripe at its top, but other components, such as the two black diodes with 3 and 5 pins, may not be detectable this way, so I'm fairly sure I need some kind of template here anyway.)
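A different technique, not template matching, that may help with finding the top: image moments. The second-order central moments give the angle of the component's long axis, `theta = 0.5 * atan2(2 * mu11, mu20 - mu02)`, but only up to 180°; the sign of the third moment along that axis can then choose a direction, provided the part's brightness is distributed asymmetrically along its length (for a symmetric part the direction stays arbitrary). OpenCV's `cv::moments` returns `mu20`, `mu02` and `mu11` for a region. The sketch below computes everything by hand on a hypothetical `Gray` struct; `orientationDeg` and `rotationDeg` are illustrative names, and angles are in image coordinates with y pointing down.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for a single-channel cv::Mat: row-major pixels,
// where brighter pixels count as more "mass" of the component.
struct Gray {
    int rows, cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[r * cols + c]; }
};

// Direction of the component's long axis in degrees, [0, 360), measured from
// the +x axis in image coordinates (y points down).
double orientationDeg(const Gray& g) {
    const double kPi = std::acos(-1.0);
    double m00 = 0, m10 = 0, m01 = 0;
    for (int r = 0; r < g.rows; ++r)
        for (int c = 0; c < g.cols; ++c) {
            double w = g.at(r, c);
            m00 += w; m10 += w * c; m01 += w * r;
        }
    assert(m00 > 0);
    double cx = m10 / m00, cy = m01 / m00;  // centroid

    // Second-order central moments give the principal axis (ambiguous by 180 degrees).
    double mu20 = 0, mu02 = 0, mu11 = 0;
    for (int r = 0; r < g.rows; ++r)
        for (int c = 0; c < g.cols; ++c) {
            double w = g.at(r, c), dx = c - cx, dy = r - cy;
            mu20 += w * dx * dx; mu02 += w * dy * dy; mu11 += w * dx * dy;
        }
    double theta = 0.5 * std::atan2(2 * mu11, mu20 - mu02);

    // Third moment along the axis: flip the axis so it points toward the
    // lighter, more spread-out end of the mass distribution.
    double ux = std::cos(theta), uy = std::sin(theta), skew = 0;
    for (int r = 0; r < g.rows; ++r)
        for (int c = 0; c < g.cols; ++c) {
            double d = (c - cx) * ux + (r - cy) * uy;
            skew += g.at(r, c) * d * d * d;
        }
    if (skew < 0) theta += kPi;  // skew == 0: symmetric part, direction is arbitrary

    return std::fmod(theta * 180.0 / kPi + 360.0, 360.0);
}

// Rotation of a matched patch relative to the template, in degrees [0, 360).
double rotationDeg(const Gray& tpl, const Gray& patch) {
    return std::fmod(orientationDeg(patch) - orientationDeg(tpl) + 360.0, 360.0);
}
```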

Here is the code:

main:

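```cpp
#include <opencv2/opencv.hpp>
#include <vector>

using namespace cv;
using namespace std;

// Defined below.
vector<Point> imageComparation(Mat object, Mat scene, int match_method, float peek_percent);
void drawMatch(Mat object, Mat scene, vector<Point> match_centers);

int main(int argc, char** argv)
{
    Mat image_input, component_template;

    VideoCapture cap(0);
    cap >> image_input;
    //Mat image_input = imread("d:\\SRC.jpg");

    component_template = imread("d:\\TMP.jpg");
    if (image_input.empty() || component_template.empty())
        return 1; // camera frame or template image could not be read

    vector<Point> match_centers = imageComparation(component_template, image_input, TM_SQDIFF_NORMED, 0.9);
    drawMatch(component_template, image_input, match_centers);

    imshow("result", image_input);
    waitKey(0);
    return 0;
}
```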

Image comparison function:

```cpp
vector<Point> imageComparation(Mat object, Mat scene, int match_method, float peek_percent)
{
    // Create the result matrix: one score per possible top-left position of the template
    int result_cols = scene.cols - object.cols + 1;
    int result_rows = scene.rows - object.rows + 1;
    Mat result(result_rows, result_cols, CV_32FC1); // Mat takes (rows, cols)

    // match scene with template
    matchTemplate(scene, object, result, match_method);
    imshow("matched_template", result);

    // NOTE: NORM_MINMAX rescales every map so its best SQDIFF score becomes 0,
    // so the threshold below always keeps at least one location
    normalize(result, result, 0, 1, NORM_MINMAX, -1, Mat());
    imshow("normalized", result);

    // For SQDIFF and SQDIFF_NORMED, the best matches are lower values. For all the other methods, the higher the better.
    // Both branches turn the map into "higher is better" so one peak search works for either case.
    if (match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED)
    {
        threshold(result, result, 0.1, 1, THRESH_BINARY_INV);
        imshow("threshold_1", result);
    }
    else
    {
        threshold(result, result, 0.9, 1, THRESH_TOZERO);
        imshow("threshold_2", result);
    }

    // Repeatedly take the strongest remaining peak and black out the area around it
    // so the same component is not reported twice.
    vector<Point> res;
    double minVal, maxVal = 1.0;
    Point minLoc, maxLoc;
    while (maxVal > peek_percent)
    {
        minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc, Mat());
        if (maxVal > peek_percent)
        {
            rectangle(result, Point(maxLoc.x - object.cols / 2, maxLoc.y - object.rows / 2),
                      Point(maxLoc.x + object.cols / 2, maxLoc.y + object.rows / 2), Scalar::all(0), -1);
            res.push_back(maxLoc); // top-left corner of the match in scene coordinates
        }
    }
    return res;
}
```
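About the peak search in `imageComparation`: `normalize(result, result, 0, 1, NORM_MINMAX, ...)` rescales each result map so that its best TM_SQDIFF_NORMED value becomes 0, so the 0.1 threshold always keeps at least one location, even in a frame that contains no component. Thresholding the raw TM_SQDIFF_NORMED map instead keeps scores comparable between frames. The sketch below performs the same greedy "take the best spot, then blank out its neighbourhood" search as the existing loop, on a plain row-major float array; `Peak`, `pickMatches` and its parameters are hypothetical, and the `maxScore` cut-off would have to be tuned on real frames.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <vector>

struct Peak { int x, y; float score; };

// Greedy peak picking on a raw TM_SQDIFF_NORMED result map (row-major, lower = better):
// take the best remaining location while it is below maxScore, then blank out a
// suppressW x suppressH window around it so the same component is not reported twice.
std::vector<Peak> pickMatches(std::vector<float> result, int rows, int cols,
                              float maxScore, int suppressW, int suppressH) {
    assert(static_cast<int>(result.size()) == rows * cols);
    const float kUsed = std::numeric_limits<float>::infinity();
    assert(maxScore < kUsed);  // a finite cut-off guarantees the loop ends
    std::vector<Peak> peaks;
    while (!result.empty()) {
        auto best = std::min_element(result.begin(), result.end());
        if (*best > maxScore) break;  // nothing good enough is left
        int idx = static_cast<int>(best - result.begin());
        int y = idx / cols, x = idx % cols;
        peaks.push_back({x, y, *best});
        for (int yy = std::max(0, y - suppressH / 2); yy <= std::min(rows - 1, y + suppressH / 2); ++yy)
            for (int xx = std::max(0, x - suppressW / 2); xx <= std::min(cols - 1, x + suppressW / 2); ++xx)
                result[yy * cols + xx] = kUsed;
    }
    return peaks;
}
```

With OpenCV, `result` would be the CV_32FC1 map returned by `matchTemplate` (without the `normalize` call), and a suppression window about the size of the template mirrors what the filled rectangle in `imageComparation` does.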

drawing stuff:

```cpp
void drawMatch(Mat object, Mat scene, vector<Point> match_centers) {
    for (size_t i = 0; i < match_centers ...
```

Take a look at https://docs.opencv.org/master/d1/dee...

Why not build a cascade approach?

Finding an all-in-one solution is, in most cases, the worst approach.