How to extract angle, scale, translation and shear for a rotated and scaled object

Problem description

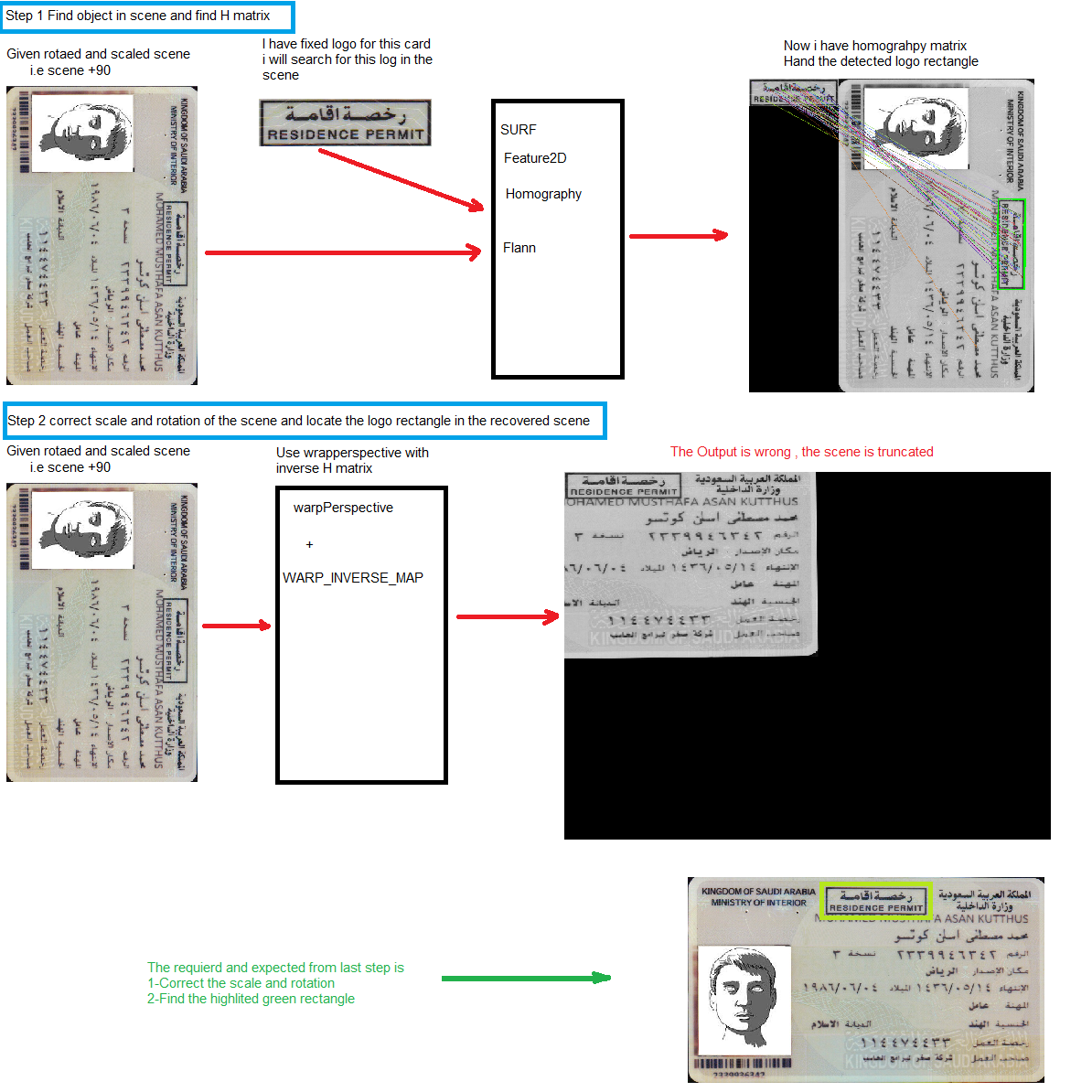

I have a rotated and scaled scene and need to correct the scale and rotation, then find the rectangle of a known object in the corrected image.

Input

- Scene image from a camera or scanner
- Normalized template image of the known object (normal scale, 0 degree rotation)

Required output

1. Correct the scale and rotation of the input scene
2. Find the rectangle of the known object

The following figure explains the input and the steps to find the output.

I'm using the sample [Features2D + Homography to find a known object](http://docs.opencv.org/2.4/doc/tutori...) to find the rotated and scaled object.

I used the following code:

    //read the input image
    Mat img_object = imread( strObjectFile, CV_LOAD_IMAGE_GRAYSCALE );
    Mat img_scene = imread( strSceneFile, CV_LOAD_IMAGE_GRAYSCALE );

    if( img_scene.empty() || img_object.empty())
    {
        return ERROR_READ_FILE;
    }

    //Step 1 Find the object in the scene and find H matrix

    //-- 1: Detect the keypoints using SURF Detector
    int minHessian = 400;
    SurfFeatureDetector detector( minHessian );
    std::vector<KeyPoint> keypoints_object, keypoints_scene;
    detector.detect( img_object, keypoints_object );
    detector.detect( img_scene, keypoints_scene );

    //-- 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    Mat descriptors_object, descriptors_scene;
    extractor.compute( img_object, keypoints_object, descriptors_object );
    extractor.compute( img_scene, keypoints_scene, descriptors_scene );

    //-- 3: Matching descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    std::vector< DMatch > matches;
    matcher.match( descriptors_object, descriptors_scene, matches );

    double max_dist = 0; double min_dist = 100;

    //-- Quick calculation of max and min distances between keypoints
    for( int i = 0; i < descriptors_object.rows; i++ )
    {
        double dist = matches[i].distance;
        if( dist < min_dist )
            min_dist = dist;
        if( dist > max_dist )
            max_dist = dist;
    }

    //-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )
    std::vector< DMatch > good_matches;
    for( int i = 0; i < descriptors_object.rows; i++ )
    {
        if( matches[i].distance < 3*min_dist )
        {
            good_matches.push_back( matches[i]);
        }
    }

    Mat img_matches;
    drawMatches( img_object, keypoints_object, img_scene, keypoints_scene,
                 good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                 vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

    //Draw matched points
    imwrite("c:\\temp\\Matched_Pints.png",img_matches);

    //-- Localize the object
    std::vector<Point2f> obj;
    std::vector<Point2f> scene;
    for( int i = 0; i < good_matches.size(); i++ )
    {
        //-- Get the keypoints from the good matches
        obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );
        scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );
    }

    Mat H = findHomography( obj, scene, CV_RANSAC );

    //-- Get the corners from the image_1 ( the object to be "detected" )
    std::vector<Point2f> obj_corners(4);
    obj_corners[0] = cvPoint(0,0); obj_corners[1] = cvPoint( img_object.cols, 0 );
    obj_corners[2] = cvPoint( img_object.cols, img_object.rows ); obj_corners[3] = cvPoint( 0, img_object.rows );
    std::vector<Point2f> scene_corners(4);
    perspectiveTransform( obj_corners, scene_corners, H);

    //-- Draw lines between the corners (the mapped object in the scene - image_2 )
    line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar(0, 255, 0), 4 );
    line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
    line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );
    line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

    //-- Show detected matches
    //imshow( "Good Matches & Object detection", img_matches );
    imwrite ...

Perhaps I'm missing something, but why not just do warpPerspective with the WARP_INVERSE_MAP flag? Why do you need to decompose the homography matrix?
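If the individual parameters are still wanted, one possible sketch is below. It assumes the homography is close to affine (the perspective terms h31 and h32 are negligible) and factors the upper-left 2x2 block as rotation · shear · scale. The names `Mat3`, `AffineParams` and `decomposeNearAffine` are made up for this illustration, and other factorization orders would give different numbers for the same matrix.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix: {h11, h12, h13, h21, h22, h23, h31, h32, h33}.
using Mat3 = std::array<double, 9>;

// Hypothetical result type for this sketch.
struct AffineParams {
    double angle_deg;  // rotation taking the x axis towards the y axis
                       // (looks clockwise when the image y axis points down)
    double scale_x;
    double scale_y;    // negative if the transform contains a reflection
    double shear;      // x-shear factor m in [[1, m], [0, 1]]
    double tx;
    double ty;
};

// Assumes H[6] and H[7] are close to zero, i.e. H is (nearly) affine.
// Factors A = [[a, b], [c, d]] as R(angle) * [[1, m], [0, 1]] * diag(sx, sy).
AffineParams decomposeNearAffine(const Mat3& H) {
    assert(std::fabs(H[8]) > 1e-12);
    const double a = H[0] / H[8], b = H[1] / H[8];
    const double c = H[3] / H[8], d = H[4] / H[8];

    const double scale_x = std::hypot(a, c);
    assert(scale_x > 1e-12);
    const double scale_y = (a * d - b * c) / scale_x;
    assert(std::fabs(scale_y) > 1e-12);
    const double k = (a * b + c * d) / scale_x;  // equals m * scale_y

    const double kPi = std::acos(-1.0);
    AffineParams p;
    p.angle_deg = std::atan2(c, a) * 180.0 / kPi;
    p.scale_x = scale_x;
    p.scale_y = scale_y;
    p.shear = k / scale_y;
    p.tx = H[2] / H[8];
    p.ty = H[5] / H[8];
    return p;
}
```

With a real perspective component (noticeable h31 or h32) these numbers are only a local approximation, which is one reason warping with the full matrix is usually simpler.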

Thanks @Tetragramm, I didn't know that WARP_INVERSE_MAP would recover my original image. I will try it, but how do I get the final object rectangle in the recovered image? Which function calculates the rectangle?
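On the rectangle question, a minimal sketch of the underlying arithmetic: push the four template corners through a 3x3 matrix and take the axis-aligned bounding box of the results. This is plain C++ rather than OpenCV; `Pt`, `Box`, `applyHomography`, `boundingBox` and `mappedRect` are names invented for the example. In OpenCV the same two steps correspond roughly to `perspectiveTransform` followed by `boundingRect`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat3 = std::array<double, 9>;  // row-major 3x3 matrix
struct Pt { double x, y; };
struct Box { double x, y, width, height; };

// Applies a projective transform to one point (divides by the third coordinate).
Pt applyHomography(const Mat3& H, Pt p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(std::fabs(w) > 1e-12);
    Pt q = {(H[0] * p.x + H[1] * p.y + H[2]) / w,
            (H[3] * p.x + H[4] * p.y + H[5]) / w};
    return q;
}

// Axis-aligned bounding box of a non-empty point set.
Box boundingBox(const std::vector<Pt>& pts) {
    assert(!pts.empty());
    double minx = pts[0].x, maxx = pts[0].x;
    double miny = pts[0].y, maxy = pts[0].y;
    for (const Pt& p : pts) {
        minx = std::min(minx, p.x); maxx = std::max(maxx, p.x);
        miny = std::min(miny, p.y); maxy = std::max(maxy, p.y);
    }
    Box b = {minx, miny, maxx - minx, maxy - miny};
    return b;
}

// Maps the corners of a cols x rows template through M and boxes the result.
Box mappedRect(const Mat3& M, double cols, double rows) {
    std::vector<Pt> corners = {{0, 0}, {cols, 0}, {cols, rows}, {0, rows}};
    for (Pt& c : corners) c = applyHomography(M, c);
    return boundingBox(corners);
}
```

If the scene has been warped exactly into the template's frame, `M` is the identity and the rectangle is just `(0, 0, cols, rows)`; if an extra offset was applied to the warp, `M` would be that offset alone.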

I was trying to do the mathematics myself; you saved me time.

Hi @Tetragramm, I have used warpPerspective with WARP_INVERSE_MAP to get the recovered image after removing the rotation, scale, shear and translation. The rotation is corrected but the image is shifted. Also, how can I calculate the recovered image size? I will add images to the question to show what I mean.

So, I'm not quite sure what you're trying to do, but I'm sure we can do it all without decomposing the homography.

The output image there looks like you successfully transformed from the scene to the model. If you made the output the same size as the model image, you would just have the scene box matching the model box, ready for denoising or whatever you're doing.

Then to put it back into your image, you just use warpPerspective again, but this time without the WARP_INVERSE_MAP flag, and with the BORDER_TRANSPARENT type to place it back where it originally came from.

Am I correct that that is what you wanted to do, just that small box? If you're trying to do something with the whole permit, we'll need to change it up a little.

What I'm doing is as follows:

I tried warpPerspective with BORDER_TRANSPARENT and without WARP_INVERSE_MAP, but the result is flipped along the y axis. Using warpPerspective with WARP_INVERSE_MAP gives a nearly correct result, but the origin (top-left corner) of the recovered image is the object's top-left corner, not the scene's top-left corner. Maybe we need to recalculate the homography matrix to change the top-left corner?
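One way to recalculate the matrix, sketched under assumptions: invert H (object to scene) so it maps scene to object frame, push the four scene corners through it, and prepend a translation that moves the bounding box of those corners to the origin. The box size is then a candidate output size. The code is plain C++ for the arithmetic only; `RecoveredFrame` and `recoveredFrame` are hypothetical names, and the sketch assumes the scene corners stay in front of the camera (no sign change in the projective divisor).

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<double, 9>;  // row-major 3x3 matrix
struct Pt { double x, y; };

struct RecoveredFrame {
    Mat3 M;      // maps scene pixels to recovered-image pixels
    int width;   // suggested output size
    int height;
};

Mat3 multiply(const Mat3& A, const Mat3& B) {
    Mat3 C{};
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            for (int k = 0; k < 3; ++k)
                C[3 * r + c] += A[3 * r + k] * B[3 * k + c];
    return C;
}

// Inverse via the adjugate; asserts that the matrix is not singular.
Mat3 inverse(const Mat3& m) {
    const double det = m[0] * (m[4] * m[8] - m[5] * m[7])
                     - m[1] * (m[3] * m[8] - m[5] * m[6])
                     + m[2] * (m[3] * m[7] - m[4] * m[6]);
    assert(std::fabs(det) > 1e-12);
    const double s = 1.0 / det;
    Mat3 inv = {
        (m[4] * m[8] - m[5] * m[7]) * s, (m[2] * m[7] - m[1] * m[8]) * s, (m[1] * m[5] - m[2] * m[4]) * s,
        (m[5] * m[6] - m[3] * m[8]) * s, (m[0] * m[8] - m[2] * m[6]) * s, (m[2] * m[3] - m[0] * m[5]) * s,
        (m[3] * m[7] - m[4] * m[6]) * s, (m[1] * m[6] - m[0] * m[7]) * s, (m[0] * m[4] - m[1] * m[3]) * s};
    return inv;
}

Pt applyHomography(const Mat3& H, Pt p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(std::fabs(w) > 1e-12);
    Pt q = {(H[0] * p.x + H[1] * p.y + H[2]) / w,
            (H[3] * p.x + H[4] * p.y + H[5]) / w};
    return q;
}

// H maps object -> scene (as returned by findHomography(obj, scene, ...)).
RecoveredFrame recoveredFrame(const Mat3& H, double sceneCols, double sceneRows) {
    const Mat3 Hinv = inverse(H);  // scene -> object frame
    const Pt corners[4] = {{0, 0}, {sceneCols, 0}, {sceneCols, sceneRows}, {0, sceneRows}};
    Pt first = applyHomography(Hinv, corners[0]);
    double minx = first.x, maxx = first.x, miny = first.y, maxy = first.y;
    for (int i = 1; i < 4; ++i) {
        const Pt q = applyHomography(Hinv, corners[i]);
        minx = std::min(minx, q.x); maxx = std::max(maxx, q.x);
        miny = std::min(miny, q.y); maxy = std::max(maxy, q.y);
    }
    // Translation that moves the warped scene's bounding box to (0, 0).
    const Mat3 T = {1, 0, -minx, 0, 1, -miny, 0, 0, 1};
    RecoveredFrame f;
    f.M = multiply(T, Hinv);
    f.width = static_cast<int>(std::ceil(maxx - minx));
    f.height = static_cast<int>(std::ceil(maxy - miny));
    return f;
}
```

With OpenCV, the matching call would presumably be `warpPerspective(img_scene, recovered, M, Size(width, height))` with `M` copied into a 3x3 `CV_64F` `Mat` and no `WARP_INVERSE_MAP` flag, since `M` already maps scene to output. This has not been checked against the images in the question.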