Problem description

I have a rotated and scaled scene and need to correct its scale and rotation, then find the rectangle of a known object in the corrected image.

Input

- Scene image from a camera or scanner

- Normalized template image of the known object (normal scale, 0 degrees rotation)

Required output

1- Correct the scale and rotation of the input scene

2- Find the rectangle of the known object

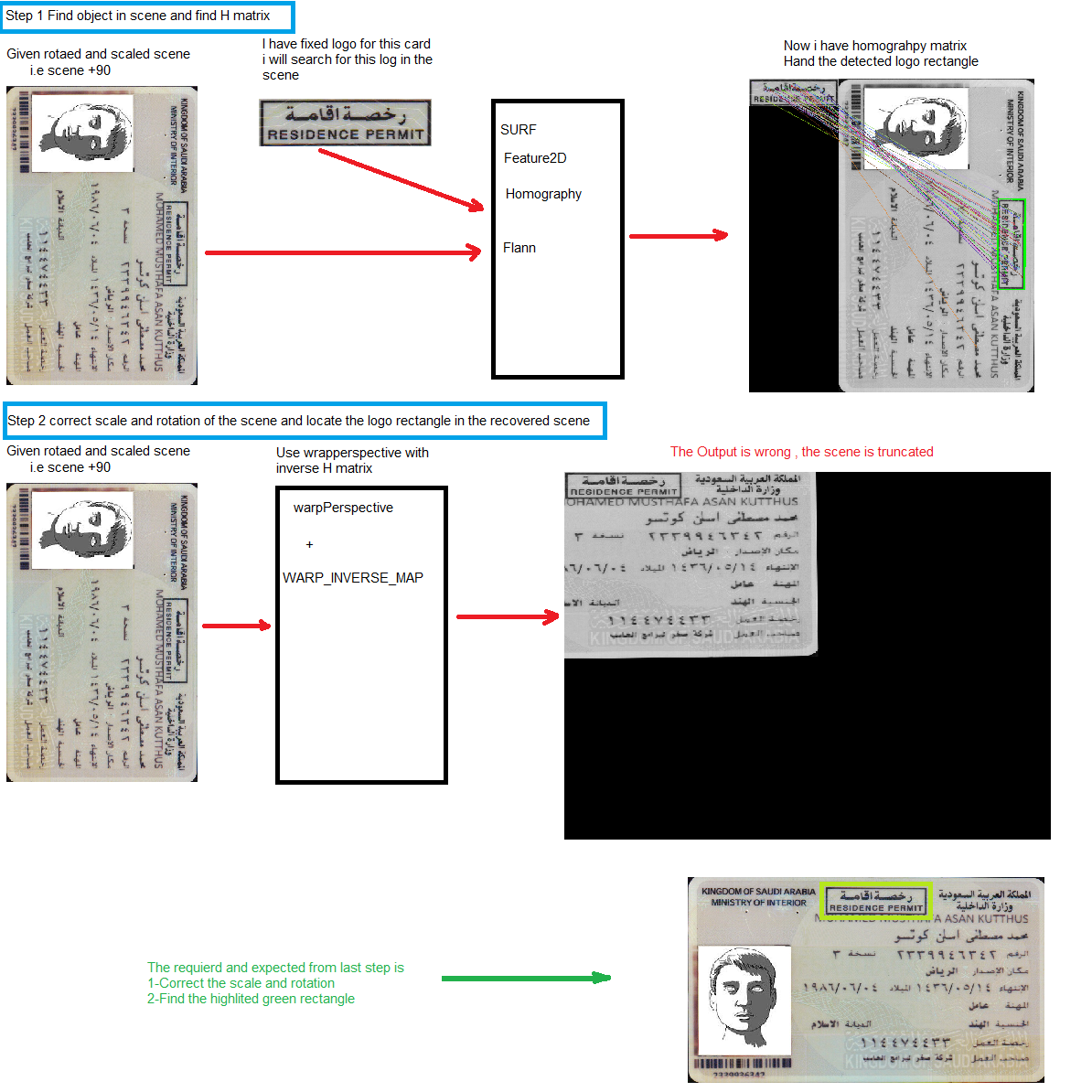

The figure shows the input and the steps to produce the output.

I'm using the sample [Features2D + Homography to find a known object](http://docs.opencv.org/2.4/doc/tutorials/features2d/feature_homography/feature_homography.html) to find the rotated and scaled object.


I used the following code:

//read the input image

Mat img_object = imread( strObjectFile, CV_LOAD_IMAGE_GRAYSCALE );

Mat img_scene = imread( strSceneFile, CV_LOAD_IMAGE_GRAYSCALE );

if( img_scene.empty() || img_object.empty())

{

return ERROR_READ_FILE;

}

//Step 1 Find the object in the scene and find H matrix

//-- 1: Detect the keypoints using SURF Detector

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_object, keypoints_scene;

detector.detect( img_object, keypoints_object );

detector.detect( img_scene, keypoints_scene );

//-- 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_object, descriptors_scene;

extractor.compute( img_object, keypoints_object, descriptors_object );

extractor.compute( img_scene, keypoints_scene, descriptors_scene );

//-- 3: Matching descriptor vectors using FLANN matcher

FlannBasedMatcher matcher;

std::vector< DMatch > matches;

matcher.match( descriptors_object, descriptors_scene, matches );

double max_dist = 0; double min_dist = 100;

//-- Quick calculation of max and min distances between keypoints

for( int i = 0; i < descriptors_object.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist )

min_dist = dist;

if( dist > max_dist )

max_dist = dist;

}

//-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_object.rows; i++ )

{

if( matches[i].distance < 3*min_dist )

{

good_matches.push_back( matches[i]);

}

}

Mat img_matches;

drawMatches( img_object, keypoints_object, img_scene, keypoints_scene,

good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//Draw matched points

imwrite("c:\\temp\\Matched_Pints.png",img_matches);

//-- Localize the object

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( size_t i = 0; i < good_matches.size(); i++ )

{

//-- Get the keypoints from the good matches

obj.push_back( keypoints_object[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_scene[ good_matches[i].trainIdx ].pt );

}

Mat H = findHomography( obj, scene, CV_RANSAC );

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint(0,0); obj_corners[1] = cvPoint( img_object.cols, 0 );

obj_corners[2] = cvPoint( img_object.cols, img_object.rows ); obj_corners[3] = cvPoint( 0, img_object.rows );

std::vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

line( img_matches, scene_corners[0] + Point2f( img_object.cols, 0), scene_corners[1] + Point2f( img_object.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( img_object.cols, 0), scene_corners[2] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( img_object.cols, 0), scene_corners[3] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( img_object.cols, 0), scene_corners[0] + Point2f( img_object.cols, 0), Scalar( 0, 255, 0), 4 );

//-- Show detected matches

//imshow( "Good Matches & Object detection", img_matches );

imwrite("c:\\temp\\Object_detection_result.png",img_matches);

//Step 2 correct the scene scale and rotation and locate object in the recovered scene

Mat img_Recovered;

//1-calculate the new image size for the recovered image

// I have not found the correct way to compute the new size, so for now I take the scene diagonal and ignore the scale

int idiag = sqrt(double(img_scene.cols * img_scene.cols + img_scene.rows * img_scene.rows));

Size ImgSize = Size(idiag, idiag);//initial image size

//2-find the warped (recovered , corrected scene)

warpPerspective(img_scene,img_Recovered,H,ImgSize,CV_INTER_LANCZOS4+WARP_INVERSE_MAP);

//3-find the logo (object , model,...) in the recovered scene

std::vector<Point2f> Recovered_corners(4);

perspectiveTransform( scene_corners, Recovered_corners, H.inv());

imwrite("c:\\temp\\Object_detection_Recoverd.png",img_Recovered);

This works fine for detecting the object: the object is saved without scale, rotation, or shear.

Now I want to get the original scene image back after denoising it, so I need to calculate the following:

- Rotation angle

- Scale

- Shear angle

- Translation

So my question is: how can I use the homography matrix from the sample to get these values?
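To make the question concrete, here is a minimal sketch of one way these values could be read off H, assuming the perspective terms (the first two entries of the last row) are close to zero, as they would be for a flat scan. In that case the upper-left 2x2 block can be split into rotation, scale, and shear, and the last column gives the translation. The names `Mat3`, `AffineParams`, and `decomposeAffinePart` are hypothetical and not part of OpenCV; with a `cv::Mat` of type `CV_64F`, the entries would be read with `H.at<double>(row, col)`.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Plain 3x3 matrix, row-major (hypothetical helper type, not an OpenCV type).
using Mat3 = std::array<std::array<double, 3>, 3>;

struct AffineParams {
    double rotation_deg;  // rotation angle in degrees
    double scale_x;       // scale along x
    double scale_y;       // scale along y (negative if the image is mirrored)
    double shear;         // shear factor, i.e. the tangent of the shear angle
    double tx, ty;        // translation
    double perspective;   // size of the perspective terms; should be near 0
};

// Splits the affine part of H as  A = R(theta) * [[1, shear], [0, 1]] * diag(sx, sy).
// Only meaningful when `perspective` is close to zero.
inline AffineParams decomposeAffinePart(const Mat3& H) {
    const double w = H[2][2];
    assert(w != 0.0);  // a homography is defined up to scale; normalize by H[2][2]

    const double a = H[0][0] / w, b = H[0][1] / w;
    const double c = H[1][0] / w, d = H[1][1] / w;
    const double det = a * d - b * c;
    assert(det != 0.0);  // a degenerate matrix cannot be decomposed

    AffineParams p{};
    p.scale_x = std::hypot(a, c);
    p.rotation_deg = std::atan2(c, a) * 180.0 / std::acos(-1.0);
    p.scale_y = det / p.scale_x;
    p.shear = (a * b + c * d) / (p.scale_x * p.scale_y);
    p.tx = H[0][2] / w;
    p.ty = H[1][2] / w;
    p.perspective = std::hypot(H[2][0] / w, H[2][1] / w);
    return p;
}
```

Since H in the code above maps object coordinates to scene coordinates, the values returned for H would describe how the template appears in the scene; the shear angle, if needed, would be `std::atan(p.shear)`.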

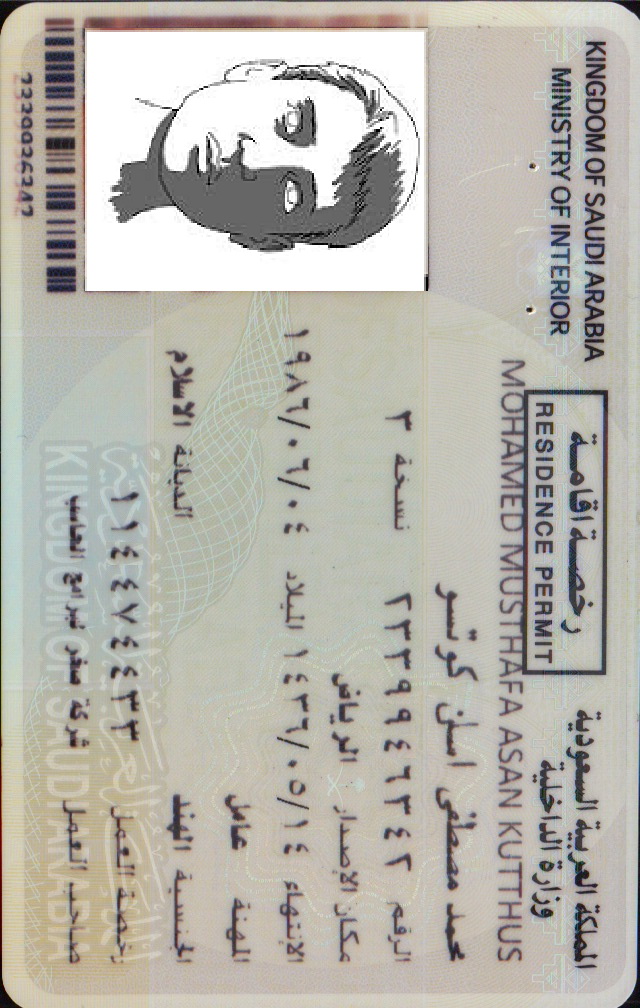

I tried to get the recovered (denoised) image by using warpPerspective with WARP_INVERSE_MAP, but the image was not converted correctly.

Here is the object image I used:

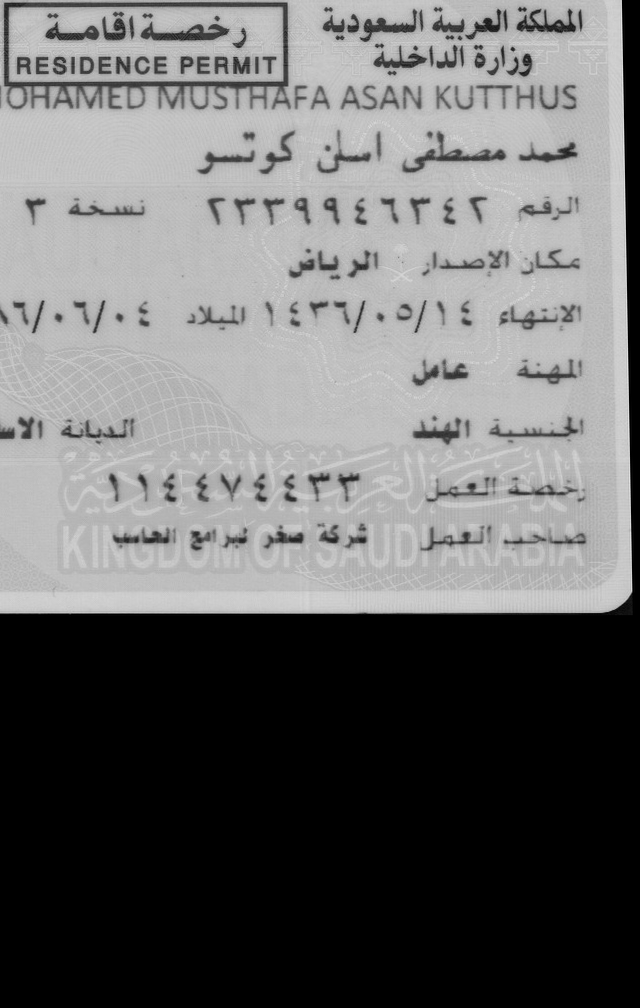

Here is the scene image I used:

Then, after calculating the homography matrix H as described in the sample, I used the following code:

Mat img_Recovered;

warpPerspective(img_scene,img_Recovered,H,img_scene.size(),CV_INTER_LANCZOS4+WARP_INVERSE_MAP);

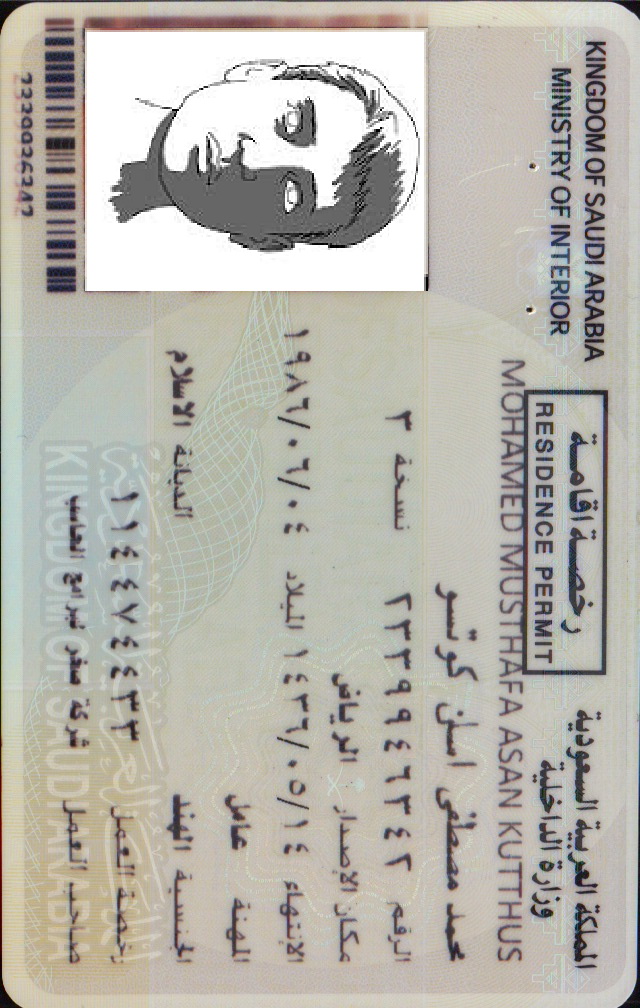

This is the resulting image.

As you can see, the recovered image was not produced correctly. I noticed that the recovered image is drawn starting from the origin of the object.

I have several questions:

1- How to calculate the correct size of the recovered image

2- How to get the recovered image correctly

3- How to get the object rectangle in the recovered image

4- How to find the rotation angle and scale

Thanks for your help.
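For questions 1 to 3, one possible approach (a sketch under assumptions, not a verified fix) is to map the four scene corners through the forward transform, take their bounding box as the output size, and shift everything by the negative of the box's top-left corner so nothing lands at negative coordinates. The names `Point2`, `WarpBounds`, `applyHomography`, and `warpedBounds` are hypothetical; `M` is assumed to be the forward map from scene to recovered coordinates, which in my code would be `H.inv()`. It also assumes the perspective terms are mild enough that no corner maps to infinity.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Plain 3x3 matrix, row-major (hypothetical helper type, not an OpenCV type).
using Mat3 = std::array<std::array<double, 3>, 3>;

struct Point2 { double x, y; };

struct WarpBounds {
    double offset_x, offset_y;  // shift that moves the warped image into view
    int width, height;          // size of the image that holds the whole result
};

// Applies the homography M to a single point.
inline Point2 applyHomography(const Mat3& M, Point2 p) {
    const double w = M[2][0] * p.x + M[2][1] * p.y + M[2][2];
    assert(w != 0.0);  // the point would map to infinity
    return { (M[0][0] * p.x + M[0][1] * p.y + M[0][2]) / w,
             (M[1][0] * p.x + M[1][1] * p.y + M[1][2]) / w };
}

// Bounding box of a src_width x src_height image after the forward map M.
inline WarpBounds warpedBounds(const Mat3& M, int src_width, int src_height) {
    const double W = src_width, Hh = src_height;
    const std::array<Point2, 4> corners = {{ {0.0, 0.0}, {W, 0.0}, {W, Hh}, {0.0, Hh} }};

    Point2 first = applyHomography(M, corners[0]);
    double min_x = first.x, max_x = first.x, min_y = first.y, max_y = first.y;
    for (const Point2& c : corners) {
        const Point2 q = applyHomography(M, c);
        min_x = std::min(min_x, q.x);  max_x = std::max(max_x, q.x);
        min_y = std::min(min_y, q.y);  max_y = std::max(max_y, q.y);
    }
    return { -min_x, -min_y,
             static_cast<int>(std::ceil(max_x - min_x)),
             static_cast<int>(std::ceil(max_y - min_y)) };
}
```

With these values, the warp could presumably be done with the forward matrix `T * H.inv()` (without `WARP_INVERSE_MAP`) and the size `(width, height)`, where `T` is a 3x3 translation by `(offset_x, offset_y)`. The object rectangle in the recovered image would then be the template corners shifted by the same offset.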
