|

2014-02-12 08:22:43 -0600

| answered a question | How to compare two image and need result in percentage wise? |

|

2014-02-12 07:37:03 -0600

| received badge | ● Supporter

|

|

2014-02-10 06:28:59 -0600

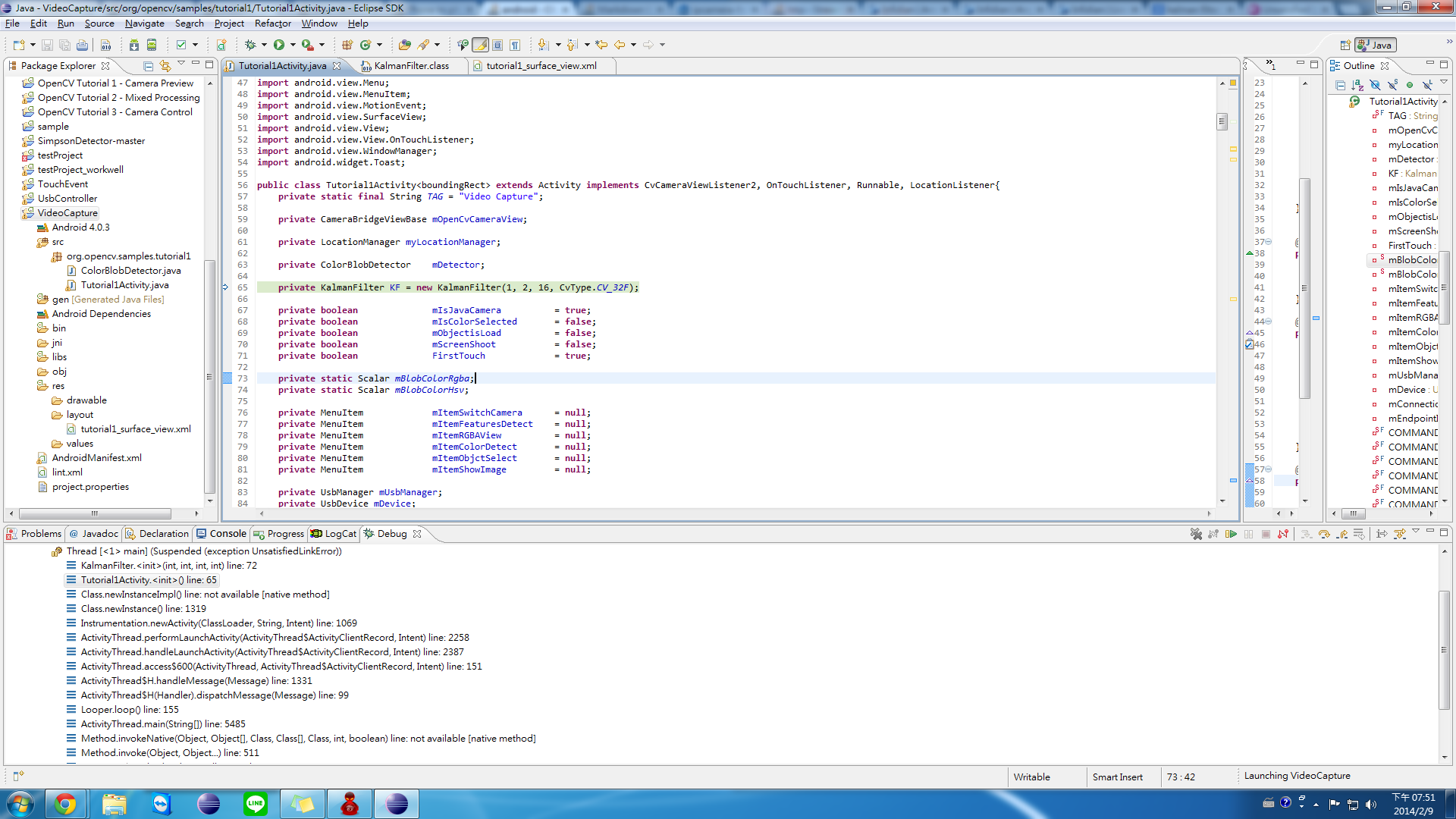

| asked a question | Can't use KalmanFilter in Android I'm writing an app that tracks an object's center point, but when I define the KalmanFilter it shows me this error, and this is my code:

public class KalmanFilter<boundingRect> extends Activity implements CvCameraViewListener2, OnTouchListener, Runnable, LocationListener{

private static final String TAG = "Kalman Filter";

private CameraBridgeViewBase mOpenCvCameraView;

private LocationManager myLocationManager;

private ColorBlobDetector mDetector;

private KalmanFilter KF = new KalmanFilter(1, 2, 16, CvType.CV_64F);

/*

*

*

*

*

*

*/

public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

mRgba = inputFrame.rgba();

mGray = inputFrame.gray();

/*

*

* code for color detect

*

*/

CenterX = mRgba.width() / 2;

CenterY = mRgba.rows() / 2;

int ObjectCenterX = (int)((mboundingRect.tl().x + mboundingRect.br().x) / 2);

int ObjectCenterY = (int)((mboundingRect.tl().y + mboundingRect.br().y) / 2);

Core.circle(mRgba, new Point(ObjectCenterX, ObjectCenterY), 5, ColorGreen, 2);

try {

Mat KalmanObjectPoint = new Mat(CvType.CV_32F);

int[] KalmanPreObjectCenter = {ObjectCenterX, ObjectCenterY};

KalmanObjectPoint.put(0, 0, KalmanPreObjectCenter[0]);

KalmanObjectPoint.put(0, 1, KalmanPreObjectCenter[1]);

KalmanObjectPoint = myKalmanFilter(KalmanObjectPoint);

} catch (Exception e) {

Log.e("In camera frame: ", e.toString());

}

// onCameraFrame must return the frame to display
return mRgba;

}

/***********************************Kalman filter***********************************************/

private Mat myKalmanFilter(Mat objectPoint){

Mat correctMat = new Mat(CvType.CV_64F);

Mat predictMat = new Mat(CvType.CV_64F);

try {

correctMat = objectPoint;

KF.correct(correctMat);

KF.predict(predictMat);

} catch (Exception e) {

Log.e("myKalmanFilter: ", e.toString());

}

return predictMat;

}

} Any help? |
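For reference, the predict/correct cycle that a tracker like this relies on can be sketched without OpenCV. The class below is a hypothetical, hand-rolled constant-velocity filter for a single axis (one instance each for x and y would be needed). `AxisKalman`, its noise parameters `q` and `r`, and the starting covariance are assumptions for illustration, not part of the OpenCV API. Separately, it may be worth checking whether naming the activity class `KalmanFilter` hides `org.opencv.video.KalmanFilter` inside that class, since the simple name could then refer to the activity itself.

```java
/** Hypothetical one-axis constant-velocity Kalman filter (not the OpenCV class). */
final class AxisKalman {
    private double pos, vel;                 // state: position and velocity
    private double p00 = 1000, p01 = 0,      // state covariance, large = "unknown"
                   p10 = 0,    p11 = 1000;
    private final double q, r;               // process and measurement noise (assumed)

    AxisKalman(double q, double r) { this.q = q; this.r = r; }

    /** Predicts one frame ahead (dt = 1) and returns the predicted position. */
    double predict() {
        pos += vel;
        // P = F P F^T + Q with F = [[1, 1], [0, 1]] and Q = q * I
        double n00 = p00 + p01 + p10 + p11 + q;
        double n01 = p01 + p11;
        double n10 = p10 + p11;
        double n11 = p11 + q;
        p00 = n00; p01 = n01; p10 = n10; p11 = n11;
        return pos;
    }

    /** Folds in a measured position and returns the corrected position. */
    double correct(double measured) {
        double innovation = measured - pos;
        double s = p00 + r;                  // innovation covariance
        double k0 = p00 / s, k1 = p10 / s;   // Kalman gain
        pos += k0 * innovation;
        vel += k1 * innovation;
        // P = (I - K H) P with H = [1, 0]
        double n00 = (1 - k0) * p00, n01 = (1 - k0) * p01;
        double n10 = p10 - k1 * p00, n11 = p11 - k1 * p01;
        p00 = n00; p01 = n01; p10 = n10; p11 = n11;
        return pos;
    }

    double velocity() { return vel; }

    public static void main(String[] args) {
        AxisKalman kx = new AxisKalman(1e-3, 1.0);
        double estimate = 0;
        for (int frame = 0; frame < 50; frame++) {
            kx.predict();                          // predict first ...
            estimate = kx.correct(2.0 * frame);    // ... then correct with the detection
        }
        System.out.println("x=" + estimate + " v=" + kx.velocity());
    }
}
```

The order matters in this sketch: each frame calls `predict()` once and then `correct()` with the detected center, and the value returned by `predict()` is the one to use when the detector loses the object.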

|

2013-12-21 02:44:54 -0600

| received badge | ● Scholar

|

|

2013-12-20 06:56:25 -0600

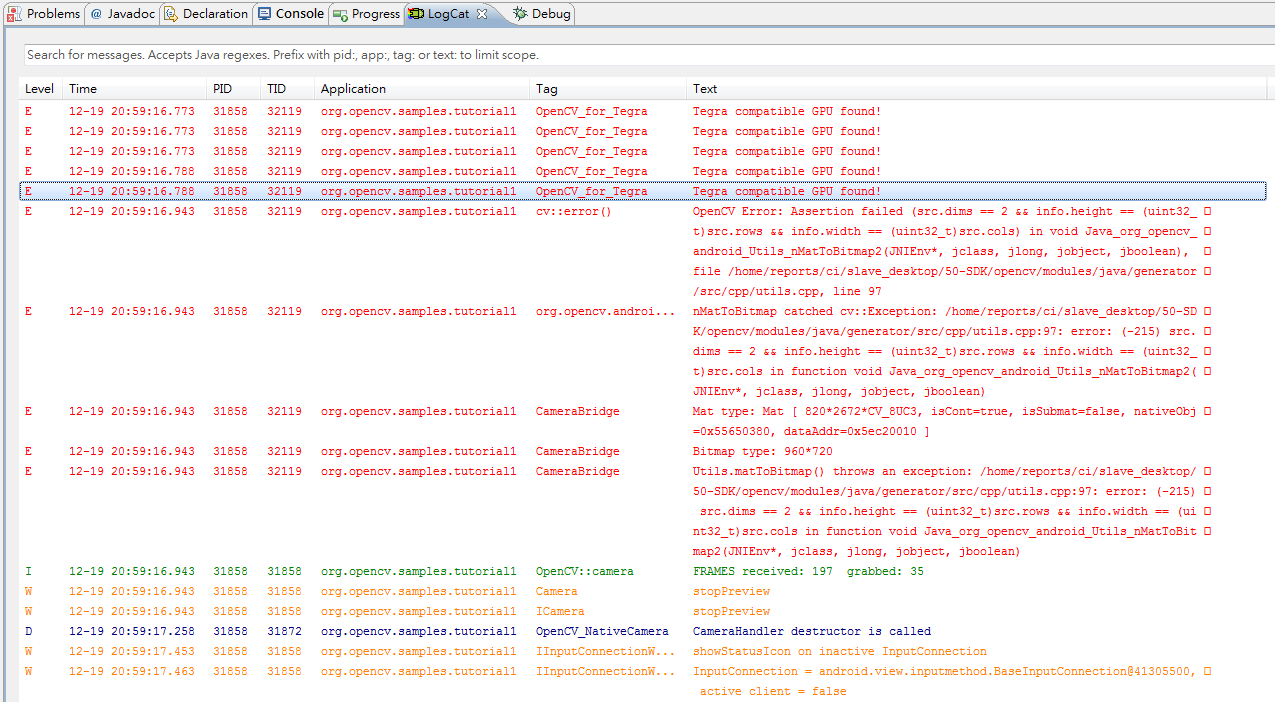

| asked a question | Android - Object detect I'm trying to implement object tracking with feature detection, but I got the following error:

The object does not need to be shown on screen, but I need to mark the object that I want to track. Here is my code:

public void onCameraViewStarted(int width, int height) {

mRgba = new Mat();

mGray = new Mat();

mView = new Mat();

mObject = new Mat();

}

public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

mRgba = inputFrame.rgba();

switch (viewMode) {

case VIEW_MODE_RGBA:

return mRgba;

case VIEW_MODE_FeatureDetect:

try {

mGray = inputFrame.gray();

mObject = new Mat();

mObject = Highgui.imread(Environment.getExternalStorageDirectory()+ "/Android/data/" + getApplicationContext().getPackageName() + "/Files/Object.jpg", Highgui.CV_LOAD_IMAGE_GRAYSCALE);

mView = mGray.clone();

FeatureDetector myFeatureDetector = FeatureDetector.create(FeatureDetector.ORB);

MatOfKeyPoint keypoints = new MatOfKeyPoint();

myFeatureDetector.detect(mGray, keypoints);

MatOfKeyPoint objectkeypoints = new MatOfKeyPoint();

myFeatureDetector.detect(mObject, objectkeypoints);

DescriptorExtractor Extractor = DescriptorExtractor.create(DescriptorExtractor.ORB);

Mat sourceDescriptors = new Mat();

Mat objectDescriptors = new Mat();

Extractor.compute(mGray, keypoints, sourceDescriptors);

// Object descriptors must be computed on the object image, not the camera frame
Extractor.compute(mObject, objectkeypoints, objectDescriptors);

DescriptorMatcher matcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE);

MatOfDMatch matches = new MatOfDMatch();

matcher.match(sourceDescriptors, objectDescriptors, matches);

Features2d.drawMatches(mGray, keypoints, mObject, objectkeypoints, matches, mView);

return mView;

} catch (Exception e) {

Log.d("Exception",e.getMessage());

}

}

return mRgba;

}

Sorry about my English; I hope you understand what I'm asking. Thanks for any suggestions.

EDIT: Thanks to Moster's suggestion, I added the code Imgproc.resize(mView, mView, mGray.size()); after Features2d.drawMatches(mGray, keypoints, mObject, objectkeypoints, matches, mView); and now it works. |
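A likely reason the resize helps: `drawMatches` lays the two images side by side, so its output is wider than the camera frame, while the camera view expects a frame of the original size. The arithmetic can be sketched in plain Java. `SideBySide` and the example dimensions are hypothetical, and the layout rule (widths added, larger height kept) is stated here as an assumption about `drawMatches`, not something taken from the post.

```java
/** Hypothetical helper: size of a canvas that shows two images side by side. */
final class SideBySide {
    /** Returns {width, height} of the combined canvas. */
    static int[] canvasSize(int w1, int h1, int w2, int h2) {
        return new int[] { w1 + w2, Math.max(h1, h2) };
    }

    /** True when the canvas already has the size of the camera frame. */
    static boolean matchesFrame(int[] canvas, int frameW, int frameH) {
        return canvas[0] == frameW && canvas[1] == frameH;
    }

    public static void main(String[] args) {
        // Example numbers only: a 1280x720 camera frame and a 320x240 object image.
        int[] canvas = canvasSize(1280, 720, 320, 240);
        System.out.println(canvas[0] + "x" + canvas[1]
                + " fits frame: " + matchesFrame(canvas, 1280, 720));
    }
}
```

Resizing back to the frame size squeezes the side-by-side view horizontally, which is acceptable for debugging but distorts the picture.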

|

2013-12-02 02:47:15 -0600

| asked a question | Which smart phone support NativeCameraView? I want to know which Android smartphones support NativeCameraView, preferably models released recently. |

|

2013-11-29 04:54:13 -0600

| answered a question | Can't open Nativecamera with Nexus7 in android 4.3 I think my Nexus 7 does not support NativeCameraView, because the same thing works on an HTC One X. |

|

2013-11-26 07:32:25 -0600

| received badge | ● Editor

|

|

2013-11-26 07:27:55 -0600

| asked a question | Can't open Nativecamera with Nexus7 in android 4.3 I tried OpenCV Tutorial 1 - Camera Preview. JavaCameraView works fine, but when I toggle to NativeCameraView, logcat shows this:

I/OCVSample::Activity(4802): called onOptionsItemSelected; selected item: Toggle Native/Java camera

D/FpsMeter(4802): 14.66 FPS@1920x1080

D/JavaCameraView(4802): Disconnecting from camera

D/JavaCameraView(4802): Notify thread

D/JavaCameraView(4802): Wating for thread

D/JavaCameraView(4802): Finish processing thread

D/CameraBridge(4802): call surfaceChanged event

D/OpenCV::camera(4802): CvCapture_Android::CvCapture_Android(0)

D/OpenCV::camera(4802): Library name: libopencv_java.so

D/OpenCV::camera(4802): Library base address: 0x738ca000

D/OpenCV::camera(4802): Libraries folder found: /data/app-lib/org.opencv.engine-1/

D/OpenCV::camera(4802): CameraWrapperConnector::connectToLib: folderPath=/data/app-lib/org.opencv.engine-1/

E/OpenCV::camera(4802): ||libnative_camera_r2.3.3.so

E/OpenCV::camera(4802): ||libnative_camera_r4.3.0.so

E/OpenCV::camera(4802): ||libnative_camera_r4.2.0.so

E/OpenCV::camera(4802): ||libnative_camera_r2.2.0.so

E/OpenCV::camera(4802): ||libnative_camera_r4.0.0.so

E/OpenCV::camera(4802): ||libnative_camera_r4.0.3.so

E/OpenCV::camera(4802): ||libnative_camera_r4.1.1.so

E/OpenCV::camera(4802): ||libnative_camera_r3.0.1.so

D/OpenCV::camera(4802): try to load library 'libnative_camera_r4.3.0.so'

D/OpenCV::camera(4802): Loaded library '/data/app-lib/org.opencv.engine-1/libnative_camera_r4.3.0.so'

D/OpenCV_NativeCamera(4802): CameraHandler::initCameraConnect(0x73ef229d, 0, 0x70a92748, 0x0)

D/OpenCV_NativeCamera(4802): Current process name for camera init: org.opencv.samples.tutorial1

D/OpenCV_NativeCamera(4802): Instantiated new CameraHandler (0x73ef229d, 0x70a92748)

I/OpenCV_NativeCamera(4802): initCameraConnect: [antibanding=auto;antibanding-values=off,60hz,50hz,auto;auto-exposure-lock=false;auto-exposure-lock-supported=true;auto-whitebalance-lock=false;auto-whitebalance-lock-supported=true;effect=none;effect-values=none,mono,negative,solarize,sepia,posterize,whiteboard,blackboard,aqua;exposure-compensation=0;exposure-compensation-step=0.166667;flash-mode=off;flash-mode-values=off;focal-length=2.95;focus-areas=(0,0,0,0,0);focus-distances=Infinity,Infinity,Infinity;focus-mode=auto;focus-mode-values=infinity,auto,macro,continuous-video,continuous-picture;horizontal-view-angle=63.8164;jpeg-quality=90;jpeg-thumbnail-height=288;jpeg-thumbnail-quality=90;jpeg-thumbnail-size-values=512x288,480x288,256x154,432x288,320x240,176x144,0x0;jpeg-thumbnail-width=512;max-exposure-compensation=12;max-num-detected-faces-hw=0;max-num-detected-faces-sw=0;max-num-focus-areas=1;max-num-metering-areas=1;max-zoom=99;metering-areas=(0,0,0,0,0);min-exposure-compensation=-12;picture-format=jpeg;picture-format-values=jpeg;pic

D/OpenCV_NativeCamera(4802): Supported Cameras: (null)

D/OpenCV_NativeCamera(4802): Supported Picture Sizes: 2592x1944,2048x1536,1920x1080,1600x1200,1280x768,1280x720,1024x768,800x600,800x480,720x480,640x480,352x288,320x240,176x144

D/OpenCV_NativeCamera(4802): Supported Picture Formats: jpeg

D/OpenCV_NativeCamera(4802): Supported Preview Sizes: 1920x1080,1280x720,800x480,768x432,720x480,640x480,576x432,480x320,384x288,352x288,320x240,240x160,176x144

D/OpenCV_NativeCamera(4802): Supported Preview Formats: yuv420p,yuv420sp

D/OpenCV_NativeCamera(4802): Supported Preview Frame Rates: 15,24,30

D/OpenCV_NativeCamera(4802): Supported Thumbnail Sizes: 512x288,480x288,256x154,432x288,320x240,176x144,0x0

D/OpenCV_NativeCamera(4802): Supported Whitebalance Modes: auto,incandescent,fluorescent,warm-fluorescent,daylight,cloudy-daylight,twilight,shade

D/OpenCV_NativeCamera(4802): Supported Effects: none,mono,negative,solarize,sepia,posterize,whiteboard,blackboard,aqua

D/OpenCV_NativeCamera(4802): Supported Scene Modes: auto,landscape,snow,beach,sunset,night,portrait,sports,steadyphoto,candlelight,fireworks,party,night-portrait,theatre,action

D/OpenCV_NativeCamera(4802): Supported Focus Modes: infinity,auto,macro,continuous-video,continuous-picture

D/OpenCV_NativeCamera(4802): Supported Antibanding Options: off,60hz,50hz,auto

D/OpenCV_NativeCamera(4802 ... (more) |

|

2013-11-20 05:28:27 -0600

| commented question | grab() always returning false I have a similar problem. My code looks like this:

' public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

mRgba = inputFrame.rgba();

mGray = inputFrame.gray();

VideoCapture mcapture = new VideoCapture(0);

mcapture.open(Highgui.CV_CAP_ANDROID_COLOR_FRAME);

if(!mcapture.isOpened()){

// Font face and scale spelled out; BIND_AUTO_CREATE only happened to equal 1
Core.putText(mRgba, "Capture Fail", new Point(50, 50), Core.FONT_HERSHEY_PLAIN, 1.0, Color_Green);

}else{

Mat frame = new Mat();

Imgproc.cvtColor(mRgba, frame, Imgproc.COLOR_RGB2GRAY);

mcapture.retrieve(frame, 3);

mRgba = frame; }

return mRgba;

}'

but mcapture.isOpened() is always false |

|

2013-11-19 08:12:35 -0600

| asked a question | findHomography - The line come out not around object I'm doing feature matching. So far I can find features and draw the descriptors, but the lines that come out are not around the object and do not even form a rectangle. I have been stuck on this for a few days.

Thanks for the help.

Here is my result:

private void Featrue_found(){

MatOfKeyPoint templateKeypoints = new MatOfKeyPoint();

MatOfKeyPoint keypoints = new MatOfKeyPoint();

MatOfDMatch matches = new MatOfDMatch();

// imread allocates the Mat itself; the earlier new Mat(CvType.CV_32FC2) calls were unnecessary
Object = Highgui.imread(Environment.getExternalStorageDirectory()+ "/Android/data/" + getApplicationContext().getPackageName() + "/Files/Object.jpg", Highgui.CV_LOAD_IMAGE_UNCHANGED);

Resource = Highgui.imread(Environment.getExternalStorageDirectory()+ "/Android/data/" + getApplicationContext().getPackageName() + "/Files/Resource.jpg", Highgui.CV_LOAD_IMAGE_UNCHANGED);

Mat imageOut = Resource.clone();

//Don't use "FeatureDetector.FAST": the result was too bad

//"FeatureDetector.SURF" is a non-free method

//"FeatureDetector.ORB" is better for now

//FeatureDetector.SIFT can't be used

FeatureDetector myFeatures = FeatureDetector.create(FeatureDetector.ORB);

myFeatures.detect(Resource, keypoints);

myFeatures.detect(Object, templateKeypoints);

DescriptorExtractor Extractor = DescriptorExtractor.create(DescriptorExtractor.ORB);

Mat descriptors1 = new Mat();

Mat descriptors2 = new Mat();

Extractor.compute(Resource, keypoints, descriptors1);

// Template descriptors must be computed on the object image, not the scene
Extractor.compute(Object, templateKeypoints, descriptors2);

//add Feature descriptors

DescriptorMatcher matcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE);

matcher.match(descriptors1, descriptors2, matches);

List<DMatch> matches_list = matches.toList();

MatOfDMatch good_matches = new MatOfDMatch();

double max_dist = 0; double min_dist = 99;

//-- Quick calculation of max and min distances between keypoints

for( int i = 0; i < descriptors1.rows(); i++ )

{

double dist = matches_list.get(i).distance;

if( dist < min_dist )

min_dist = dist;

if( dist > max_dist )

max_dist = dist;

}

//-- Draw only "good" matches (i.e. whose distance is less than 3*min_dist )

for( int i = 0; i < descriptors1.rows(); i++ )

{

if( matches_list.get(i).distance < 3*min_dist ){

MatOfDMatch temp = new MatOfDMatch();

temp.fromArray(matches.toArray()[i]);

good_matches.push_back(temp);

}

}

Features2d.drawMatches(Resource, keypoints, Object, templateKeypoints, good_matches, imageOut);

LinkedList<Point> objList = new LinkedList<Point>();

LinkedList<Point> sceneList = new LinkedList<Point>();

List<DMatch> good_matches_list = good_matches.toList();

List<KeyPoint> keypoints_objectList = templateKeypoints.toList();

List<KeyPoint> keypoints_sceneList = keypoints.toList();

for(int i = 0; i<good_matches_list.size(); i++)

{

// match(descriptors1, descriptors2, ...) used the scene as query and the object as train
objList.addLast(keypoints_objectList.get(good_matches_list.get(i).trainIdx).pt);

sceneList.addLast(keypoints_sceneList.get(good_matches_list.get(i).queryIdx).pt);

}

MatOfPoint2f obj = new MatOfPoint2f();

obj.fromList(objList);

MatOfPoint2f scene = new MatOfPoint2f();

scene.fromList(sceneList);

//findHomography

//Mat hg = Calib3d.findHomography(obj, scene);

Mat hg = Calib3d.findHomography(obj, scene, Calib3d.RANSAC, min_dist);

Mat obj_corners = new Mat(4,1,CvType.CV_32FC2);

Mat scene_corners = new Mat(4,1,CvType.CV_32FC2);

obj_corners.put(0, 0, new double[] {0,0});

obj_corners.put(1, 0, new double[] {Object.cols(),0});

obj_corners.put(2, 0, new double[] {Object.cols(),Object.rows()});

obj_corners.put(3, 0, new double[] {0,Object.rows()});

//obj_corners:input

Core.perspectiveTransform(obj_corners, scene_corners, hg);

Core.line(imageOut, new Point(scene_corners.get(0,0)), new Point(scene_corners.get(1,0)), new Scalar(0, 255, 0),4);

Core.line(imageOut, new Point(scene_corners.get(1,0)), new Point(scene_corners.get(2,0)), new Scalar(0, 255, 0),4);

Core.line(imageOut, new Point(scene_corners.get(2,0)), new Point(scene_corners.get(3,0)), new Scalar(0, 255, 0),4);

Core.line(imageOut, new Point(scene_corners.get(3,0)), new Point(scene_corners.get(0,0)), new Scalar(0, 255, 0),4 ...

(more) |
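The bookkeeping in this kind of pipeline is easy to get backwards, so here is a small OpenCV-free sketch of the three steps: keeping matches under a distance threshold, pairing points by index, and projecting a corner through a 3x3 homography. `MatchSketch`, `Match`, and the helper names are hypothetical, and all numbers are made up. It assumes the convention that, for `matcher.match(a, b, ...)`, `queryIdx` indexes the first argument and `trainIdx` the second.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, OpenCV-free sketch of match filtering and corner projection. */
final class MatchSketch {
    /** Stand-in for DMatch: indices into the query and train keypoint lists. */
    static final class Match {
        final int queryIdx, trainIdx;
        final double distance;
        Match(int q, int t, double d) { queryIdx = q; trainIdx = t; distance = d; }
    }

    /** Keeps matches whose distance is below factor * (smallest distance). */
    static List<Match> keepGood(List<Match> all, double factor) {
        double min = Double.MAX_VALUE;
        for (Match m : all) min = Math.min(min, m.distance);
        List<Match> good = new ArrayList<>();
        for (Match m : all) if (m.distance < factor * min) good.add(m);
        return good;
    }

    /** Maps a point through homography h (row-major 3x3), with perspective division. */
    static double[] project(double[][] h, double x, double y) {
        double w = h[2][0] * x + h[2][1] * y + h[2][2];
        return new double[] {
            (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w
        };
    }

    public static void main(String[] args) {
        // Here the scene was the query (first argument) and the object the train set.
        double[][] scenePts = { {110, 220}, {130, 220}, {400, 50} };
        double[][] objectPts = { {10, 20}, {30, 20}, {5, 5} };
        List<Match> all = new ArrayList<>();
        all.add(new Match(0, 0, 10));
        all.add(new Match(1, 1, 12));
        all.add(new Match(2, 2, 90));   // outlier, dropped by the threshold
        for (Match m : keepGood(all, 3.0)) {
            double[] s = scenePts[m.queryIdx];   // queryIdx -> scene keypoints
            double[] o = objectPts[m.trainIdx];  // trainIdx -> object keypoints
            System.out.println("object " + o[0] + "," + o[1] + " -> scene " + s[0] + "," + s[1]);
        }
        // A pure translation by (100, 200) moves the object corner (0, 0) to (100, 200).
        double[][] h = { {1, 0, 100}, {0, 1, 200}, {0, 0, 1} };
        double[] corner = project(h, 0, 0);
        System.out.println(corner[0] + "," + corner[1]);
    }
}
```

If the smallest distance is 0, the strict comparison in `keepGood` keeps nothing, so a lower bound on the threshold may be worth adding.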