|

2017-10-05 10:26:10 -0600

| received badge | ● Student


|

|

2016-11-11 01:48:50 -0600

| commented question | Detect roman numerals in image: I would start without cropping; it can be added later if needed.

I'm using matchTemplate with TM_CCOEFF_NORMED. I experimented with several matching methods, and this one gave the best results for my case, though I suspect it is more computationally intensive.

I haven't found any tutorials relevant to my case yet, but I haven't looked thoroughly either. |
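For reference, the TM_CCOEFF_NORMED score at one template position can be sketched in plain Java, following the formula in the OpenCV matchTemplate documentation: both the template and the image window are shifted to zero mean before correlating, so a uniform brightness offset does not change the score. Arrays of doubles stand in for grayscale Mats; the class and method names are hypothetical, the zero-variance case is simply reported as 0 in this sketch, and OpenCV's own implementation is far more optimized.

```java
/** Plain-Java sketch of the TM_CCOEFF_NORMED score (names are hypothetical). */
final class CcoeffNormed {

    /** Score of template t placed with its top-left corner at (x, y) in img. */
    static double scoreAt(double[][] img, double[][] t, int x, int y) {
        int h = t.length, w = t[0].length;
        int n = h * w;

        // Means of the template and of the image window under it
        double meanT = 0, meanI = 0;
        for (int r = 0; r < h; r++) {
            for (int c = 0; c < w; c++) {
                meanT += t[r][c];
                meanI += img[y + r][x + c];
            }
        }
        meanT /= n;
        meanI /= n;

        // Correlate the mean-centred values and normalize by both energies
        double num = 0, sumT2 = 0, sumI2 = 0;
        for (int r = 0; r < h; r++) {
            for (int c = 0; c < w; c++) {
                double dt = t[r][c] - meanT;
                double di = img[y + r][x + c] - meanI;
                num += dt * di;
                sumT2 += dt * dt;
                sumI2 += di * di;
            }
        }
        double den = Math.sqrt(sumT2 * sumI2);
        // A flat template or window has no variance; this sketch reports 0 there
        return den == 0 ? 0 : num / den;
    }

    /** Scores every position where the template fits, like matchTemplate's result map. */
    static double[][] scoreMap(double[][] img, double[][] t) {
        int rows = img.length - t.length + 1;
        int cols = img[0].length - t[0].length + 1;
        double[][] out = new double[rows][cols];
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                out[y][x] = scoreAt(img, t, x, y);
            }
        }
        return out;
    }
}
```

An exact match scores 1.0, and adding a constant to every template pixel leaves that score unchanged, which is the practical difference from plain correlation.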

|

2016-11-09 00:56:44 -0600

| commented question | Detect roman numerals in image: I think working with corners and lines may solve some of the problems I mentioned above, though that approach may have its own issues. |

|

2016-11-09 00:50:49 -0600

| commented question | Detect roman numerals in image: Cropping reduces the size of the image you are working on, which is nice, but I doubt it will improve the detection rate. If your region of interest has a white background clearly separated from the rest of the image, you won't get detections elsewhere anyway. If your scene contains light colours for some reason, it will be hard to determine where to crop.

I must say I'm a beginner myself; these are only ideas, and I may be wrong. |
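The cropping trade-off can be illustrated with a small plain-Java sketch that crops to the bounding box of all pixels at or above a brightness threshold. This cropping rule is an assumed, deliberately simple one, and the names are hypothetical; a 2-D int array stands in for a grayscale image. When the region of interest is the only light area, the box is tight; a single stray light pixel elsewhere stretches it.

```java
/** Sketch: crop box = bounding box of bright pixels (hypothetical helper). */
final class BrightCrop {

    /** Returns {x, y, width, height} of pixels >= threshold, or null if none qualify. */
    static int[] brightBoundingBox(int[][] gray, int threshold) {
        int minX = Integer.MAX_VALUE, minY = Integer.MAX_VALUE;
        int maxX = -1, maxY = -1;
        for (int y = 0; y < gray.length; y++) {
            for (int x = 0; x < gray[y].length; x++) {
                if (gray[y][x] >= threshold) {
                    // Grow the box to include this bright pixel
                    minX = Math.min(minX, x);
                    minY = Math.min(minY, y);
                    maxX = Math.max(maxX, x);
                    maxY = Math.max(maxY, y);
                }
            }
        }
        if (maxX < 0) {
            return null; // nothing bright enough to crop to
        }
        return new int[] {minX, minY, maxX - minX + 1, maxY - minY + 1};
    }
}
```

On a 5x5 image with a 2x2 white block at (1,1), the box is {1, 1, 2, 2}; one extra white pixel at (4,4) turns it into {1, 1, 4, 4}, nearly the whole image.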

|

2016-11-09 00:30:24 -0600

| commented question | Detect roman numerals in image: I'm working on a similar task, detecting some symbols on a white background. I'm using templates because that seemed easier to implement. However, I'm facing a number of problems, and some of them may apply to your application as well.

The same symbol can appear in a number of shapes: sometimes the lines are thicker, or the symbol itself is wider in one dimension. If your dataset contains different fonts etc., this can be an issue for you too. I used more than one template for each symbol, which helped a little.

Some symbols are similar to each other (the only difference being a dot, etc.), which leads to false positives.

Some symbols are too basic, like an empty triangle or circle; such shapes can appear in the image by chance. In your case, "I" may be a problem. |
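Using more than one template per symbol amounts to taking, for each symbol, the best score over its variants. The sketch below does that on small binary images. The pixel-agreement score is a hypothetical stand-in for a matchTemplate score, and the minimum-margin check against the runner-up symbol is an added assumption aimed at the similar-symbols problem, not something described above.

```java
import java.util.List;
import java.util.Map;

/** Sketch: several template variants per symbol (names are hypothetical). */
final class MultiTemplate {

    /** Fraction of pixels that agree between two same-sized binary images. */
    static double agreement(boolean[][] a, boolean[][] b) {
        int same = 0, total = 0;
        for (int y = 0; y < a.length; y++) {
            for (int x = 0; x < a[y].length; x++) {
                if (a[y][x] == b[y][x]) same++;
                total++;
            }
        }
        return (double) same / total;
    }

    /** A symbol's score is the maximum over all of its template variants. */
    static double bestScore(boolean[][] patch, List<boolean[][]> variants) {
        double best = 0;
        for (boolean[][] v : variants) {
            best = Math.max(best, agreement(patch, v));
        }
        return best;
    }

    /**
     * Returns the winning symbol label, or null if the winner scores below minScore
     * or beats the runner-up by less than minMargin (likely a look-alike symbol).
     */
    static String classify(boolean[][] patch, Map<String, List<boolean[][]>> symbols,
                           double minScore, double minMargin) {
        String bestLabel = null;
        double best = -1, second = -1;
        for (Map.Entry<String, List<boolean[][]>> e : symbols.entrySet()) {
            double s = bestScore(patch, e.getValue());
            if (s > best) {
                second = best;
                best = s;
                bestLabel = e.getKey();
            } else if (s > second) {
                second = s;
            }
        }
        if (best < minScore || best - second < minMargin) {
            return null;
        }
        return bestLabel;
    }
}
```

Raising `minMargin` trades missed detections for fewer confusions between near-identical symbols.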

|

2016-11-08 05:09:35 -0600

| commented question | Detect roman numerals in image: For the image processing, you can try thresholding (possibly adaptive) and then opening and closing to eliminate noise to some extent. You can check out the Tesseract library if reading the numerals is your main goal. |
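The thresholding plus opening/closing pipeline can be sketched in plain Java to show what each step does. In OpenCV itself these steps correspond to Imgproc.adaptiveThreshold (with ADAPTIVE_THRESH_MEAN_C) and Imgproc.morphologyEx (with MORPH_OPEN and MORPH_CLOSE); the version below is only an illustrative re-implementation on int arrays with hypothetical names, and it handles borders by clipping the window, which differs from OpenCV's border handling. Opening (erode, then dilate) removes white specks smaller than the 3x3 window; closing (dilate, then erode) fills small black holes.

```java
/** Sketch of mean adaptive thresholding plus 3x3 opening/closing (hypothetical names). */
final class CleanBinary {

    /** 255 where a pixel is brighter than (local mean - c), else 0; window is (2r+1)x(2r+1). */
    static int[][] adaptiveMean(int[][] g, int r, int c) {
        int h = g.length, w = g[0].length;
        int[][] out = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                long sum = 0;
                int n = 0;
                for (int dy = -r; dy <= r; dy++) {
                    for (int dx = -r; dx <= r; dx++) {
                        int yy = y + dy, xx = x + dx;
                        // Clip the window at the image border
                        if (yy >= 0 && yy < h && xx >= 0 && xx < w) {
                            sum += g[yy][xx];
                            n++;
                        }
                    }
                }
                out[y][x] = g[y][x] > (double) sum / n - c ? 255 : 0;
            }
        }
        return out;
    }

    /** 3x3 min filter (erode) or max filter (dilate); out-of-image neighbours are ignored. */
    private static int[][] filter3x3(int[][] b, boolean takeMax) {
        int h = b.length, w = b[0].length;
        int[][] out = new int[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int v = b[y][x];
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        int yy = y + dy, xx = x + dx;
                        if (yy < 0 || yy >= h || xx < 0 || xx >= w) continue;
                        v = takeMax ? Math.max(v, b[yy][xx]) : Math.min(v, b[yy][xx]);
                    }
                }
                out[y][x] = v;
            }
        }
        return out;
    }

    /** Opening: erode then dilate. */
    static int[][] open(int[][] b) {
        return filter3x3(filter3x3(b, false), true);
    }

    /** Closing: dilate then erode. */
    static int[][] close(int[][] b) {
        return filter3x3(filter3x3(b, true), false);
    }
}
```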

|

2016-11-08 04:26:09 -0600

| commented question | Template Matching with a mask: My mask is the third image. It somehow didn't show up in the question before; I've added it now. |

|

2016-11-08 04:16:03 -0600

| received badge | ● Editor


|

|

2016-11-08 02:09:00 -0600

| asked a question | Template Matching with a mask: I'm trying to use template matching with a mask, but I'm getting significantly lower scores than I expect.

```java
import org.opencv.core.Core;
import org.opencv.core.Core.MinMaxLocResult;
import org.opencv.core.CvType;
import org.opencv.core.Mat;
import org.opencv.imgcodecs.Imgcodecs;
import org.opencv.imgproc.Imgproc;

class Main {
    public static void main(String[] args) {
        String inputFile = "D:\\Projects\\Smileywork\\p.bmp";
        String templateFile = "D:\\Projects\\Smileywork\\p2.bmp";
        String externalMaskFile = "D:\\Projects\\Smileywork\\p_mask.bmp";

        System.loadLibrary("opencv_java310");

        int match_method = Imgproc.TM_CCORR_NORMED;

        // Load image, template and mask as single-channel 8-bit grayscale
        Mat img = Imgcodecs.imread(inputFile, Imgcodecs.CV_LOAD_IMAGE_GRAYSCALE);
        Mat templ = Imgcodecs.imread(templateFile, Imgcodecs.CV_LOAD_IMAGE_GRAYSCALE);
        Mat mask = Imgcodecs.imread(externalMaskFile, Imgcodecs.CV_LOAD_IMAGE_GRAYSCALE);

        // One score for every position where the template fits into the image
        int result_cols = img.cols() - templ.cols() + 1;
        int result_rows = img.rows() - templ.rows() + 1;

        // matchTemplate produces 32-bit float scores
        Mat result = new Mat(result_rows, result_cols, CvType.CV_32FC1);

        MinMaxLocResult mmr = match(match_method, img, templ, result, mask);
        System.out.println("Score:" + mmr.maxVal);
    }

    // Template matching without a mask
    private static MinMaxLocResult match(int match_method, Mat img, Mat templ, Mat result) {
        Imgproc.matchTemplate(img, templ, result, match_method);
        return Core.minMaxLoc(result);
    }

    // Template matching with a mask the same size as the template
    private static MinMaxLocResult match(int match_method, Mat img, Mat templ, Mat result, Mat mask) {
        Imgproc.matchTemplate(img, templ, result, match_method, mask);
        return Core.minMaxLoc(result);
    }
}
```

Here are p, p2 and p_mask:

All of them have the same size; they are 24-bit BMPs but contain only black and white pixels.

With the mask I get a score of 0.059; without it I get 0.853. I'd understand a somewhat lower score, since some white areas are excluded from matching, but I expected a value much closer to the unmasked one. |
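To cross-check a masked score by hand, the masked TM_CCORR_NORMED value at one position can be computed in plain Java. This sketch follows the formula given for masked TM_CCORR_NORMED in recent OpenCV matchTemplate documentation, R = sum(T·M · I·M) / sqrt(sum((T·M)^2) · sum((I·M)^2)); the 3.1 implementation is assumed, not verified, to behave the same way, and the class name is hypothetical.

```java
/** Plain-Java sketch of masked TM_CCORR_NORMED at one position (hypothetical name). */
final class MaskedCcorr {

    /** Template t and mask m (same size) placed at (x, y) in img. */
    static double score(double[][] img, double[][] t, double[][] m, int x, int y) {
        double num = 0, sumT2 = 0, sumI2 = 0;
        for (int r = 0; r < t.length; r++) {
            for (int c = 0; c < t[0].length; c++) {
                // Both the template and the image window are weighted by the mask
                double tm = t[r][c] * m[r][c];
                double im = img[y + r][x + c] * m[r][c];
                num += tm * im;
                sumT2 += tm * tm;
                sumI2 += im * im;
            }
        }
        double den = Math.sqrt(sumT2 * sumI2);
        // All-zero weighted input (e.g. only black pixels under the mask) is undefined
        return den == 0 ? Double.NaN : num / den;
    }
}
```

Two properties follow directly from this formula: scaling the mask by a constant (255 instead of 1) cancels out, and because CCORR multiplies raw intensities, black (0) pixels contribute nothing to either the numerator or the denominator. With black symbols on a white background, a mask that keeps mostly symbol pixels leaves the score driven by the few white pixels inside it; whether that accounts for the 0.059 depends on the mask image itself.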

|

2016-06-23 01:29:46 -0600

| received badge | ● Enthusiast

|

|

2016-05-20 00:21:59 -0600

| received badge | ● Supporter


|

|

2016-05-20 00:21:57 -0600

| received badge | ● Scholar


|

|

2016-05-18 08:00:07 -0600

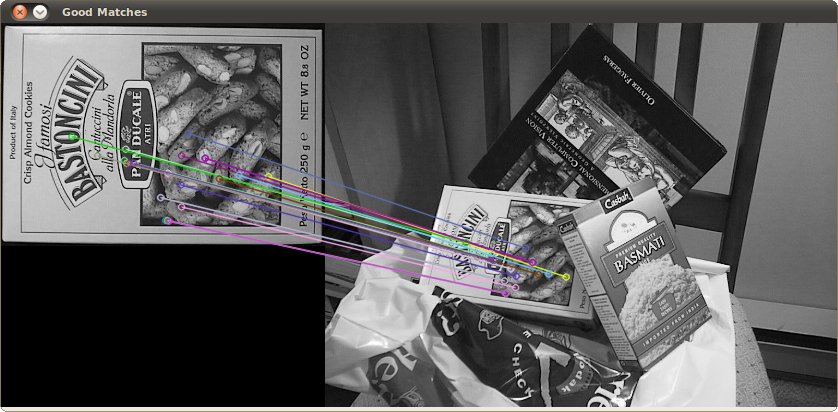

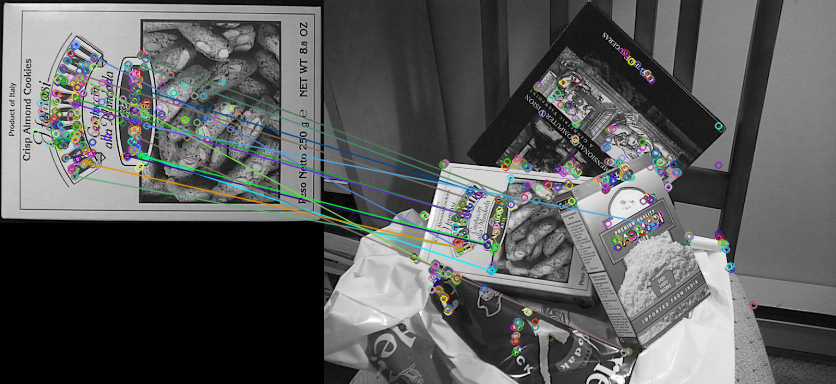

| asked a question | ORB Detector / ORB Extractor retrieve bad results: I'm trying to get results similar to those in

"http://docs.opencv.org/2.4/doc/tutorials/features2d/feature_flann_matcher/feature_flann_matcher.html#feature-flann-matcher"

but I'm using ORB instead of SURF and brute-force matching instead of FLANN. The descriptors and matches I get seem almost random.


My code is as follows:

```java
package main;

import java.util.ArrayList;
import java.util.List;

import org.opencv.core.*;
import org.opencv.features2d.*;
import org.opencv.imgcodecs.*;

public class Main {
    public static void main(String[] args) {
        System.loadLibrary("opencv_java310");

        Mat img1 = Imgcodecs.imread("D:\\Projects\\HelloOpenCV\\box.png", Imgcodecs.CV_LOAD_IMAGE_GRAYSCALE);
        Mat img2 = Imgcodecs.imread("D:\\Projects\\HelloOpenCV\\box_in_scene.png", Imgcodecs.CV_LOAD_IMAGE_GRAYSCALE);

        // Detect ORB keypoints in both images
        FeatureDetector detector = FeatureDetector.create(FeatureDetector.ORB);
        MatOfKeyPoint keypoints1 = new MatOfKeyPoint();
        detector.detect(img1, keypoints1);

        FeatureDetector detector2 = FeatureDetector.create(FeatureDetector.ORB);
        MatOfKeyPoint keypoints2 = new MatOfKeyPoint();
        detector2.detect(img2, keypoints2);

        // Compute ORB descriptors for the detected keypoints
        DescriptorExtractor extractor = DescriptorExtractor.create(DescriptorExtractor.ORB);
        Mat descriptors1 = new Mat();
        extractor.compute(img1, keypoints1, descriptors1);

        DescriptorExtractor extractor2 = DescriptorExtractor.create(DescriptorExtractor.ORB);
        Mat descriptors2 = new Mat();
        extractor2.compute(img2, keypoints2, descriptors2);

        // Brute-force matching: best match in img2 for each descriptor of img1
        DescriptorMatcher matcher = DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE);
        MatOfDMatch matches = new MatOfDMatch();
        matcher.match(descriptors1, descriptors2, matches);

        // Find the smallest and largest match distances
        List<DMatch> matchesList = matches.toList();
        double maxDistance = 0;
        double minDistance = 1000;
        int rowCount = matchesList.size();
        for (int i = 0; i < rowCount; i++) {
            double dist = matchesList.get(i).distance;
            if (dist < minDistance) minDistance = dist;
            if (dist > maxDistance) maxDistance = dist;
        }

        // Keep only matches within 1.6 times the smallest distance
        List<DMatch> goodMatchesList = new ArrayList<DMatch>();
        double upperBound = 1.6 * minDistance;
        for (int i = 0; i < rowCount; i++) {
            if (matchesList.get(i).distance <= upperBound) {
                goodMatchesList.add(matchesList.get(i));
            }
        }

        MatOfDMatch goodMatches = new MatOfDMatch();
        goodMatches.fromList(goodMatchesList);

        // Draw the retained matches side by side and save the result
        Mat img_matches = new Mat();
        Features2d.drawMatches(img1, keypoints1, img2, keypoints2, goodMatches, img_matches);
        Imgcodecs.imwrite("D:\\Projects\\HelloOpenCV\\Test.bmp", img_matches);
    }
}
```

I feel I'm missing something trivial here. I also tried AKAZE/AKAZE; the result was different but not better. |
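ORB produces binary descriptors (32 bytes each by default), which are normally compared with the Hamming distance, i.e. the number of differing bits. The OpenCV Java API provides DescriptorMatcher.BRUTEFORCE_HAMMING for this, whereas DescriptorMatcher.BRUTEFORCE, used in the code above, uses Euclidean (L2) distance as far as I understand, so the matcher type may be worth checking. The plain-Java sketch below shows brute-force Hamming matching on byte arrays; the class and method names are hypothetical.

```java
/** Sketch: brute-force matching of binary descriptors by Hamming distance (hypothetical names). */
final class HammingMatch {

    /** Number of differing bits between two equal-length byte descriptors. */
    static int hamming(byte[] a, byte[] b) {
        int d = 0;
        for (int i = 0; i < a.length; i++) {
            // Mask to 8 bits so sign extension of negative bytes is not counted
            d += Integer.bitCount((a[i] ^ b[i]) & 0xFF);
        }
        return d;
    }

    /** For each query descriptor, the index of the nearest train descriptor. */
    static int[] match(byte[][] query, byte[][] train) {
        int[] best = new int[query.length];
        for (int q = 0; q < query.length; q++) {
            int bestDist = Integer.MAX_VALUE;
            for (int t = 0; t < train.length; t++) {
                int d = hamming(query[q], train[t]);
                if (d < bestDist) {
                    bestDist = d;
                    best[q] = t;
                }
            }
        }
        return best;
    }
}
```

Under L2, descriptor bytes such as 0x7F and 0x80 look far apart even though every bit differs only once per position in a Hamming sense, which is why a distance that does not match the descriptor type can make matches look close to random.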